Voice At The Edge Helps Technology Work For More People

AI-powered edge processing opens possibilities for voice command on any device.

Image: Tecla

Voice in devices

A voice user interface (VUI) allows you to interact with a device, such as a computer, smartphone, or home appliance, simply by talking to it. VUIs allow you to ask Siri about the weather, ask Alexa to play a song, or tell your smart globe to dim the lights without ever touching anything.

The history of voice commands goes back to the early 1950s, when Bell Laboratories introduced the “Audrey” system, which could recognize a single voice speaking ten digits aloud. About a decade later, IBM designed “Shoebox,” a computer that understood and responded to 16 words in English.

Interestingly, one of the first widely available consumer products with voice recognition was a children’s toy, the infamous Julie Doll, which could understand eight vocal sounds as commands.

In 2011, Apple released Siri, opening the way to a range of other digital assistants in smartphones, stand-alone devices, and laptops.

The advent of Siri and her fellow voice assistants was made possible by cloud computing, which allowed the complex processing required to recognize speech to occur in a remote data center with massive computing power.

We are becoming more familiar with operating devices using our voice, especially in mostly private settings such as our cars or homes. VUI is currently estimated to be in almost 3 billion devices worldwide and is expected to soar to 8 billion by the end of 2035.

Processing at the edge

Alexa is always waiting patiently for your commands. However, she can’t be constantly recording you and analyzing every sound in your house to check whether it is a command. This would not only congest the cloud but also pose a significant security issue. Instead, Alexa listens only for a “wake word” (in her case, “Alexa”), which wakes up her full system and activates her full voice recognition power. When you then ask Alexa for a weather report, your voice is recorded and sent over the Internet to Amazon’s Alexa Voice Service, which parses the recording into commands. The system then sends the relevant output back to your device, and you can plan your outfit accordingly. Detecting the wake word is edge processing: it happens on the processor in the device. Handling the weather request is cloud processing: it occurs in a data center connected to your home via the Internet.
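The split between on-device wake word detection and cloud processing can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Alexa is actually implemented: words stand in for audio frames, `<silence>` marks the end of an utterance, and `WakeWordGate`, `fake_cloud`, and `run` are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional


@dataclass
class WakeWordGate:
    """Toy always-on device: only speech that follows the wake word leaves it."""
    wake_word: str
    send_to_cloud: Callable[[List[str]], str]  # hypothetical stand-in for a cloud speech service
    awake: bool = False
    buffer: List[str] = field(default_factory=list)

    def feed(self, frame: str) -> Optional[str]:
        """Process one frame (a word here; a short audio window on real hardware)."""
        if not self.awake:
            # Edge processing: a cheap local check. Non-matching frames are dropped.
            if frame.lower() == self.wake_word:
                self.awake = True
            return None
        if frame == "<silence>":
            # End of utterance: hand the buffered command to the cloud, then sleep again.
            reply = self.send_to_cloud(self.buffer)
            self.awake, self.buffer = False, []
            return reply
        self.buffer.append(frame)
        return None


def fake_cloud(command: List[str]) -> str:
    """Hypothetical cloud service that 'parses' the recorded command."""
    return "cloud handled: " + " ".join(command)


def run(frames: Iterable[str]) -> List[str]:
    """Feed a stream of frames through the gate and collect the cloud replies."""
    gate = WakeWordGate(wake_word="alexa", send_to_cloud=fake_cloud)
    replies = [gate.feed(f) for f in frames]
    return [r for r in replies if r is not None]
```

Feeding the gate a stream such as `["private", "chat", "Alexa", "weather", "today", "<silence>", "more", "chat"]` sends only `weather today` to the cloud stand-in. Everything said before the wake word and after the end of the command stays on the device.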

Not all voice command devices need to be connected to the Internet. Advances in edge processing mean that more complex commands can be processed on the device itself, without a cloud connection, enabling faster responses with less latency and more security. Thanks to the development of ultra-low-power chips that can run neural networks on the device, voice commands can be added to almost any device, including very small earbuds and wearables.

Why voice is important

“Speech is the fundamental means of human communication,” writes Clifford Nass, Stanford researcher and co-author of Wired for Speech. Nass illustrates how humans are wired for speech and verbal communication, and provides insight into how voice offers a fast, intuitive method of accessing technology and navigating through its functions.

Voice isn’t just a more intuitive method of communicating with technology; it also offers an opportunity to use devices in new ways and under new circumstances.

Because speech requires less cognitive effort than a touchscreen, voice-enabled devices allow a user to keep concentrating on a task while issuing commands, rather than reallocating precious thinking resources to navigating a tactile interface. Workers in environments that demand intense concentration, such as operating rooms, factory floors, and labs, could be assisted by voice-enabled robots that pass them tools, read charts, or monitor their health.

These qualities can be extended to all users of voice interface devices, making our interaction with technology more enjoyable, more intuitive, and more personal.

At last year’s CES, a very expensive toilet that flushed via voice command was exhibited. While the demonstration seemed like a humorous application of technology in the moment, in today’s new age of extra sanitary caution it could be lifesaving.

New audiences

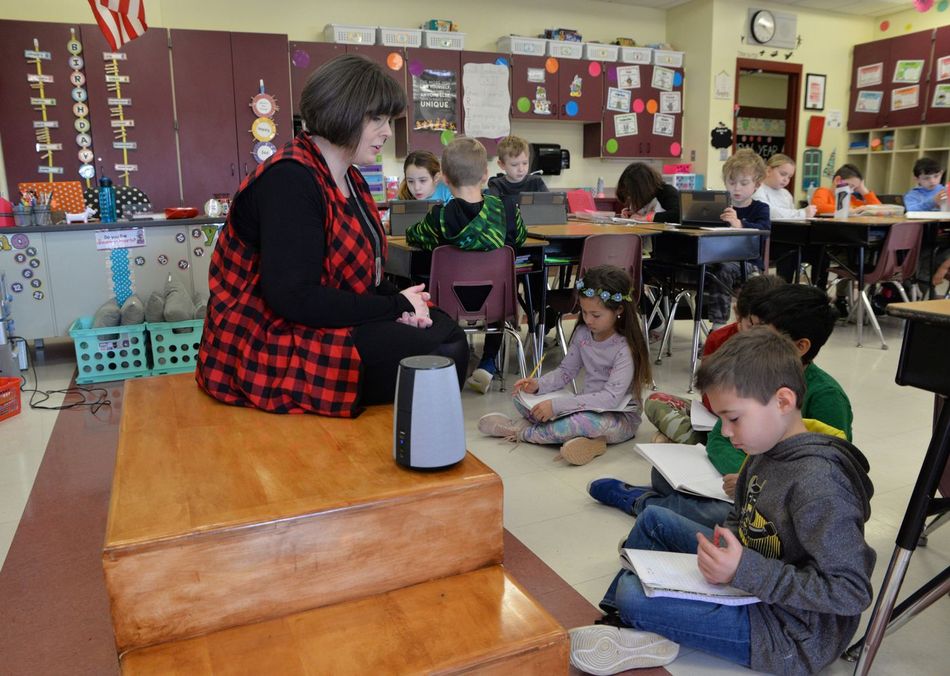

Voice command offers opportunities to introduce new and existing technologies to audiences in several ways. First, voice command devices can be easier to learn and more intuitive to use, thereby increasing technological skills across the globe. Additionally, voice control brings technology to people who have been overlooked by touch interfaces, such as people with disabilities and the elderly, as well as to audiences who have been left out of cloud-anchored devices due to the lack of a reliable Internet connection. Voice command functionality also opens possibilities for neurodiverse people, for whom the logic of devices such as touch tablets can cause confusion and frustration. [1]

Users with physical limitations, such as reduced arm and finger dexterity, vision impairment, or blindness, may be able to interact with devices naturally and easily using their voice, without additional adaptation to meet their needs. While many tools have been built for users with vision impairment and blindness, devices that are brought to market with integrated voice commands reduce the gap that can open between a product’s launch and its adoption by users with disabilities. Integrated voice commands can also reduce the cost of technology that is made especially for these users. Applications can range from asking your Kindle to read you a book to managing the temperature of your home.

Edge processing means sophisticated voice command capabilities can be built into devices for use in remote areas where Internet service is limited. This may bring voice command devices that could improve education and training to remote communities. It could also open possibilities for voice command devices such as search and rescue robots, archeological equipment, and environmental monitoring systems.

New applications due to edge processing of AI

Advanced edge computing is available in large part due to the speed and power of the latest AI semiconductors used in voice processing. These chips have ultra-low power consumption, which makes them suitable for use in almost any portable device, from Bluetooth earbuds to smartphones to wearable health devices. The next generation of voice command devices will have the advantages of on-device processing. This proximity to end users means designers can use embedded computing resources to build behavior-based messaging, offers, and experiences without compromising privacy or relying on cloud connectivity. These devices will be:

Secure

No cloud requirement means voice command devices can be highly secure, operating without a network connection. Voice recognition has the chance to become one of the most secure biometric identifiers we possess. With more AI-enabled devices on the market, voice can become part of dynamic encryption mechanisms that provide different levels of security for devices in different locations.

Fast with low latency

One of the biggest advantages of edge processing is reduced latency, the time it takes for a data packet to travel from its point of origin to its destination. By resolving more processes closer to the source and relaying less data back to the center of the network, AI-enabled edge devices can greatly improve performance and speed.
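As a rough illustration of why removing the network hop matters, the short Python sketch below adds up a simplified response-time budget. The function `response_time_ms` and every figure in it are made-up assumptions for the sake of the arithmetic, not measurements of any real device or service.

```python
def response_time_ms(compute_ms: float, network_one_way_ms: float = 0.0) -> float:
    """Time from end of speech to result: compute time plus a network round trip, if any."""
    return compute_ms + 2 * network_one_way_ms


# Illustrative, assumed figures only (not measurements).
cloud = response_time_ms(compute_ms=20, network_one_way_ms=50)  # fast server, but a round trip
edge = response_time_ms(compute_ms=60)                          # slower chip, no network hop

print(f"cloud: {cloud} ms, edge: {edge} ms")
```

Under these assumed numbers the on-device path responds sooner even though its chip is slower, because the round trip over the network dominates the cloud path. With a faster connection or a heavier workload the balance could shift the other way.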

Private

AI-powered edge processing allows a user’s data to be stored on a personal device rather than in a remote data center. This not only meets increasing consumer demand for more control and ownership over personal data, but also makes it much easier to meet emerging legislative requirements such as the EU’s General Data Protection Regulation (GDPR).

Conclusion

Voice control devices have the potential to enhance the way we communicate with current technology and create new ways of interacting with our environment.

Highly powerful, low-energy, AI-enabled chips mean voice interfaces can now be added to anything, anywhere, with or without the cloud.

Recommended reading: Neural Network Architectures at the Edge: Modelling for Energy Efficiency and Machine Learning Performance

As ‘no-touch’ rules and etiquette become essential for public health, how can voice command bring us closer and keep us safe? How would our attitude toward, and experience of, working closely with machines change if we could communicate using our most instinctive method? And what technologies can be introduced to audiences who have been overlooked because of the complexity of touch interfaces and the dexterity they require?

About the sponsor: Syntiant

Voice at the edge.

Semiconductor company Syntiant is pushing forward voice-at-the-edge possibilities with the development of its Neural Decision Processors™ (NDP10x). These powerful devices offer highly accurate wake word, command word, and event detection in a tiny package with near-zero power consumption.

Currently in production with Amazon’s Alexa Voice Service firmware, the NDP10x family makes always-on voice interfaces a reality by supporting entirely new form factors designed to wake up and respond to speech rather than touch. These processors will move voice interfaces from the cloud onto devices of all sizes and types, including ultra-portable wearables and devices.

References

1. Balasuriya S, Sitbon L, Bayor A, Hoogstrate M, Brereton M. Use of voice activated interfaces by people with intellectual disability. Proceedings of the 30th Australian Conference on Computer-Human Interaction. 2018.

2. Pyae A, Joelsson T. Investigating the usability and user experiences of voice user interface. Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct - MobileHCI '18. 2018.