Teaching AI CAD (is hard)

The vision for text-to-3D generative AI is not without its challenges.

This article was discussed in our Next Byte podcast.

Text-to-image is soooo 2023.

This year we're adding a new dimension to generative AI: text-to-3D CAD models, and we need your help!

The Backdrop

We founded polySpectra with the mission to help engineers make their ideas real.

For the past seven years, we have been focused on turning bits into atoms: 3D models into rugged end-use parts.

Recent advances in AI have given us the opportunity to go one step further: ideas into bits.

In this post, I'll share some of our challenges in building neThing.xyz, our new generative AI tool for 3D CAD models. The tool converts natural language prompts into code, which is then converted into a 3D model.
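To make that prompt-to-code-to-model flow concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: `fake_codegen` stands in for the language model and simply returns a canned script, `Box` is a toy stand-in for a real code-CAD library, and none of these names come from neThing.xyz itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Toy stand-in for a code-CAD primitive (hypothetical, not a real library)."""
    length: float
    width: float
    height: float

    @property
    def volume(self) -> float:
        return self.length * self.width * self.height


def fake_codegen(prompt: str) -> str:
    """Placeholder for the language model: returns the same canned script for any prompt."""
    return "result = Box(length=40, width=20, height=10)\n"


def run_generated_code(source: str) -> Box:
    """Run generated code in a namespace that only exposes the toy CAD API.

    Emptying __builtins__ narrows what the script can reach, but it is
    not a real security sandbox.
    """
    namespace = {"__builtins__": {}, "Box": Box}
    exec(source, namespace)
    return namespace["result"]


# Step 1: prompt -> code. Step 2: code -> model.
model = run_generated_code(fake_codegen("a 40 x 20 x 10 mm block"))
print(model, model.volume)
```

The useful property of this design is that the intermediate artifact is ordinary source code: a person can read it, edit a dimension, and re-run it, which is not possible with a mesh produced directly by a neural network.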

The Problem, The Insight, The Vision

Problem: We have been incredibly energized by the pace of innovation in generative AI, but we were unable to find a text-to-3D generative AI tool that could create objects we would actually want to manufacture.

Insight: The key insight that led to the invention of neThing.xyz was that AI is actually quite good at writing code. By training our "code gen" AI on domain-specific languages, we can have it produce code that is rapidly converted into 3D CAD models.

This is a fundamentally different approach from other text-to-3D generative AI, and it has some really interesting implications for the future of AI and CAD. Most of the other apps in this space use Neural Radiance Fields (NeRFs), an approach that is basically a combination of text-to-image followed by 2D-to-3D. (Luma Labs “Genie” and Common Sense Machines “Cube” are our two favorites.)

Vision: ideas => bits => atoms

What if you could instantly generate 3D objects from a brief verbal description?

The Challenges

Teaching AI to understand and generate CAD models presents a unique set of challenges:

1. The AI is Blind: Traditional large language models (LLMs) are inherently "blind": they work only with text and cannot visualize. This means that they struggle to interpret or generate visual content, such as CAD models, from text descriptions alone. Multimodal models, which can understand both text and images, offer a potential solution, but they are still in the early stages of development, and their ability to accurately generate complex CAD models from textual descriptions is not yet reliable.

2. "Code-CAD" is Niche: The field of generating CAD models through code is highly specialized. There are few experts and, consequently, limited examples to train AI models on. This scarcity of data poses a significant barrier to teaching AI the nuances of CAD generation. On this topic, we would like to extend a huge shoutout to the Build123D community for their tremendous generosity in helping us get neThing.xyz off the ground.

3. Multiplicity of Correct Solutions: For any given CAD design prompt, there can be numerous correct solutions, each employing different methods and approaches. For example, just watch the Too Tall Toby "CAD vs. CAD" tournaments (the spring tournament is happening now). Competitors in the speed CAD tournament adopt varied strategies to achieve the same design goals, highlighting the challenge of teaching AI to navigate the vast landscape of valid CAD solutions.

As mentioned in the first point, the AI cannot "see" or visually understand the models it generates, which complicates the training process. Coupled with the fact that there are many valid approaches to a single design problem, this creates a complex reasoning challenge. Traditional foundation models may completely lack the context needed to navigate these intricacies effectively.
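The "many valid approaches" point can be shown with a toy example. The sketch below is an assumption-laden illustration, not how neThing.xyz or any CAD kernel works: solids are represented as sets of 1 mm voxels, and the helper `box` is hypothetical. It builds the same L-shaped bracket two ways, once by adding parts together and once by cutting material away, and checks that the results are identical.

```python
from itertools import product


def box(x0, y0, z0, dx, dy, dz):
    """Return the set of 1 mm voxels inside an axis-aligned box (toy model)."""
    return set(product(range(x0, x0 + dx),
                       range(y0, y0 + dy),
                       range(z0, z0 + dz)))


# Strategy A (additive): a base plate plus an upright wall on top of it.
additive = box(0, 0, 0, 40, 20, 5) | box(0, 0, 5, 5, 20, 25)

# Strategy B (subtractive): a solid block with a large pocket cut away.
subtractive = box(0, 0, 0, 40, 20, 30) - box(5, 0, 5, 35, 20, 25)

# Two very different scripts, one identical solid.
same_shape = additive == subtractive
print(same_shape, len(additive))
```

Both scripts are "correct" answers to the same prompt, yet they share almost no text. A model trained only on the source code has no direct signal that the two are equivalent, which is part of why the lack of visual feedback matters.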

These challenges underscore the complexity of bridging the gap between textual descriptions and visual, three-dimensional CAD models. As we continue to explore and innovate in this space, addressing these issues will be crucial to generating high-quality outputs.

Our Approach

Our vision for neThing.xyz is to create a generative AI tool that generates truly useful 3D CAD models from straightforward natural language prompts. With neThing we can dramatically expand the number of people who can participate in engineering. We believe that this tool will be useful for a wide range of people, from engineers to artists to students to hobbyists.

Community-driven: Our hope is that this audacious goal can be realized through the support of our community. The AI will only ever be as smart as the sum of the community that trained it, and we are excited to see what you will create.

Inspired by Midjourney, we are making the generated models "public by default", which we believe has a number of benefits:

First, it will provide a rich showcase of examples for both humans and AI to learn from.

Second, it means that even users who aren’t able to contribute financially are contributing to the overall community goal of making an amazing text-to-CAD generative AI tool.

Finally, it encourages sharing and openness, which is absolutely essential for a product that strives to be community-supported.

Introducing neThing.xyz

neThing.xyz (pronounced "any thing dot x,y,z") is free to try. After you sign up, you will be able to...

...query the AI to create a 3D model from your prompt...

...preview your creations in AR (on compatible devices)...

...change the physical appearance of the 3D model...

...share your creations with friends...

...edit the code to change the model...

...download the model as STL, STEP, or GLTF...

...click the "Make it real" button to have polySpectra 3D print the part for you!

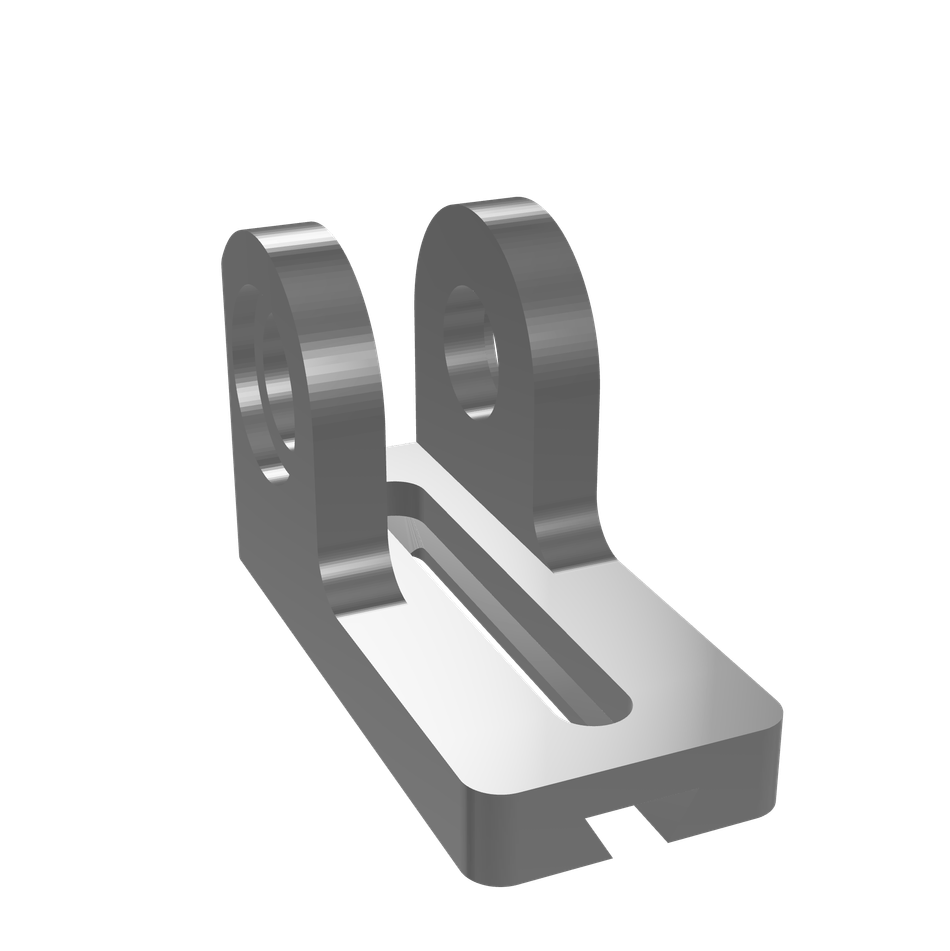

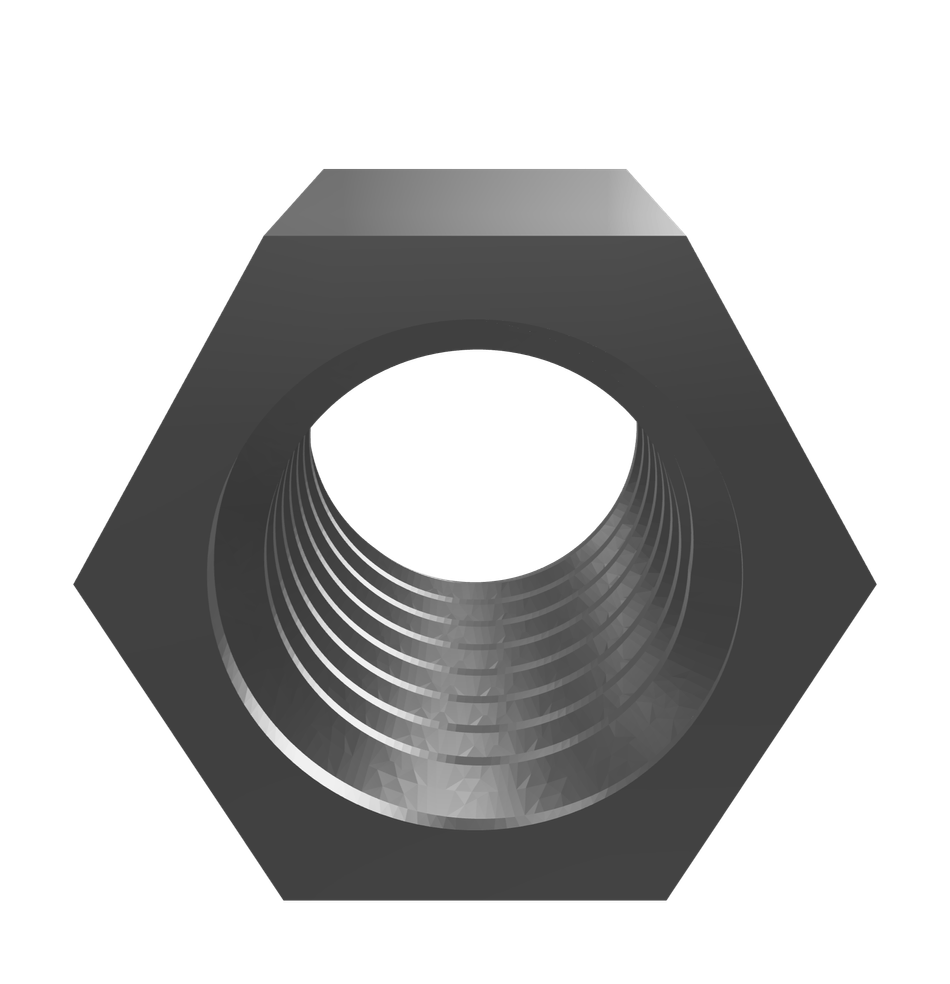

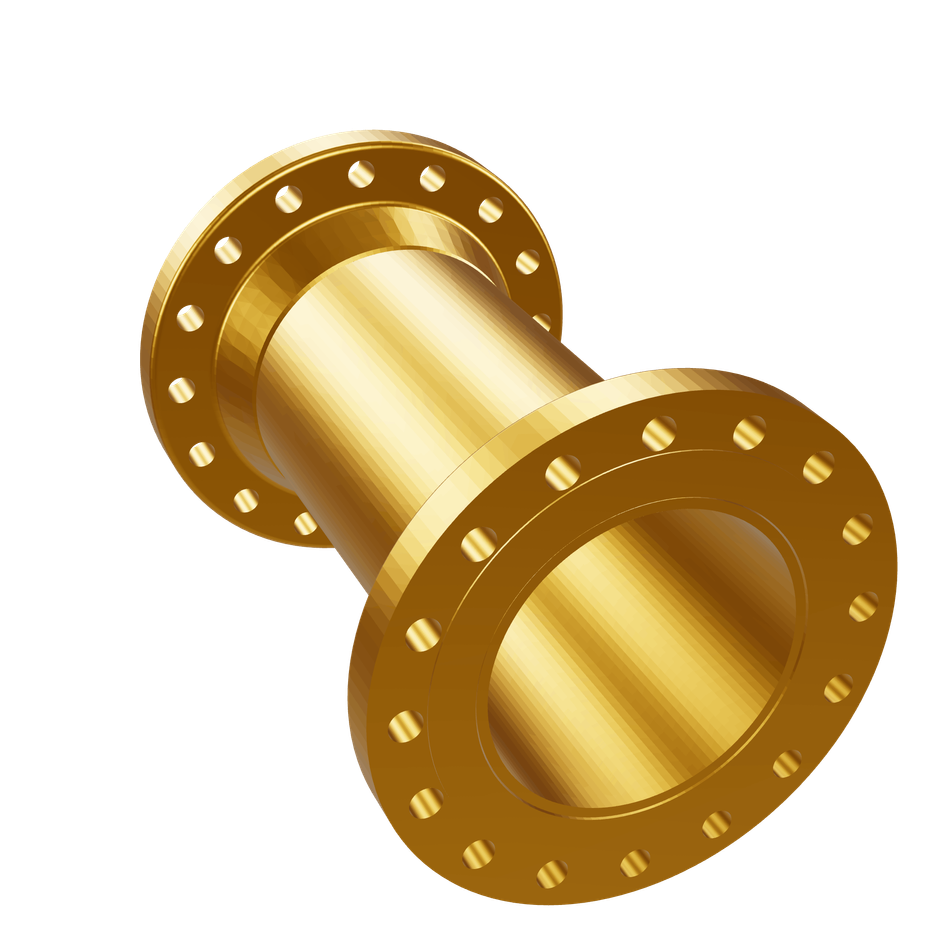

Here are just two examples of the types of objects that you can create:

We are hungry for your feedback. There is a long road ahead to train the AI to create truly useful 3D CAD models from natural language prompts. The AI is only as smart as the good examples that we can train it on, and this will be a community effort. (And honestly, our AI is not very smart yet - we need your help!)

Check out the tool today: https://neThing.xyz

Join our community: https://forum.neThing.xyz

Make it real.

polySpectra