Mixed Robotic Interface (MRI Gamma)

Using AR and Robotics as Storytelling Mediums

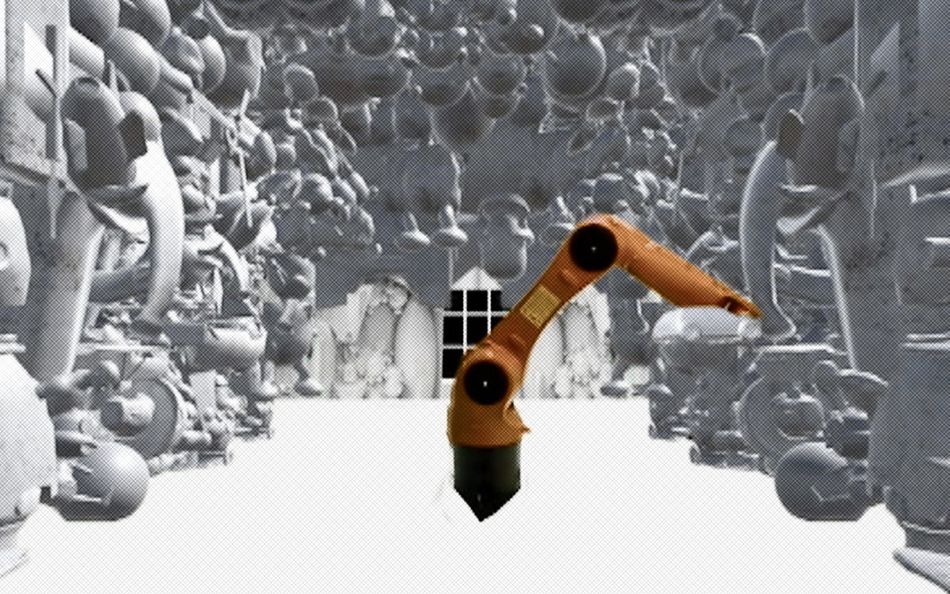

Chroma Key image processing in Unity 3D replaces the green screen with the animated digital scene.

Since digital tools were introduced into design and the creative disciplines almost three decades ago, there has been ongoing interest in connecting the physical and digital worlds. Most of these hybridizations have been shaped by the industrial and engineering origins of the underlying digital technologies. Using CNC machines and robotic arms to fabricate digitally designed artifacts, or pursuing time- and cost-efficient production methods, are typical examples.

Although this "tool-oriented" perspective has tremendous value, it misses an opportunity. Digital/physical platforms can serve not only as "tools" for translating from one platform to another, but can also be combined into a synthesized design/experience "medium": a mixed medium that draws on both the digital and the physical to curate new design processes, artifacts, or experiences.

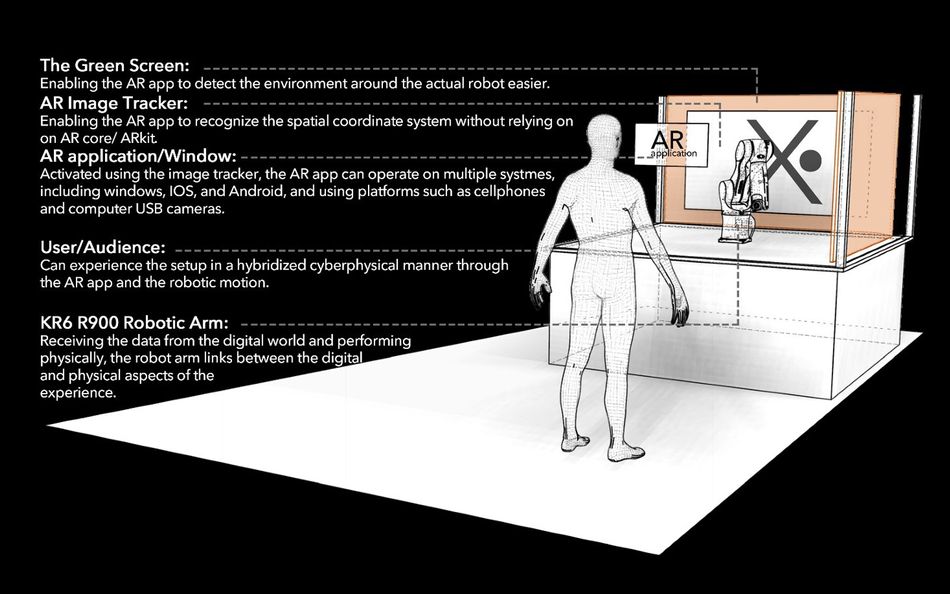

In one of their latest experimental research projects, Mixed Robotic Interface (MRI Γ), researchers at the Robotically Augmented Design (RAD) Lab in the College of Architecture and Environmental Design (CAED) at Kent State University explored the potential of robotics and augmented reality as mediums for hybrid cyberphysical storytelling and atmosphere making. Drawing on the capacity of robotics and AR to exist in both digital and physical environments, MRI Γ explores ways of creating and curating a hybrid experience that narrates a story with both actual and virtual actors and sets.

As part of the MRI Γ research investigation, led by Ebrahim Poustinchi, assistant professor of architecture and founder/director of the RAD Lab, graduate students Bradly Bowman and Trever Swanson developed a storytelling performance setup that combines physical images and posters, AR content as the set and stage, and an industrial robotic arm as the performer.

The story, called “Child Robot!”, is based on a para-fictional scenario that speculates on a future child's room in which the robot arm plays the role of the child, evoking a futuristic, post-human ambiance. Because this cyberphysical storytelling arrangement narrates with elements from the actual and the virtual at the same time, precise coordination between the digital and the physical is crucial. The setup depends on the precise calibration of the physical robot arm (a KUKA KR6 R900 Sixx), the physical and virtual room, and the virtually animated toys in the scene (the child-robot room), all coordinated in a responsive, time-based manner. By combining augmented reality with the physical setup, “Child Robot!” lets users experience a blend of reality and fantasy, the actual and the virtual, through a custom-made software application, curated motion, and a physical scene.

Powered by the Unity 3D game engine, Oriole (a custom animation-based robotic motion-control platform), and image tracking, “Child Robot!” lets its audience experience the story and its hybrid digital/physical content from multiple points of view. By freeing the experience from both a fully immersive digital experience (VR) and a single-perspective physical performance (a conventional physical experience), MRI blurs the boundaries between the audience, the performer, and the performance setup.

To synchronize the robot's movement with the digital game events in the AR platform, two sets of data are used. First, a physical image tracker acts as an anchor for the digital (AR) content and establishes a coordinate system for the scene's virtual overlay. This tracker coordinates the robot's static position with the static framing of the digital setup. Static registration alone, however, does not let the physical robot interact with the digital game objects; that requires realtime communication. Second, throughout the performance the robot continually sends its realtime position and orientation (TCP plane data, where TCP is the robot's tool center point) to the Unity 3D game engine. This information enables interaction between the robot and the game objects, the toys.
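The lab's code is not published, so the following is only a minimal sketch, in Python rather than Unity, of how the two data sets could be combined: a fixed calibration ties the robot base to the image tracker, each streamed TCP position is mapped into the tracker frame, and a simple distance test decides whether the robot is touching a virtual toy. The names `AnchorCalibration`, `tcp_to_anchor`, and `toys_in_reach`, the millimetre-to-metre and Z-up-to-Y-up conversion, and the yaw-only calibration are all assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AnchorCalibration:
    """Hypothetical calibration: where the robot base sits relative to the image tracker.

    offset_m: robot base origin in the tracker (anchor) frame, Unity-style axes
              (x right, y up, z forward), in metres.
    yaw_deg:  rotation of the robot base about the vertical (y) axis, in degrees.
    """
    offset_m: Vec3
    yaw_deg: float


def robot_to_unity_axes(p_mm: Vec3) -> Vec3:
    """Map a right-handed, Z-up point in millimetres to a left-handed, Y-up point in metres.

    Swapping Y and Z flips handedness; this is one common convention, assumed here.
    """
    x, y, z = p_mm
    return (x / 1000.0, z / 1000.0, y / 1000.0)


def tcp_to_anchor(p_tcp_mm: Vec3, cal: AnchorCalibration) -> Vec3:
    """Express a streamed TCP position in the image-tracker (anchor) frame."""
    x, y, z = robot_to_unity_axes(p_tcp_mm)
    a = math.radians(cal.yaw_deg)
    # Rotate about the vertical axis by the calibrated yaw, then translate by the base offset.
    xr = math.cos(a) * x + math.sin(a) * z
    zr = -math.sin(a) * x + math.cos(a) * z
    ox, oy, oz = cal.offset_m
    return (xr + ox, y + oy, zr + oz)


def toys_in_reach(tcp_anchor: Vec3, toys: Dict[str, Vec3], radius_m: float) -> List[str]:
    """Names of virtual toys whose anchor-frame position lies within radius_m of the TCP."""
    return sorted(name for name, pos in toys.items()
                  if math.dist(tcp_anchor, pos) <= radius_m)


if __name__ == "__main__":
    cal = AnchorCalibration(offset_m=(0.5, 0.0, 0.2), yaw_deg=0.0)
    toys = {"ball": (0.6, 0.3, 0.45), "blocks": (1.5, 0.0, 1.0)}
    # Sample TCP readings in millimetres, standing in for the realtime stream.
    for tcp_mm in [(100.0, 200.0, 300.0), (1000.0, 800.0, 0.0)]:
        p = tcp_to_anchor(tcp_mm, cal)
        print(tcp_mm, "->", p, "touching:", toys_in_reach(p, toys, radius_m=0.1))
```

Restricting the calibration to a yaw angle and an offset assumes the robot base and the tracker both lie on level surfaces; a general setup would use a full rotation (for example a quaternion) and would transform the TCP orientation as well as its position.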

To overlay the digital renderings (the AR material) on the physical footage of the setup without covering the robot itself, RAD Lab researchers used a green screen to separate the robot from its physical context. The custom-made “Child Robot!” AR application then uses Chroma Key image processing to replace the green background with coordinated digital content in realtime.
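As a rough illustration of the Chroma Key step, here is a minimal per-pixel sketch in Python. The actual application runs inside Unity 3D, where this kind of keying would more typically be done in a GPU shader; the "greenness" measure, the `low`/`high` thresholds, and the function names `key_alpha` and `chroma_key` are assumptions, and frames are plain nested lists of RGB tuples rather than camera textures.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def key_alpha(p: Pixel, low: int = 40, high: int = 100) -> float:
    """Opacity of a camera pixel: 1.0 keeps it (foreground), 0.0 replaces it (green screen).

    "Greenness" is how far green exceeds the stronger of red and blue. Pixels at or
    below `low` are kept, pixels at or above `high` are fully replaced, and pixels in
    between are blended, which softens the edge around the robot.
    """
    r, g, b = p
    greenness = g - max(r, b)
    if greenness <= low:
        return 1.0
    if greenness >= high:
        return 0.0
    return 1.0 - (greenness - low) / (high - low)


def chroma_key(camera: Image, digital: Image, low: int = 40, high: int = 100) -> Image:
    """Composite the camera frame over the rendered scene, dropping green-screen pixels."""
    if len(camera) != len(digital) or any(len(c) != len(d) for c, d in zip(camera, digital)):
        raise ValueError("camera and digital frames must have the same dimensions")
    out: Image = []
    for cam_row, dig_row in zip(camera, digital):
        row: List[Pixel] = []
        for cam_px, dig_px in zip(cam_row, dig_row):
            a = key_alpha(cam_px, low, high)
            # Linear blend per channel: a * camera + (1 - a) * digital.
            row.append(tuple(round(a * c + (1.0 - a) * d) for c, d in zip(cam_px, dig_px)))
        out.append(row)
    return out
```

The soft band between `low` and `high` blends edge pixels instead of cutting them at a single threshold, which tends to reduce the jagged outline a hard key leaves around a moving object such as the robot arm.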

Holding a device, such as a cellphone, running the custom-made “Child Robot!” AR application, audience members can walk through the MRI Γ setup, physically interact with the robot (the performer), and watch the playful interaction between the physical robot and the digital room.

Although the MRI Γ research, and “Child Robot!” as one of its examples, are at an early stage of experimentation and development, one of the research's main aims is to move beyond immersion, whether complete digital immersion (VR) or physical immersion. It seeks a hybrid medium, a mixed interface, in which the physicality of the robot's sound, its presence, and the breeze of its motion combines with the digitality of AR's rendered, animated content to narrate one consistent story and to create and curate one cohesive cyberphysical experience.