Can Robots Watch Humans and Learn a Task?

Cobots, when combined with artificial intelligence (AI), can work effectively alongside humans in the real world on their daily tasks. With an AI technique called inverse reinforcement learning (IRL), a robot can observe a human performing a task and learn how to do it.

Robots are increasingly becoming part of our society. Although they are currently deployed predominantly in industrial warehouses and on factory automation lines, robots with additional safety features and environment-aware motion planning are at the cutting edge of robotics research. These collaborative robots, or cobots for short, are specially designed to work alongside humans in an unrestricted environment where people can move around freely.

When combined with artificial intelligence (AI), these cobots can work effectively alongside humans in the real world and help with day-to-day tasks. AI helps computers make smart decisions to solve problems efficiently.

Warehouses are usually what roboticists call a structured environment: everything is exactly where it is always placed, and the task is repetitive with no dynamically changing parameters. For such a task, AI would be overkill; the task can easily be programmed from start to end with simple code. A semi-structured environment is something like a hospital, where most things have a fixed place and regulations govern the movement of people and goods, but certain aspects are volatile and non-repetitive and need dynamic decision-making. An example of an unstructured environment is a vegetable-sorting conveyor line, where the throughput of vegetables is variable, the number of good and bad items varies with time, and their locations on the conveyor change constantly.

Sorting in such an unstructured environment is one of the hardest problems to solve because it involves so many aspects of robotics: AI for deciding when to perform which action; path planning and trajectory optimization for finding ways to perform that action; control systems for accurately following that plan; and computer vision to identify and track objects and provide 3D coordinates for the robot to reach. This is the problem THINC Lab researchers are currently working to solve.

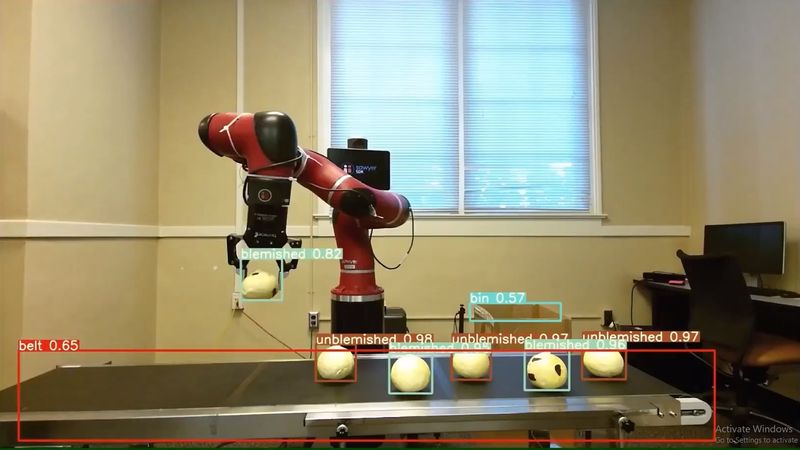

To give a brief overview of the complete architecture: an RGBD camera observes the items on a conveyor belt, and the RGB (color) images are fed into a trained deep neural network that identifies the salient objects in the image. These object locations are then mapped to real-world coordinates using computer vision transformation techniques. In the following image, you can see the cobot Sawyer, a conveyor belt with onions, and a bin for the bad onions recognized by the neural network. From here, some robot programming, as mentioned before, will do the job of sorting the onions.

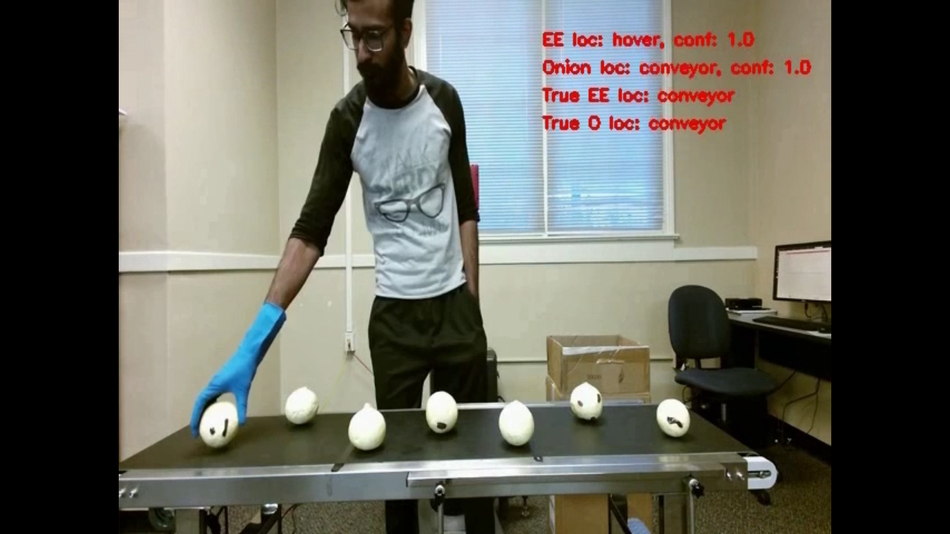
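
The step of turning a detected pixel into a real-world location can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the THINC Lab pipeline: it assumes a standard pinhole camera model, and the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the camera-to-robot transform are all hypothetical values chosen for the example.

```python
# Hypothetical sketch: pinhole back-projection of one RGBD pixel,
# followed by a rigid transform into the robot's base frame.

def pixel_to_camera_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres into camera-frame XYZ."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_robot(point_cam, rotation, translation):
    """Apply p_robot = R * p_cam + t, with R given as a 3x3 nested list."""
    return tuple(
        sum(rotation[i][j] * point_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Assumed values: a 640x480 camera and an identity rotation for simplicity.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

point_cam = pixel_to_camera_point(
    u=380, v=240, depth_m=0.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0
)
point_robot = camera_to_robot(point_cam, IDENTITY, (0.1, 0.0, 0.2))
print(point_robot)
```

In a real setup, the intrinsics would come from camera calibration and the rotation and translation from a camera-to-robot calibration step; the arithmetic itself stays the same.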

However, how does the robot know what to do after identifying and picking up an object? The task may sometimes be too complex to program explicitly. This is where AI steps in again. Using an AI technique called inverse reinforcement learning (IRL), the robot can observe a human performing the task and learn how to do it by itself.

The idea behind IRL is to learn the intent of the expert performing the task. Once that intent is understood, the robot can perform the task in whatever way it deems plausible while preserving the intent. The following image shows another deep learning network, called SA-Net, processing the data of the human performing the task and extracting the salient features needed for learning.
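
To make "learning the intent" concrete, here is a toy sketch of the feature-matching idea that underlies many IRL methods: score each kind of behavior by how much more often the expert shows it than a random baseline would. It is not the THINC Lab algorithm. The onion qualities, action names, one-hot features, and demonstrations are all hypothetical, and a real system would reason over multi-step trajectories rather than single decisions.

```python
# Toy illustration only: hypothetical features and demonstrations.
ACTIONS = ("place_in_bin", "leave_on_belt")
QUALITIES = ("bad", "good")


def features(quality, action):
    """One-hot feature vector over every (quality, action) pair."""
    return [1.0 if (quality, action) == (q, a) else 0.0
            for q in QUALITIES for a in ACTIONS]


def mean_features(pairs):
    """Average feature vector over a list of (quality, action) pairs."""
    total = [0.0] * (len(QUALITIES) * len(ACTIONS))
    for quality, action in pairs:
        for i, value in enumerate(features(quality, action)):
            total[i] += value
    return [t / len(pairs) for t in total]


def infer_reward_weights(expert_pairs):
    """Expert feature averages minus those of a uniform-random baseline
    that sees the same onions but tries every action equally often."""
    baseline = [(q, a) for q, _ in expert_pairs for a in ACTIONS]
    mu_expert = mean_features(expert_pairs)
    mu_baseline = mean_features(baseline)
    return [e - b for e, b in zip(mu_expert, mu_baseline)]


def greedy_action(weights, quality):
    """Pick the action with the highest inferred reward for this onion."""
    return max(
        ACTIONS,
        key=lambda a: sum(w * f for w, f in zip(weights, features(quality, a))),
    )


# Hypothetical human demonstrations: bad onions go in the bin, good ones stay.
demos = [("bad", "place_in_bin"), ("good", "leave_on_belt"),
         ("bad", "place_in_bin"), ("good", "leave_on_belt")]
weights = infer_reward_weights(demos)
print(greedy_action(weights, "bad"), greedy_action(weights, "good"))
```

The inferred weights are positive for the choices the expert makes and negative for the ones the expert avoids, so a robot that maximizes this reward reproduces the sorting rule without ever being told it explicitly.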

The system is still at the prototype stage, and this proof-of-concept setup will soon be improved to the stage of commercial deployment. For further details about the research we do, check out the THINC Lab website.