The Robotic World of the Near Future

We are just entering a time driven by intelligent and specialized machines, and the possibilities are limitless.

Photo by Arseny Togulev on Unsplash

A fully-automated Earth in which robots work with humanity in every conceivable way has been imagined a million times over in science fiction books, film, games and television. According to our dreams, we might end up living in a world reminiscent of “WALL·E”, in which machines assisted humanity in our environment-shattering quest for more-more-more that ruined the planet; a post-apocalyptic result of AI seeing Homo sapiens as a blight to be wiped out portrayed in “The Terminator”; or an Earth and Solar System where robokind and humans exist together in a strange dichotomy of harmony and distrust as envisioned by the works of Isaac Asimov.

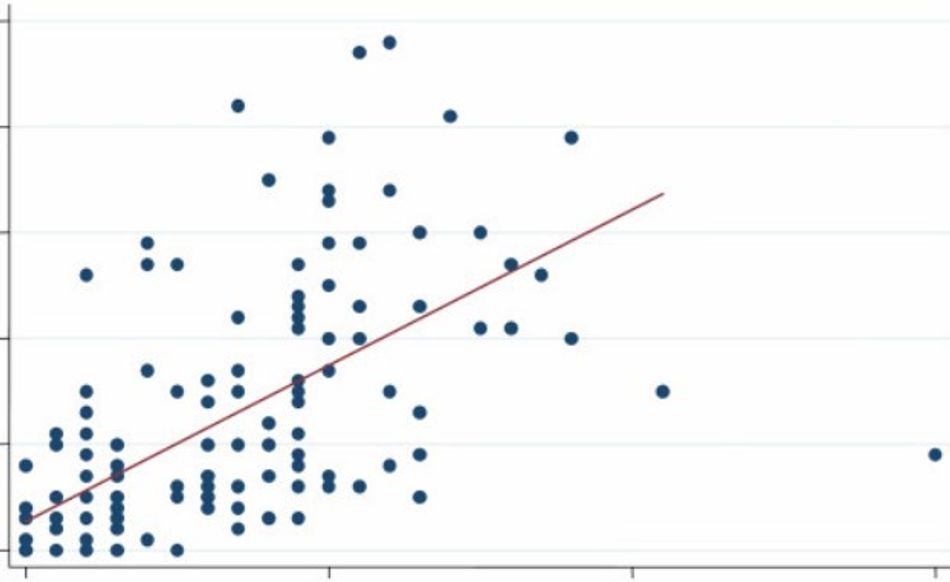

If we were to place all of our imagined versions of a Robo-enhanced future on a scatter plot, where the X-axis is the timeline starting in the present (at 0) and going into the future (say, up to 1,000 years from now), and the Y-axis represents the degree to which we get along with our robot companions as a percentage — with 0 being “Robopocalypse”-level mutually-assured destruction and 100 representing some kind of perfect utopia in which humankind and machines exist together in perfect harmony or have otherwise blended together into a new species — it might look a little like this:

Most of what people expect from the nearer future tends toward the darker side of things. We compare robotics, and the artificial intelligence research that usually accompanies it, with other similarly large advances in technology that often had some scary results. Indeed, work on atomic power began with bombs, led by military programs, and military programs are where much of the headway in robotics is being made today.

Those working in robotics and AI today generally agree that our creations need to be designed from the ground up with distinct rules in place for how they will regard human life. A robot’s AI must be able to unmistakably recognize humans and, at all costs, avoid harming them…

But then we are back to the military forces of the world being some of the primary organizations spearheading robotics work. As I write this, the United States, Russia, China, and other countries are all actively running programs to create drones and AI-controlled battlefield robots expressly designed to kill people.

Robots similar to the one in the video below will, one day soon, be self-directed by onboard AI.

This contradiction is where the problem lies: even before we have really entered our upcoming transhuman, robotic era, we are seemingly ignoring the advice of two generations of scientists and futurists who have collectively thought about such problems to the tune of millions of hours. Geniuses, visionaries and tech pioneers like Kurzweil, Musk, Hawking, Page & Brin, Asimov, Vinge, and many others have warned of the inherent dangers of AI and robotics for decades.

We, as in most of the world’s citizens, do not want an Earth dominated by killer robots. We want the kind of world envisioned in “The Jetsons”.

We want a world where robots and AI work alongside us to make everything better. But if most of the earliest autonomous robots are built with military objectives in mind, we will be setting ourselves up for a dark future.

There is a reason that a large percentage of our science fiction revolves around robots and AIs that become self-aware and either try to take over the world or make humankind extinct. We recognize that this is a possibility. Humanity excels at coming up with novel ways to endanger itself. That, too, we realize.

To try to avoid this scenario, we have two options:

1. Abandon and/or outlaw all work in AI. Just as with any attempt at worldwide control of nuclear arms or ethically questionable medical research, this is a non-starter. Some players will agree, but many will not. The human drive to satisfy curiosity and gain an advantage over others is insurmountable.

2. Barring that, all we can do is try to make sure everyone realizes the potential dangers and builds a framework, within which their creations must operate, that does its best to prevent our forthcoming AI-controlled robots from harming us.

Fortunately, we have been thinking about this for as long as we have been working toward the Singularity.

If we find ourselves living in the Singularity, and our children rapidly outstrip our ability to keep tabs on them, we may not be able to rely on any rules we set for them. Their evolution will be out of our control. We could quickly find ourselves in a position similar to the one in which we have placed the lowland gorilla or giant panda.

Because of this, it may be best to instill in them the morals we wish we ourselves followed more faithfully, and hope that our children can police themselves.