Gesture Recognition and Classification Using Infineon PSoC 6 and Edge AI

Gesture recognition, powered by capable embedded devices and streamlined by specialized platforms, is creating new modes of human-machine interaction and paving the way for more intuitive, user-friendly device interfaces.

Image credit: Marxent Labs

Introduction

Gesture recognition is not just a buzzword; it's an indispensable technology that's changing the way we interact with devices. From smartphones to smart homes, the ability to control devices through simple hand movements offers an unparalleled level of convenience and accessibility. However, implementing robust gesture recognition systems is fraught with challenges, from data acquisition and preprocessing to machine learning model training and deployment.

In this article, we will look into the technical aspects of developing a gesture recognition system using Infineon's PSoC 6 microcontroller. We'll explore each stage of the development process, from sensor data acquisition to neural network model deployment. We'll also share a link to a GitHub project detailing a step-by-step guide on implementing gesture classification, empowering you to create your own gesture recognition application. By the end of this article, you'll have a comprehensive understanding of the complexities involved and how specialized machine learning platforms such as Edge Impulse can simplify the process.

The Need for Gesture Classification

The demand for intuitive human-machine interfaces is growing rapidly across many sectors. Whether it's automotive infotainment systems, medical devices, or consumer electronics, the ability to interact through gestures adds a layer of convenience and user-friendliness. However, implementing these systems on embedded platforms like the PSoC 6 involves challenges such as limited compute and memory, real-time processing requirements, and power constraints.

To address these challenges, developers often have to make trade-offs between system performance and complexity. For instance, simpler algorithms may require fewer computational resources but may not provide the desired level of accuracy. On the other hand, complex algorithms may offer high accuracy but may be too resource-intensive for real-time applications. This delicate balance makes the choice of hardware and software platforms crucial in the development process. With these considerations in mind, let's delve into the capabilities of the Infineon PSoC 6 and see how it stands out as a viable solution for these challenges.

Infineon PSoC 6: An Overview

Infineon's PSoC 6 is a versatile microcontroller unit (MCU) designed with low-power IoT applications in mind. It features a dual-CPU architecture, combining an ARM Cortex-M4 and an ARM Cortex-M0+ processor, allowing for efficient task partitioning. The PSoC 6 also offers programmable analog and digital blocks, making it highly customizable for specific application needs. Its rich set of features makes it an ideal candidate for implementing complex tasks like gesture recognition.

The PSoC 6 is not just a powerful MCU; it's a complete ecosystem that provides developers with a range of tools and libraries to accelerate the development process. From ModusToolbox, a comprehensive software development environment, to a rich set of middleware components, the PSoC 6 ecosystem is designed to simplify complex tasks.

Sensor Data Acquisition

For gesture recognition, sensor data is the raw material. PSoC 6 can interface with a variety of motion sensors, such as accelerometers and gyroscopes, typically over serial buses such as I2C or SPI. These sensors capture the 3D spatial movements that are then classified into specific gestures. However, data acquisition is not just about reading sensor values; it's about doing so reliably and efficiently. Specialized machine learning platforms like Edge Impulse can offer features like sensor fusion and automated data collection, which help keep the collected data consistent and well labeled.

Suggested Reading: Sensor Fusion: The Ultimate Guide to Combining Data for Enhanced Perception and Decision-Making

Data acquisition in real-world applications is often noisy and inconsistent. Factors like sensor drift, environmental conditions, and hardware limitations can introduce errors into the collected data. Therefore, it's essential to implement robust data acquisition strategies that can mitigate these issues. Techniques like sensor calibration, data validation, and outlier detection are often employed to improve the quality of the collected data.
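To make two of these techniques concrete, here is a minimal sketch of offset calibration and outlier rejection for a single sensor axis. It is written in Python for readability rather than as firmware, and the function names, the 3.5 threshold, and the choice of a median-based (modified z-score) test are our own illustrative assumptions, not part of the Infineon example.

```python
from statistics import mean, median


def estimate_bias(stationary_samples):
    """Estimate a constant sensor offset from readings taken at rest."""
    return mean(stationary_samples)


def reject_outliers(samples, threshold=3.5):
    """Replace spikes with the median, using the modified z-score.

    The score is 0.6745 * |x - median| / MAD, where MAD is the median
    absolute deviation. The threshold of 3.5 is a common rule of thumb
    and is an assumption here, not a tuned value.
    """
    med = median(samples)
    mad = median([abs(s - med) for s in samples])
    if mad == 0:
        # All samples are (nearly) identical; nothing to reject.
        return list(samples)
    cleaned = []
    for s in samples:
        score = 0.6745 * abs(s - med) / mad
        cleaned.append(med if score > threshold else s)
    return cleaned


# Hypothetical accelerometer readings (in g) with one obvious spike.
readings = [1.0, 1.1, 0.9, 1.0, 25.0, 1.05, 0.95]
cleaned = reject_outliers(readings)
```

In this example only the 25.0 spike is replaced; the ordinary variation around 1.0 is left untouched. On a real device the same checks would usually run per axis on each incoming window.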

Data Preprocessing and Filtering

Once the raw sensor data is acquired, it needs to be preprocessed before feeding it into a machine learning model. This involves filtering out noise and normalizing the data. Techniques like Infinite Impulse Response (IIR) filtering and min-max normalization are commonly employed for this purpose. While these preprocessing steps are essential for the accuracy of the final model, they can be time-consuming to implement and optimize. Specialized machine learning platforms can automate much of this, allowing developers to focus on other critical aspects of the system.

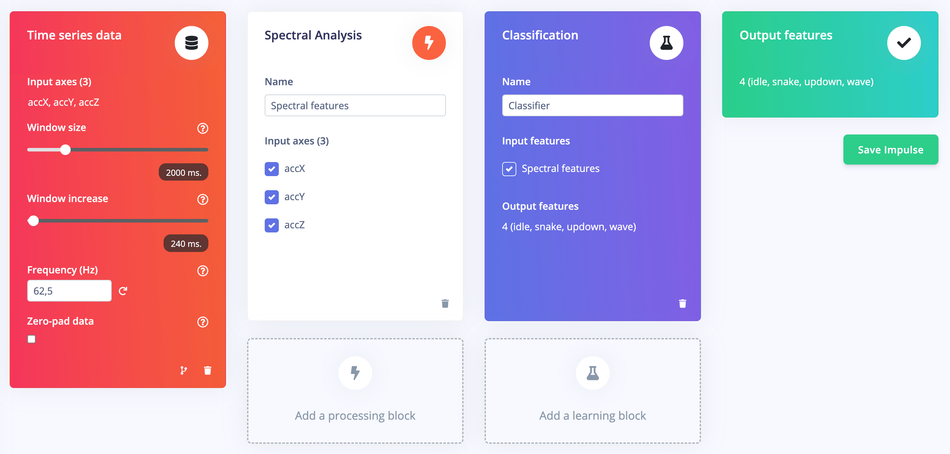

Data preprocessing is not a one-size-fits-all operation; it often requires fine-tuning based on the specific application requirements. For instance, the choice of filter parameters, the window size for normalization, and the sampling rate can all impact the performance of the gesture recognition system. Therefore, it's crucial to perform iterative testing and validation to fine-tune these parameters, a process that can be significantly streamlined using specialized machine learning platforms. Edge Impulse offers intuitive tools for data preprocessing, allowing developers to easily filter and normalize data, ensuring it's ready for model training.
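As an illustration of the two techniques named above, the following sketch applies a first-order IIR low-pass filter and min-max normalization to one axis of sensor data. It is a simplified Python model, not the filter used by the Infineon example or by Edge Impulse; the smoothing factor `alpha` and the function names are assumptions chosen for clarity.

```python
def iir_lowpass(samples, alpha=0.2):
    """First-order IIR low-pass: y[n] = alpha * x[n] + (1 - alpha) * y[n-1].

    A smaller alpha smooths more but responds more slowly.
    The first output is seeded with the first input sample.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    filtered = []
    y = None
    for x in samples:
        y = x if y is None else alpha * x + (1.0 - alpha) * y
        filtered.append(y)
    return filtered


def min_max_normalize(samples, lo=None, hi=None):
    """Scale samples to [0, 1] using the given or observed min and max."""
    lo = min(samples) if lo is None else lo
    hi = max(samples) if hi is None else hi
    if hi == lo:
        # A flat signal carries no range information; map it to zeros.
        return [0.0 for _ in samples]
    return [(s - lo) / (hi - lo) for s in samples]


# Hypothetical window of raw readings: filter first, then normalize.
window = [0.0, 10.0, 10.0, 4.0, 6.0]
features = min_max_normalize(iir_lowpass(window, alpha=0.5))
```

Passing fixed `lo` and `hi` values (for example, the sensor's configured range) instead of per-window extremes keeps the scaling identical between training and inference, which is one of the parameters worth validating during tuning.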

Neural Network Models for Gesture Recognition

Gesture recognition often employs Convolutional Neural Networks (CNNs) due to their ability to learn hierarchies of local patterns in the data; for motion-sensor input, these are patterns across time and across sensor axes. A standard CNN model for gesture recognition would include multiple layers, such as convolutional layers, Rectified Linear Unit (ReLU) activation functions, max pooling, and batch normalization. These layers work in tandem to extract features from the sensor data and classify them into specific gestures. However, training these models requires a solid understanding of machine learning algorithms, data science, and optimization techniques. Specialized machine learning platforms can simplify this process by offering pre-trained models and automated training pipelines.
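To show what these layers actually compute, here is a small pure-Python sketch of a 1-D convolution followed by ReLU and max pooling on a single sensor axis. It is only meant to illustrate the operations; the kernel values are hand-picked for the example rather than learned, and a real model would use a framework and many filters.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1-D convolution as used in CNN layers (no kernel flip)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Rectified Linear Unit: negative activations become zero."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


# Hypothetical accelerometer trace and a hand-picked "rising edge" kernel.
signal = [0.0, 1.0, 3.0, 2.0, 0.0, -2.0, -1.0]
kernel = [-1.0, 1.0]  # responds to increases between neighboring samples

feature_map = max_pool1d(relu(conv1d(signal, kernel)), size=2)
```

The convolution highlights where the signal rises, ReLU discards the falling segments, and pooling halves the length while keeping the strongest response in each pair. Stacking several such stages is what lets a CNN build up from short movements to whole gestures.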

The complexity of the neural network model often depends on the number of gestures to be recognized and the required accuracy. More complex models with additional layers and nodes can offer higher accuracy but may be computationally intensive, making them unsuitable for real-time applications on resource-constrained devices like the PSoC 6. Therefore, model optimization techniques like pruning, quantization, and layer fusion are often employed to reduce the computational complexity without significantly compromising accuracy.
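Of these techniques, quantization is the easiest to illustrate in a few lines. The sketch below shows symmetric per-tensor quantization of floating-point weights to 8-bit integers, under the assumption of a single scale factor for the whole tensor. Production toolchains typically use more refined schemes (per-channel scales, zero points, calibration data), so treat this as a conceptual model only.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale.

    Each weight is approximated as w ~= scale * q.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer representation."""
    return [v * scale for v in q]


# Hypothetical layer weights.
weights = [-0.5, 0.0, 0.3, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The worst-case rounding error is half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Storing `q` instead of the original floats cuts weight memory to a quarter of 32-bit storage, at the cost of an error of at most half a step (`scale / 2`) per weight. Whether that loss is acceptable has to be checked against the model's accuracy on validation data.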

Implementing Gesture Recognition on PSoC 6

Developing a gesture recognition system involves more than just machine learning models. The development environment needs to be set up, the hardware configured, and the software architecture designed. In the case of PSoC 6, the ModusToolbox software environment provides a range of tools for configuration and development. The code structure for implementing gesture recognition involves various tasks, such as data acquisition, preprocessing, and classification. FreeRTOS is often used for managing these tasks, offering the benefits of real-time operation and future scalability.

The software architecture for implementing gesture recognition on PSoC 6 often involves multiple tasks running concurrently. For instance, one task may be responsible for data acquisition, another for data preprocessing, and yet another for gesture classification. Managing these tasks efficiently requires a robust multitasking environment, which is where real-time operating systems like FreeRTOS come into play. FreeRTOS allows for efficient task scheduling, inter-task communication, and resource management, making it easier to develop complex applications like gesture recognition.
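The task structure described above can be modeled on a desktop machine before moving to firmware. The sketch below uses Python threads and queues as stand-ins for FreeRTOS tasks and queues; it is an analogy for the data flow, not FreeRTOS code, and the task names, queue depth, and toy classifier are all assumptions made for illustration.

```python
import queue
import threading


def run_pipeline(raw_windows, classify, depth=4):
    """Run acquisition -> preprocessing -> classification as three threads."""
    raw_q = queue.Queue(maxsize=depth)   # acquisition -> preprocessing
    feat_q = queue.Queue(maxsize=depth)  # preprocessing -> classification
    stop = object()                      # sentinel marking end of data
    results = []

    def acquisition_task():
        for window in raw_windows:
            raw_q.put(window)            # blocks if the consumer falls behind
        raw_q.put(stop)

    def preprocessing_task():
        while True:
            window = raw_q.get()
            if window is stop:
                feat_q.put(stop)
                return
            peak = max(abs(s) for s in window) or 1.0
            feat_q.put([s / peak for s in window])  # scale to [-1, 1]

    def classification_task():
        while True:
            features = feat_q.get()
            if features is stop:
                return
            results.append(classify(features))

    tasks = [
        threading.Thread(target=acquisition_task),
        threading.Thread(target=preprocessing_task),
        threading.Thread(target=classification_task),
    ]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()
    return results


# Toy classifier standing in for the neural network.
def toy_classify(features):
    return "up" if sum(features) > 0 else "down"


labels = run_pipeline([[1, 2, 3], [-1, -2, -3], [0.5, 0.5, 0.5]], toy_classify)
```

The bounded queues give the same back-pressure behavior one would expect from fixed-length RTOS queues: if classification is slow, acquisition blocks instead of exhausting memory. In firmware, each function would become a FreeRTOS task and the `put`/`get` calls would map to queue send and receive operations.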

Beyond the software architecture, the deployment phase is also crucial. Once the model is trained and optimized, it needs to be converted into a format that can be loaded onto the PSoC 6 MCU. This often involves converting high-level model formats such as Keras H5 (.h5) files into C or C++ code that can be integrated into the ModusToolbox environment. This step can be cumbersome and error-prone if done manually. However, specialized machine learning platforms offer automated tools for model conversion and deployment, which makes the transition from development to production much smoother.
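To give a feel for what "converting a model into C code" means at its simplest, the sketch below turns a blob of model bytes into a C source snippet containing a constant array, similar in spirit to what `xxd -i` produces. It is a generic illustration: the function name and output layout are our own assumptions, and the Infineon and Edge Impulse toolchains use their own, more complete export formats.

```python
def bytes_to_c_array(data, name="model_data", per_line=12):
    """Render raw bytes as a C uint8_t array definition plus its length."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("    " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        "#include <stdint.h>\n\n"
        f"const uint8_t {name}[] = {{\n{body}\n}};\n"
        f"const uint32_t {name}_len = {len(data)};\n"
    )


# Stand-in bytes; in practice these would be read from the exported model file.
demo_source = bytes_to_c_array(bytes([0, 1, 255]), name="demo_model")
```

The resulting text can be written to a `.c` file and compiled into the firmware image, so the model ends up in flash alongside the application code. Automated exporters add what this sketch omits, such as alignment attributes, metadata, and the inference runtime glue.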

The final piece of the puzzle is real-time performance monitoring and updates. Once deployed, the system's performance needs to be monitored to ensure it meets the desired accuracy and latency requirements. Any necessary updates or optimizations can be rolled out as firmware updates, a process that can be automated and streamlined using specialized machine learning platforms.

As we've seen, the journey from concept to deployment in gesture recognition involves multiple steps, each with its own set of challenges and complexities. While the PSoC 6 provides a robust hardware platform, the software complexities often require specialized tools and platforms for efficient development and deployment.

Here is a project by Infineon that explains, step by step, how to implement gesture classification on the PSoC 6: GitHub - Infineon/mtb-example-ml-gesture-classification. For an in-depth exploration of more Edge AI projects, don't forget to check out: Edge Impulse Blog.

Conclusion

Gesture recognition is poised to become a standard feature in the next generation of human-machine interfaces. While platforms like Infineon's PSoC 6 provide the hardware capabilities for such advanced applications, the development process involves several complexities. These complexities can be significantly simplified by leveraging the power of specialized machine learning platforms like Edge Impulse, making it easier to develop, deploy, and scale robust gesture recognition systems.

Further reading: Spotlight on Innovations in Edge Computing and Machine Learning: Predictive Maintenance

About the sponsor: Edge Impulse

Edge Impulse is the leading development platform for embedded machine learning, used by over 1,000 enterprises across 200,000 ML projects worldwide. We are on a mission to enable the ultimate development experience for machine learning on embedded devices for sensors, audio, and computer vision, at scale.

From getting started in under five minutes to MLOps in production, we enable highly optimized ML deployable to a wide range of hardware, from MCUs to CPUs to custom AI accelerators. With Edge Impulse, developers, engineers, and domain experts solve real problems using machine learning in embedded solutions, speeding up development time from years to weeks. We specialize in industrial and professional applications including predictive maintenance, anomaly detection, human health, wearables, and more.