George Mason University Researchers Enable Robots to Intelligently Navigate Challenging Terrain

Empowering Robots with Adaptive Terrain Navigation

This article was first published on indrorobotics.ca. It was also discussed in our Next Byte podcast.

Picture this: You’re out for a drive and in a hurry to reach your destination.

At first, the road is clear and dry. You’ve got great traction and things are going smoothly. But then the road turns to gravel, with twists and turns along the way. You know your vehicle well, and have navigated such terrain before.

And so, instinctually, you slow the vehicle to navigate the more challenging conditions. By doing so, you avoid slipping on the gravel. Your experience with driving, and in detecting the conditions, has saved you from a potential mishap. Yes, you slowed down a bit. But you’ll speed up again when the conditions improve. The same scenario could apply to driving on grass, ice – or even just a hairpin corner on a dry paved road.

For human beings, especially those with years of driving experience, such adjustments are second-nature. We have learned from experience, and we know the limitations of our vehicles. We see and instantly recognize potentially hazardous conditions – and we react.

But what if you’re a robot? In particular, a robot that wants to reach a destination at the maximum safe speed?

That’s the crux of fascinating research taking place at George Mason University: Building robots that are taught – and can subsequently teach themselves – how to adapt to changing terrain to ensure stable travel at the maximum safe speed.

It’s very cool research, with really positive implications.

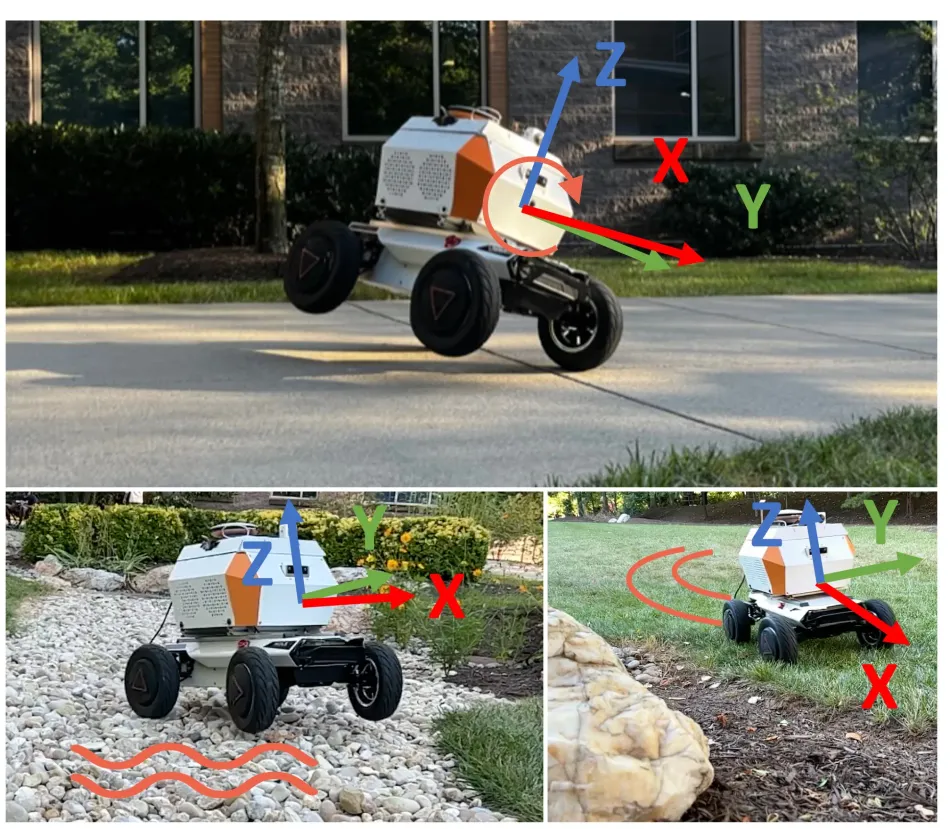

Below: You don’t want this happening on a critical mission…

“XX”

Those are the initials of Dr. Xuesu Xiao, an Assistant Professor at George Mason University. He holds a PhD in Computer Science, and runs a lab that plays off his initials, called the RobotiXX Lab. Here’s a snippet of the description from his website:

“At RobotiXX lab, researchers (XX-Men) and robots (XX-Bots) perform robotics research at the intersection of motion planning and machine learning with a specific focus on robustly deployable field robotics. Our research goal is to develop highly capable and intelligent mobile robots that are robustly deployable in the real world with minimal human supervision.”

We spoke with Dr. Xiao about this work.

It turns out he’s particularly interested in building robots that are useful to First Responders and that can carry out those dull, dirty and dangerous tasks. Speed in such situations can be critical, but comes with its own set of challenges. A robot that makes too sharp a turn at speed on a high-friction surface can easily roll over – effectively becoming useless in its task. Plus, there are the difficulties previously flagged with other terrains.

This area of “motion planning” fascinates Dr. Xiao. Specifically, how to take robots beyond traditional motion planning and enable them to identify and adapt to changing conditions. And that involves machine vision and machine learning.

“Most motion planners used in existing robots are classical methods,” he says. “What we want to do is embed machine learning techniques to make those classical motion planners more intelligent. That means I want the robots to not only plan their own motion, but also learn from their own past experiences.”

In other words, he and his students have been focussing on pushing robots to develop capabilities that surpass the instructions and algorithms a roboticist might traditionally program.

“So they’re not just executing what has been programmed by their designers, right? I want them to improve on their own, utilising all the different sources of information they can get while working in the field.”
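One way to picture a learned model sitting inside a classical planner is as a filter on the planner's candidate commands. What follows is a minimal sketch, not the RobotiXX Lab's code: `pick_command`, `toy_predictor` and the candidate list are hypothetical names invented for illustration, and the "learned" predictor is a made-up formula standing in for a model trained on past drives.

```python
from typing import Callable, Iterable, Optional, Tuple

# A candidate command: (forward speed in m/s, steering curvature in 1/m).
Command = Tuple[float, float]


def pick_command(
    candidates: Iterable[Command],
    predict_instability: Callable[[Command], float],
    max_instability: float,
) -> Optional[Command]:
    """Return the fastest candidate whose predicted instability is acceptable.

    `candidates` stands in for what a classical planner would propose;
    `predict_instability` stands in for a model learned from past drives.
    Both are hypothetical placeholders for this sketch.
    """
    safe = [c for c in candidates if predict_instability(c) <= max_instability]
    if not safe:
        return None  # nothing is safe enough; the caller should slow down or stop
    return max(safe, key=lambda c: c[0])


def toy_predictor(command: Command) -> float:
    """Made-up stand-in for a learned model.

    Uses lateral acceleration (speed squared times curvature) as the risk score,
    so risk grows with both speed and turn sharpness.
    """
    speed, curvature = command
    return speed * speed * abs(curvature)


if __name__ == "__main__":
    options = [(1.0, 0.5), (2.0, 0.5), (3.0, 0.5), (4.0, 0.5)]
    print(pick_command(options, toy_predictor, max_instability=5.0))
```

The planner still proposes the motions. The learned part only decides which of them the robot is competent to execute on the terrain in front of it.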

The Platform

The RobotiXX Lab has chosen the Hunter SE as its core platform for this work. That platform was supplied by InDro Robotics, and modified with the InDro Commander module. That module supports communication over 5G (and 4G) networks for high-speed data throughput. It comes complete with multiple USB slots and the Robot Operating System (ROS) library onboard, making it easy to add (or remove) sensors and other modifications. It also has a remote dashboard for controlling missions, plotting waypoints, etc.

Dr. Xiao was interested in this platform for a specific reason.

“The main reason is because it’s high speed, with a top speed of 4.8m per second. For a one-fifth/one-sixth scale vehicle that is a very, very high speed. And we want to study what will happen when you are executing a turn, for example, while driving very quickly.”

As noted previously, people with driving experience instinctively get it. They know how to react.

“Humans have a pretty good grasp on what terrain means,” he says. “Rocky terrain means things will get bumpy, grass can impede a motion, and if you’re driving on a high-friction surface you can’t turn sharply at speed. We understand these phenomena. The problem is, robots don’t.”

So how can we teach robots to be more human in their ability to navigate and adjust to such terrains – and to learn from their mistakes?

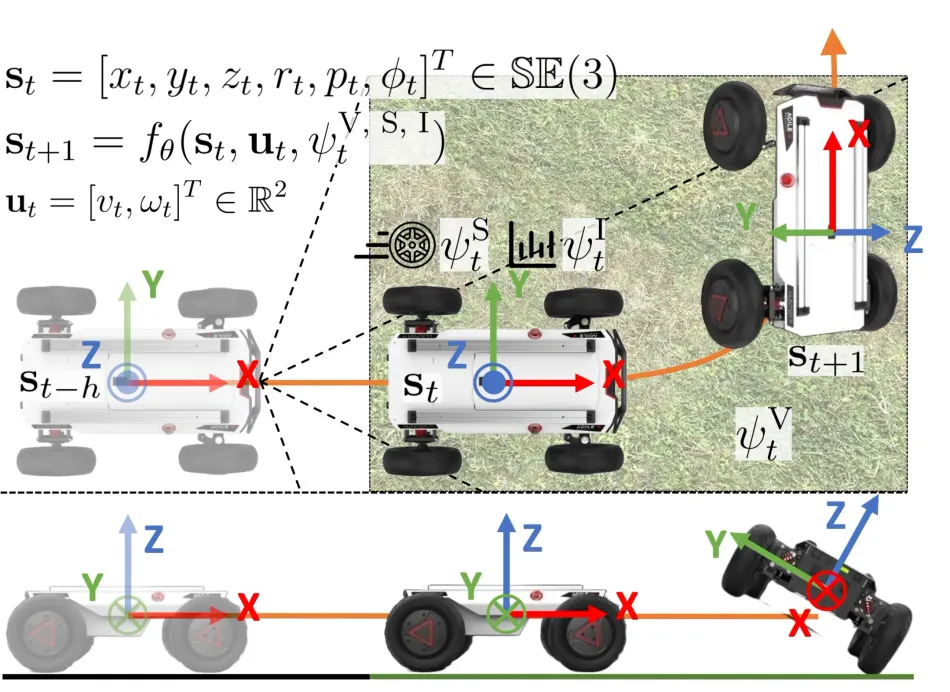

As you’ll see in the diagram below, it gets *very* technical. But we’ll do our best to explain.

The Approach

The basics here are pretty clear, says Dr. Xiao.

“We want to teach the robots to know the consequences of taking some aggressive maneuvers at different speeds on different terrains. If you drive very quickly while the friction between your tires and the ground is high, taking a very sharp turn will actually cause the vehicle to roll over – and there’s no way the robot by itself will be able to recover from it, right? So the whole idea of the paper is trying to enable robots to understand all these consequences; to make them ‘competence aware.’”
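Why would high friction make a rollover more likely? A textbook rigid-body approximation gives the intuition. In a steady turn of radius r at speed v, the lateral acceleration is v²/r. The tires slide once that exceeds μ·g, and the vehicle starts to tip once it exceeds g·(track width / 2) / (height of the centre of gravity). Whichever limit is lower is reached first. The sketch below encodes only that simplified model. It is not the learned approach described in the paper, and the vehicle dimensions in the usage example are hypothetical, not Hunter SE specifications.

```python
import math
from typing import Tuple

G = 9.81  # gravitational acceleration, m/s^2


def lateral_limit(mu: float, track_width: float, cg_height: float) -> Tuple[float, str]:
    """Return (max lateral acceleration in m/s^2, failure mode reached first)."""
    slide_limit = mu * G                              # tires lose grip above this
    tip_limit = G * (track_width / 2.0) / cg_height   # inner wheels lift above this
    if tip_limit < slide_limit:
        return tip_limit, "rollover"
    return slide_limit, "slide"


def max_turn_speed(turn_radius: float, mu: float, track_width: float, cg_height: float) -> float:
    """Fastest steady speed (m/s) through a flat turn of the given radius (m)."""
    a_max, _ = lateral_limit(mu, track_width, cg_height)
    return math.sqrt(a_max * turn_radius)  # rearranged from a = v^2 / r


if __name__ == "__main__":
    # Hypothetical vehicle: 0.5 m track width, centre of gravity 0.3 m above ground.
    for surface, mu in [("grippy pavement", 1.0), ("loose gravel", 0.4)]:
        _, mode = lateral_limit(mu, 0.5, 0.3)
        speed = max_turn_speed(2.0, mu, 0.5, 0.3)
        print(f"{surface}: limit set by {mode}, max speed {speed:.2f} m/s on a 2 m turn")
```

With these made-up dimensions, the grippy surface fails by rollover while the loose surface fails by sliding. Real off-road terrain adds bumps, slopes and suspension effects that this model ignores, which is part of the case for learning the consequences from data.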

The paper Dr. Xiao is referring to has been submitted for scientific publication. It’s pretty meaty, and is intended for engineers/roboticists. It’s authored by Dr. Xiao and researchers Anuj Pokhrel, Mohammad Nazeri, and Aniket Datar. It’s entitled: CAHSOR: Competence-Aware High-Speed Off-Road Ground Navigation in SE(3).

That SE(3) term stands for the Special Euclidean group in three dimensions. It describes every way a rigid object can move and rotate in 3D space. In practice, it means keeping track of the robot’s full pose: its position along three axes plus its orientation (roll, pitch and yaw), rather than treating the vehicle as a point sliding across a flat map.
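For readers who prefer code to group theory, an SE(3) pose is commonly written as a 4x4 matrix that combines a 3x3 rotation with a 3D translation. The sketch below builds one from position and roll/pitch/yaw angles and uses it to move a point from the robot's frame into the world frame. The function names are hypothetical, and the rotation convention (R = Rz(yaw) · Ry(pitch) · Rx(roll)) is an assumption chosen for illustration, not something taken from the paper.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def se3_pose(x: float, y: float, z: float, roll: float, pitch: float, yaw: float) -> Matrix:
    """Build a 4x4 homogeneous transform from position (m) and roll/pitch/yaw (rad).

    The rotation part is R = Rz(yaw) * Ry(pitch) * Rx(roll).
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(pose: Matrix, point: Sequence[float]) -> List[float]:
    """Map a point from the robot's frame into the world frame."""
    px, py, pz = point
    homogeneous = (px, py, pz, 1.0)
    return [sum(pose[row][col] * homogeneous[col] for col in range(4)) for row in range(3)]


if __name__ == "__main__":
    # Robot at (2, 3, 0.5), turned 90 degrees to the left, with no roll or pitch.
    pose = se3_pose(2.0, 3.0, 0.5, roll=0.0, pitch=0.0, yaw=math.pi / 2)
    # A point one metre ahead of the robot, expressed in world coordinates.
    print(transform_point(pose, (1.0, 0.0, 0.0)))
```

A planner that only tracked x, y and yaw would be working in SE(2). The extra roll, pitch and height terms are what let a system reason about tipping and bouncing.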

We’ll get to more of the paper in a minute, but we asked Dr. Xiao to give us some help understanding what the team did to achieve these results. Was it just coding? Or were there some hardware adjustments as well?

Turns out, there were both. Yes, there was plenty of complex coding. There was also the addition of an RTK GPS unit so that the robot’s position in space could be measured as accurately as possible. Because the team soon discovered that intense vibration over rough surfaces could loosen components, threadlock was used to keep things tightly in place.

But, as you might have guessed, machine vision and machine learning are a big part of this whole process. The robot needs to identify the terrain in order to know how to react.

We asked Dr. Xiao if an external data library was used and imported for the project. The answer? “No.”

“There’s no dataset out there that includes all these different basic catastrophic consequences when you’re doing aggressive maneuvers. So all the data we used to train the robot and to train our machine learning algorithms were all collected by ourselves.”

Slips, Slides and Rollovers

As part of the training process, the Hunter SE was driven over all manner of demanding terrain.

“We actually bumped it through very large rocks many times and also slid it all over the place,” he says. “We actually rolled the vehicle over entirely many times. This was all very important for us to collect some data so that it learns to not do that in the future, right?”

And while the cameras and machine vision were instrumental in determining what terrain was coming up, the role of the robot’s Inertial Measurement Unit was also key.

“It’s actually multi-modal perception, and vision is just part of it. So we are looking at the terrain using camera images and we are also using our IMU. Those inertial measurement unit readings sense the acceleration and the angular velocities of the robot so that it can better respond,” he says.

“Because ultimately it’s not only about the visual appearance of the terrain, it is also about how you drive on it, how you feel it.”
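To make "how you feel it" a little more concrete, here is a rough sketch of how a short window of IMU samples could be boiled down to a couple of numbers describing the ride. The two metrics (RMS vertical acceleration as "bumpiness" and peak roll rate) and the function name are illustrative assumptions. The actual terrain representation used by the team is learned from camera and inertial data and is described in the paper.

```python
import math
from typing import Dict, Sequence


def summarize_imu_window(
    vertical_accel: Sequence[float],  # m/s^2, with gravity already removed
    roll_rate: Sequence[float],       # rad/s
) -> Dict[str, float]:
    """Condense a short window of IMU samples into two illustrative numbers."""
    if not vertical_accel or not roll_rate:
        raise ValueError("both sample windows must be non-empty")
    # Root-mean-square of vertical acceleration: larger means a rougher ride.
    bumpiness = math.sqrt(sum(a * a for a in vertical_accel) / len(vertical_accel))
    # Largest roll rate in either direction: larger means the body is tipping faster.
    peak_roll_rate = max(abs(w) for w in roll_rate)
    return {"bumpiness_rms": bumpiness, "peak_roll_rate": peak_roll_rate}


if __name__ == "__main__":
    smooth = summarize_imu_window([0.1, -0.2, 0.1], [0.01, -0.02, 0.01])
    rocky = summarize_imu_window([3.0, -4.0, 2.5], [0.4, -0.9, 0.6])
    print(smooth)
    print(rocky)
```

Two patches of ground can look alike to a camera and still produce very different readings here, which is why vision alone is only part of the picture.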

The Results

Well, they’re impressive.

The full details are outlined in this paper, but here’s the headline: Regardless of whether the robot was operating autonomously heading to defined waypoints, or whether a human was controlling it, there was a significant reduction in incidents (slips, slides, rollovers etc.) with only a small reduction in overall speed.

Specifically, “CAHSOR (Competence-Aware High-Speed Off-Road Ground Navigation) can efficiently reduce vehicle instability by 62% while only compromising 8.6% average speed with the help of TRON (visual and inertial Terrain Representation for Off-road Navigation).”

That’s a tremendous reduction in instability – meaning the likelihood that these robots will reach their destination without incident is greatly improved. Think of the implications for a First Responder application, where without this system a critical vehicle rushing to a scene carrying medical supplies – or even simply for situational awareness – might roll over and be rendered useless. The slight reduction in speed is a small price to pay for greatly enhancing the odds of an incident-free mission.
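To get a feel for that trade-off, the two reported percentages can be applied to a made-up mission. In the sketch below, only the 62% and 8.6% figures come from the paper's summary. The baseline instability score, baseline speed and distance are hypothetical inputs chosen purely for the arithmetic.

```python
from typing import Tuple

INSTABILITY_REDUCTION = 0.62  # reported: vehicle instability reduced by 62%
SPEED_REDUCTION = 0.086       # reported: average speed compromised by 8.6%


def apply_tradeoff(
    baseline_instability: float, baseline_speed: float, distance: float
) -> Tuple[float, float, float]:
    """Scale hypothetical baseline numbers by the two reported percentages.

    Returns (new instability score, new average speed in m/s, extra travel time in s).
    """
    instability = baseline_instability * (1.0 - INSTABILITY_REDUCTION)
    speed = baseline_speed * (1.0 - SPEED_REDUCTION)
    extra_time = distance / speed - distance / baseline_speed
    return instability, speed, extra_time


if __name__ == "__main__":
    # Hypothetical mission: instability score of 100, 3.0 m/s average, 1 km run.
    instability, speed, extra_time = apply_tradeoff(100.0, 3.0, 1000.0)
    print(f"instability: {instability:.0f}, speed: {speed:.3f} m/s, extra time: {extra_time:.1f} s")
```

For that hypothetical one-kilometre run, the robot would arrive roughly half a minute later while its instability score drops from 100 to about 38.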

“Without using our method, a robot will just blindly go very aggressively over every single terrain – while risking rolling over, bumps and vibrations on rocks, maybe even sliding and rolling off a cliff.”

What’s more, these robots continue to learn with each and every mission. They can also share data with each other, so that the experience of one machine can benefit many. Dr. Xiao says the learnings from this project, which began in January of 2023, can be applied to marine and even aerial robots as well.

For the moment, though, the emphasis has been fully on the ground. And there can be no question this research has profound and positive implications for First Responders (and others) using robots in mission-critical situations.

Below: The Hunter SE gets put through its paces. (All images courtesy of Dr. Xiao.)

InDro's Take

We’re tremendously impressed with the work being carried out by Dr. Xiao and his team at George Mason University. We’re also honoured to have played a small role by supplying the Hunter SE and InDro Commander, as well as occasional support as the project progressed.

“The use of robotics by First Responders is growing rapidly,” says InDro Robotics CEO Philip Reece. “Improving their ability to reach destinations safely on mission-critical deployments is extremely important work – and the data results are truly impressive.

“We are hopeful the work of Dr. Xiao and his team is adopted in future beyond research and into real-world applications. There’s clearly a need for this solution.”

If your institution or R&D facility is interested in learning more about InDro’s stable of robots (and there are many), please reach out to us here.