Bio-inspired deep reinforcement learning teaches these smart magnetic microbots to swim

Designed for use in the human body for everything from drug delivery to less-invasive biopsies, these tiny microrobots operate under the control of a deep-learning system trained with no modelling or prior environmental knowledge.

Made to ease researchers' burdens, this microrobot control system trains itself.

This article was discussed in our Next Byte podcast.


Microrobots able to swim through fluid are of increasing interest in the medical field: they could deliver precisely targeted drugs, obtain tissue samples, or even break up and remove blood clots. Actually controlling the devices once they're inside the human body, though, remains a challenge, but one that a pair of scientists from the University of Pittsburgh and Carnegie Mellon University may have found a way to solve.

Having fabricated simple magnetic microrobots shaped like tiny corkscrews using a 3D-printed mold, the pair set about using deep reinforcement learning to create a system that could control their movement without any manual training or simulation, and in fewer than 100,000 training steps.

The challenge

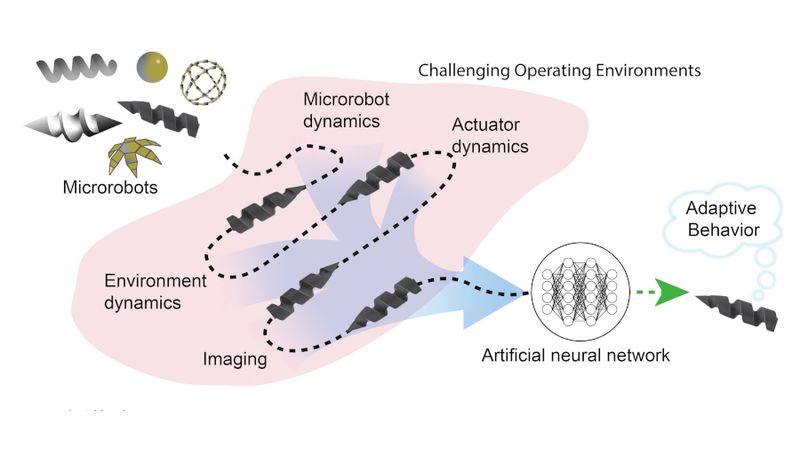

A tiny robot that easily fits on the very tip of your finger isn't easy to control. Build it from complex composite materials, sometimes even integrating biological components, then set it loose in a complex environment like the human body, and the challenge becomes near-intractable.

With so many variables, simulation is either impossible outright or requires a range of simplifying sacrifices, potentially reducing the performance or capabilities of the finished control system. What Michael R. Behrens and Warren C. Ruder have proposed instead is to do away with simulation altogether and build a control system based on deep reinforcement learning.

Designed to mimic how real-world organisms learn, reinforcement learning allows a deep learning system to adapt based on its observations of the state of an environment and the rewards its actions earn. In this case, the environment is a liquid in which tiny robots swim under magnetic control, aiming for a preset location that triggers a reward signal to let the control system know it's done its job.
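The observe, act, and reward cycle described above can be sketched as a short loop. This is a minimal illustration, not the authors' code: `ToyTrackEnv` is a hypothetical stand-in whose made-up dynamics exist only so the loop can run (in the real experiment the next state comes from a camera, not a formula), and the learning update itself (SAC, in the paper) is left out so the interaction pattern stays visible.

```python
import random


class ToyTrackEnv:
    """Hypothetical stand-in environment: a 1-D 'distance along the track'.

    The dynamics below are invented for illustration only; the real system
    has no such formula, which is the whole point of a model-free approach.
    """

    def __init__(self, goal=1.0, seed=0):
        self.rng = random.Random(seed)
        self.goal = goal
        self.pos = 0.0

    def reset(self):
        self.pos = 0.0
        return self.pos  # the observation is just the current position

    def step(self, action):
        # Toy response to an action in [-1, 1], plus a little noise.
        self.pos += 0.05 * action + self.rng.gauss(0.0, 0.01)
        done = self.pos >= self.goal
        reward = 1.0 if done else 0.0  # sparse reward: only at the goal
        return self.pos, reward, done


def run_episode(env, policy, max_steps=1000):
    """Run one episode; return (total reward, steps taken)."""
    obs = env.reset()
    total = 0.0
    for t in range(max_steps):
        action = policy(obs)          # agent picks an action from the observation
        obs, reward, done = env.step(action)
        total += reward               # a learner would update its policy here
        if done:
            return total, t + 1
    return total, max_steps
```

For example, `run_episode(ToyTrackEnv(), lambda obs: 1.0)` pushes steadily towards the goal and collects the single reward after roughly 20 steps; a reinforcement learning agent has to discover a policy like that from the reward alone.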

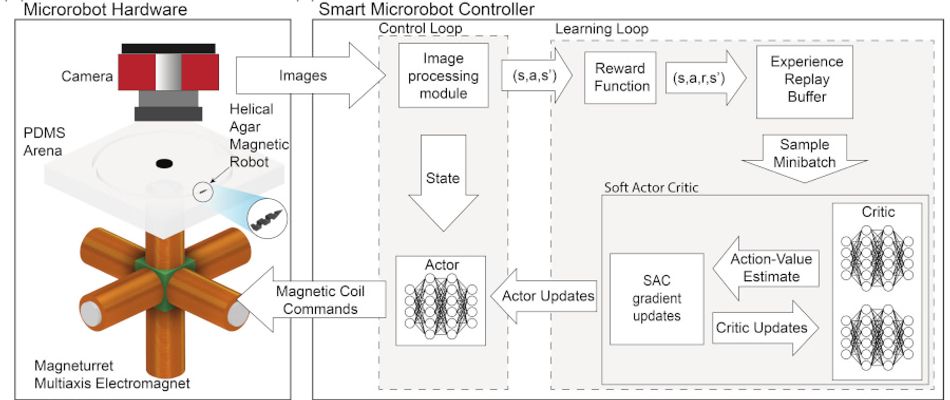

Based on the Soft Actor-Critic (SAC) algorithm, the proposed deep-learning system doesn't require any prior knowledge of the microrobot itself, the custom-built electromagnetic actuator that drives it, or the environment in which it's placed. It can also, the team found, deliver impressive results quickly, demonstrating its potential to reduce the time and resources required to develop microrobotic systems.
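For context, SAC, introduced by Haarnoja and colleagues in 2018, trains a stochastic policy to maximize expected reward plus an entropy bonus that keeps the policy exploring. The standard objective from the original SAC work is shown below, where ρ_π is the distribution of states and actions visited under policy π and the temperature α trades reward against entropy. The article does not say which SAC variant or settings Behrens and Ruder used.

```latex
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
  \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right],
\qquad
\mathcal{H}\big(\pi(\cdot \mid s_t)\big) = -\,\mathbb{E}_{a \sim \pi(\cdot \mid s_t)}\big[\log \pi(a \mid s_t)\big]
```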

The experiment

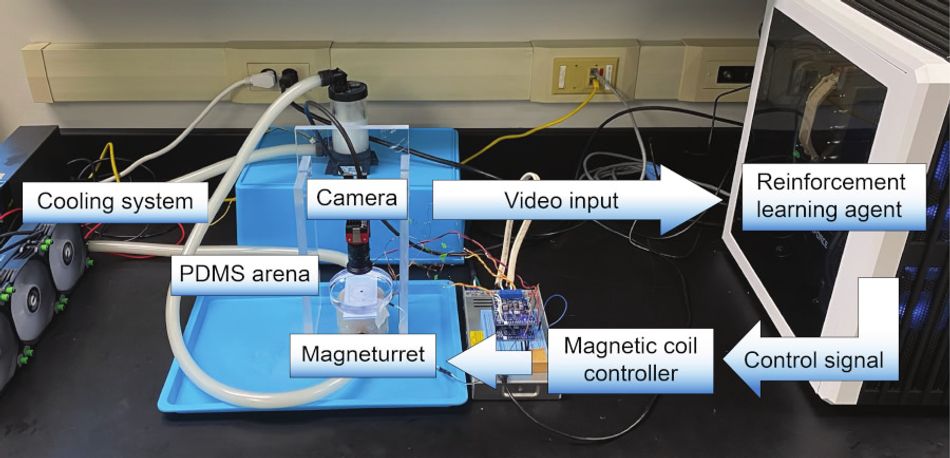

To prove the concept, the team built tiny robots, measuring just 4.4×1 mm (around 0.17×0.04"), from a magnetically sensitive soft polymer injected into a 3D-printed mold. These were then placed under the control of a custom three-axis magnetic coil actuator dubbed the Magneturret, built from six electromagnetic coils mounted on a 3D-printed cube and driven by a microcontroller board and an H-bridge motor driver.
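To make the actuator's role concrete, here is a minimal sketch of how a desired magnetic field vector might be turned into per-axis H-bridge commands, with the sign of each component setting current direction and its magnitude setting PWM duty. Everything here is an assumption rather than the Magneturret's actual firmware: it supposes one opposing coil pair per axis, a field that scales linearly with duty cycle, and a rotating field about one axis, which is a common way to drive corkscrew-shaped swimmers. The names `AxisCommand`, `field_to_commands`, and `rotating_field` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AxisCommand:
    duty: float    # PWM duty cycle in [0, 1] for this axis' H-bridge channel
    forward: bool  # H-bridge direction: True drives positive current


def field_to_commands(bx, by, bz, max_field):
    """Map a desired field vector to (hypothetical) per-axis H-bridge commands.

    Assumes each axis is one coil pair whose field is linear in duty cycle,
    reaching max_field at 100% duty.
    """
    if max_field <= 0:
        raise ValueError("max_field must be positive")
    commands = []
    for b in (bx, by, bz):
        duty = min(abs(b) / max_field, 1.0)  # clip to the driver's limit
        commands.append(AxisCommand(duty=duty, forward=b >= 0))
    return commands


def rotating_field(t, freq_hz, magnitude):
    """Field vector rotating in the x-y plane (about the z axis) at freq_hz."""
    phase = 2 * math.pi * freq_hz * t
    return magnitude * math.cos(phase), magnitude * math.sin(phase), 0.0
```

Sampling `rotating_field` at the control rate and passing each vector to `field_to_commands` yields a stream of coil commands. A learned policy could plausibly output such field components directly as its action, but that is one possible design, not necessarily the one used in the paper.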

The robots were placed in a circular, fluid-filled track designed as a simplified stand-in for the in vivo environment microrobots would encounter inside the human body. "We hypothesized," the researchers write, "that we would be able to develop a high-performance control system using [reinforcement learning] without going through the effort of developing a system model first."

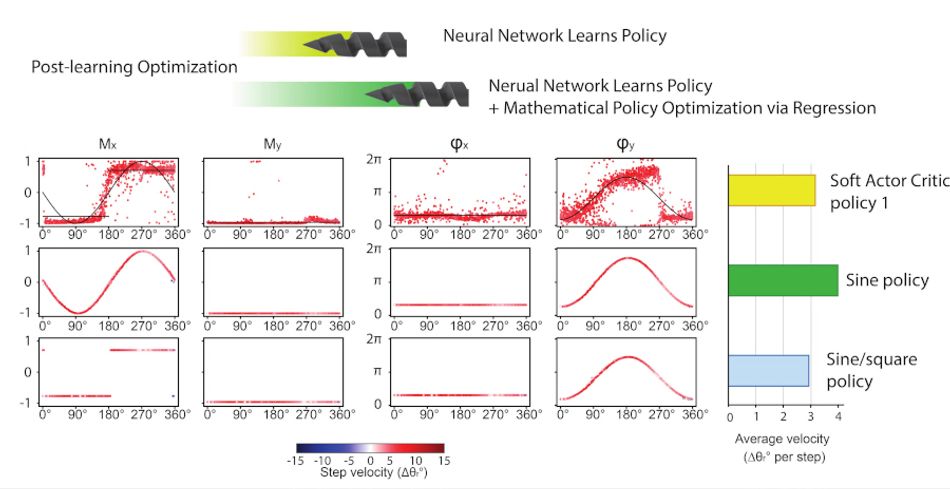

The hypothesis held. With the microrobots' progress monitored via camera, and a reward signal sent once they had covered a set distance clockwise around the track, the learning system learned to control the devices without any prior model. For the first 20,000 training steps, no net movement was observed; during the second set of 20,000 steps a trend towards clockwise movement emerged; and by the end of training the robots were moving primarily clockwise, as intended.
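A reward of this kind, "pay out once the robot has gone a set distance clockwise", can be sketched from tracked positions alone. This is a minimal illustration under stated assumptions, not the authors' reward function: it assumes a camera tracker already supplies the robot's (x, y) centroid, uses standard math axes with y pointing up (image coordinates with y pointing down flip the sign), and measures distance as angle swept around the track's centre. `angular_step` and `ClockwiseGoal` are hypothetical names.

```python
import math


def angular_step(prev_xy, curr_xy, center):
    """Signed angle (radians) swept between two positions; clockwise is positive.

    Assumes math axes (y up). With image coordinates (y down), negate the result.
    """
    a0 = math.atan2(prev_xy[1] - center[1], prev_xy[0] - center[0])
    a1 = math.atan2(curr_xy[1] - center[1], curr_xy[0] - center[0])
    d = a1 - a0
    # Wrap into [-pi, pi) so crossing the +/-pi seam isn't read as a full lap.
    d = (d + math.pi) % (2 * math.pi) - math.pi
    return -d  # atan2 grows counter-clockwise, so negate to make clockwise positive


class ClockwiseGoal:
    """Accumulates clockwise progress and signals a sparse reward at a target."""

    def __init__(self, center, target_radians):
        self.center = center
        self.target = target_radians
        self.progress = 0.0
        self.prev = None

    def update(self, xy):
        """Feed one tracked position; return 1.0 once the target is reached, else 0.0."""
        if self.prev is not None:
            self.progress += angular_step(self.prev, xy, self.center)
        self.prev = xy
        return 1.0 if self.progress >= self.target else 0.0
```

Because progress is accumulated with its sign, counter-clockwise wandering cancels out earlier clockwise gains, so only net clockwise motion earns the reward. That matches the article's description of early training showing no net movement before a clockwise trend appeared.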

With a working control system demonstrated, Behrens and Ruder argue that their approach has a number of key advantages over previously published efforts. Chief among them is removing the need for dynamic modelling, which saves researchers time and effort and could yield a control system that exceeds the performance of classical equivalents built on simplified models.

The pair have also proposed that reinforcement learning could enhance other control strategies rather than supplant them, and that the policies it derives could uncover useful microrobot behaviors that human engineers would otherwise miss.

Finally, the researchers argue that the ability to derive control models from high-dimensional data, demonstrated in earlier related work, could make the reinforcement learning approach applicable not just to camera inputs but to a range of biomedical imaging modalities, including MRI, X-ray, and ultrasound.

"All these points strongly favor the use of [reinforcement learning] for developing the next generation of microrobot control systems," the pair conclude.

The work has been published on Cornell University’s arXiv.org preprint server under open access terms.

Reference

Michael R. Behrens, Warren C. Ruder: Smart Magnetic Microrobots Learn to Swim with Deep Reinforcement Learning. arXiv:2201.05599 [cs.RO].