2023 Edge AI Technology Report. Chapter X: Future of Edge AI

What’s Next for Artificial Intelligence and Edge Computing?


Edge AI, empowered by recent advances in Artificial Intelligence, is driving significant shifts in today's technology landscape. By performing computation near the data source, Edge AI improves responsiveness, strengthens security and privacy, promotes scalability, enables distributed computing, and improves cost efficiency.

Wevolver has partnered with industry experts, researchers, and tech providers to create a detailed report on the current state of Edge AI. This document covers its technical aspects, applications, challenges, and future trends. It merges practical and technical insights from industry professionals, helping readers understand and navigate the evolving Edge AI landscape.

Future of Edge AI

Shaping the future

In the coming years, growing demand for real-time, low-latency, and privacy-friendly AI applications will lead to a proliferation of Edge AI deployments. These deployments will become increasingly accurate and efficient by leveraging advances in communication networks (e.g., 5G and 6G networking), artificial intelligence (e.g., neuromorphic computing, data-efficient AI), and IoT devices. Researchers and open-source communities will play a fundamental role in driving this evolution of Edge AI.

The development and deployment of efficient Edge AI applications hinge on the evolution of several technologies that support edge devices and compute-efficient AI functions. Specifically, the following technological developments will shape the future of Edge AI.

Emergence and Rise of 5G/6G Networks

Deploying real-time, low-latency Edge AI applications requires ultra-fast connectivity and high bandwidth availability. This is why conventional 4G and LTE (Long Term Evolution) networks are not entirely suitable for delivering Edge AI benefits at scale. Over the last few years, the advent of 5G technology has opened new opportunities for high-performance Edge AI deployments.

5G networks deliver far more bandwidth than previous-generation networks (the IMT-2020 requirements for 5G target peak download rates of 20 Gbit/s, compared with 1 Gbit/s for 4G) while supporting edge computing applications in environments where thousands of IoT devices are deployed. As such, 5G networks enable Edge AI applications to access large amounts of training data and to operate without disruption in crowded, device-saturated environments. As 5G networks continue to expand, we can expect a proliferation of AI-enabled intelligent edge devices that perform complex tasks and make autonomous decisions in real time.
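A quick back-of-the-envelope calculation shows why bandwidth matters both for moving training data and for crowded deployments. The sketch below assumes illustrative throughputs of 50 Mbit/s for a 4G link and 1 Gbit/s for a 5G link; these are assumptions chosen for the arithmetic, not measured or guaranteed figures, and the equal-share model ignores scheduling and protocol overhead.

```python
def transfer_time_s(size_bytes: float, link_rate_bps: float) -> float:
    """Seconds needed to move size_bytes over a link of link_rate_bps (bits per second)."""
    return size_bytes * 8 / link_rate_bps


def per_device_rate_bps(cell_rate_bps: float, n_devices: int) -> float:
    """Naive equal share of one cell's capacity among n_devices active devices."""
    return cell_rate_bps / n_devices


# Illustrative link rates (assumptions, not measurements).
RATE_4G_BPS = 50e6   # assumed 4G/LTE throughput
RATE_5G_BPS = 1e9    # assumed 5G throughput

dataset_bytes = 1e9  # a hypothetical 1 GB training dataset
print(transfer_time_s(dataset_bytes, RATE_4G_BPS))  # 160.0
print(transfer_time_s(dataset_bytes, RATE_5G_BPS))  # 8.0
```

Under the same naive model, 1,000 devices sharing a 1 Gbit/s cell would each get about 1 Mbit/s, which is why connection density matters as much as peak rate in device-saturated settings.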

In the future, 6G networks are expected to offer even higher speeds. 6G infrastructure is currently under research and development. It is expected to become commercially available after 2030 and to use higher frequency bands than 5G (e.g., sub-terahertz bands above 100 GHz, beyond the lower millimeter-wave bands that 5G already uses). 6G networks will increase bandwidth availability and network reliability, which will be vital for supporting future large-scale Edge AI applications. These applications will involve numerous heterogeneous AI-based devices running a multitude of AI algorithms, including algorithms that process many more data points thanks to 6G's higher capacity.
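The term "millimeter wave" comes from the wavelength relation λ = c/f: frequencies from 30 GHz to 300 GHz correspond to free-space wavelengths from roughly 10 mm down to 1 mm. A minimal helper (the function name is hypothetical) makes the conversion explicit:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_mm(freq_hz: float) -> float:
    """Free-space wavelength in millimeters: lambda = c / f."""
    return SPEED_OF_LIGHT_M_PER_S / freq_hz * 1_000


for f_ghz in (30, 100, 300):
    print(f"{f_ghz} GHz -> {wavelength_mm(f_ghz * 1e9):.2f} mm")
```

This prints about 9.99 mm, 3.00 mm, and 1.00 mm, so sub-terahertz bands above 100 GHz sit at the short-wavelength end of the millimeter-wave range.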

Neuromorphic Computing: Increasing AI Capabilities by Mimicking the Human Brain

The future of Edge AI will also be shaped by novel AI paradigms such as neuromorphic computing. This approach mimics the structure and functionality of the human brain by emulating its neural networks and synaptic connections. It relies on neuromorphic chips designed to process information more efficiently and to adapt more quickly and effectively to new situations. In practice, neuromorphic chips comprise many artificial neurons and artificial synapses that mimic the spiking behavior of biological neurons. Neuromorphic computing research therefore also brings us a step closer to understanding how the brain encodes information and to exploiting these principles in AI applications.

Neuromorphic computing chips are well suited to delivering Edge AI benefits at scale because, for many workloads (particularly sparse, event-driven ones), they consume less power and process data faster than conventional processors. Most importantly, they promise to equip Edge AI systems with brain-inspired perception and learning capabilities that will be extremely useful in many pervasive applications (e.g., obstacle avoidance, robust acoustic perception). As neuromorphic computing technology matures, it will enable a new generation of AI-based edge devices that can learn and adapt in real time.
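To make the idea of spiking concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a textbook model widely used in spiking neural network research. It is not the neuron model of any particular neuromorphic chip, and the time constant, threshold, and input values are arbitrary assumptions. The neuron integrates its input, leaks toward its resting potential, and emits a discrete spike (an event) only when its membrane potential crosses a threshold, which is what allows downstream computation to be sparse and event-driven.

```python
def lif_spike_times(input_current, tau=20.0, v_rest=0.0, v_threshold=1.0, dt=1.0):
    """Simulate a leaky integrate-and-fire neuron and return the steps at which it spikes.

    Assumes the membrane potential resets to v_rest after each spike.
    """
    v = v_rest
    spike_times = []
    for step, i_in in enumerate(input_current):
        # Euler step: leak toward v_rest plus the injected input.
        v += dt * (-(v - v_rest) / tau + i_in)
        if v >= v_threshold:
            spike_times.append(step)  # emit a discrete spike (an event)
            v = v_rest                # reset the membrane potential
    return spike_times


print(lif_spike_times([0.1] * 50))  # [13, 27, 41]
```

With a constant input of 0.1 per step, the neuron fires every 14 steps; with no input it stays silent and produces no events at all, while a stronger input makes it fire more often.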

Event-based Processing & Learning: BrainChip’s Neuromorphic AI Solution

BrainChip is one of the pioneers of bringing neuromorphic computing to the edge. While traditional neuromorphic approaches have used analog designs to mimic the neuron and synapse, BrainChip has taken a novel approach on three counts.

BrainChip’s Akida neural processor is offered as IP and can be configured for anything from energy-harvesting applications at the sensor edge to high-performance yet power-efficient solutions at the network edge. It is sensor-agnostic and has been demonstrated with a variety of sensors. As a self-managed neural processor that executes most networks entirely in hardware without CPU intervention, it addresses key congestion and system-bandwidth challenges in embedded SoCs while delivering highly efficient performance. With support for INT8 down to INT1 precision and for skip connections, it handles most of today's complex networks, as well as spiking neural networks. This led NASA to select BrainChip’s first silicon platform in 2021 to demonstrate in-space autonomy and cognition in one of the most extreme power- and thermally-constrained applications. Similarly, Mercedes-Benz demonstrated BrainChip technology in its VISION EQXX concept vehicle, which can travel over 1,000 km on a single charge. In its latest generation, BrainChip has taken another big step by adding Temporal Event-based Neural Networks (TENNs) and complementary separable 3D convolutions, which, according to the company, speed up some complex time-series applications by 500x while radically reducing model size and footprint without compromising accuracy. This enables a new class of compact, cost-effective devices for high-resolution video object detection, security/surveillance, audio, health, and industrial applications. While neuromorphic computing is still discussed as a future paradigm, BrainChip is already bringing it to market.
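Precision options such as INT8 down to INT1 refer to how many bits represent each weight or activation. The following is a minimal sketch of generic symmetric uniform quantization, with a sign-only (binary) special case for 1 bit; it is not BrainChip's actual quantization scheme, and the function names are hypothetical. Fewer bits shrink the model but increase the rounding error.

```python
def quantize(weights, bits):
    """Quantize real-valued weights to signed integers; return (ints, scale)."""
    if bits == 1:
        # Binary case: keep only the sign, scaled by the mean magnitude.
        scale = sum(abs(w) for w in weights) / len(weights)
        return [1 if w >= 0 else -1 for w in weights], scale
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the symmetric range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate real values."""
    return [v * scale for v in q]


def max_error(weights, bits):
    q, scale = quantize(weights, bits)
    return max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))


w = [0.5, -0.25, 0.1, -1.0]
for bits in (8, 4, 1):
    print(bits, quantize(w, bits)[0], round(max_error(w, bits), 4))
```

The printed maximum error grows as the bit width drops, which is the accuracy-versus-footprint trade-off that low-precision hardware has to manage.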

Data-Efficient AI: Maximizing Value in the Absence of Adequate Quality Data

One of the significant challenges in AI is the need for vast amounts of data to train machine learning algorithms (e.g., deep learning models) effectively. Often, there is not enough high-quality data to train such algorithms. This issue is particularly acute for Edge AI systems and applications, because embedded machine learning and TinyML systems are specialized and require their own data collection processes. Moreover, Edge AI systems face computational and storage constraints that prevent them from fully leveraging huge AI models and large datasets.

Data-efficient AI techniques aim to overcome these limitations by enabling AI models to learn from limited data samples. They thus reduce the need for large-scale data collection while lowering the computational requirements of Edge AI systems. Ongoing research on data-efficient AI explores many techniques, ranging from reusing pre-trained models and adapting them with domain knowledge (e.g., transfer learning) to paradigms that involve humans in the data labeling process as part of human-AI interaction (e.g., active learning). Related techniques reduce the size of AI models (e.g., model pruning) so that compact models can fit on edge devices. Moreover, some techniques, such as one-shot learning and few-shot learning, are inherently designed to learn from a handful of examples.
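Few-shot learning can be illustrated with a nearest-prototype classifier in the spirit of prototypical networks: each class is summarized by the mean of a handful of labeled feature vectors, and a new sample is assigned to the closest class mean. This is a minimal sketch. It assumes the feature vectors come from some pre-trained embedding model (not shown), which is where transfer learning would typically enter, and the two-dimensional features and the labels "normal" and "fault" are made up for illustration.

```python
from math import dist


def build_prototypes(support_set):
    """support_set maps each label to a few feature vectors; return per-label means."""
    prototypes = {}
    for label, vectors in support_set.items():
        n = len(vectors)
        prototypes[label] = [sum(column) / n for column in zip(*vectors)]
    return prototypes


def classify(features, prototypes):
    """Assign the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: dist(features, prototypes[label]))


# Two labeled examples per class (hypothetical 2-D embeddings).
support = {
    "normal": [[0.0, 0.0], [0.0, 1.0]],
    "fault": [[5.0, 5.0], [6.0, 5.0]],
}
prototypes = build_prototypes(support)
print(classify([0.2, 0.3], prototypes))  # normal
print(classify([5.5, 4.9], prototypes))  # fault
```

Adding a new class only requires a few labeled examples and one more mean, with no gradient-based retraining on the device.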

Overall, future data-efficient AI methods will help edge devices make accurate predictions and decisions with little training data. Data-efficient techniques will also boost the overall performance of Edge AI systems while reducing the time and resources required for model training.

In-Memory Computing for Edge AI

In-memory computing is another technological trend that will impact the future of Edge AI. In its broadest sense, it means storing and processing data in a device's memory rather than relying on traditional storage systems (e.g., disk); in hardware, it goes further by performing computations directly inside the memory arrays, so that data does not have to shuttle back and forth to a separate processor. This approach can significantly reduce data access times and accelerate the computation of Edge AI systems, further boosting their real-time analytics and decision-making capabilities.

In the future, Edge AI applications will have to process growing volumes of data rapidly. Hence, in-memory computing will become increasingly important for optimizing the performance and efficiency of Edge AI. In particular, it will enable Edge AI devices to run complex algorithms and extract valuable insights from data at much higher speeds.

Digital In-Memory Computing: An Axelera AI Solution

We asked Evangelos Eleftheriou, CTO and Co-founder at Axelera AI, about his thoughts on in-memory computing. Here’s what he had to say:

The latency that arises from the increasing gap between the speed of memory and processing units, often referred to as the memory wall, is a critical performance issue for various AI tasks. Similarly, the energy required to move data is a significant challenge for computing systems, particularly those with strict power limitations due to cooling constraints, as well as for the wide range of battery-powered mobile devices. Therefore, new computing architectures that better integrate memory and processing are needed.

Near-memory computing, one potential solution, reduces the physical distance and time needed to access memory. It benefits from advances in die stacking and technologies like the Hybrid Memory Cube (HMC) and High Bandwidth Memory (HBM). In-memory computing (IMC) is a fundamentally different approach to data processing, in which specific computations are carried out directly within the memory by arranging it in crossbar arrays. IMC units can tackle latency and energy problems and also improve computational time complexity, thanks to the high level of parallelism achieved by the dense array of memory devices performing computations simultaneously. Crossbar arrays of such memory devices can be used to store a matrix and perform matrix-vector multiplications (MVMs) without intermediate movement of data. This efficiency is especially beneficial for training and inference in deep neural networks, where energy efficiency is critical. As 70-90% of deep learning operations are matrix-vector multiplications, applications with many AI components, such as computer vision and natural language processing, can benefit from this technology.
There are two classes of memory devices, traditional and emerging, and both can perform a range of in-memory logic and arithmetic operations, as well as MVM operations. SRAM, having the fastest read and write times and the highest endurance, enables high-performance in-memory computing for both inference and training applications. It follows the scaling of CMOS technology and requires standard materials and processes. Its main drawbacks are its volatility and larger cell size. Axelera AI introduced an SRAM-based digital in-memory computing (D-IMC) engine, which is immune to the noise and memory non-idealities that affect the precision of analog in-memory computing. D-IMC supports INT8 activations and weights with INT32 accumulation, maintaining full precision for various applications without retraining. The D-IMC engine of the matrix-vector multiplier is a handcrafted, full-custom design that interleaves the weight storage and the compute units in an extremely dense fashion, reducing energy consumption and maintaining high energy efficiency even at low utilization.
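The crossbar principle and INT8/INT32 arithmetic described above can be modeled in a few lines of software. In this minimal sketch, `array[i][j]` is the INT8 weight stored at row i, column j (so each column holds the weights for one output), activations drive the rows, and each column accumulates its dot product in an INT32 range. In an analog crossbar the column sums arise physically (Ohm's and Kirchhoff's laws); in a digital design they are computed with integer adders, and in hardware all columns work in parallel, whereas the loops here are sequential. This is an illustrative model with hypothetical names, not Axelera's implementation. It also shows why INT32 accumulation is used: a single INT8 product can reach 16,384 in magnitude, so an INT16 accumulator (maximum 32,767) overflows after just two worst-case products, while INT32 can absorb over 130,000 of them.

```python
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def check_int8(values):
    """Reject any operand that does not fit in a signed 8-bit integer."""
    for v in values:
        if not INT8_MIN <= v <= INT8_MAX:
            raise ValueError(f"{v} is outside the INT8 range")


def crossbar_matvec_int8(array, activations):
    """Column j returns sum_i array[i][j] * activations[i], accumulated in INT32 range."""
    check_int8(activations)
    for row in array:
        check_int8(row)
    n_cols = len(array[0])
    accumulators = [0] * n_cols  # one INT32 accumulator per column
    for i, x in enumerate(activations):  # activation x drives row i
        for j in range(n_cols):
            accumulators[j] += array[i][j] * x  # |product| <= 128 * 128 = 16,384
            if not INT32_MIN <= accumulators[j] <= INT32_MAX:
                raise OverflowError("INT32 accumulator overflow")
    return accumulators


print(crossbar_matvec_int8([[127, 1], [-128, 2]], [2, 3]))  # [-130, 8]
```

Three worst-case products (-128 x -128) already sum to 49,152, beyond INT16 but comfortably inside INT32.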

Distributed Learning Paradigms

Earlier parts of the report have outlined Edge AI approaches based on decentralized learning paradigms such as federated learning. Federated learning enables edge devices to collaborate in training a ‘global’ shared machine learning model from ‘local’ models without exchanging raw data. In the coming years, federated learning will be a fundamental approach to preserving the privacy of sensitive information and reducing the need to transmit data to centralized cloud servers. It also enables edge devices to learn from a diverse range of data sources, which leads to more robust and accurate ‘global’ models. Most importantly, federated learning allows for continuous model updates and improvements, as devices can contribute updates learned from newly collected data to their federated network in near real time.
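The aggregation step at the heart of many federated learning systems is federated averaging (FedAvg): a coordinator averages the clients' model parameters, weighting each client by the number of local training samples it used. The sketch below is a minimal, framework-free illustration with flat parameter lists and hypothetical client values; real systems add secure communication, client sampling, and many training rounds.

```python
def federated_average(client_params, client_sizes):
    """Weighted average of client parameter vectors (FedAvg-style aggregation).

    client_params: list of equally long lists of floats, one per client.
    client_sizes: number of local training samples per client, used as weights.
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[k] * size for params, size in zip(client_params, client_sizes)) / total
        for k in range(n_params)
    ]


# Two hypothetical clients: only parameters are shared, never the raw data.
global_model = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_model)  # [2.5, 3.5]
```

The client that trained on three times as much data pulls the global model three times as hard, while the coordinator never sees any client's raw samples.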

However, federated learning is not the only decentralized approach to developing Edge AI systems. Swarm learning, for example, takes inspiration from the collective behavior of social organisms (e.g., birds, insects) to create decentralized and self-organizing AI systems. In Edge AI networks based on swarm learning, edge devices work collaboratively to solve complex problems and make decisions by sharing information and insights in a decentralized manner. Unlike federated learning, which typically relies on a central server to aggregate model updates, swarm-learning nodes (i.e., edge devices) exchange information in a fully decentralized way, without aggregating it in a centralized cloud. This approach enables Edge AI systems to adapt and evolve dynamically while improving their performance and efficiency in real time.
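Fully decentralized aggregation can be illustrated with gossip averaging, used here as a simple stand-in rather than the protocol of any particular swarm learning framework: in each round, every node replaces its parameters with the mean of its own and its neighbors' parameters, and the network converges to a shared model without any central server. This is a minimal sketch with hypothetical node values. The ring topology is chosen so every node has the same number of neighbors; with uneven neighbor counts, this simple rule can settle on a weighted average rather than the exact mean.

```python
def gossip_round(params, neighbors):
    """One synchronous round of gossip averaging.

    params: node -> list of floats; neighbors: node -> list of neighboring nodes.
    Every node replaces its parameters with the mean of its own and its neighbors'.
    """
    updated = {}
    for node, own in params.items():
        group = [own] + [params[peer] for peer in neighbors[node]]
        updated[node] = [sum(values) / len(group) for values in zip(*group)]
    return updated


# Four hypothetical edge devices in a ring; no central aggregator exists.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
params = {0: [0.0], 1: [3.0], 2: [6.0], 3: [9.0]}
for _ in range(50):
    params = gossip_round(params, ring)
print({node: round(p[0], 6) for node, p in params.items()})
```

After 50 rounds every node holds 4.5, the mean of the initial values, even though each node only ever talked to its two neighbors.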

In the future, such decentralized learning approaches will pave the way for more scalable, efficient, and privacy-preserving Edge AI solutions.

Heterogeneity and Scale-up Challenges

The future of Edge AI will include more scalable and heterogeneous systems. Specifically, as the number and types of Edge AI systems proliferate, heterogeneous environments will emerge in which different Edge AI systems (e.g., TinyML, federated learning, embedded machine learning) co-exist and interact in various deployment configurations. This will create additional challenges, as future Edge AI systems will need to be integrated and orchestrated in complex AI workflows that span both cloud and edge components.

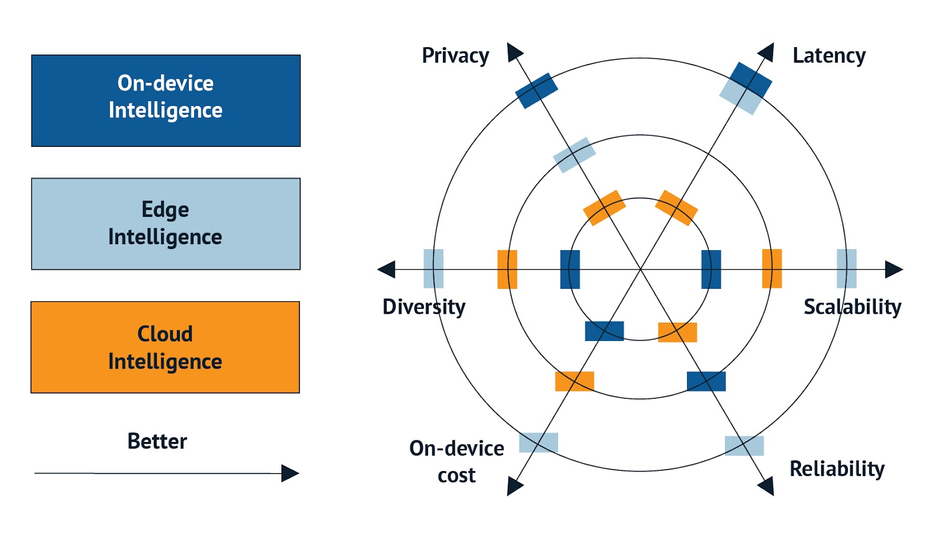

To this end, workflow management and AI orchestration middleware for cloud/edge environments must evolve to address the peculiarities of Edge AI systems. Future orchestrators must consider and balance the trade-offs involved in deploying and operating Edge AI systems, including trade-offs among performance, latency, data protection, scalability, and energy efficiency.
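As a rough illustration of the kind of trade-off an orchestrator must balance, the sketch below treats latency and data protection as hard constraints and weighs latency against energy as a soft objective when choosing where to run a model. Everything here is hypothetical: the `Target` fields, the weights, and the numbers are placeholders rather than measurements or the API of any real orchestration middleware, and scalability is left out for brevity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Target:
    """A hypothetical deployment target with placeholder characteristics."""
    name: str
    latency_ms: float
    energy_mj: float
    keeps_data_local: bool


def choose_target(targets: List[Target], max_latency_ms: float,
                  require_local_data: bool, w_latency: float = 1.0,
                  w_energy: float = 1.0) -> Optional[Target]:
    """Filter by hard constraints, then pick the lowest weighted cost."""
    feasible = [
        t for t in targets
        if t.latency_ms <= max_latency_ms
        and (t.keeps_data_local or not require_local_data)
    ]
    if not feasible:
        return None  # no placement satisfies the constraints
    # The weights also absorb the unit mismatch between ms and mJ.
    return min(feasible, key=lambda t: w_latency * t.latency_ms + w_energy * t.energy_mj)


edge = Target("edge", latency_ms=15, energy_mj=40, keeps_data_local=True)
cloud = Target("cloud", latency_ms=120, energy_mj=10, keeps_data_local=False)
print(choose_target([edge, cloud], max_latency_ms=50, require_local_data=True).name)  # edge
```

Relaxing the constraints and setting `w_latency=0` flips the choice to the cloud target, which shows how the same workload can land in different places depending on which trade-off the operator prioritizes.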

The heterogeneity of future AI systems will increase the complexity of developing end-to-end Edge AI systems. To alleviate this complexity, Edge AI vendors will likely offer more sophisticated tools for developing and deploying machine learning pipelines in cloud/edge environments. For example, they will offer novel AutoML (Automated Machine Learning) environments that reduce the time and effort needed to train, integrate, and deploy end-to-end applications.

Key Stakeholders and Their Roles

The future of Edge AI will be developed within an evolving ecosystem of stakeholders, who will interact closely to ensure that different developments fit together and reinforce each other. The heart of this ecosystem will comprise vendors of microsystems and edge devices and integrators of machine learning solutions for Edge AI systems. The traditional machine learning community will actively engage in the Edge AI ecosystem by advancing popular environments and tools to support future Edge AI developments.

Research and development organizations will also have a prominent role in the Edge AI ecosystem, as they will drive the development of the technological innovations that will revolutionize AI. Moreover, the open-source community is expected to have an instrumental and pioneering role in prototyping, testing, and standardizing future Edge AI middleware and tools.

“Edge AI is like building a superhighway; as this highway is built, the possibilities that it unlocks are tremendous, just like how the highway system spawned economies,” said Yanamadala. “The purpose of Edge AI is to unlock the potential of machine learning and AI applications. The end goal is to facilitate unleashing the power of ML combined with data. It might happen in stages, but that's the happy path.”

Report Conclusion

As we conclude this report on Edge AI, we stand at the threshold of a transformative era in Artificial Intelligence. The rise of Edge AI technology presents us with unparalleled opportunities to shape the future of intelligent devices and systems. With its ability to process data locally, reduce latency, enhance privacy, and enable real-time decision-making, Edge AI is poised to revolutionize various industries and propel us toward a more connected, efficient, and intelligent world.

The importance of Edge AI in the current and future technology landscape cannot be overstated. Its impact is felt across domains such as autonomous vehicles, healthcare, industrial automation, and IoT deployments. By bringing AI capabilities directly to edge devices, Edge AI empowers devices to operate autonomously, adapt to their surroundings, and make informed decisions in real time.

As technology continues to advance, the growth of edge computing infrastructure and the development of specialized AI hardware accelerators will unlock even greater potential for Edge AI applications. We can anticipate the deployment of more sophisticated AI models on edge devices, enabling complex deep learning tasks such as natural language processing and computer vision directly at the edge.

Furthermore, the proliferation of 5G networks will complement Edge AI by providing high-speed, low-latency connectivity, further enhancing the capabilities of edge devices. This convergence of Edge AI and 5G will facilitate the seamless integration of intelligent devices and systems into our daily lives, enabling a world where autonomous vehicles navigate with precision, smart cities optimize resources, and healthcare systems deliver personalized care. The journey of Edge AI has only just begun, and the path ahead is illuminated with the promise of transformative advancements.

The 2023 Edge AI Technology Report

The guide to understanding the state of the art in hardware & software in Edge AI.


Report Introduction

Chapter I: Overview of Industries and Application Use Cases

Chapter II: Advantages of Edge AI

Chapter III: Edge AI Platforms

Chapter IV: Hardware and Software Selection

Chapter V: Tiny ML

Chapter VI: Edge AI Algorithms

Chapter VII: Sensing Modalities

Chapter VIII: Case Studies

Chapter IX: Challenges of Edge AI

Chapter X: The Future of Edge AI and Conclusion
