2023 Edge AI Technology Report. Chapter IX: Challenges of Edge AI

The Challenges Ahead of Edge AI and How to Solve Them.


Edge AI, empowered by the recent advancements in Artificial Intelligence, is driving significant shifts in today's technology landscape. By enabling computation near the data source, Edge AI enhances responsiveness, boosts security and privacy, promotes scalability, enables distributed computing, and improves cost efficiency.

Wevolver has partnered with industry experts, researchers, and tech providers to create a detailed report on the current state of Edge AI. This document covers its technical aspects, applications, challenges, and future trends. It merges practical and technical insights from industry professionals, helping readers understand and navigate the evolving Edge AI landscape.

Understanding the challenges

Introducing Edge AI has brought numerous advantages to the modern world of computing. With the Edge AI philosophy, data processing can be performed locally on a device, eliminating the need for sending large amounts of data to a centralized server. This not only reduces data transfer requirements but also results in faster response times and increased reliability, as the device can continue to operate even if it loses connection with the network. Edge technology also provides enhanced data privacy, as sensitive data can be processed locally and does not need to be transmitted to a third-party server.

Despite the numerous potential benefits of Edge AI, there are also several challenges associated with its implementation and usability. In this chapter, we will examine these challenges in detail, explaining how they can impact the efficiency, performance, and overall success of Edge AI deployments.

Data Management Challenges

Data management poses a significant challenge in Edge AI, as edge devices often capture real-time data that can be incomplete or noisy, leading to inaccurate predictions and poor performance. More broadly, many of the challenges of computing at the edge stem from data movement, which affects power, efficiency, latency, and real-time decision-making. Data movement is driven by the volume and velocity of data, which must be orchestrated between the data center and the endpoint. Data volume has implications for security, power, computational requirements, and real-time decision-making at the application level. The concept of the edge aims to reduce data movement, reduce latency, and enable more real-time decision-making through distributed intelligence.

“The more decision-making an endpoint can make without consulting the data center, the more real-time it could be.”

- Chowdary Yanamadala, Senior Director, Technology Strategy, Arm’s Advanced Technology Group

Researchers have proposed various solutions to improve the quality of data collected by Edge AI devices. One promising approach is federated learning, which involves training AI models on data distributed across multiple edge devices, enhancing the quality and diversity of training data.
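To make the idea concrete, here is a minimal sketch of federated averaging (the FedAvg scheme) in plain Python. It assumes a toy one-dimensional linear model and in-memory lists standing in for each device's private data; all function names are hypothetical, and a real deployment would use an ML framework plus secure transport for the updates. What it illustrates is that only model weights, never raw samples, leave each device.

```python
from typing import List, Tuple

# A model is just (slope, intercept) for a 1-D linear regressor: y = w * x + b.
Weights = Tuple[float, float]
Sample = Tuple[float, float]  # (x, y)


def local_update(weights: Weights, data: List[Sample],
                 lr: float = 0.01, epochs: int = 5) -> Weights:
    """Run a few epochs of SGD on one device's private data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y   # prediction error on this sample
            w -= lr * err * x       # gradient of 0.5 * err**2 w.r.t. w
            b -= lr * err           # gradient of 0.5 * err**2 w.r.t. b
    return w, b


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Combine device models, weighting each by its number of local samples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return w, b


def train_round(global_weights: Weights, devices: List[List[Sample]]) -> Weights:
    """One round: every device trains locally; only weights are sent back."""
    updates = [local_update(global_weights, data) for data in devices]
    return federated_average(updates, [len(data) for data in devices])


if __name__ == "__main__":
    # Three hypothetical devices, each holding private samples of y = 2x + 1.
    devices = [[(float(x), 2.0 * x + 1.0) for x in range(i, i + 5)] for i in (0, 2, 4)]
    weights: Weights = (0.0, 0.0)
    for _ in range(300):
        weights = train_round(weights, devices)
    print(f"w = {weights[0]:.3f}, b = {weights[1]:.3f}")
```

Weighting by sample count keeps devices with little data from pulling the shared model as hard as devices with a lot; production systems also typically sample only a subset of devices in each round.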

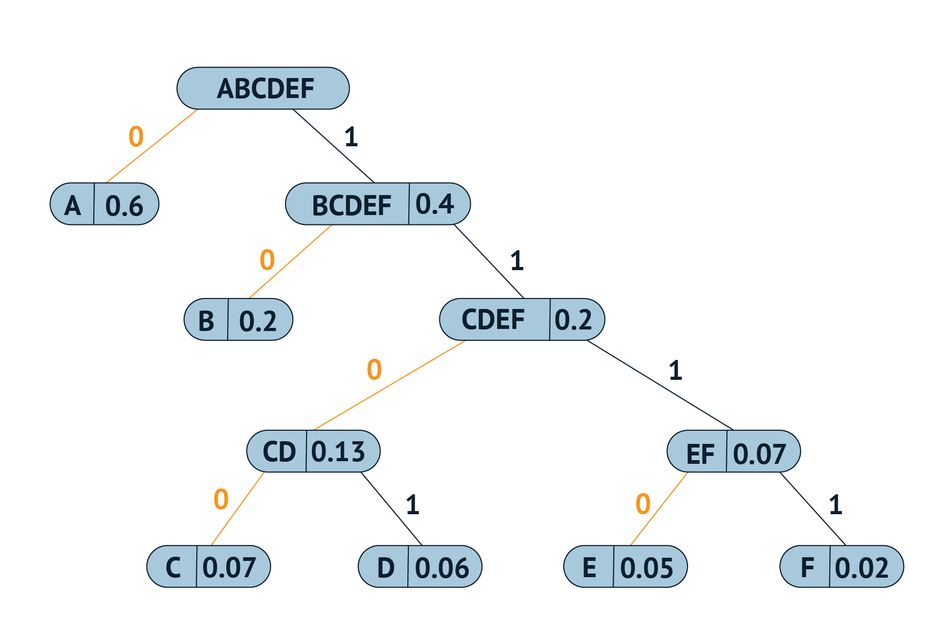

Another data management issue is the limited storage capacity of edge devices. Since Edge AI devices typically have limited memory storage, storing larger quantities of data produced by edge applications can be challenging. To overcome this issue, data compression techniques such as Huffman coding and Lempel-Ziv-Welch (LZW) compression can be employed to reduce the size of data stored on edge devices, optimizing memory usage.
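The following is an illustrative LZW encoder and decoder in plain Python, a sketch rather than a production codec. It emits integer codes and lets the dictionary grow without bound; a real implementation would pack the codes into a limited bit width (GIF, for example, caps codes at 12 bits) and reset the dictionary when it fills.

```python
from typing import Dict, List


def lzw_compress(data: bytes) -> List[int]:
    """Encode bytes as a list of LZW dictionary codes."""
    dictionary: Dict[bytes, int] = {bytes([i]): i for i in range(256)}
    next_code = 256
    current = b""
    codes: List[int] = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in dictionary:
            current = candidate                  # keep extending the match
        else:
            codes.append(dictionary[current])
            dictionary[candidate] = next_code    # learn the new phrase
            next_code += 1
            current = bytes([byte])
    if current:
        codes.append(dictionary[current])
    return codes


def lzw_decompress(codes: List[int]) -> bytes:
    """Invert lzw_compress by rebuilding the same dictionary on the fly."""
    if not codes:
        return b""
    dictionary: Dict[int, bytes] = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = dictionary[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == next_code:
            entry = previous + previous[:1]      # phrase defined by this very step
        else:
            raise ValueError(f"invalid LZW code: {code}")
        out.append(entry)
        dictionary[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(out)
```

In practice, a library codec such as zlib (DEFLATE, which combines LZ77 with Huffman coding) is usually a more practical choice than hand-rolled LZW.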

When discussing data management practices, data governance is another crucial aspect that is often overlooked in the context of Edge AI devices. As AI applications become more prevalent, it becomes increasingly important to ensure that data generated by edge devices are appropriately managed and aligned with regulations and policies. However, governing data on edge devices can be challenging, particularly in complex enterprise environments.

To overcome this obstacle, blockchain platforms such as Hyperledger Fabric and Ethereum have emerged as potential foundations for data governance frameworks. They provide a distributed, decentralized, and tamper-evident record for managing and tracking data, which makes data management more secure and transparent. By using blockchain technology, data governance on edge devices can become more secure and reliable, helping organizations demonstrate compliance with the required regulations.
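The property that makes blockchain attractive for governance is tamper evidence: each record commits to the hash of the one before it. The sketch below shows only that hash-chaining idea, as a local append-only audit log in plain Python. It is not the Hyperledger Fabric or Ethereum API and omits distribution and consensus entirely; the record fields used with it are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained log of data-governance events."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(record: Dict[str, Any], prev_hash: str) -> str:
        # Canonical JSON so the same record always hashes the same way.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: Dict[str, Any]) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._digest(record, prev_hash)
        self.entries.append({"record": dict(record), "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every link; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

A blockchain adds replication of this chain across many parties, so that no single device or operator can quietly rewrite it.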

Integration Challenges

Integrating Edge AI with other systems can be challenging for many reasons, chief among them compatibility. In general, incompatibility can arise from differences in hardware, software, and communication protocols. Each edge device can have its own specifications, architecture, and interfaces, which makes integration with other devices and systems difficult rather than routine. At the same time, AI models may require specific software libraries, frameworks, and programming languages that are not compatible with other systems.

To overcome these challenges, the open-source community has contributed integration frameworks, proposed new industry standards, and developed efficient communication protocols to solve interoperability issues and promote standardization. For example, the Open Neural Network Exchange (ONNX) is an open-source format for representing machine learning models, including deep learning models, so that they can be used across different frameworks and platforms, promoting interoperability and reducing compatibility issues.

Another interesting example is how Synaptics tackles integration challenges. The company ties wireless connectivity and the edge together by providing chips for several IoT wireless technologies, such as Wi-Fi, Bluetooth, IEEE 802.15.4 Thread/Zigbee, and Matter. It also provides ultra-low energy (ULE) technology, which operates in an interference-free band (1.9 GHz) and supports secure, wide-coverage wireless communications, including two-way voice and data.

Patrick Mannion, Director of External PR and Technical Communications at Synaptics, stated that “When meta or other sensor data need to be transported to an aggregation point for further processing, it’s critical that the appropriate wireless technology be used to ensure a robust connection with the lowest possible latency and power consumption.”

Synaptics is also working with laptop manufacturers on the “Integration of visual sensing based on ultra-low-power machine learning (ML) algorithms,” as Mannion explained. “These algorithms analyze user engagement and detect on-lookers to save power and enhance security on the laptop itself.” He added, “The algorithms are being optimized for efficiency and compatibility with any system hardware. The efficiency of Synaptics’ own Edge AI visual sensing hardware, which began with Katana, is also improving rapidly to adapt to upcoming applications that will incorporate more sensing capabilities as we shift to more advanced context-aware computing at the edge.”

Security Challenges

Beyond compatibility, integrating Edge AI with other systems can also introduce significant security risks. Edge devices often collect and process sensitive data, including personal health records, financial information, and biometric data, which makes them vulnerable to security threats such as data breaches, cyber-attacks, and privacy violations.

“The biggest challenge for security is consistency, which also applies to software in general.”

- David Maidment, Senior Director, Secure Device Ecosystem, Arm.

Therefore, security measures should be considered from the initial design phase of Edge AI systems. Techniques such as secure boot and a hardware root of trust (RoT) help ensure the integrity of edge devices. Similarly, secure software development practices such as threat modeling and code reviews can prevent common vulnerabilities.

“Standards such as Arm SystemReady play a role in addressing this challenge by providing a standardized OS installation and boot for edge devices,” Maidment said. “SystemReady includes secure boot and secure capsule updates to ensure consistency in the way the operating system lands on the platform and remains secure. This is important for reducing fragmentation and lowering the cost of ownership over the device's lifetime.”
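At its core, secure boot is a check like the one below: before running an image, the boot stage verifies that the image was authorized and that it is not an older, rollback version. This is a simplified sketch under stated assumptions. It uses an HMAC with a hypothetical device key as a stand-in for the asymmetric signature verification (with a public key anchored in the hardware root of trust) that real secure boot chains use, because Python's standard library has no public-key signing.

```python
import hashlib
import hmac

# Hypothetical key standing in for a secret provisioned in the hardware root of trust.
DEVICE_ROOT_KEY = b"hypothetical-root-of-trust-key"


def _measure(image: bytes, version: int) -> bytes:
    """Hash the image together with its version so the version cannot be swapped."""
    return hashlib.sha256(version.to_bytes(4, "big") + image).digest()


def sign_image(image: bytes, version: int, key: bytes = DEVICE_ROOT_KEY) -> bytes:
    """Done at build time by the vendor (here an HMAC instead of a real signature)."""
    return hmac.new(key, _measure(image, version), hashlib.sha256).digest()


def verify_boot_image(image: bytes, version: int, tag: bytes,
                      min_version: int, key: bytes = DEVICE_ROOT_KEY) -> bool:
    """Boot-time check: authentic image and no rollback to an older version."""
    expected = sign_image(image, version, key)
    if not hmac.compare_digest(expected, tag):   # constant-time comparison
        return False  # image or version was modified, or signed with another key
    return version >= min_version  # anti-rollback counter check
```

Binding the version into the authenticated data matters: otherwise an attacker could replay a genuine but vulnerable old image and simply claim a newer version number.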

Furthermore, integration with cloud-based services may introduce additional security risks, which can be mitigated with techniques such as data encryption, secure authentication, and secure communication protocols. On the AI model side, techniques such as federated learning, differential privacy, and homomorphic encryption have been used to train AI models on sensitive data without compromising privacy. Moreover, anomaly detection techniques, such as intrusion detection systems, can detect and mitigate attacks targeting Edge AI systems, strengthening the security of the system as a whole.
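Differential privacy can be illustrated with the Laplace mechanism applied to a simple aggregate. The sketch below releases the mean of bounded sensor readings with noise scaled to the query's sensitivity divided by epsilon, under the assumptions stated in its docstring. It uses Python's `random` module for readability; that module is not cryptographically secure, and production implementations also need careful handling of floating-point effects.

```python
import random
from typing import Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values: Sequence[float], lower: float, upper: float,
                 epsilon: float, rng: Optional[random.Random] = None) -> float:
    """Epsilon-DP estimate of the mean of values clipped to [lower, upper].

    Assumes the number of records is public and neighbouring datasets differ
    by replacing one record, so the sensitivity of the mean is (upper - lower) / n.
    """
    if epsilon <= 0 or not values:
        raise ValueError("epsilon must be positive and values non-empty")
    rng = rng or random.Random()
    clipped = [min(max(v, lower), upper) for v in values]  # bound each record's influence
    n = len(clipped)
    sensitivity = (upper - lower) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)
```

Smaller epsilon means stronger privacy and more noise; with many records, the noise on an aggregate like a mean stays small.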

“The move to Edge AI processing focuses on reducing or eliminating raw data sent to the cloud for processing. This enhances the security of the system or service,” explains Nandan Nayampally. An on-device learning capability, like the one offered by BrainChip, not only allows for customization and personalization untethered from the cloud, but it also “stores this as weights rather than data, further enhancing privacy and security, while still allowing this learning to be shared by authorized users when needed.”
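BrainChip's on-device learning runs on its own hardware and is not reproduced here. As a generic illustration of the "store weights, not data" idea, the hypothetical class below learns class prototypes incrementally: each new sample updates a running mean and is then discarded, so only the learned centroids persist and can be shared.

```python
from typing import Dict, List, Sequence


class OnDeviceCentroidLearner:
    """Hypothetical incremental learner that keeps class centroids, not samples."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}

    def learn(self, features: Sequence[float], label: str) -> None:
        """Fold one labelled sample into its class centroid (running mean)."""
        if label not in self.centroids:
            self.centroids[label] = list(features)
            self.counts[label] = 1
            return
        self.counts[label] += 1
        n = self.counts[label]
        centroid = self.centroids[label]
        for i, x in enumerate(features):
            centroid[i] += (x - centroid[i]) / n
        # The raw sample is not stored anywhere after this point.

    def predict(self, features: Sequence[float]) -> str:
        """Return the label of the nearest centroid (squared Euclidean distance)."""
        def dist2(label: str) -> float:
            return sum((c - x) ** 2 for c, x in zip(self.centroids[label], features))
        return min(self.centroids, key=dist2)

    def export_weights(self) -> Dict[str, List[float]]:
        """Copy of the learned parameters, e.g. for sharing with authorized users."""
        return {label: list(c) for label, c in self.centroids.items()}
```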

Latency Challenges

Latency can significantly affect the performance of Edge AI systems. These systems face three types of latency challenges: input latency, processing latency, and output latency.

Input latency is the delay between the time a data sample is captured by an edge device and the time it is processed by an AI model. It can result from factors such as slow sensor response time, data transmission delay, and data pre-processing overhead. Input latency can impact the accuracy and timeliness of AI predictions, leading to missed opportunities for real-time decision-making.

Processing latency, on the other hand, refers to the delay between the time an AI model receives a data sample and the time it generates a prediction. Factors such as the complexity of the AI model, the size of the input data, and the processing power of the edge device can cause processing latency. It can affect the real-time responsiveness of AI predictions and may cause delays in critical applications such as medical diagnosis and autonomous driving.

Output latency is the delay between the time an AI prediction is generated and the time it is transmitted to the user or downstream system. Various factors, such as network congestion, communication protocol overhead, and device-to-device synchronization, can cause it. Output latency can impact the usability and effectiveness of AI predictions and may cause delays in decision-making and action-taking.
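Because the three latency components have different causes, it helps to measure them separately before optimizing. The harness below is a minimal sketch: `capture`, `infer`, and `emit` are hypothetical callables standing in for the sensor read plus pre-processing, the model, and the hand-off to the user or a downstream system.

```python
import time
from typing import Any, Callable, Dict


def timed_pipeline(capture: Callable[[], Any],
                   infer: Callable[[Any], Any],
                   emit: Callable[[Any], None]) -> Dict[str, float]:
    """Run capture -> infer -> emit once and report per-stage latency in ms."""
    t0 = time.perf_counter()
    sample = capture()           # sensor read + pre-processing (input latency)
    t1 = time.perf_counter()
    prediction = infer(sample)   # model execution (processing latency)
    t2 = time.perf_counter()
    emit(prediction)             # delivery downstream (output latency)
    t3 = time.perf_counter()
    return {
        "input_ms": (t1 - t0) * 1e3,
        "processing_ms": (t2 - t1) * 1e3,
        "output_ms": (t3 - t2) * 1e3,
        "total_ms": (t3 - t0) * 1e3,
    }
```

Running this over many samples and looking at percentiles, not just averages, shows which stage actually dominates the tail latency that real-time applications care about.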

To address latency challenges, techniques such as edge caching, edge computing, and federated learning are widely used in Edge AI systems.

Edge caching helps reduce input latency by storing frequently accessed data closer to the network's edge. It can hold pre-trained AI models, reference data, and other relevant data, improving the real-time responsiveness of AI predictions.

Edge computing reduces processing latency by moving AI processing from the cloud to the edge of the network. Deploying lightweight AI models, such as decision trees and rule-based systems, on edge devices with limited processing power and storage capacity eliminates the need to transmit data for processing, again improving the real-time responsiveness of AI predictions.

Another notable approach is federated learning, which allows AI models to be trained across distributed edge devices without transferring raw data to the cloud. This reduces the risk of data privacy violations and lowers output latency. By training AI models on diverse data sources such as mobile devices and IoT sensors, federated learning can also improve the accuracy and generalization of AI predictions.
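Edge caching can be sketched as a small least-recently-used (LRU) cache in front of a slower fetch from the cloud. The `fetch` callable and the keys are hypothetical; eviction here is by entry count, whereas a real device would more likely budget by bytes.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class EdgeCache:
    """Least-recently-used cache sitting in front of a slow remote fetch."""

    def __init__(self, capacity: int, fetch: Callable[[Hashable], Any]) -> None:
        self.capacity = capacity
        self.fetch = fetch                      # e.g. download from the cloud (hypothetical)
        self._store: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._store:
            self._store.move_to_end(key)        # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self.fetch(key)                 # slow path: go to the network
        self._store[key] = value
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)     # evict the least recently used entry
        return value
```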

Scalability Challenges

Edge AI systems can face significant scalability challenges that can affect their performance, reliability, and flexibility. Scalability refers to the ability of a system to handle increasing amounts of data, users, or devices without compromising efficiency. These challenges can be classified into three categories: computational scalability, data scalability, and system scalability.

Computational scalability is the ability of an Edge AI system to process increasing amounts of data without exceeding the processing power and storage capacity of edge devices. Edge devices' limited processing power, memory, and storage can restrict the size and complexity of AI models and hinder their accuracy and responsiveness.

Data scalability, on the other hand, is the capability of an Edge AI system to handle increasing amounts of data without compromising performance. Processing large amounts of data in real-time on edge devices can be difficult due to their limited data transfer capacity and unreliable connectivity, which may restrict the quantity and quality of data that can be transmitted and processed.

System scalability refers to the capacity of an Edge AI system to manage growing numbers of edge devices and users efficiently and appropriately. This can be difficult because Edge AI systems require distributed processing and coordination, which can introduce latency, overhead, and complexity.

To address these challenges, techniques such as load balancing, parallel processing, and distributed computing can be used to optimize system scalability and enhance the overall performance and reliability of Edge AI systems. By leveraging these techniques, Edge AI systems can achieve the scalability required to handle the increasing volume and diversity of data generated by edge devices, users, and applications.
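As one example of load balancing, the sketch below greedily dispatches each task to the edge device with the least accumulated work, using a heap. The device names and task costs are hypothetical, and cost is assumed to be known in advance (for example, an estimated inference time in milliseconds).

```python
import heapq
from typing import Dict, List, Sequence, Tuple


def assign_least_loaded(tasks: Sequence[Tuple[str, float]],
                        devices: Sequence[str]) -> Dict[str, List[str]]:
    """Send each (task_id, cost) to the device with the smallest accumulated load."""
    heap = [(0.0, name) for name in devices]   # (current load, device name)
    heapq.heapify(heap)
    assignment: Dict[str, List[str]] = {name: [] for name in devices}
    for task_id, cost in tasks:
        load, name = heapq.heappop(heap)           # least-loaded device right now
        assignment[name].append(task_id)
        heapq.heappush(heap, (load + cost, name))  # record its new load
    return assignment
```

Sorting tasks by decreasing cost before dispatching (the longest-processing-time-first rule) usually gives a more even split.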

Several potential solutions have been introduced to address scalability issues in Edge AI systems, including edge orchestration, edge-to-cloud coordination, and network slicing. Edge orchestration involves managing the deployment and coordination of AI models on multiple edge devices, enabling distributed processing and load balancing. This approach optimizes the allocation of processing resources, such as CPU and GPU, and minimizes communication overhead between edge devices while working within their limited processing power, memory, and storage.
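Part of edge orchestration is deciding which model runs where. The hypothetical sketch below places models onto devices by memory alone: the largest models go first, each onto the device with the most free memory that can still hold it. Real orchestrators also weigh compute, accelerator support, latency, and power, so this illustrates the placement step only.

```python
from typing import Dict


def place_models(models: Dict[str, int], devices: Dict[str, int]) -> Dict[str, str]:
    """Map each model (name -> MB needed) to a device (name -> MB free)."""
    free = dict(devices)  # copy so the caller's inventory is not modified
    placement: Dict[str, str] = {}
    # Largest models first: they are the hardest to fit.
    for model, need in sorted(models.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [name for name, mem in free.items() if mem >= need]
        if not candidates:
            raise RuntimeError(f"no device has {need} MB free for {model!r}")
        target = max(candidates, key=lambda name: free[name])  # most headroom
        free[target] -= need
        placement[model] = target
    return placement
```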

Another solution is edge-to-cloud coordination, which integrates Edge AI systems with cloud-based AI systems, enabling hybrid processing and seamless data exchange. It allows heavy processing tasks, such as AI model training and validation, to be offloaded to the cloud, and it enables data from multiple edge devices to be aggregated and analyzed. Finally, network slicing can address scalability issues by partitioning a wireless network into multiple virtual networks, each with its own set of resources and quality-of-service guarantees. This approach can allocate network resources according to the specific requirements and priorities of Edge AI systems, such as low latency, high bandwidth, and high reliability, mitigating the effects of limited data transfer capacity and unreliable connectivity.
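Edge-to-cloud coordination often comes down to an offloading decision. Under a simplified, latency-only model (an assumption: energy, cost, queuing, and the time to download the result are ignored), offloading pays off when network round-trip time plus upload time plus cloud inference time is less than local inference time. The function and numbers below are hypothetical.

```python
from typing import Tuple


def should_offload(input_bytes: int, local_ms: float, cloud_ms: float,
                   uplink_mbps: float, rtt_ms: float) -> Tuple[bool, float]:
    """Return (offload?, estimated remote latency in ms) under a latency-only model."""
    upload_ms = input_bytes * 8 / (uplink_mbps * 1e6) * 1e3   # bits / (bits per s), in ms
    remote_ms = rtt_ms + upload_ms + cloud_ms
    return remote_ms < local_ms, remote_ms


# Hypothetical case: a 200 kB frame over a 10 Mbit/s uplink with 40 ms RTT.
offload, remote = should_offload(200_000, local_ms=120.0, cloud_ms=15.0,
                                 uplink_mbps=10.0, rtt_ms=40.0)
print(offload, round(remote, 1))  # prints: False 215.0
```

Here the 160 ms upload alone outweighs the faster cloud model, so the frame stays on the device; a heavier local model or a faster uplink would flip the decision.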

Beyond these challenges, one more deserves mention: AI modeling. As explained by Shay Kamin Braun of Synaptics, “When you design an edge device model before deployment, you try to test it in as many environments as possible so the model does what it’s supposed to do without mistakes.” For example, a device that is supposed to detect a person may fail in two distinct ways: (1) not detecting a person who is there, or (2) mistaking something else for a person.
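The two failure modes in this example map onto standard detection metrics: missing a person is a false negative, which lowers recall, and mistaking something else for a person is a false positive, which lowers precision. The sketch below computes both per test environment and flags environments that miss hypothetical 95% targets; the counts used with it are made up for illustration.

```python
from typing import Dict, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision penalizes false alarms; recall penalizes missed detections."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def environments_needing_work(counts: Dict[str, Tuple[int, int, int]],
                              min_precision: float = 0.95,
                              min_recall: float = 0.95) -> Dict[str, Tuple[float, float]]:
    """Return the environments whose (tp, fp, fn) counts miss either target."""
    failing = {}
    for env, (tp, fp, fn) in counts.items():
        p, r = precision_recall(tp, fp, fn)
        if p < min_precision or r < min_recall:
            failing[env] = (p, r)
    return failing
```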

Scaling testing before deployment is difficult, especially for an edge model that runs on many devices and receives inputs from multiple sensors, so some environments inevitably remain untested. Continuous learning after deployment is not easy either, particularly on battery-powered devices. That is why the companies expected to win are those that can scale their testing and mature their models before deployment.

Cost Challenges

Edge AI systems also face cost challenges that can constrain their adoption and impact. First on the list are hardware costs: the cost of specialized edge hardware, sensors, and actuators. Edge devices often require specific CPU and GPU components that accelerate AI workloads and improve performance while keeping the device small.

Software costs, in turn, cover developing, deploying, and maintaining the software applications and algorithms required to support Edge AI applications. Developing AI applications and algorithms can be time-consuming and requires specialized skills and knowledge, which increases development costs. Furthermore, deploying and maintaining software on Edge AI devices can be challenging because of their limited processing power and storage capacity, which can increase operational costs.

Edge AI systems may also face several costs that can impact their adoption and effectiveness beyond hardware, software, and operational costs. For example, data storage and management costs are a significant concern, with edge devices generating and storing vast amounts of data that can quickly fill up limited storage capacity. Also, reliable and high-speed network connectivity is essential for edge devices, but setting up and maintaining robust network infrastructure can be expensive, particularly in remote or rural areas.

“One of the hidden costs in edge computing is the cost of computing.”

- Chowdary Yanamadala, Senior Director, Technology Strategy, Arm’s Advanced Technology Group

Computing costs are a critical challenge that can impact the decision-making process for businesses. “By being power efficient, we can indirectly contribute to lowering the cost of operations,” Yanamadala said. “If our architecture is 30% more power-efficient than others but – for argument’s sake – costs 10% more to integrate, we can argue that the additional upfront investment is worth it because it leads to continued benefits of lower cost of operations. This trade-off between capital expenditure and operating expenditure is a common decision-making process for enterprises when moving to the cloud or data center side of things.”
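Yanamadala's example can be turned into a quick break-even calculation. Assume (our interpretation) that “30% more power-efficient” means 30% lower yearly operating cost and that integration costs 10% more. With a baseline integration cost C and yearly operating cost E, the extra 0.1C is recovered after t = 0.1C / (0.3E) years. The figures below are hypothetical, and the model ignores discounting.

```python
def break_even_years(base_capex: float, base_opex_per_year: float,
                     capex_premium: float = 0.10, opex_saving: float = 0.30) -> float:
    """Years until a pricier-to-integrate but cheaper-to-run option pays back.

    Assumption: "30% more power-efficient" is read as 30% lower yearly opex.
    """
    extra_capex = base_capex * capex_premium
    yearly_saving = base_opex_per_year * opex_saving
    if yearly_saving <= 0:
        return float("inf")  # never pays back
    return extra_capex / yearly_saving


def total_cost(capex: float, opex_per_year: float, years: float) -> float:
    """Simple undiscounted total cost of ownership."""
    return capex + opex_per_year * years


# Hypothetical figures: 100k integration cost, 50k per year to operate.
print(round(break_even_years(100_000, 50_000), 2))  # prints 0.67
```

Over a multi-year device lifetime, the operating-cost saving dominates the one-time premium, which is the capital-versus-operating-expenditure argument made in the quote.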

Data privacy and security add further costs, since edge devices collect sensitive data that must be protected from breaches. Furthermore, Edge AI applications often require specialized network infrastructure, such as high-speed, low-latency networks that can support real-time processing and analysis, adding to infrastructure costs. To reduce these costs, organizations can leverage existing network infrastructure, implement edge-to-cloud coordination, or adopt open-source software.

Power Consumption Challenges

Edge AI systems can also face challenges related to high energy consumption, which can limit their adoption and impact, especially in remote and harsh environments such as industrial plants, agricultural fields, and highways. High energy requirements can be attributed to the need for powerful computing resources to process and analyze data in real-time. Edge devices often require high-performance processors, memory, and storage devices that consume significant amounts of energy, making it challenging to power such systems in energy-constrained environments. Additionally, always-on connectivity and data transfer between Edge devices and the cloud can further increase energy consumption.

To address these challenges, one solution is to use energy-efficient hardware, such as low-power processors and memory, to reduce energy consumption without compromising performance. This can be achieved using edge computing hardware designed explicitly for Edge AI applications. For example, some edge computing devices are equipped with Arm-based processors optimized for low power consumption while delivering high performance. Another solution is to optimize the software algorithms used in Edge AI systems. Techniques such as reducing unnecessary data transmissions and making predictive models more efficient can reduce the overall workload, resulting in lower energy consumption.
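One common way to cut unnecessary transmissions is send-on-delta (deadband) reporting: a reading is transmitted only when it differs from the last transmitted value by more than a threshold. The sketch below is a minimal illustration with a hypothetical threshold; real deployments usually add a periodic heartbeat so the receiver can tell a quiet sensor from a dead one.

```python
from typing import Iterable, List


def send_on_delta(readings: Iterable[float], threshold: float) -> List[float]:
    """Return the readings that would be transmitted under deadband reporting."""
    sent: List[float] = []
    last_sent = None
    for value in readings:
        # Always send the first reading, then only meaningful changes.
        if last_sent is None or abs(value - last_sent) > threshold:
            sent.append(value)
            last_sent = value
    return sent
```

Since the radio is often one of the largest power consumers on a battery-powered node, skipping redundant transmissions can save energy.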

In addition, combining renewable energy sources such as solar and wind power with energy-efficient hardware can enable edge devices to operate autonomously, reducing their reliance on grid power and their carbon footprint. For instance, edge devices can be equipped with solar panels or small wind turbines to generate electricity locally. Battery storage can hold the excess energy generated during peak production, allowing devices to keep operating when little energy is available. By leveraging renewable energy sources, Edge AI systems can operate sustainably and reduce their environmental impact.
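Sizing such a system starts with a daily energy balance. The sketch below uses hypothetical figures and a single assumed end-to-end efficiency factor for charging and conversion losses; it checks whether a solar panel covers a device's daily consumption and how many days the battery alone can carry the load.

```python
from typing import Tuple


def solar_energy_budget(load_w: float, panel_w: float, sun_hours: float,
                        battery_wh: float, efficiency: float = 0.8) -> Tuple[float, float]:
    """Return (daily surplus in Wh, days of battery-only autonomy)."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    daily_load_wh = load_w * 24.0                          # always-on device
    daily_harvest_wh = panel_w * sun_hours * efficiency    # usable solar energy
    surplus_wh = daily_harvest_wh - daily_load_wh          # > 0 means sustainable
    autonomy_days = battery_wh / daily_load_wh             # reserve for cloudy days
    return surplus_wh, autonomy_days


# Hypothetical device: 2 W average draw, 20 W panel, 4 peak-sun hours, 120 Wh battery.
surplus, days = solar_energy_budget(2.0, 20.0, 4.0, 120.0)
print(f"surplus {surplus:.1f} Wh/day, autonomy {days:.1f} days")
```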

Potential Solutions

Implementing artificial intelligence at the edge presents an intricate set of challenges, especially when operating within the constraints of an edge device such as a smartphone. Here, the AI accelerator's footprint is necessarily small, and its energy consumption must be meticulously managed. These strict requirements, however, inevitably lead to a trade-off, as the limited physical space and energy restrict storage and computational capacities.

To alleviate such concerns, chip designers may move to smaller process nodes (e.g., from 12nm to 5nm) and modify the chip’s architecture (via 3D stacking, for example). While these hardware-oriented approaches ease physical constraints, they often fall short of the inherent demands of Edge AI applications. In this realm, massive computing, small form factors, energy efficiency, and cost-effectiveness must go hand in hand, a challenging balance to strike.

Confronting these challenges, Edge AI demands an innovative approach: co-designing hardware and software. This paradigm includes compression techniques such as quantization and network pruning. By compressing the data (the model's weights and activations), the chip requires less storage and memory and can rely on simpler computational units, such as those operating on fewer bits, to execute the AI algorithms. This reduction in memory and computational complexity in turn improves energy efficiency, a classic case of killing multiple birds with one stone.
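Both compression techniques can be shown on a flat list of weights. The sketch below, in plain Python for readability, applies symmetric per-tensor int8 quantization (each weight stored as an 8-bit integer plus one shared scale, a quarter of the storage of float32) and magnitude pruning (zeroing the smallest-magnitude weights). It is an illustration, not the scheme used by any particular chip or toolchain.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]
```

Rounding keeps each dequantized weight within half a quantization step of the original, which is where the accuracy cost discussed next comes from.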

However, data compression is not without its own drawbacks. A significant concern is that it may precipitate an undesirable loss in the AI model's accuracy. To circumvent this, algorithm developers must carefully identify which segments of the AI network can withstand compression and to what extent without leading to significant degradation of accuracy.
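A simple way to find which parts of a network tolerate compression is a per-layer sensitivity sweep: try progressively more aggressive settings for each layer and keep the most aggressive one that stays within a tolerance. In the sketch below, the relative reconstruction error of the quantized weights serves as a cheap proxy; that is an assumption, since in practice the check would be task accuracy on validation data. Layer names and weights are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


def fake_quantize(weights: Sequence[float], bits: int) -> List[float]:
    """Quantize symmetrically to `bits` bits and map back to floats."""
    if bits < 2:
        raise ValueError("need at least 2 bits for symmetric quantization")
    levels = 2 ** (bits - 1) - 1          # e.g. 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / levels
    return [scale * max(-levels, min(levels, round(w / scale))) for w in weights]


def relative_error(original: Sequence[float], approx: Sequence[float]) -> float:
    """Root of (squared error / squared norm of the original weights)."""
    num = sum((a - b) ** 2 for a, b in zip(original, approx))
    den = sum(a * a for a in original) or 1.0
    return math.sqrt(num / den)


def choose_bit_widths(layers: Dict[str, Sequence[float]], tolerance: float,
                      candidates: Sequence[int] = (2, 4, 8)) -> Dict[str, Optional[int]]:
    """Pick the fewest bits per layer whose error stays within `tolerance`.

    None means no candidate was good enough, so the layer stays in full precision.
    """
    plan: Dict[str, Optional[int]] = {}
    for name, weights in layers.items():
        plan[name] = next(
            (b for b in sorted(candidates)
             if relative_error(weights, fake_quantize(weights, b)) <= tolerance),
            None,
        )
    return plan
```

Layers whose weights have similar magnitudes tend to survive aggressive quantization, while layers mixing large and tiny weights need more bits, which is the kind of information hardware and algorithm developers need to share.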

Moreover, to ensure widespread usability, the compression algorithm should be optimized for speedy execution and compatibility with a broad range of networks. Concurrently, hardware developers should focus on devising ways to maximize the efficiency of compressed data usage while communicating the potential and limitations of their implementations to the algorithm developers.

In conclusion, the successful deployment of AI at the edge requires a harmonious blend of hardware and software co-design, informed by a deep understanding of the constraints and potentials of both domains.

“Balancing the quest for miniaturization, energy efficiency, cost-effectiveness, and model accuracy is no simple task. Still, with careful coordination and communication, we can pave the way for the next wave of AI breakthroughs at the edge.”

- Dr. Bram Verhoef, Head of Machine Learning at Axelera AI.

Gain a broader perspective on Edge AI by revisiting our comprehensive guide.

The 2023 Edge AI Technology Report

The guide to understanding the state of the art in hardware & software in Edge AI.


Report Introduction

Chapter I: Overview of Industries and Application Use Cases

Chapter II: Advantages of Edge AI

Chapter III: Edge AI Platforms

Chapter IV: Hardware and Software Selection

Chapter V: Tiny ML

Chapter VI: Edge AI Algorithms

Chapter VII: Sensing Modalities

Chapter VIII: Case Studies

Chapter IX: Challenges of Edge AI

Chapter X: The Future of Edge AI and Conclusion
