2023 Edge AI Technology Report. Chapter II: Advantages of Edge AI

Benefits, Utilities, and Hybrid Deployment of Edge AI.


Edge AI, empowered by recent advances in artificial intelligence, is driving significant shifts in today's technology landscape. By running computation near the data source, Edge AI improves responsiveness, strengthens security and privacy, supports scalability, enables distributed computing, and improves cost efficiency.

Wevolver has partnered with industry experts, researchers, and tech providers to create a detailed report on the current state of Edge AI. This document covers its technical aspects, applications, challenges, and future trends. It merges practical and technical insights from industry professionals, helping readers understand and navigate the evolving Edge AI landscape.

From Cloud to Edge AI

Following the introduction of cloud computing, there was a surge of interest in managing and analyzing data on the cloud. At that time, many enterprises considered the cloud a very appealing option for running data analytics and machine learning functions due to its scalability, capacity, and quality of service.

Over the years, however, many organizations voiced concerns about transferring and processing large volumes of data in the cloud. For instance, companies were reluctant to transfer sensitive corporate data to a remote data center they did not control. Others were concerned about application latency, since cloud data management and analytics required transferring data over a wide area network.

Motivated by these limitations of cloud data processing, many organizations have turned to edge computing to manage some of their data close to the data sources. Edge computing limits the amount of data that must be transferred to the cloud for processing and analytics, and hence alleviates the latency, security, and privacy challenges of cloud-based data analytics.

Edge AI Advantages

Leveraging the merits of edge computing, Edge AI enables organizations to develop practical AI applications that offer high performance, low latency, robust security, improved privacy control, and power efficiency. Edge AI also enables organizations to make optimal use of network, computing, and energy resources, improving the overall cost-effectiveness of their AI applications. Let’s explore these advantages in more detail:

Reduced Latency: Because processing occurs close to the data sources rather than in the cloud, Edge AI applications limit data transfers over wide area networks. As a result, data is processed faster and the application's latency is reduced. Likewise, instructions issued by the AI application reach actuators in the field with much lower delay. This is vital for several classes of low-latency AI applications, such as those based on industrial robots and automated guided vehicles. Some video-based applications, which previously had to send their data to the cloud, are now processed at the edge, not only because this has become feasible but also because situations such as security incidents require assessing what is happening in near real time.

Real-Time Performance: The lower latency of Edge AI applications makes them suitable for implementing functionalities that require real-time performance. For instance, machine learning applications that detect events in real-time (e.g., defect detection in production lines, abnormal behavior detection in security applications) cannot tolerate delays associated with the transfer and processing of data from the edge to the cloud.
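To make the latency argument concrete, the sketch below adds up the main delay components of a single inference request: network round trip, payload upload, and model execution. All figures (a 200 kB frame, 60 ms versus 2 ms round trips, the bandwidths and inference times, and the 50 ms deadline) are illustrative assumptions rather than measurements, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessingPath:
    """A hypothetical place to run inference; every figure is an assumption supplied by the caller."""
    name: str
    round_trip_ms: float   # network round-trip time between sensor and compute location
    bandwidth_mbps: float  # uplink bandwidth available for the payload, in megabits per second
    inference_ms: float    # model execution time at that location


def end_to_end_ms(path: ProcessingPath, payload_bytes: int) -> float:
    """Round-trip time + time to upload the payload + inference time, in milliseconds."""
    upload_ms = payload_bytes * 8 * 1_000 / (path.bandwidth_mbps * 1_000_000)
    return path.round_trip_ms + upload_ms + path.inference_ms


def meets_deadline(path: ProcessingPath, payload_bytes: int, deadline_ms: float) -> bool:
    """True if the response arrives within the application's real-time deadline."""
    return end_to_end_ms(path, payload_bytes) <= deadline_ms


# Illustrative numbers only: a 200 kB camera frame and a 50 ms response deadline.
cloud = ProcessingPath("cloud", round_trip_ms=60, bandwidth_mbps=20, inference_ms=10)
edge = ProcessingPath("edge gateway", round_trip_ms=2, bandwidth_mbps=1_000, inference_ms=25)

for p in (cloud, edge):
    print(p.name, end_to_end_ms(p, 200_000), meets_deadline(p, 200_000, 50))
```

With these assumed numbers the cloud path needs about 150 ms, most of it spent moving the frame over the WAN, and misses the deadline, while the edge path needs under 30 ms even with slower local inference.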

Enhanced Security and Data Protection: Edge AI applications expose far less data outside the organizations that produce or own it. This reduces their attack surface and minimizes the opportunities for malicious attacks and data breaches, which is why Edge AI applications tend to be more secure than their cloud counterparts.

Improved Privacy Control: Many AI applications process sensitive data, such as security-related data, intellectual property, patient records, and other forms of personal data. Edge AI deployments create a trusted data management environment for these applications, providing more robust privacy control than conventional cloud AI applications, because they limit the amount of data transferred or shared outside the organizations that produce or handle sensitive datasets.

Power Efficiency: Cloud data transfers and cloud data processing are highly energy-intensive operations. In particular, cloud I/O (Input/Output) operations are associated with significant carbon emissions. Moreover, cloud AI has a large environmental footprint, as very large volumes of data are typically processed by GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units). Edge AI alleviates this environmental cost: it reduces the number of I/O operations and processes data within edge devices or edge data centers, which improves the overall CO2 footprint of AI applications.

Cost-Effectiveness: Edge AI applications economize on network bandwidth and computing resources because they transmit and process much less data than cloud computing applications. Moreover, they consume less energy than cloud AI applications. As a result, Edge AI applications can be deployed and operated at a considerably lower cost than cloud AI deployments.
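The bandwidth saving behind this cost argument can be estimated with simple arithmetic. The sketch below compares a camera that streams raw video to the cloud with one that runs inference locally and uploads only event metadata; the 4 Mbit/s bitrate, 10,000 events per day, and 300 bytes per event are hypothetical values chosen for illustration.

```python
SECONDS_PER_DAY = 86_400


def stream_mb_per_day(bitrate_kbps: float) -> float:
    """Megabytes uploaded per day by a continuous stream at the given bitrate (kilobits/s)."""
    bits_per_day = bitrate_kbps * 1_000 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1_000_000


def events_mb_per_day(events_per_day: int, bytes_per_event: int) -> float:
    """Megabytes uploaded per day when only event metadata leaves the edge device."""
    return events_per_day * bytes_per_event / 1_000_000


# Hypothetical camera: a 4 Mbit/s video stream versus 10,000 events of 300 bytes each per day.
raw = stream_mb_per_day(4_000)          # 43,200 MB (43.2 GB) per day
meta = events_mb_per_day(10_000, 300)   # 3 MB per day
print(f"raw: {raw:,.0f} MB/day, metadata: {meta:,.1f} MB/day, reduction: {raw / meta:,.0f}x")
```

Under these assumptions the metadata-only device uploads about 14,400 times less data; actual savings depend entirely on the application's bitrate and event rate.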

On-Device Learning: Certain Edge AI applications can be executed entirely within a single device, such as an IoT device or a microcontroller. This enables the development of powerful, intelligent devices, such as System-on-Chip (SoC) devices. A key characteristic of these devices is that they can learn on the device, which is the foundation for giving machines intelligence capabilities that are hardly possible with cloud-based processing. BrainChip’s Nandan Nayampally remarks, “With on-device learning, features extracted from trained models can be extended to customize model behavior without having to resort to extremely expensive model retraining on the cloud. This substantially reduces costs.”
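One common way to realize the kind of on-device customization Nayampally describes is to keep a pre-trained feature extractor frozen and learn only a lightweight classifier head on the device. The sketch below uses a nearest-class-mean (prototype) head over embedding vectors; it is a generic technique chosen for illustration, not BrainChip's implementation, and the class name and toy two-dimensional embeddings are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


class PrototypeHead:
    """Hypothetical on-device classifier head.

    Keeps a running sum of embeddings per class; a class prototype is the mean embedding.
    Embeddings are assumed to come from a frozen, pre-trained feature extractor.
    """

    def __init__(self) -> None:
        self._sums: Dict[str, List[float]] = {}
        self._counts: Dict[str, int] = {}

    def learn(self, label: str, embedding: Sequence[float]) -> None:
        """Add one labelled example; new classes need no retraining of the extractor."""
        sums = self._sums.setdefault(label, [0.0] * len(embedding))
        for i, value in enumerate(embedding):
            sums[i] += value
        self._counts[label] = self._counts.get(label, 0) + 1

    def predict(self, embedding: Sequence[float]) -> Optional[str]:
        """Return the label whose prototype (class mean) is closest in Euclidean distance."""
        best_label, best_dist = None, math.inf
        for label, sums in self._sums.items():
            prototype = [s / self._counts[label] for s in sums]
            dist = math.dist(embedding, prototype)
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label


head = PrototypeHead()
head.learn("ok", [0.0, 0.0])
head.learn("ok", [0.2, 0.0])
head.learn("defect", [1.0, 1.0])
print(head.predict([0.1, 0.1]))      # ok
head.learn("scratch", [-1.0, -1.0])  # a new class added on the device
print(head.predict([-0.9, -1.0]))    # scratch
```

Adding a class only updates a running sum and a counter, so learning a new category costs a few additions rather than a retraining run in the cloud.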

New Capabilities Enabling Novel Applications: The integration of Edge AI systems within AI applications provides capabilities not available a few years ago. For instance, it enables a new class of real-time functionalities in transport, manufacturing, and other industrial settings. Hence, Edge AI is not only improving on cloud AI applications but also unlocking opportunities for innovative applications that were not previously possible.

In a conversation with Synaptics, we asked Shay Kamin Braun, Director of Product Marketing, about the most important advantages that Edge AI provides. He reiterated the advantages mentioned above and added some notable ones.

Beyond noting that “Edge AI provides a reduced latency in response time and a better UX without the network latency in the cloud,” Kamin Braun also highlighted privacy and battery life benefits. In terms of privacy, “PII (personally identifiable information) stays on the device and doesn’t go to the cloud. Only metadata goes to the cloud in most cases. This increases privacy,” explained Kamin Braun. “As for power efficiency, running AI at the edge helps you reduce the amount of data transmitted to the cloud over the network, which saves power. As a result, you get longer battery life or energy star compliance.”

Kamin Braun also emphasized the advantage of availability, which can be an issue for Cloud AI given its inherent reliance on the network. “Maybe you have no coverage for mobile devices, or maybe the Wi-Fi is not working. You cannot always rely on the cloud for processing. On the contrary, if your application is completely at the edge, then you’re not dependent on the network. Edge AI provides full availability.”
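The availability argument can be expressed as an edge-first design: the device always decides locally and treats the cloud as an optional, best-effort destination. The sketch below is a hypothetical illustration (the EdgeNode class, its bounded outbox, and the threshold model are assumptions); it also forwards only metadata, in line with Kamin Braun's privacy point.

```python
from collections import deque
from typing import Callable, Deque, Dict


class EdgeNode:
    """Hypothetical edge node: decisions are made locally; cloud sync is best-effort."""

    def __init__(self, model: Callable[[float], str], max_backlog: int = 10_000) -> None:
        self.model = model
        # Bounded store-and-forward queue: when full, the oldest entries are discarded.
        self.outbox: Deque[Dict[str, object]] = deque(maxlen=max_backlog)
        self._seq = 0

    def handle(self, reading: float) -> str:
        """Run inference on the device; no network connection is needed to decide."""
        decision = self.model(reading)
        self._seq += 1
        self.outbox.append({"seq": self._seq, "decision": decision})  # metadata only
        return decision

    def sync(self, network_up: bool, upload: Callable[[Dict[str, object]], None]) -> int:
        """Forward queued metadata when connectivity is available; return how many were sent."""
        if not network_up:
            return 0
        sent = 0
        while self.outbox:
            upload(self.outbox[0])  # if upload raises, the entry stays queued for the next attempt
            self.outbox.popleft()
            sent += 1
        return sent


node = EdgeNode(model=lambda x: "alarm" if x > 0.8 else "normal")  # hypothetical threshold model
node.handle(0.95)
node.handle(0.10)
received: list = []
node.sync(network_up=False, upload=received.append)  # offline: nothing sent, decisions already made
node.sync(network_up=True, upload=received.append)   # back online: both entries forwarded
print(received)
```

Because uploads are queued rather than required, a network outage delays reporting but never blocks a decision; the bounded queue trades completeness of the cloud log for predictable memory use on the device.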

“By doing the computation at the sensor with the context, you can optimize better than using a generic, aggregated solution. While the battery is a consideration, lower thermal footprint enables cheaper, cost-effective chip packaging and field use that really provides scale.”

- Nandan Nayampally, CMO, BrainChip

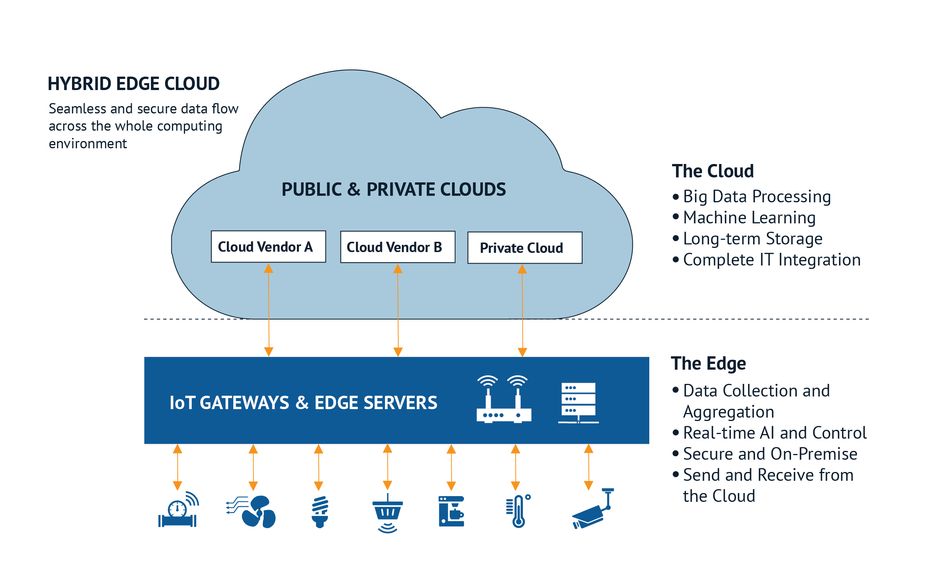

Cloud/Edge Computing Continuum: A Powerful Combination

Despite the advantages Edge AI has over cloud AI, many use cases still demand computationally intensive data processing within scalable cloud data centers. Deep learning applications are one example, as their models must be trained on large numbers of data points. In such cases, cloud AI and Edge AI can be combined to obtain the benefits of both. Specifically, a first round of local data processing at the edge can filter out uninteresting data and identify the datasets that need further processing in the cloud.
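A first round of edge-side filtering can be as simple as scoring each reading locally and forwarding only the unusual ones. In the sketch below, a z-score within each batch stands in for whatever model an actual deployment would use to flag interesting data; the function name, the threshold of 2.5, and the sample batch are assumptions.

```python
import statistics
from typing import List, Sequence, Tuple


def select_for_cloud(readings: Sequence[float], z_threshold: float = 2.5) -> List[Tuple[int, float]]:
    """Return (index, value) pairs that deviate strongly from this batch; the rest stay local.

    The z-score is a simple stand-in for whatever local model scores "interesting" data.
    """
    mean = statistics.fmean(readings)
    stdev = statistics.pstdev(readings)
    if stdev == 0:
        return []  # a perfectly flat batch has nothing unusual to forward
    return [(i, x) for i, x in enumerate(readings) if abs(x - mean) / stdev > z_threshold]


batch = [10.0] * 9 + [50.0]  # hypothetical sensor batch with one spike
print(select_for_cloud(batch))  # [(9, 50.0)]: 1 of 10 readings is sent to the cloud
```

Here only one of ten readings is forwarded. A single large outlier also inflates the batch standard deviation, so a production filter might prefer a robust statistic such as the median absolute deviation.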

Local data processing is usually followed by a transfer of selected data to the cloud, where data points from different edge data sources are aggregated and processed to extract global insights. Furthermore, cloud AI applications may push information about these insights back to the edge data sources, which enhances the intelligence of local processing and field actuation functions. This two-level data processing exploits different deployment configurations of edge devices in the cloud/edge computing continuum.
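The cloud-side aggregation and feedback loop can be illustrated with summary statistics. In the hypothetical sketch below, each edge site sends only a count, a mean, and a sum of squared deviations; the cloud merges these exactly with the standard pairwise (parallel) formula for combining means and variances, then pushes a global alert threshold (mean plus k standard deviations, with k = 3 assumed) back to the sites.

```python
import math
from dataclasses import dataclass
from functools import reduce
from typing import Iterable, Sequence


@dataclass(frozen=True)
class Summary:
    """Compact per-edge statistics: count, mean, and sum of squared deviations (M2)."""
    n: int
    mean: float
    m2: float

    @staticmethod
    def of(values: Sequence[float]) -> "Summary":
        n = len(values)
        mean = sum(values) / n
        return Summary(n, mean, sum((v - mean) ** 2 for v in values))


def merge(a: Summary, b: Summary) -> Summary:
    """Combine two summaries exactly, without access to the underlying raw values."""
    n = a.n + b.n
    delta = b.mean - a.mean
    mean = a.mean + delta * b.n / n
    m2 = a.m2 + b.m2 + delta ** 2 * a.n * b.n / n
    return Summary(n, mean, m2)


def global_threshold(summaries: Iterable[Summary], k: float = 3.0) -> float:
    """Cloud side: merge all edge summaries and derive an alert threshold (mean + k * std)."""
    total = reduce(merge, summaries)
    return total.mean + k * math.sqrt(total.m2 / total.n)


# Hypothetical: two edge sites summarise their readings locally and send only the summaries.
site_a = Summary.of([1.0, 2.0, 3.0])
site_b = Summary.of([4.0, 5.0, 6.0, 7.0])
threshold = global_threshold([site_a, site_b])  # pushed back to both sites
print(threshold)  # 10.0
```

The merged result is identical to computing the statistics over all seven raw readings, yet no raw reading leaves its site.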

Some machine learning paradigms inherently support this two-level cloud/edge data processing approach. A prominent example is federated machine learning, which employs local data processing to train machine learning models on edge computing nodes residing in different organizations. This produces a set of local machine learning models, which are then fused in a central cloud location into a more accurate global model.

This federated approach offers strong privacy and data protection characteristics, as source data need not leave the organizations where the edge nodes reside. Instead, an accurate global model is built in the cloud by transferring and processing the parameters of the local machine learning models. Therefore, the models’ information is shared while the source data are not.
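A minimal sketch of federated averaging (FedAvg), a widely used way of fusing local models, is shown below. To keep the arithmetic visible it uses a hypothetical one-parameter linear model (y ≈ w · x) trained by gradient descent; the datasets, learning rate, epoch count, and number of rounds are illustrative assumptions. Each node returns only its updated weight, and the cloud averages the weights in proportion to each node's sample count.

```python
from typing import List, Sequence, Tuple

Dataset = List[Tuple[float, float]]  # private (x, y) pairs held by one edge node


def local_update(w: float, data: Dataset, lr: float = 0.05, epochs: int = 20) -> float:
    """Train y ≈ w * x on one node's private data with gradient descent; only w is returned."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)  # d/dw of mean squared error
        w -= lr * grad
    return w


def fed_avg(weights: Sequence[float], sample_counts: Sequence[int]) -> float:
    """Fuse local models into a global one, weighting each by its number of training samples."""
    total = sum(sample_counts)
    return sum(w * n for w, n in zip(weights, sample_counts)) / total


def federated_round(global_w: float, nodes: Sequence[Dataset]) -> float:
    """One round: broadcast global_w, train locally on every node, then average in the cloud."""
    local_ws = [local_update(global_w, data) for data in nodes]
    return fed_avg(local_ws, [len(data) for data in nodes])


# Hypothetical: two organisations whose data follow y = 2x; the raw pairs never leave their nodes.
nodes = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
w = 0.0
for _ in range(5):
    w = federated_round(w, nodes)
print(round(w, 3))
```

After a few rounds w approaches 2, the slope shared by both nodes' data, even though the cloud only ever receives model weights.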

The combination of cloud AI with Edge AI functionalities can be realized through various deployment configurations, providing versatility in development, deployment, and operation. For example, when local intelligence, real-time performance, and strong data protection are required, machine learning deployments can be restricted to the edge of the network. On the other hand, when the AI application involves processing high volumes of data (Big Data), cloud AI is preferred.

In many other use cases, AI processing can be distributed between cloud and edge. In such cases, the distribution should weigh performance, power-efficiency, and security trade-offs to yield the best possible configuration. The ability to combine the merits of cloud computing and edge computing in a single cloud/edge AI deployment is yet another significant advantage of Edge AI systems.
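The rules of thumb for choosing a deployment configuration can be encoded as a simple placement decision. The sketch below is a hypothetical illustration, not a prescribed method: the three requirement flags and the four placement outcomes are assumptions that mirror the text (real-time or sensitive workloads favor the edge, big-data processing favors the cloud, and workloads with both drivers are split between them).

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge only"
    CLOUD = "cloud only"
    SPLIT = "edge pre-processing + cloud aggregation"
    OPEN = "no hard constraint; compare cost, power, and security case by case"


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of an AI workload's requirements."""
    needs_real_time: bool      # e.g. defect detection on a production line
    sensitive_data: bool       # e.g. patient or other personal data
    big_data_processing: bool  # e.g. training on large volumes aggregated from many sources


def choose_placement(w: Workload) -> Placement:
    """Rule-of-thumb placement following the trade-offs described in the text."""
    edge_driver = w.needs_real_time or w.sensitive_data
    if edge_driver and w.big_data_processing:
        return Placement.SPLIT
    if edge_driver:
        return Placement.EDGE
    if w.big_data_processing:
        return Placement.CLOUD
    return Placement.OPEN


print(choose_placement(Workload(needs_real_time=True, sensitive_data=False, big_data_processing=True)))
```

A real deployment would replace the boolean flags with measured latency budgets, data-classification policies, and cost and power estimates, but the structure of the decision stays the same.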

This chapter was contributed to by BrainChip and Synaptics.


The 2023 Edge AI Technology Report

The guide to understanding the state of the art in hardware & software in Edge AI.


Report Introduction

Chapter I: Overview of Industries and Application Use Cases

Chapter II: Advantages of Edge AI

Chapter III: Edge AI Platforms

Chapter IV: Hardware and Software Selection

Chapter V: Tiny ML

Chapter VI: Edge AI Algorithms

Chapter VII: Sensing Modalities

Chapter VIII: Case Studies

Chapter IX: Challenges of Edge AI

Chapter X: The Future of Edge AI and Conclusion
