Redefining Sensor Fusion with the CommonSense add-on for Sony Spresense and Edge Impulse

Sensor fusion enables the seamless integration of data from multiple sensors, paving the way for advanced Edge AI implementations that optimize real-time processing, enhance decision-making, and boost system responsiveness in dynamic environments.

Introduction

In the intricate tapestry of modern technology, where devices are becoming increasingly interconnected and intelligent, the ability to accurately interpret the world around us is paramount. This is where the concept of 'sensor fusion' shines brightly, helping to make sense of vast quantities of data. Sensor fusion, in its essence, is the art and science of amalgamating data from multiple sensors to provide a more holistic, accurate, and reliable view of the environment. It's akin to piecing together a jigsaw puzzle, where each sensor provides a fragment of the bigger picture, and when fused together, these fragments reveal a comprehensive image.

As we navigate through this article, we will delve into the technical methodologies underpinning sensor fusion. We'll explore its applications, from autonomous vehicles to healthcare, and highlight the challenges that developers and engineers face in this domain. Furthermore, we'll introduce the potential of integrating sensor fusion with tools like the SensiEDGE CommonSense add-on for Sony Spresense and the paradigm of Edge AI. By the end, readers will have a clear picture of the current landscape of sensor fusion and the capabilities it promises.

Understanding Sensor Fusion

Sensor fusion is a multidisciplinary field that integrates data from multiple sensors to derive a more comprehensive and accurate representation of the environment than any individual sensor can provide. Central to this is the principle of Redundancy and Diversity.

Redundant sensors, which are multiple units measuring the same parameter, ensure that the system remains resilient against individual sensor failures or inaccuracies. On the other hand, diverse sensors, each capturing different facets of the environment, ensure a comprehensive view. This dual approach not only bolsters the reliability of measurements but also provides a panoramic perspective of the surroundings.
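To make the redundancy idea concrete, here is a minimal sketch of one common way to combine redundant readings: inverse-variance weighting. The function name, the sensor values, and the noise variances are illustrative assumptions, not something taken from a specific product or library.

```python
def fuse_redundant(readings):
    """Fuse (value, variance) pairs from sensors measuring the same quantity.

    Each reading is weighted by the inverse of its noise variance, so the
    more trustworthy sensors dominate the fused result.
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # never larger than the best single sensor
    return fused_value, fused_variance


# Hypothetical example: three thermometers, the third one much noisier.
readings = [(21.0, 0.5), (22.0, 0.5), (25.0, 4.0)]
value, variance = fuse_redundant(readings)
print(f"fused value: {value:.2f}, fused variance: {variance:.3f}")
```

The noisy third sensor still contributes, but with a small weight, and the fused variance is lower than that of any individual sensor. This assumes the sensor errors are independent and the variances are known, which is rarely exactly true in practice.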

Methodologies Driving Fusion

Two methodologies stand out in the realm of sensor fusion: Kalman filtering and particle filtering. Both are state estimation techniques that combine noisy measurements from multiple sensors to estimate the true state of a system. The Kalman filter, a recursive algorithm, is tailored for linear dynamic systems with Gaussian-distributed noise. At each step it predicts the next state from a model of the system, then corrects that prediction with the newest measurement. Conversely, particle filters are the go-to for nonlinear or non-Gaussian systems. They operate by representing a system's posterior distribution using a set of weighted random samples (particles), offering a more flexible, though more computationally expensive, approach to state estimation.
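As an illustration of the predict and update cycle, the following is a minimal sketch of a one-dimensional Kalman filter that fuses two simulated sensors. It assumes a random-walk state model and sensors that observe the state directly; the function name, noise levels, and simulated data are all assumptions made for this example.

```python
import random


def kalman_1d(steps, process_var, x0=0.0, p0=1.0):
    """Track a slowly varying scalar from several noisy sensors.

    steps: one list per time step of (measurement, measurement_variance)
           pairs, one pair per sensor that reported at that step.
    Model (an assumption of this sketch): the state is a random walk and
    every sensor observes it directly.
    """
    x, p = x0, p0
    estimates = []
    for readings in steps:
        # Predict: the state is assumed unchanged, so only uncertainty grows.
        p += process_var
        # Update: fold in each sensor's reading, weighted by the Kalman gain.
        for z, meas_var in readings:
            gain = p / (p + meas_var)
            x += gain * (z - x)
            p *= 1.0 - gain
        estimates.append(x)
    return estimates


# Simulated data: two sensors observe a constant value of 10.0.
random.seed(0)
TRUE_VALUE = 10.0
steps = [
    [(TRUE_VALUE + random.gauss(0.0, 1.0), 1.0),    # noisier sensor, variance 1.0
     (TRUE_VALUE + random.gauss(0.0, 0.5), 0.25)]   # better sensor, variance 0.25
    for _ in range(200)
]
estimates = kalman_1d(steps, process_var=1e-4, x0=0.0, p0=100.0)
print(f"final estimate: {estimates[-1]:.2f}")
```

A large initial variance (`p0`) tells the filter to trust the first measurements over the initial guess. Real systems usually track a vector state (position, velocity, orientation) with matrix versions of the same two steps.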

Challenges in Real-world Implementation

While the promise of sensor fusion is undeniable, it's not without its challenges. Temporal Alignment is a significant hurdle. With sensors operating at different sampling rates, ensuring that data is synchronized is crucial for accurate fusion. Another challenge lies in Spatial Calibration. Given that sensors might have varied orientations or fields of view, aligning them to a common spatial frame is essential.
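Both alignment problems can be sketched in a few lines. The example below resamples a slow sensor stream onto another sensor's timestamps by linear interpolation, and rotates a vector reading by a known mounting angle into a common frame. The function names, sample rates, and the 90 degree mounting offset are hypothetical values chosen for illustration.

```python
import math
from bisect import bisect_left


def resample(timestamps, values, target_times):
    """Linearly interpolate a sensor stream onto target timestamps.

    timestamps must be strictly increasing. Target times outside the
    recorded range are clamped to the nearest available sample.
    """
    out = []
    for t in target_times:
        i = bisect_left(timestamps, t)
        if i == 0:
            out.append(values[0])
        elif i == len(timestamps):
            out.append(values[-1])
        else:
            t0, t1 = timestamps[i - 1], timestamps[i]
            frac = (t - t0) / (t1 - t0)
            out.append(values[i - 1] + frac * (values[i] - values[i - 1]))
    return out


def rotate_z(vector, yaw_deg):
    """Rotate an (x, y, z) vector about the z axis into the common frame."""
    a = math.radians(yaw_deg)
    x, y, z = vector
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


# Temporal alignment: a 10 Hz temperature stream sampled at the
# timestamps of a faster sensor.
temp_times = [0.0, 0.1, 0.2]
temp_values = [20.0, 21.0, 23.0]
aligned = resample(temp_times, temp_values, [0.0, 0.05, 0.15, 0.2])

# Spatial calibration: a sensor mounted 90 degrees off the board's x axis.
in_common_frame = rotate_z((1.0, 0.0, 0.0), 90.0)
```

Linear interpolation suits slowly changing signals such as temperature. Fast signals may need proper resampling filters, and full spatial calibration generally involves a 3D rotation plus a translation rather than a single angle.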

Furthermore, as the number of sensors in a system grows, scalability becomes a concern, demanding efficient algorithms that can handle increased data loads without compromising on processing speed.

Evaluating Fusion Systems

Lastly, the efficacy of a sensor fusion system is often determined by a set of quality metrics. Accuracy, which measures how close the fused data is to the actual state of the environment, is paramount. However, robustness, the system's resilience against anomalies, and computational efficiency, indicating the system's resource utilization, are equally vital.
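These three metrics can be measured with a small harness. The sketch below scores two simple fusion rules (mean and median of three redundant readings) on root-mean-square error for accuracy, worst-case error as a rough proxy for robustness, and time per sample for efficiency. The `evaluate` helper and the sample data, including the deliberate 50.0 outlier, are assumptions made for illustration.

```python
import math
import statistics
import time


def evaluate(fuse, samples, truth):
    """Score a fusion function on accuracy, worst-case error, and speed."""
    start = time.perf_counter()
    fused = [fuse(readings) for readings in samples]
    elapsed = time.perf_counter() - start
    errors = [f - t for f, t in zip(fused, truth)]
    return {
        "rmse": math.sqrt(sum(e * e for e in errors) / len(errors)),
        "max_abs_error": max(abs(e) for e in errors),
        "seconds_per_sample": elapsed / len(samples),
    }


# Three redundant readings per time step; the second step has an outlier.
samples = [[10.1, 9.9, 10.0], [10.2, 9.8, 50.0], [9.9, 10.1, 10.0]]
truth = [10.0, 10.0, 10.0]

mean_score = evaluate(statistics.mean, samples, truth)
median_score = evaluate(statistics.median, samples, truth)
print("mean:  ", mean_score)
print("median:", median_score)
```

On this data the median is far less affected by the faulty reading than the mean, which is the kind of trade-off the robustness metric is meant to expose. Ground truth is rarely available in the field, so such evaluations typically rely on a reference instrument or simulated data.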

Further reading: Sensor Fusion: The Ultimate Guide to Combining Data for Enhanced Perception and Decision-Making

Applications of Sensor Fusion

Sensor fusion supports applications across many domains by combining data from diverse sensors to derive precise, actionable insights. Here are some of the applications where it shines:

Autonomous Vehicles: One of the most well-known applications of sensor fusion is in self-driving cars. These vehicles use a combination of cameras, LiDAR, radar, ultrasonic sensors, and more to perceive their surroundings, detect obstacles, and navigate safely. By fusing data from these diverse sensors, autonomous vehicles can operate safely in a wide range of conditions, from bright sunlight to nighttime and from clear weather to fog.

Robotics: Robots, especially those designed for complex tasks in variable environments, use sensor fusion to combine data from cameras, infrared sensors, tactile sensors, and gyroscopes. This allows them to navigate, interact with objects, and perform tasks with higher precision and reliability.

Augmented Reality (AR) and Virtual Reality (VR): AR and VR systems often rely on sensor fusion to track user movements and adjust the displayed visuals accordingly. By combining data from accelerometers, gyroscopes, and cameras, these systems can provide a more immersive and responsive user experience.

Military and Defense: Advanced defense systems use sensor fusion to combine data from radars, sonars, infrared cameras, and other sensors. This provides comprehensive situational awareness, enabling quicker and more informed decision-making in complex scenarios.

Healthcare: Wearable devices that monitor health metrics often use sensor fusion to provide more accurate readings. For instance, a smartwatch might combine data from a heart rate sensor, accelerometer, and gyroscope to better understand a user's activity level and overall health.

Other notable applications include environmental monitoring, where sensor fusion aids in forest fire detection and prediction; smart cities, which utilize the technique for traffic management and public safety; agriculture, where precision farming is enhanced through fused data; industrial automation for monitoring equipment health and product quality; and navigation and mapping, where devices like smartphones and drones benefit from improved location accuracy.
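Several of the applications above, such as AR/VR headsets and wearables, fuse a gyroscope with an accelerometer to track orientation. A complementary filter is one lightweight way to do this, and a minimal sketch follows. The axis convention, the blend factor `alpha`, and the simulated gyro bias are assumptions for this example; real devices differ in sign conventions and often use more elaborate filters.

```python
import math


def complementary_filter(gyro_rates, accels, dt, alpha=0.98, angle0=0.0):
    """Estimate a tilt angle (radians) from gyro and accelerometer data.

    gyro_rates: angular rate about the tilt axis, in rad/s.
    accels: (ax, az) accelerometer pairs; gravity gives an absolute angle.
    """
    angle = angle0
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_angle = angle + rate * dt    # smooth, but drifts with gyro bias
        accel_angle = math.atan2(ax, az)  # drift-free, but noisy under motion
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history


# Simulated stationary device tilted by 0.2 rad, with a biased gyroscope.
TRUE_TILT = 0.2
N, DT = 500, 0.01
gyro = [0.01] * N                                     # pure bias, no real rotation
accel = [(math.sin(TRUE_TILT), math.cos(TRUE_TILT))] * N
angles = complementary_filter(gyro, accel, DT)
print(f"estimated tilt: {angles[-1]:.3f} rad")
```

Integrating the biased gyro alone would drift without bound, while the accelerometer alone would be noisy during movement. Blending the two keeps the estimate close to the true tilt with very little computation, which is why this pattern is popular on microcontrollers.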

Sensor Fusion with the CommonSense add-on for Sony Spresense Microcontroller and Edge Impulse

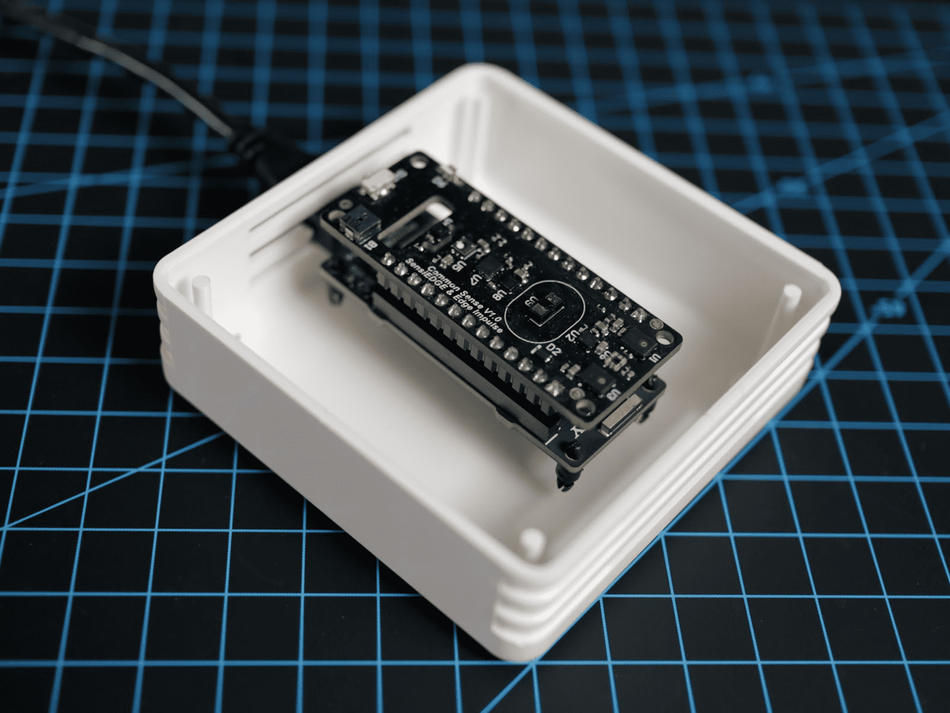

In the rapidly evolving world of IoT and edge computing, the synergy between robust hardware and intelligent software becomes crucial. The SensiEDGE CommonSense add-on, tailored for the Sony Spresense board, epitomizes this synergy, setting new standards in sensor fusion.

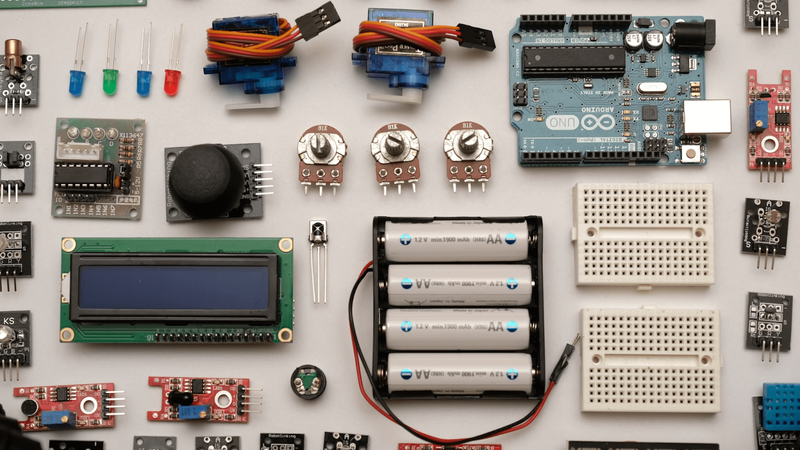

The CommonSense add-on is a testament to the advancements in sensor technology within the IoT landscape. Despite its compact and unassuming design, it boasts a plethora of capabilities. Central to its operation is an array of 10 unique sensors that allow the add-on to monitor, measure, and perceive the environment when attached to a Sony Spresense development board.

It's adept at capturing a myriad of environmental parameters, from air quality and temperature to humidity. Additionally, its motion tracking capabilities, encompassing an accelerometer, gyroscope, and magnetometer, allow for a broad spectrum of data collection. Augmented by features like audio detection through its microphone and versatile connectivity options via Bluetooth and Wi-Fi, it emerges as an invaluable asset for developers aspiring to craft comprehensive IoT solutions.

The power of the CommonSense add-on is further magnified when its data is fused, offering a holistic view of the monitored environment or subject. Such consolidated data, when interpreted through machine learning models, paves the way for precise predictions and actionable insights.
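One simple way to present fused data to a machine learning model is to summarize each sensor channel over the same time window and concatenate the results into a single feature vector. The sketch below shows the idea in plain Python. The channel names, the sample values, and the choice of statistics are hypothetical; this is not the CommonSense firmware interface or an Edge Impulse API.

```python
import math


def channel_features(samples):
    """Summarize one sensor channel over a window: mean, RMS, min, max."""
    mean = sum(samples) / len(samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return [mean, rms, min(samples), max(samples)]


def fused_feature_vector(window):
    """Concatenate per-channel features in a fixed (sorted) channel order.

    window: dict mapping a channel name to the samples recorded for that
            channel during one shared time window.
    """
    features = []
    for name in sorted(window):
        features.extend(channel_features(window[name]))
    return features


# Hypothetical window mixing motion and environmental channels.
window = {
    "accel_x": [0.0, 1.0, 0.0, -1.0],
    "temperature_c": [22.0, 22.0, 22.5, 22.5],
    "humidity_pct": [40.0, 41.0, 42.0, 41.0],
}
features = fused_feature_vector(window)
print(len(features), "features:", features)
```

Sorting the channel names keeps the feature order stable between training and inference, which matters because a model has no other way to know which value belongs to which sensor.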

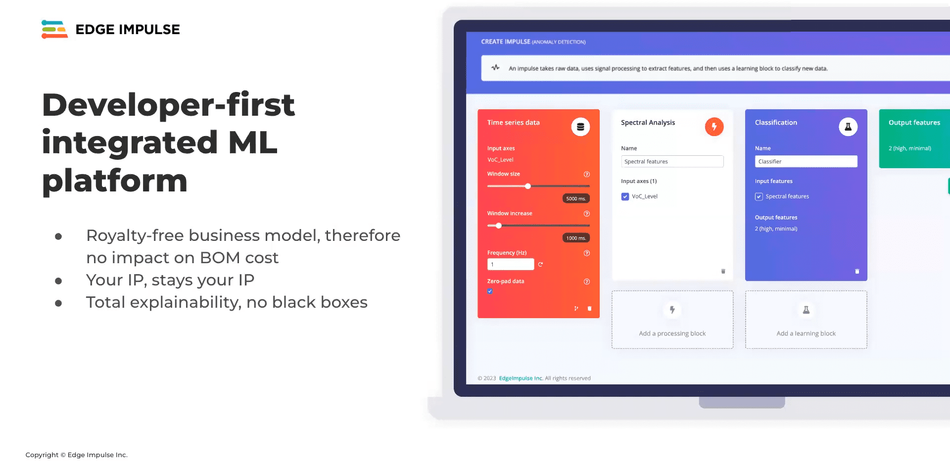

This is where Edge Impulse, a platform renowned for its expertise in embedded machine learning, comes into play. The amalgamation of the CommonSense add-on and Sony's Spresense board with Edge Impulse's software expertise results in a blend of sensor fusion and edge AI. This empowers developers to design, refine, and implement machine learning models right on the device, leveraging AI with no reliance on cloud connectivity.

For a deeper dive into this integration, consider exploring this webinar on sensor fusion with the Sony Spresense:

Conclusion

The integration of the SensiEDGE CommonSense add-on with Sony Spresense and Edge Impulse makes for a powerful combination that enhances the reliability, accuracy, and efficiency of data interpretation in various applications, all on the edge.

Leveraging advanced hardware and intelligent software promises to drive innovation, offering a more holistic and nuanced understanding of our environment. In essence, this collaboration is set to redefine the boundaries of sensor fusion, paving the way for groundbreaking advancements in the interconnected world of modern technology.

About the sponsor: Edge Impulse

Edge Impulse is the leading development platform for embedded machine learning, used by over 1,000 enterprises across 200,000 ML projects worldwide. We are on a mission to enable the ultimate development experience for machine learning on embedded devices for sensors, audio, and computer vision, at scale.

From getting started in under five minutes to MLOps in production, we enable highly optimized ML deployable to a wide range of hardware, from MCUs to CPUs to custom AI accelerators. With Edge Impulse, developers, engineers, and domain experts solve real problems using machine learning in embedded solutions, speeding up development time from years to weeks. We specialize in industrial and professional applications including predictive maintenance, anomaly detection, human health, wearables, and more.
