Vector 2.0: AI Robot Companion

A small tabletop robot companion and helper powered by artificial intelligence.

Technical Specifications

| Component | Specification |
|---|---|
| Display | High-res color IPS |
| Connection | 2.4 GHz Wi-Fi |
| Camera | HD camera with 120-degree field of view |
| Microphone | Beamforming four-microphone array |
| Sensors | Time-of-flight laser distance sensor, infrared laser scanner, cliff sensors, capacitive touch sensors, 6-axis IMU |
| Actuators | DC motors |
| Power | Lithium-ion battery |
| Computing | 1.2 GHz quad-core Qualcomm Snapdragon |
| Software | Custom OS, SDK |

Overview

Problem / Solution

Advances in robotics and artificial intelligence have led to a growing number of AI-powered robots. With applications in the military, medicine, and exploration, AI robots make our lives easier in many ways. Today’s technology allows them to navigate independently, make their own decisions, and adapt to changing conditions. The need for methods that support these robotic functions has never been more pressing.

Vector is a robot sidekick who loves to make people laugh, though he is up for anything. Combining technology with character, he serves as a companion and helper at home. Using state-of-the-art AI, Vector can read the room, report the weather, set timers, take photos, and more. He answers questions through speech recognition and speech synthesis, and his expressive LCD face invites interaction. He also maps his surroundings with a single-point laser distance sensor and navigates using SLAM (simultaneous localization and mapping). An onboard convolutional neural network lets him detect people and perform other vision tasks.
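
The mapping sentence above can be made concrete with a little arithmetic. A time-of-flight sensor times how long a light pulse takes to reach an obstacle and return, so the one-way distance is half the round trip multiplied by the speed of light. The Python sketch below is a minimal illustration, not Vector’s firmware: it converts a round-trip time into a distance and then projects the detected point into world coordinates from an assumed, already-known robot pose. The function names `tof_distance_m` and `obstacle_point` and the 2 ns example reading are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target from a time-of-flight round trip.

    The light pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def obstacle_point(x_m: float, y_m: float, heading_rad: float,
                   distance_m: float) -> tuple:
    """Project one forward-facing range reading into world coordinates.

    Assumes the sensor points straight along the robot's heading and that
    the pose (x_m, y_m, heading_rad) is already known.
    """
    return (x_m + distance_m * math.cos(heading_rad),
            y_m + distance_m * math.sin(heading_rad))


if __name__ == "__main__":
    d = tof_distance_m(2e-9)  # hypothetical 2 ns round trip
    px, py = obstacle_point(0.1, 0.2, 0.0, d)
    print(f"distance {d:.3f} m, obstacle at ({px:.3f}, {py:.3f})")
```

A 2 ns round trip corresponds to roughly 0.3 m. In a real SLAM pipeline the pose itself is uncertain and is re-estimated from readings like these; this sketch deliberately leaves that step out.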

Design

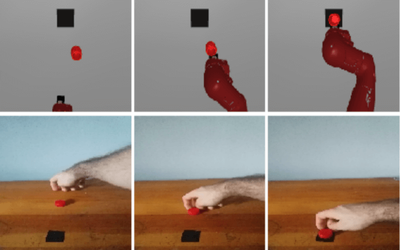

Vector’s sensors let him respond realistically as he reads the room, listens to his surroundings, recognizes people, and moves through his space while avoiding obstacles.

Vector sees the world through an HD camera with an ultra-wide 120-degree field of view. Using computer vision, he can not only identify people but also remember their faces. The same vision system helps him navigate without bumping into obstacles. Planned software updates are intended to add the ability to recognize pets and to detect whether a person is smiling.

Vector’s ears are a beamforming four-microphone array that gives him directional hearing: wherever you sit next to him, he can tell which direction your voice comes from and turn toward it. Like you, he also startles at a sudden loud noise.
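
Vector’s actual beamforming algorithm is not described here. As a simpler stand-in that shows how a microphone array yields direction, the Python sketch below uses one pair of microphones: it finds the delay between the two signals by cross-correlation, then converts that delay into an angle using the speed of sound and the spacing between the microphones. This is time-difference-of-arrival estimation, not full beamforming, and the function names, the 16 kHz sample rate, and the 10 cm spacing are hypothetical.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 C


def best_lag(a: list, b: list, max_lag: int) -> int:
    """Return the lag (in samples) by which signal b trails signal a.

    Tries every lag in [-max_lag, max_lag] and keeps the one that maximizes
    the cross-correlation sum(a[n] * b[n + lag]).
    """
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(a[n] * b[n + lag]
                    for n in range(len(a))
                    if 0 <= n + lag < len(b))
        if score > best_score:
            best, best_score = lag, score
    return best


def arrival_angle_deg(lag_samples: int, sample_rate_hz: float,
                      mic_spacing_m: float) -> float:
    """Convert a time difference of arrival into an angle from broadside.

    0 degrees means the sound is straight ahead of the microphone pair;
    positive angles mean it reached microphone a first.
    """
    delay_s = lag_samples / sample_rate_hz
    ratio = SPEED_OF_SOUND_M_PER_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp: noise can push |ratio| past 1
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # Synthetic click that reaches mic b three samples after mic a.
    mic_a = [0.0] * 20
    mic_b = [0.0] * 20
    mic_a[5] = 1.0
    mic_b[8] = 1.0
    lag = best_lag(mic_a, mic_b, max_lag=5)
    print(lag, round(arrival_angle_deg(lag, 16_000, 0.10), 1))
```

With these assumed values, a three-sample delay corresponds to a source about 40 degrees off to one side. A single pair cannot tell front from back; an array of four microphones can combine several pairs, or steer a beam across candidate directions, to resolve the ambiguity.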

Capacitive touch sensors and an accelerometer give Vector a sense of touch: he knows when he is being touched or moved. Pet him and he will relax, but avoid shaking him too much.
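
One simple way an accelerometer can tell ordinary handling from shaking is to compare the magnitude of each reading with gravity: at rest the magnitude stays near 1 g (about 9.81 m/s²), while vigorous shaking pushes it well above or below that. The Python sketch below is a hypothetical illustration, not Vector’s firmware; the function name `is_being_shaken`, the 4 m/s² threshold, and the three-sample count are assumptions chosen for readability.

```python
import math

GRAVITY_M_PER_S2 = 9.81


def is_being_shaken(samples: list, threshold_m_per_s2: float = 4.0,
                    min_hits: int = 3) -> bool:
    """Return True if a window of accelerometer samples looks like shaking.

    Each sample is an (ax, ay, az) tuple in m/s^2. When the robot rests or
    is moved gently, the magnitude stays close to 1 g; shaking pushes it far
    above or below. Requiring several out-of-range samples ignores a single
    bump.
    """
    hits = 0
    for ax, ay, az in samples:
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if abs(magnitude - GRAVITY_M_PER_S2) > threshold_m_per_s2:
            hits += 1
    return hits >= min_hits


if __name__ == "__main__":
    resting = [(0.0, 0.0, 9.81)] * 10
    shaking = [(0.0, 0.0, 9.81), (15.0, 0.0, 9.81), (-12.0, 3.0, 9.81),
               (0.0, 0.0, 9.81), (14.0, -2.0, 9.81)]
    print(is_being_shaken(resting), is_being_shaken(shaking))
```

Counting several out-of-range samples, rather than reacting to one, is a cheap way to avoid mistaking a single tap or bump for shaking.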

A 1.2 GHz quad-core Qualcomm Snapdragon, a smartphone-class processor, gives Vector the ability to think, and a 2.4 GHz Wi-Fi connection links him to the cloud. This robot brain lets Vector process his environment, react to what happens around him, and use the cloud to answer questions and share the weather.

With hundreds of synthesized sounds, Vector has a unique voice and a language all his own. When asked a question, he speaks his answer in a custom text-to-speech voice.

Vector also knows when his battery is running low and will drive back to his charger on his own to recharge.
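
The self-charging behavior can be pictured as a small state machine. The Python sketch below is a hypothetical illustration, not Vector’s firmware: the three modes, the 20 % and 95 % thresholds, and the `next_mode` function are assumptions, and the work of finding and docking with the charger is abstracted into a single `on_charger` flag.

```python
from enum import Enum


class Mode(Enum):
    ACTIVE = "active"
    SEEKING_CHARGER = "seeking_charger"
    CHARGING = "charging"


LOW_BATTERY = 0.20   # hypothetical: head for the charger below 20 %
RESUME_LEVEL = 0.95  # hypothetical: leave the charger at 95 % or more


def next_mode(mode: Mode, battery: float, on_charger: bool) -> Mode:
    """Advance a minimal charging state machine by one step.

    battery is the charge level from 0.0 to 1.0. Using two thresholds
    (hysteresis) keeps the robot from bouncing on and off the charger
    when the level hovers around a single cutoff.
    """
    if mode is Mode.ACTIVE and battery < LOW_BATTERY:
        return Mode.SEEKING_CHARGER
    if mode is Mode.SEEKING_CHARGER and on_charger:
        return Mode.CHARGING
    if mode is Mode.CHARGING and battery >= RESUME_LEVEL:
        return Mode.ACTIVE
    return mode


if __name__ == "__main__":
    mode = Mode.ACTIVE
    for level, docked in [(0.50, False), (0.15, False), (0.15, True),
                          (0.60, True), (0.96, True)]:
        mode = next_mode(mode, level, docked)
        print(f"battery={level:.2f} docked={docked} -> {mode.value}")
```

The gap between the two thresholds is the design choice that matters: with a single cutoff, a robot sitting right at that level would leave the charger, drop below it, and immediately return.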

Function

With his built-in features, Vector works as a weather forecaster, a timer, a photographer, and even a blackjack dealer, and he also offers optional Amazon Alexa integration. With Alexa enabled, Vector gains Alexa’s smart-home control and its large, constantly growing library of skills. Just as with Alexa voice commands, you can ask Vector to add items to a shopping list, set reminders, control smart devices such as lights and speakers, and much more.

Vector can also answer questions. Say “Hey Vector,” followed by “I have a question,” and then ask away; he is knowledgeable on a wide range of topics, including unit conversions, word definitions, equations, nutrition, sports, and even pop culture. Thanks to cloud connectivity, Vector continually gains new features and functions.

Vector Application

Although Vector requires an app for setup, all later interactions happen through eye contact and conversation. The app visualizes Vector’s mood and stimulation level, so users can see how he is feeling. The Anki Vector app, available through Anki.com, also displays the selfies Vector captures of himself and the people around him.

Escape Pod

Escape Pod lets Vector function without external servers and serves as an insurance policy in case Digital Dream Labs can no longer maintain Vector. Designed for hobbyists and advanced users, it runs independently of the cloud and extends the customization of voice commands. This safety net ensures that users keep access to Vector even if the servers are discontinued, and lets them bypass the existing firmware in favor of alternative apps the community may develop.
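
How Escape Pod stores and matches custom voice commands is not described here. As a rough illustration of how a local server can answer custom commands without a round trip to the cloud, the hypothetical Python sketch below matches an already-transcribed phrase against a small table of user-defined patterns and returns a spoken reply. The command table, the handler names, and the assumption that speech has already been converted to text are all illustrative; none of this reflects Escape Pod’s actual configuration format.

```python
import re
from typing import Callable, List, Optional, Tuple


def set_timer(match: re.Match) -> str:
    minutes = int(match.group(1))
    unit = "minute" if minutes == 1 else "minutes"
    return f"Timer set for {minutes} {unit}."


def switch_lights(match: re.Match) -> str:
    return f"Turning the lights {match.group(1)}."


# Hypothetical user-defined commands: a pattern over the transcribed phrase,
# paired with a handler that builds the spoken reply.
COMMANDS: List[Tuple[re.Pattern, Callable[[re.Match], str]]] = [
    (re.compile(r"set a timer for (\d+) minutes?"), set_timer),
    (re.compile(r"turn (on|off) the lights"), switch_lights),
]


def handle_locally(transcript: str) -> Optional[str]:
    """Match a transcript against the local command table.

    Returns the reply for the first matching command, or None so the
    caller can fall back to some other handler.
    """
    text = transcript.lower().strip().rstrip(".!?")
    for pattern, handler in COMMANDS:
        match = pattern.fullmatch(text)
        if match:
            return handler(match)
    return None


if __name__ == "__main__":
    for phrase in ["Set a timer for 5 minutes", "Turn off the lights!",
                   "What is the weather?"]:
        print(phrase, "->", handle_locally(phrase))
```

Keeping the table local is what makes the response fast and private: nothing in this lookup needs a network connection.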

Because Escape Pod connects users to Vector through a local server, it offers stronger security and privacy than a cloud connection. Running locally also shortens Vector’s response time for a more conversational experience.

Escape Pod also provides advanced customization options and extra enhancements. Its Open Source Extension Engine connects to third-party services that supply external information, such as weather and smart-device data. Firmware management lets users upload firmware images to the pod, which stores them and flashes them onto a connected robot during onboarding. With Behavior Logging, users can see what the pod hears through its web interface.

A purchase of Escape Pod includes an Escape Pod image for Raspberry Pi and an Escape Pod license for one robot. Running the local server on a Raspberry Pi 4 Starter Kit (2/4/8GB), Raspberry Pi 4 EXTREME Kit (2/4/8GB), or Raspberry Pi 4 MAX Kit (2/4/8GB) gives the best user experience, although Pi 3B+ and other Pi 4 configurations also work.

Open Source Kit for Robots (OSKR)

Vector supports the Open Source Kit for Robots (OSKR), which lets dedicated hobbyists and professionals build the features they want. OSKR works like an app store for the robot and opens up its source code for modification. Because it can change behaviors, animations, and more, OSKR offers a far deeper experience than the existing SDK.