Revisiting AI's Ethical Dilemma: Balancing Promise and Peril

Article #4 of the Confronting AI series: AI's rapid rise brings transformative potential and serious risks; without transparency, regulation, education, and justice, unchecked deployment may entrench bias, erode trust, and harm society more than it helps.

AI Generated Image by Mouser Electronics

This is the fourth article in our multi-part Confronting AI series, brought to you by Mouser Electronics. Based on the Methods: Confronting AI e-magazine, this series explores how artificial intelligence is reshaping engineering, product design, and the ethical frameworks behind emerging technologies. Each article examines real-world opportunities and unresolved tensions—from data centers and embedded ML to regulation, adoption, and ethics. Whether you're developing AI systems or assessing their broader impact, the series brings clarity to this rapidly evolving domain.

"AI Everywhere" explains how AI extends beyond LLMs into vision, time series, ML, and RL.

"The Paradox of AI Adoption" focuses on trust and transparency challenges in AI adoption.

“Repowering Data Centers for AI” explores powering data centers sustainably for AI workloads.

“Revisiting AI’s Ethical Dilemma” examines ethical risks and responsible AI deployment.

“Overcoming Constraints for Embedded ML” presents ways to optimize ML models for embedded systems.

“The Regulatory Landscape of AI” discusses AI regulation and balancing safety with innovation.

Generative AI, trained on enormous datasets, has come to dominate the conversation about artificial intelligence, sending enthusiasts rushing to find its world-changing application. The current scramble to apply this burgeoning technology to every facet of life is a far cry from the measured approach many once envisioned.

The unchecked momentum of AI in real-world applications has sharpened our ethical paradox: How can we harness its immense potential for good while minimizing its capacity for harm? A measured, ethical approach is more crucial than ever, because the rapid proliferation of AI often puts innovation ahead of careful consideration, with real-world consequences that should not be overlooked.

In this article, we explore the ethical risks of AI deployment, from hallucinations and bias to real-world misuse, and examine the principles needed to guide responsible development. We also highlight promising use cases that show how AI can be a force for good when aligned with societal values.

Ethical Risks of AI Deployment

Leading AI companies such as OpenAI, Google, and Anthropic have made significant advances. Large language models (LLMs) and other generative models are clearly generations beyond typical voice assistants. Give them a prompt describing what you want, and they usually deliver: you can ask questions, generate papers and articles, create new artwork, and even work through complex math and logic problems. Despite the promise of generative AI, however, its hasty deployment in the real world has not been without issues.

Using generative AI without sufficient oversight raises critical ethical concerns. For example, OpenAI advertises its speech-recognition transcription tool, Whisper, as "approach[ing] human level robustness and accuracy."[1] While Whisper offers impressive speech-to-text capabilities, it has also been found to invent text that was never spoken.[2] Such fabrications, known as AI hallucinations, can arise in interactions with LLMs and other generative models.

The extent of the problem is unclear, but a study conducted in 2023 by researchers at Cornell University and other institutions found that of 13,140 audio samples run through the Whisper application programming interface (API), 1.4% of the transcriptions contained hallucinations. Of those hallucinations, 38% included harmful content such as references to violence, fabricated names or other inaccuracies, or websites not mentioned in the audio.[3] Despite these known deficiencies, hospitals and other mission-critical institutions continue to deploy the technology, often with little thought given to the consequences.
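As a rough back-of-the-envelope check, the percentages above can be turned into approximate counts. This is a sketch that assumes both the 1.4% and 38% figures apply to the full 13,140-sample set; because the percentages are rounded, the resulting counts are estimates, not figures taken from the study itself.

```python
# Back-of-the-envelope estimate from the rounded percentages quoted above.
# Assumption: both rates apply to the full 13,140-sample set; the study's
# exact counts may differ slightly because the percentages are rounded.
TOTAL_SAMPLES = 13_140
HALLUCINATION_RATE = 0.014  # 1.4% of transcriptions contained hallucinations
HARMFUL_SHARE = 0.38        # 38% of those hallucinations were harmful


def estimate_counts(total, rate, share):
    """Return (approx. transcriptions with hallucinations, approx. harmful ones)."""
    hallucinated = round(total * rate)
    harmful = round(hallucinated * share)
    return hallucinated, harmful


hallucinated, harmful = estimate_counts(TOTAL_SAMPLES, HALLUCINATION_RATE, HARMFUL_SHARE)
print(f"~{hallucinated} transcriptions with hallucinations, ~{harmful} of them harmful")
# prints "~184 transcriptions with hallucinations, ~70 of them harmful"
```

A low percentage can still translate into a meaningful absolute number of harmful errors once a tool runs at scale.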

The news industry offers another cautionary tale. Hoping to make their operations more efficient, journalists and editors are adopting the technology in surprisingly large numbers. It is not clear whether the technology actually saves time, since a reliable news organization may spend as much time fact-checking AI output as it would have spent on the research itself. Less reliable organizations have published fully AI-generated stories only to correct or retract them later because they contained errors or fabricated information.[4] The ethical implications are clear: Without rigorous oversight, these tools risk eroding public trust in vital institutions.

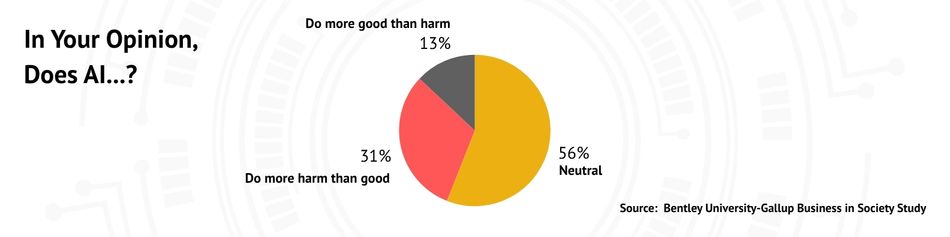

Meanwhile, traditional AI models continue to be deployed to make decisions that greatly affect human lives, including job performance evaluations, hiring, lending, and criminal justice, among many others. A 2024 survey by Bentley University and Gallup found that 56% of Americans surveyed viewed AI tools as inherently neutral.[5] However, humans develop AI models, and they must decide how those models are trained: they select the training data and make trade-offs that affect outcomes. Standard performance metrics used in AI development, such as precision and recall, often pull against each other, so developers who tune a model toward one metric embed ethical choices in the technology, frequently without recognizing those decisions as ethical ones.
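To make the point about competing metrics concrete, the sketch below scores a small, entirely hypothetical set of predictions at several decision thresholds. It assumes a binary classifier that outputs a score between 0 and 1 and flags a case when the score meets the threshold; the scores, labels, and the `precision_recall` helper are illustrative only and are not drawn from any real system.

```python
# Hypothetical model scores and true labels (1 = the case is actually positive).
SCORES = [0.95, 0.85, 0.75, 0.65, 0.55, 0.45, 0.35, 0.25]
LABELS = [1, 1, 0, 1, 0, 1, 0, 0]


def precision_recall(scores, labels, threshold):
    """Flag every case with score >= threshold, then compute precision and recall."""
    tp = fp = fn = 0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and label == 1:
            tp += 1  # correctly flagged
        elif flagged and label == 0:
            fp += 1  # flagged, but not actually positive
        elif not flagged and label == 1:
            fn += 1  # positive case the model missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


for threshold in (0.3, 0.5, 0.8):
    p, r = precision_recall(SCORES, LABELS, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

In this toy data, raising the threshold makes each flag more trustworthy (higher precision) while missing more true cases (lower recall). Deciding whether a missed case or a false accusation is worse is a value judgment, which is exactly the kind of choice that gets made implicitly when a threshold is tuned.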

Public institutions especially need to consider the implications of the technology they adopt. What processes do police departments use before deciding to deploy facial recognition applications or systems that purport to predict where crime is most likely to occur? What training do officers receive before using the technology? We should be asking these questions of the organizations operating on our behalf.

And yet, our AI mania continues. WIRED magazine and Lighthouse Reports investigated a machine learning system that the city of Rotterdam in the Netherlands used to detect welfare fraud.[6] After analyzing the system, they concluded that its models did no better than random guessing at identifying actual fraud and that bias in the algorithm could leave certain populations vulnerable to discrimination. While Rotterdam has since discontinued its welfare fraud detection system, government agencies around the world use similar systems in ways largely unknown to citizens.[7]

The Center for Family and Community Engagement at North Carolina State University similarly reports that AI-driven decision-making in child welfare can perpetuate discrimination through biased training data and the unconscious biases of developers, potentially harming marginalized communities by reinforcing systemic inequalities.[8] Unfair risk assessments, data privacy concerns, and a lack of human oversight all raise serious ethical questions. Still, AI also has the potential to improve child welfare by streamlining administrative tasks and reducing social worker burnout. The challenge lies in implementing AI ethically, with rigorous oversight and safeguards that prevent bias and protect vulnerable populations. Initiatives such as NC State’s Embedding AI in Society Ethically (EASE) center play a vital role in addressing these concerns and promoting the responsible integration of AI into societal institutions like child welfare.

The Promise of Ethical AI

When responsibly developed and deployed, AI can help address some of our most pressing societal challenges. In healthcare, AI has strengthened tuberculosis detection in Vietnam, where combining traditional screening methods with advanced algorithms enables faster and more accurate diagnoses.[9] Tuberculosis is difficult to diagnose in many remote areas, especially where expensive medical equipment and expertise are not readily available. Ordinary smartphones running AI models trained on millions of short audio recordings are now being used for early detection of one of the world's deadliest infectious diseases: the technology simply listens to people's coughs.[10]

Similarly, AI-driven drug discovery tools are expediting the identification of lifesaving medications, potentially transforming public health outcomes.[11] One approach to developing antibiotics involves extremely complicated sequencing of bacterial DNA.[12] Foundation models similar to those behind chatbots like ChatGPT are being applied to build libraries of candidate drugs that can stand up to increasingly resistant bacteria.

In education, AI-powered personalized learning platforms are helping bridge gaps in student understanding by tailoring instruction to individual needs. Combined with human oversight, such tools demonstrate AI's ethical promise in fostering equity and progress. Even niche efforts, such as preserving disappearing Indigenous languages, can benefit from recent developments in AI. These social problems rarely get the attention or funding needed to drive solutions, but with AI tools in hand, scientists and activists can tackle issues that matter to them even when the wider world sees only "bigger" problems to deal with.

An Ethical Imperative to Navigate AI's Dual Nature

At its core, AI is a double-edged sword: a tool capable of both amplifying human ingenuity and entrenching systemic inequities. Developers, policymakers, and society at large share responsibility for navigating this tension. Ethical principles must underpin every stage of AI's development and deployment so that its use aligns with broader social values.

At a minimum, certain ethical principles should be applied before using any technology that will impact lives in this way:

Transparency and accountability: AI systems, especially those deployed in high-stakes environments, must be auditable and explainable. Decisions made by these systems should be interpretable by nonexperts, which will foster trust and accountability.

Regulation and oversight: Policymakers must establish clear ethical guidelines governing AI use. These should mandate post-deployment accuracy assessments, provide avenues for recourse when systems fail, and address the environmental impact of AI development. Holding vendors accountable for harm caused by their systems would give them a strong incentive to make ethical considerations integral to AI design.

Education and literacy: Societal understanding of AI's limitations and potential harms is essential. Integrating AI literacy into school curricula and professional training programs will empower individuals to evaluate AI technologies critically and use them responsibly.

Justice: AI developers and users must proactively address inherent biases in training data. By embedding fairness into system design and rigorously testing for disparate impacts, developers can enable AI to be a force for equity rather than division.
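One common way to test for disparate impact is to compare selection rates across groups. The "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures treats a group's selection rate below 80% of the highest group's rate as a signal worth investigating. The sketch below applies that heuristic to hypothetical outcomes from a hiring model; the group names, counts, and the `disparate_impact_ratios` function are illustrative assumptions, and the 0.8 cutoff is a screening heuristic, not a legal or statistical verdict.

```python
# Hypothetical outcomes from a hiring model: group -> (selected, applicants).
OUTCOMES = {
    "group_a": (50, 100),
    "group_b": (30, 100),
}
FOUR_FIFTHS = 0.8  # screening threshold from the four-fifths rule


def disparate_impact_ratios(outcomes):
    """Return each group's selection rate divided by the highest group's rate."""
    rates = {g: selected / applicants for g, (selected, applicants) in outcomes.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


ratios = disparate_impact_ratios(OUTCOMES)
for group, ratio in ratios.items():
    status = "review" if ratio < FOUR_FIFTHS else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({status})")
```

A flagged ratio says nothing about why the gap exists; it tells auditors where to look more closely.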

A growing industry has formed around the notion of responsible AI. Academics and consulting firms are developing sets of practices to help organizations assess the risks of AI systems, including oversight mechanisms to address bias, privacy infringement, and misuse.

Transparent decision-making and explainability are key values that should be built into AI systems. Algorithmic audits are being used to investigate the outcomes of automated decision-making systems, and responsible AI researchers have borrowed experimental methods from the social sciences to measure discrimination and other negative consequences.

Another important consideration in these studies is who bears the cost of AI's mistakes. No model is 100% accurate, but do false positives tend to hurt some groups of people more than others? Defining fairness and balancing trade-offs (e.g., benefits to society vs. disparate outcomes for different individuals) remain complex, unresolved challenges. There is no industry standard yet, but academics and local governments are beginning to make progress toward one.
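Who bears a model's errors can be checked directly by breaking error rates out by group. The sketch below uses a small hypothetical dataset, not real data, in which two groups receive identical overall accuracy while one group's only error is a false positive and the other's is a false negative; the record format and the `per_group_rates` helper are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical audit records: (group, true label, model prediction), 1 = flagged.
RECORDS = [
    ("A", 0, 0), ("A", 0, 0), ("A", 0, 0), ("A", 1, 0), ("A", 1, 1), ("A", 1, 1),
    ("B", 0, 0), ("B", 0, 0), ("B", 0, 1), ("B", 1, 1), ("B", 1, 1), ("B", 1, 1),
]


def per_group_rates(records):
    """Compute accuracy, false positive rate, and false negative rate per group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        # "t"/"f": prediction correct or not; "p"/"n": model flagged or not.
        key = ("t" if y_true == y_pred else "f") + ("p" if y_pred == 1 else "n")
        counts[group][key] += 1
    rates = {}
    for group, c in counts.items():
        negatives = c["tn"] + c["fp"]  # cases that should not have been flagged
        positives = c["tp"] + c["fn"]  # cases that should have been flagged
        rates[group] = {
            "accuracy": (c["tp"] + c["tn"]) / sum(c.values()),
            "fpr": c["fp"] / negatives if negatives else float("nan"),
            "fnr": c["fn"] / positives if positives else float("nan"),
        }
    return rates


for group, r in sorted(per_group_rates(RECORDS).items()):
    print(f"group {group}: accuracy={r['accuracy']:.2f}, "
          f"FPR={r['fpr']:.2f}, FNR={r['fnr']:.2f}")
```

Reporting only overall accuracy would hide that group B's mistake is a false accusation while group A's is a missed case, which is the kind of disparity the fairness question raises.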

Conclusion

The paradox of AI stems from several factors, chief among them the uneasy coexistence of its transformative potential and its inherent risks. To navigate this era responsibly, we must anchor AI development and deployment in ethical principles. Only through transparency, regulation, education, and a commitment to justice can we ensure that AI serves as a tool for collective good rather than a driver of harm. As we move forward, the question is not whether AI can achieve remarkable feats but whether it can do so ethically and equitably. The answer depends on our collective willingness to prioritize humanity over haste.

References

[1] OpenAI. Introducing Whisper [Internet]. 2022 Sep 21. Available from: https://openai.com/index/whisper/

[2] Burke G, Schellmann H. Researchers say an AI-powered transcription tool used in hospitals invents things no one ever said. Associated Press [Internet]. 2024 Oct 26. Available from: https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14

[3] Koenecke A, Choi ASG, Mei KX, Schellmann H, Sloane M. Careless Whisper: Speech-to-Text Hallucination Harms [Internet]. arXiv; 2024 Feb. Available from: https://arxiv.org/abs/2402.08021v2

[4] Sato M, Roth E. CNET found errors in more than half of its AI-written stories [Internet]. The Verge; 2023 Jan 25. Available from: https://www.theverge.com/2023/1/25/23571082/cnet-ai-written-stories-errors-corrections-red-ventures

[5] Ray J. Americans Express Real Concerns About Artificial Intelligence [Internet]. Gallup; 2024 Aug 27. Available from: https://news.gallup.com/poll/648953/americans-express-real-concerns-artificial-intelligence.aspx

[6] Constantaras E, Geiger G, Braun J-C, Mehrotra D, Aung H. Inside the Suspicion Machine [Internet]. WIRED; 2023 Mar 6. Available from: https://www.wired.com/story/welfare-state-algorithms/

[7] OECD. Governing with Artificial Intelligence: Are governments ready? [Internet]. OECD Publishing; 2024 Jun 13. Available from: https://www.oecd.org/en/publications/governing-with-artificial-intelligence_26324bc2-en.html

[8] Doyle AG. How the Use of AI Impacts Marginalized Populations in Child Welfare [Internet]. North Carolina State University; 2024 Dec 2. Available from: https://cface.chass.ncsu.edu/news/2024/12/02/how-the-use-of-ai-impacts-marginalized-populations-in-child-welfare/

[9] Innes AL, Lebrun V, Hoang GL, Martinez A, Dinh N, Nguyen TTH, et al. An Effective Health System Approach to End TB: Implementing the Double X Strategy in Vietnam. Glob Health Sci Pract. 2024 Jun 27;12(3):e2400024. doi:10.9745/GHSP-D-24-00024. Available from: https://pmc.ncbi.nlm.nih.gov/articles/PMC11216706/

[10] Pappas P. Cough analysis tool screens for TB via smartphone [Internet]. Digital Health Insights; 2025 Jan 2023. Available from: https://www.dhinsights.org/news/cough-analysis-tool-screens-for-tb-via-smartphone

[11] Paul D, Sanap G, Shenoy S, Kalyane D, Kalia K, Tekade RK. Artificial intelligence in drug discovery and development. Drug Discov Today. 2021 Jan;26(1):80–93. Epub 2020 Oct 21. doi:10.1016/j.drudis.2020.10.010. Available from: https://pmc.ncbi.nlm.nih.gov/articles/PMC7577280/

[12] Swanson K, Liu G, Catacutan DB, et al. Generative AI for designing and validating easily synthesizable and structurally novel antibiotics. Nat Mach Intell. 2024 Mar 22;6:338–353. doi:10.1038/s42256-024-00809-7. Available from: https://www.nature.com/articles/s42256-024-00809-7

This article was originally published in “Methods: Confronting AI,” an e-magazine by Mouser Electronics. It has been substantially edited by the Wevolver team and Ravi Y Rao for publication on Wevolver. Upcoming pieces in this series will continue to explore key themes including AI adoption, embedded intelligence, and the ethical dilemmas surrounding emerging technologies.