The Paradox of AI Adoption: Why Trust Still Lags Behind Technology

Article #2 of Confronting AI Series: AI can enhance decisions and refine processes, yet organizations are hesitant to integrate it. Boosting trust through education, clear policies, transparent models, and improved human-AI collaboration will encourage wider adoption.

This is the second article in our multi-part Confronting AI series, brought to you by Mouser Electronics. Based on the Methods: Confronting AI e-magazine, this series explores how artificial intelligence is reshaping engineering, product design, and the ethical frameworks behind emerging technologies. Each article examines real-world opportunities and unresolved tensions—from data centers and embedded ML to regulation, adoption, and ethics. Whether you're developing AI systems or assessing their broader impact, the series brings clarity to this rapidly evolving domain.

"AI Everywhere" explains how AI extends beyond LLMs into vision, time series, ML, and RL.

"The Paradox of AI Adoption" focuses on trust and transparency challenges in AI adoption.

“Repowering Data Centers for AI” explores powering data centers sustainably for AI workloads.

“Revisiting AI’s Ethical Dilemma” revisits ethical risks and responsible AI deployment.

“Overcoming Constraints for Embedded ML” presents ways to optimize ML models for embedded systems.

“The Regulatory Landscape of AI” discusses AI regulation and balancing safety with innovation.

Artificial intelligence (AI) stands at the forefront of technological innovation, poised to transform many aspects of enterprise operations through automation and data-driven insights. Yet, while businesses are enticed by promises of greater efficiency and competitive advantage, many hesitate to embrace AI. Concerns over its "black box" nature and risks like AI hallucinations fuel this caution.

This article explores how enterprises can build trust in AI. By setting clear boundaries on its use, enhancing transparency in AI models, and adopting a collaborative approach that augments rather than replaces human workers, organizations can navigate the complexities of AI adoption and unlock its transformative potential.

Decoding the Hype Versus the Reality of AI Adoption

The vision of how AI could transform industries has permeated nearly every sector. From healthcare to finance to retail, AI holds the potential to streamline workflows, enable faster decision-making, and uncover new business opportunities. AI has already demonstrated significant promise in data analysis, predictive analytics, automation of repetitive tasks, and natural language processing. These applications are particularly enticing for enterprises because they promise greater operational efficiency, lower costs, and new avenues for innovation.

For example, in data analysis, AI can process massive datasets to identify trends, anomalies, or patterns far beyond the capabilities of human analysts. In customer service, AI-driven chatbots can handle a high volume of inquiries, offering quick responses and freeing human agents to focus on more complex issues. The allure of these advancements is hard to overlook, and the possibility of embedding AI into core business processes has generated excitement.

Despite the optimistic forecasts, enterprise AI adoption has progressed more slowly than expected. According to a survey conducted in 2021–2022,[1] more than 70% of enterprises were still in the exploratory or pilot phase of AI adoption, with only a small percentage operationalizing AI at scale.

Several factors drive this cautious approach. Fear of the unknown is a significant barrier: AI is still relatively new to many executives, and that unfamiliarity breeds apprehension. Many enterprise leaders understandably worry about risks such as data breaches, ethical issues, or unintended consequences of automated decisions, not to mention the technical complexity of deploying and managing AI systems.

Regulatory scrutiny around data usage, privacy, and potential biases adds to this hesitation. Organizations understand that improper AI deployment could cause operational errors, damage their reputation, and lead to legal repercussions. So, while the promise of AI is exciting, enterprises have taken a slow, measured approach to adoption. That picture has begun to shift: after the rapid rise of generative AI in 2023, 72% of respondents to a 2024 McKinsey survey reported that their organizations had adopted AI in at least one business function.[2]

Addressing the Black Box Nature of AI Models

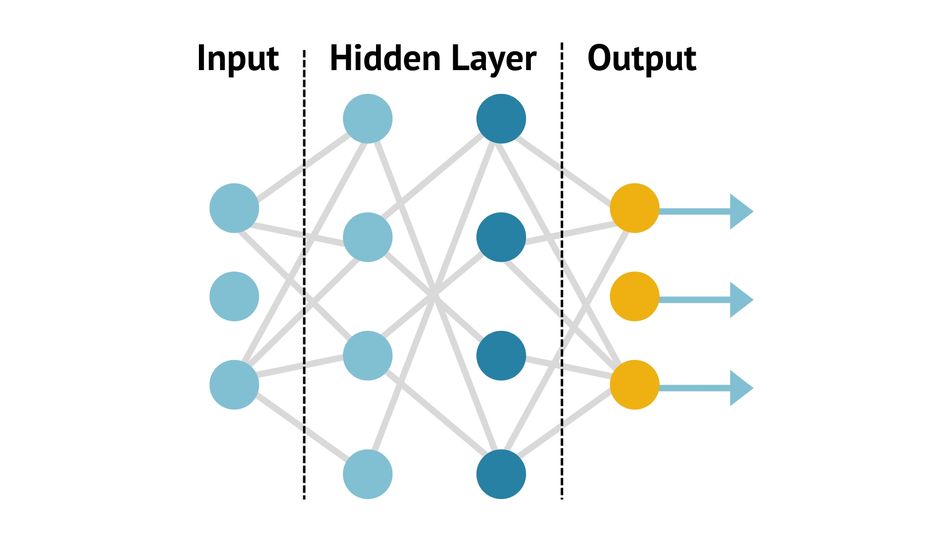

Another significant challenge to adoption is the black box nature associated with many advanced AI models, especially those using deep learning and neural networks. While these systems can process inputs and generate outputs, humans do not readily understand the how and why behind their decisions.

This opacity arises because these models use complex algorithms involving millions or billions of parameters. For example, a deep neural network designed for image recognition might consist of numerous layers, each extracting different features from the input data. The interactions within these layers are mathematically complex, and even experts struggle to trace the exact pathway leading to a specific output.

Moreover, AI models often rely on vast amounts of training data to learn patterns and make predictions. The relationships and correlations they identify are so multifaceted that they defy straightforward human interpretation. As a result, stakeholders are left with a powerful tool whose decision-making process is largely mysterious.

The black box nature of AI significantly impacts the trust that enterprises and stakeholders place in the technology. Ensuring that the AI isn't perpetuating biases present in training data is challenging without transparency into AI's decision-making process. For example, a financial institution using AI to approve loan applications may frustrate applicants and face regulatory scrutiny for potential discrimination if the AI denies loans without providing a clear rationale.

A real-world example involves a major technology company's recruitment AI that favored male candidates over female candidates because it was trained on data reflecting the company's historically male-dominated hiring patterns.[3] A lack of transparency delayed the identification of this bias, leading to reputational damage and raising ethical concerns. Public incidents like this underscore how a lack of transparency may lead to mistrust and hesitancy to adopt AI solutions, creating significant barriers to leveraging AI's capabilities and hindering the collaborative relationship between humans and AI systems.

Confronting the Challenge of AI Hallucinations

AI hallucinations refer to instances in which an AI system generates incorrect, nonsensical, or entirely fabricated outputs that are presented as plausible and confident responses. These hallucinations often occur in advanced large language models and generative AI systems that predict and create responses based on learned patterns in data. For example, an AI chatbot might provide a detailed answer to a question about a nonexistent event, complete with fabricated dates and figures, that users cannot easily recognize as false without external verification.

In a widely reported 2023 case, a lawyer inadvertently cited nonexistent court cases in a legal brief after relying on information provided by an AI language model.[4] The model confidently generated case names, citations, and summaries that were entirely fabricated, and the lawyer included them in official court documents. The court later discovered that these cases did not exist, resulting in professional embarrassment and sanctions for the lawyer involved.

Such hallucinations are particularly problematic because they appear accurate, and users may not have the expertise to question the information provided.

The risks AI hallucinations pose are significant, especially when AI systems are integrated into critical decision-making processes. Inaccurate outputs may lead to faulty business strategies, misguided policy decisions, or the dissemination of false information to the public. In the legal example, the lawyer's reliance on incorrect AI-generated information jeopardized the case and raised ethical concerns.

Furthermore, the risk compounds as AI-generated content increasingly feeds back into training datasets for future models. If hallucinations are not identified and filtered out, they become part of the data used to train new AI systems; this perpetuates and amplifies errors, causing a snowball effect that undermines the overall quality and trustworthiness of AI technologies.

Preventing the Snowball Effect of Hallucinations

Enterprises must maintain robust human oversight in content creation and data curation to prevent AI hallucinations from propagating. This involves conducting regular audits of AI outputs and implementing protocols to verify information before it is used or published.
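As a minimal sketch of such a verification protocol, the Python below blocks publication of an AI-drafted document until every source it cites is confirmed against a trusted index, the kind of check that would have caught the fabricated cases described earlier. The `Draft` type, the function names, and the in-memory `trusted_index` set are hypothetical stand-ins; a real deployment would query an authoritative database and would need a reliable way to extract citations from text.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Draft:
    """An AI-generated draft plus the sources it claims to cite (hypothetical structure)."""
    text: str
    citations: List[str] = field(default_factory=list)


def find_unverified(draft: Draft, trusted_index: Set[str]) -> List[str]:
    """Return every citation that the trusted index cannot confirm."""
    return [c for c in draft.citations if c not in trusted_index]


def can_publish(draft: Draft, trusted_index: Set[str]) -> bool:
    """Allow publication only when every cited source has been verified."""
    return not find_unverified(draft, trusted_index)


# Usage with placeholder citation strings (illustrative only).
index = {"Case A v. B"}
draft = Draft("Summary of precedent...", citations=["Case A v. B", "Case C v. D"])
print(find_unverified(draft, index))  # ['Case C v. D']
print(can_publish(draft, index))      # False
```

A draft with no citations passes this check trivially, so a gate like this would complement human review rather than replace it.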

Continuous Monitoring

Ongoing evaluation of AI systems is crucial. By continuously monitoring performance, organizations can identify patterns of errors or inaccuracies that may indicate underlying issues with the AI model or its training data. This proactive approach allows for timely interventions to correct problems before they escalate.
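A minimal sketch of continuous monitoring, assuming each AI output is eventually marked correct or incorrect by a reviewer or an automated check: the class below keeps a rolling window of recent results and raises an alert when the error rate exceeds a threshold. The class name, window size, and default threshold are illustrative assumptions, not recommended values.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the share of flagged outputs over the most recent `window` reviewed outputs.

    Hypothetical helper for illustration; thresholds must be set per use case.
    """

    def __init__(self, window: int = 100, alert_threshold: float = 0.05) -> None:
        self.results = deque(maxlen=window)  # True means the output was flagged as wrong
        self.alert_threshold = alert_threshold

    def record(self, was_error: bool) -> None:
        self.results.append(was_error)  # the oldest result drops out once the window is full

    def error_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self) -> bool:
        # Wait for a full window so a handful of early errors cannot trigger an alert.
        full = len(self.results) == self.results.maxlen
        return full and self.error_rate() > self.alert_threshold


monitor = ErrorRateMonitor(window=10, alert_threshold=0.2)
for flagged in [False] * 7 + [True] * 3:
    monitor.record(flagged)
print(monitor.error_rate(), monitor.should_alert())  # 0.3 True
```

A rolling window favors recent behavior, so a model that degrades after a data shift is noticed even if its long-run average still looks acceptable.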

Feedback Loops

Establishing feedback mechanisms enables AI systems to learn from mistakes under human guidance. When errors are identified, they should be flagged and fed back into the system as labeled errors so that models can be adjusted and future performance improved. This iterative process refines AI models over time and reduces the likelihood of repeated hallucinations.
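One way to sketch this loop, assuming reviewers supply a corrected answer whenever they flag an output: corrections are stored, reused immediately for the same prompt, and exported as (input, corrected output) pairs for a later retraining or evaluation round. All names here are hypothetical; exact prompt matching is a simplification, and whether corrections feed fine-tuning, evaluation sets, or retrieval depends on the system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Correction:
    prompt: str
    model_output: str
    corrected_output: str
    reviewer: str


class FeedbackStore:
    """Hypothetical store of reviewer corrections used to guide future responses and retraining."""

    def __init__(self) -> None:
        self._corrections: Dict[str, Correction] = {}

    def flag(self, prompt: str, model_output: str, corrected_output: str, reviewer: str) -> None:
        # Record the error with its human-approved fix; a newer correction replaces an older one.
        self._corrections[prompt] = Correction(prompt, model_output, corrected_output, reviewer)

    def lookup(self, prompt: str) -> Optional[str]:
        correction = self._corrections.get(prompt)
        return correction.corrected_output if correction else None

    def training_pairs(self) -> List[Tuple[str, str]]:
        # Export (input, corrected output) pairs for the next fine-tuning or evaluation round.
        return [(c.prompt, c.corrected_output) for c in self._corrections.values()]


def answer(prompt: str, model: Callable[[str], str], store: FeedbackStore) -> str:
    """Prefer a human-verified correction over a fresh model output for a known prompt."""
    corrected = store.lookup(prompt)
    return corrected if corrected is not None else model(prompt)


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call that hallucinates a confident answer.
    return "The event took place in 1999."


store = FeedbackStore()
question = "When did the event happen?"
store.flag(question, fake_model(question), "No such event is on record.", "analyst_1")
print(answer(question, fake_model, store))  # No such event is on record.
print(store.training_pairs())
```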

Setting Boundaries for Trustworthy AI Use

To build trust in AI, enterprises must define clear boundaries around its use. This involves identifying specific use cases in which AI has demonstrated reliability and its outputs can be validated. For instance, AI might be trusted for data entry automation or preliminary data analysis, while areas like strategic decision-making or customer communications still require human oversight.

By setting these parameters, organizations can ensure that AI serves as a tool within well-understood limits. This approach reduces the risk of unexpected outcomes and helps stakeholders feel more comfortable with AI's role in the workflow.
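These boundaries can be written down as an explicit, default-deny policy. The sketch below is a hypothetical Python registry: the use-case keys mirror the examples in this section, and the three oversight levels are an assumption about how an organization might tier its AI use.

```python
from enum import Enum


class Oversight(Enum):
    AUTOMATED = "automated"          # reliability demonstrated, outputs can be validated
    HUMAN_REVIEW = "human_review"    # AI may assist, but a person signs off
    NOT_PERMITTED = "not_permitted"  # outside the approved boundary


# Hypothetical, illustrative entries; each organization defines its own.
AI_USE_POLICY = {
    "data_entry": Oversight.AUTOMATED,
    "preliminary_data_analysis": Oversight.AUTOMATED,
    "strategic_decision": Oversight.HUMAN_REVIEW,
    "customer_communication": Oversight.HUMAN_REVIEW,
}


def oversight_for(use_case: str) -> Oversight:
    # Default-deny: any use case not explicitly approved falls outside the boundary.
    return AI_USE_POLICY.get(use_case, Oversight.NOT_PERMITTED)


print(oversight_for("data_entry").value)              # automated
print(oversight_for("customer_communication").value)  # human_review
print(oversight_for("loan_approval").value)           # not_permitted
```

Defaulting to "not permitted" means a new use case must be reviewed and added deliberately rather than drifting into production unnoticed.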

Effective risk management strategies are essential for monitoring AI outputs and catching errors early. This includes implementing checks and balances such as human-in-the-loop systems, in which human experts review and validate AI-generated results before they are executed or disseminated.
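A human-in-the-loop check can be sketched as a review queue in which AI outputs wait as pending until a person approves or rejects them, and only approved items are released. This minimal Python version rests on illustrative assumptions (in-memory storage, hypothetical class names) and is not a production workflow.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ReviewItem:
    item_id: int
    ai_output: str
    status: Status = Status.PENDING
    reviewer_note: str = ""


@dataclass
class ReviewQueue:
    """Hypothetical in-memory review queue for AI-generated results."""
    items: Dict[int, ReviewItem] = field(default_factory=dict)
    next_id: int = 1

    def submit(self, ai_output: str) -> int:
        """Hold an AI-generated result for review; nothing is released yet."""
        item = ReviewItem(self.next_id, ai_output)
        self.items[item.item_id] = item
        self.next_id += 1
        return item.item_id

    def decide(self, item_id: int, approve: bool, note: str = "") -> None:
        """Record a reviewer's decision; each item can be decided only once."""
        item = self.items[item_id]
        if item.status is not Status.PENDING:
            raise ValueError(f"item {item_id} has already been reviewed")
        item.status = Status.APPROVED if approve else Status.REJECTED
        item.reviewer_note = note

    def releasable(self) -> List[str]:
        """Only human-approved outputs may be executed or disseminated."""
        return [i.ai_output for i in self.items.values() if i.status is Status.APPROVED]


queue = ReviewQueue()
a = queue.submit("Draft reply to customer #1")
b = queue.submit("Draft reply to customer #2")
queue.decide(a, approve=True)
queue.decide(b, approve=False, note="Quotes a policy that does not exist")
print(queue.releasable())  # ['Draft reply to customer #1']
```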

Enterprises should also limit AI autonomy, especially in critical processes where mistakes have severe consequences. By controlling the degree of independence granted to AI systems, organizations can prevent them from making unilateral decisions that could lead to adverse outcomes.
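As an illustration of capping AI autonomy, the sketch below assumes a hypothetical refund-approval assistant: the AI may act on its own only for small amounts and only when its confidence score clears a threshold, and everything else goes to a person. The scenario, the limits, and the function names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyLimits:
    max_auto_amount: float  # largest action the AI may take without a person (hypothetical)
    min_confidence: float   # below this score, a person must decide (hypothetical)


def route_action(amount: float, confidence: float, limits: AutonomyLimits) -> str:
    """Decide whether an AI-proposed action runs automatically or is escalated."""
    if amount > limits.max_auto_amount:
        return "escalate: exceeds autonomy limit"
    if confidence < limits.min_confidence:
        return "escalate: low model confidence"
    return "auto-execute"


refund_limits = AutonomyLimits(max_auto_amount=100.0, min_confidence=0.9)
print(route_action(40.0, 0.95, refund_limits))   # auto-execute
print(route_action(500.0, 0.99, refund_limits))  # escalate: exceeds autonomy limit
print(route_action(40.0, 0.60, refund_limits))   # escalate: low model confidence
```

Checking impact before confidence is a deliberate choice: a high-stakes action is escalated no matter how confident the model appears, and model confidence scores are not always well calibrated, so they make a weak safeguard on their own.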

Increasing Transparency in AI Models

Transparency is crucial for building trust, allowing users to see the rationale behind AI-generated outcomes. When users understand how an AI system arrives at its conclusions, they are more likely to trust and accept its recommendations. Additionally, transparency facilitates regulatory compliance by providing documentation and explanations required by oversight bodies, particularly in industries with strict governance standards.

One way to ensure transparency is by using explainable AI (XAI)—methods and techniques that make the outputs of AI systems more understandable to humans. XAI aims to shed light on the internal workings of AI models by providing insights into how decisions are made.

Two methods enterprises can employ are local interpretable model-agnostic explanations (LIME) and Shapley additive explanations (SHAP). LIME explains individual predictions by approximating the complex model in the neighborhood of a single input with a simpler, interpretable model, which lets stakeholders see which features most influenced a specific decision. SHAP draws on cooperative game theory to assign each feature an importance value for a given prediction; aggregating these values across many predictions also provides a global view of the model's behavior.
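To make the LIME idea concrete, here is a simplified sketch for numeric features: perturb the instance, weight each perturbed sample by its closeness to the original, and fit a weighted linear model whose coefficients serve as the local explanation. It is an assumption-laden illustration in plain Python, not the `lime` package, which among other differences represents tabular features in an interpretable (often discretized) form; the sampling scale, kernel width, and the `black_box` model are arbitrary, hypothetical choices.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(model: Callable[[List[float]], float], x: Sequence[float],
                 num_samples: int = 500, scale: float = 1.0,
                 kernel_width: float = 1.0, seed: int = 0) -> List[float]:
    """Return per-feature weights of a locally weighted linear surrogate around x."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]          # perturb the instance
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)                                # leading 1.0 is the intercept
        targets.append(model(z))                              # query the black box
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # nearby samples count more
    # Weighted least squares via the normal equations (X^T W X) w = X^T W y.
    k = len(x) + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights)) for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept, keep per-feature effects


def black_box(z: List[float]) -> float:
    # Hypothetical stand-in for an opaque model: nonlinear in the first feature.
    return z[0] ** 2 + 0.5 * z[1]


print(lime_explain(black_box, [3.0, 1.0]))
```

Because `black_box` is smooth, the surrogate's coefficients approximate its local slopes at (3, 1), about 6 and 0.5, up to sampling noise; for a model that is truly linear, the fit recovers its coefficients exactly.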

By integrating these techniques, organizations can make AI decision-making processes more transparent and facilitate better oversight.
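The Shapley value behind SHAP can be computed exactly when a model has only a few features, which makes the game-theory idea easy to inspect. The sketch below enumerates every coalition of features and, as a simplifying assumption, fixes features outside a coalition at a single baseline value; the open-source `shap` library instead relies on efficient estimators and typically a background dataset, so this illustrates the definition rather than that library. The `credit_score` model is hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(model: Callable[[List[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley value of each feature for the prediction model(x).

    Features outside a coalition are set to their baseline value (an assumption).
    The cost grows as 2**n model calls, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Weight |S|!(n-|S|-1)!/n! averages over all orderings of the features.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi


def credit_score(z: List[float]) -> float:
    # Hypothetical toy model with an interaction between features 0 and 2.
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


phi = shapley_values(credit_score, x=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 6) for v in phi])  # [3.5, 6.0, 1.5]
print(round(sum(phi), 6))          # 11.0
```

The attributions add up to the difference between the explained prediction and the baseline prediction (11 - 0), and the interaction term's contribution of 3 is split evenly between features 0 and 2.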

Augmenting Humans Rather Than Replacing Them

Rather than viewing AI as a replacement for human workers, enterprises should adopt an augmentation strategy in which AI serves as a tool to enhance human capabilities. This perspective positions AI as a collaborator that handles routine or data-intensive tasks, which allows humans to focus on areas that require creativity, critical thinking, and emotional intelligence.

AI and human workers can collaborate effectively in several scenarios. In healthcare, AI algorithms assist radiologists by highlighting areas of concern in medical images, but the physician makes the final diagnosis. In customer service, AI chatbots handle common inquiries, while complex issues are escalated to human agents. This synergy leads to better outcomes, as it combines the efficiency of AI with the nuanced understanding of human professionals. This approach also leads to higher acceptance rates of AI technologies among employees, as it alleviates fears of job displacement and emphasizes the value of human expertise.

Integrating Practical Steps for Enterprises

Governance Policies

Enterprises need to develop clear AI governance policies that encompass ethical guidelines, accountability measures, and protocols for risk management. These policies should outline acceptable use cases, delineate responsibilities, and establish procedures for handling errors or unintended outcomes.

Training and Education

Investing in employee training programs is essential to equip staff with the skills needed to work effectively alongside AI. This includes technical training and education on AI's limitations, ethical considerations, and best practices for human-AI collaboration.

Stakeholder Engagement

Involving all stakeholders in the AI adoption process fosters a culture of transparency and inclusivity. By engaging employees, customers, regulators, and partners in discussions about AI initiatives, enterprises can address concerns, gather valuable feedback, and build broader trust in AI adoption.

Bridging the Trust Gap in AI Adoption

Despite AI's transformative potential, enterprises remain cautious owing to the black box nature of models and the risk of hallucinations, highlighting a tension between innovation and the need for reliability.

Building trust in AI is imperative; organizations can mitigate risks and foster stakeholder confidence by setting clear boundaries on its use, enhancing transparency, and focusing on augmenting rather than replacing human workers.

Enterprises are encouraged to take proactive steps in responsible adoption—developing comprehensive policies, investing in education, and engaging stakeholders—to unlock AI’s full potential while maintaining the integrity and trust essential for long-term success.

References

[1] Loukides M. AI adoption in the enterprise 2022. O’Reilly Radar [Internet]. 2022 Mar 31. Available from: https://www.oreilly.com/radar/ai-adoption-in-the-enterprise-2022/

[2] Singla A, Sukharevsky A, Yee L, Chui M, Hall B. The state of AI: How organizations are rewiring to capture value. McKinsey & Company [Internet]. 2025 Mar 12. Available from: https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

[3] Dastin J. Insight – Amazon scraps secret AI recruiting tool that showed bias against women. Reuters [Internet]. 2018 Oct 11. Available from: https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

[4] Weiser B. Here’s what happens when your lawyer uses ChatGPT. The New York Times [Internet]. 2023 May 27. Available from: https://www.nytimes.com/2023/05/27/nyregion/avianca-airline-lawsuit-chatgpt.html

This article was originally published in “Methods: Confronting AI,” an e-magazine by Mouser Electronics. It has been substantially edited by the Wevolver team and Ravi Y Rao for publication on Wevolver. Upcoming pieces in this series will continue to explore key themes including AI adoption, embedded intelligence, and the ethical dilemmas surrounding emerging technologies.