The Expanding Reach of AI: Exploring Intelligence Beyond LLMs

Article #1 of Confronting AI Series: AI is rapidly evolving beyond language models, quietly transforming fields like vision, prediction, and control. Explore how diverse AI techniques are solving complex challenges where text-based approaches fall short.

AI Generated Image by Mouser Electronics

This is the first article in our multi-part Confronting AI series, brought to you by Mouser Electronics. Based on the Methods: Confronting AI e-magazine, this series explores how artificial intelligence is reshaping engineering, product design, and the ethical frameworks behind emerging technologies. Each article examines real-world opportunities and unresolved tensions—from data centers and embedded ML to regulation, adoption, and ethics. Whether you're developing AI systems or assessing their broader impact, the series brings clarity to this rapidly evolving domain.

"AI Everywhere" explains how AI extends beyond LLMs into vision, time series, ML, and RL.

"The Paradox of AI Adoption" focuses on trust and transparency challenges in AI adoption.

“Repowering Data Centers for AI” explores powering data centers sustainably for AI workloads.

“Revisiting AI’s Ethical Dilemma” revisits ethical risks and responsible AI deployment.

“Overcoming Constraints for Embedded ML” presents ways to optimize ML models for embedded systems.

“The Regulatory Landscape of AI” discusses AI regulation and balancing safety with innovation.

Artificial intelligence (AI) seems to be everywhere. It’s a sudden add-on to every search bar on the website of every company, big or small. It appears from nowhere as emails are drafted or technical notes are written in a shared document: “Would you like to ask AI for help?” New solutions emerge daily, offering users the ability to “chat with your data” or “chat with your documents.”

While AI has been experiencing an exciting cycle of innovation and popularity in the past few years, the race to fit AI into every product and offer it as a solution to enterprise problems across the board has become more pronounced with the advent of modern large language models (LLMs).

The majority of new AI-powered features involve these LLMs in some capacity, boasting impressive functionality across various written tasks, from generating to summarizing content. As AI makes its way into increasingly nuanced and complex fields like law, accounting, and software engineering, people may come to believe that every problem can be solved with this kind of AI. Unfortunately, that is not the case.

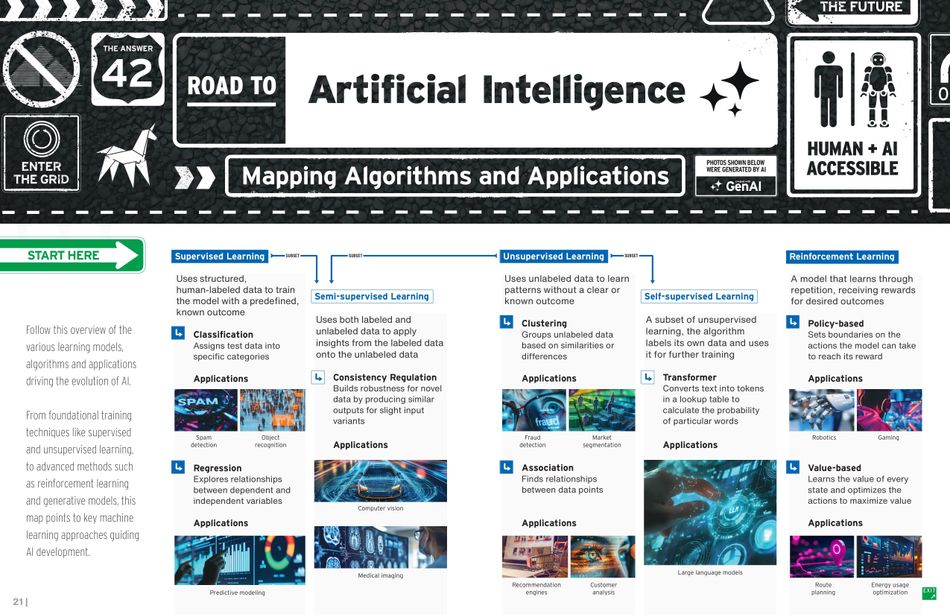

A significant number of use cases, and the data types they involve, demonstrate that, despite the hype, LLM-powered generative AI is not a panacea. This article explores AI applications ranging from computer vision and time series analysis to traditional machine learning (ML) and reinforcement learning (RL), showing that the world of AI is wider than LLMs and that better tools are often available for the job.

LLMs for Everything? Not So Much

LLMs are among the most powerful and wide-reaching forms of AI, and the current hype cycle would have businesses believing they can be employed successfully in every enterprise use case. However, that notion is misguided. First, not all problems are language-based. LLMs typically rely on the input data and required output being text: documents, descriptions, instructions, summaries, and so forth. However, some data are purely structured and numerical, arrive in vast volumes, and contain intricate underlying patterns that emerge only over time (e.g., banking data covering years of transactions, figures, and spending amounts).

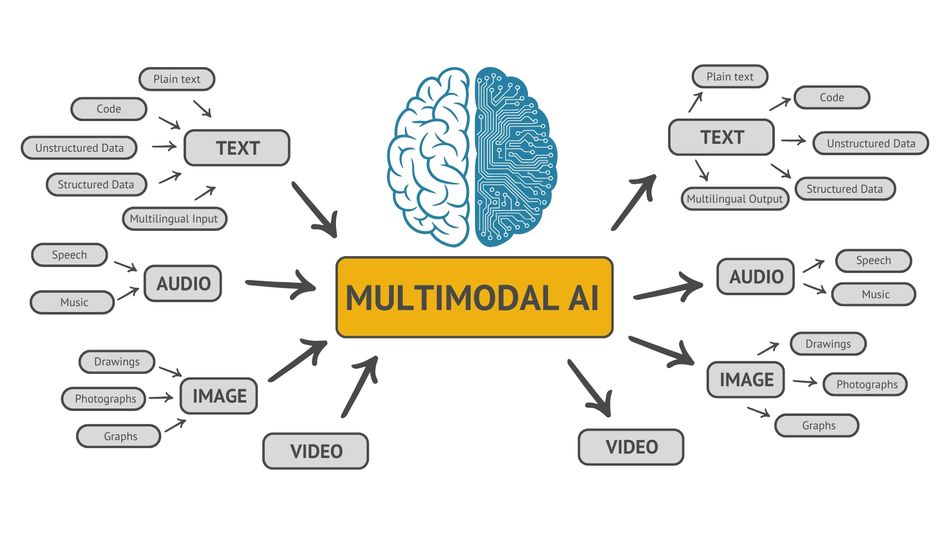

Other use cases call for images as inputs, such as satellite data or medical scans. Although some LLMs come with multimodal capabilities, they don’t generalize well to these more complicated or bespoke imagery needs. Other applications, such as predictive maintenance, may take inputs in the form of sound recordings or other sensor data (both time series and multimodal), for which language-based generative AI is likely to be of little help.

Second, not every solution is generative. Some solutions instead require regression or classification. Some overlap exists: generative AI can produce a classification when asked, or can generate a number in a regression-like fashion given a sample set. However, if the domain is sufficiently niche, the generative model may not have been exposed to the relevant patterns and is unlikely to produce the correct output. Sometimes, the question is specific to a dataset and can only be answered by inspecting (i.e., learning from) the whole dataset.

Lastly, sometimes generative AI is just overkill. Many problems have tried-and-true mathematical or statistical solutions that don’t require generative AI. For example, applications like scheduling and route selection in logistics and delivery can leverage known algorithms for path optimization. These are often more straightforward to implement, more constrained to the specific problem, and, hence, more accurate without a need for deep learning and its associated overhead.
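As an illustration of such a known algorithm, the sketch below uses Dijkstra's shortest-path algorithm to pick a delivery route. The road network, stop names, and travel times are hypothetical, and real logistics systems typically add constraints (time windows, vehicle capacity) that this minimal version ignores.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path) from start to goal.

    graph maps each node to a dict of {neighbor: non-negative edge cost}.
    """
    # Priority queue of (cost so far, node, path taken to reach that node).
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, edge_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return float("inf"), []  # goal is unreachable


# Hypothetical delivery network: travel times in minutes between stops.
ROADS = {
    "depot": {"A": 7, "B": 2},
    "A": {"customer": 3},
    "B": {"A": 3, "C": 8},
    "C": {"customer": 1},
}

cost, route = shortest_path(ROADS, "depot", "customer")
print(cost, route)  # 8 ['depot', 'B', 'A', 'customer']
```

The result is exact and deterministic for the given graph, with no training data or model involved, which is the point of the paragraph above.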

If Not LLMs, Then What?

With all the focus on language-based generative AI, one might miss the fact that equally impressive forms of AI exist. Deep learning models with sophistication and capabilities similar to those of LLMs have been developed for computer vision applications as well as time series analysis and RL. Additionally, traditional statistics-based ML still plays a prominent role in many enterprise use cases.

Computer Vision

Computer vision is a key subset of AI that is transforming industries like security, healthcare, and manufacturing. In security, for instance, computer vision is employed to analyze visual data from surveillance systems to detect anomalies and identify potential breaches. In healthcare, it aids in diagnosing diseases from medical imaging, such as by detecting cancer in radiology scans. Similarly, in manufacturing, it facilitates quality control through defect detection on assembly lines. These applications hinge on deep learning techniques that enable systems to “see” and interpret visual data, such as images or videos, by using algorithms that mimic human visual perception. The most commonly used models are convolutional neural networks (CNNs) or, more recently, vision transformers.

Regardless of the underlying model, training generally involves three steps: preprocessing, feature extraction, and iterative updates against labeled examples. First, raw visual data are cleaned and formatted as part of initial image preprocessing. Next, the vision model runs those data through layers that detect features like edges, textures, and patterns, building from simple structures to complex shapes and objects. Typically, this is supervised learning: the model is given labels indicating what is in the images it initially learns from, whether the task is classification or object detection.

By constantly updating the layers to predict the target label more accurately, the model effectively “learns” what is in the input image. In this way, the system ultimately will be able to perform tasks like classifying the detected features into categories (e.g., “malignant” vs. “benign”) or pinpointing their exact location in the image, which enables actions like automated quality checks or anomaly detection.
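To make the idea of a feature-detecting layer concrete, here is a minimal sketch of the sliding-window operation at the heart of a convolutional layer, written in plain Python. The toy image and the vertical-edge filter are hand-picked assumptions for illustration; in a trained CNN the filter weights would be learned from labeled data rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide kernel over image (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            row.append(total)
        output.append(row)
    return output


# Toy 4x6 grayscale image: dark left half (0), bright right half (1).
IMAGE = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-written vertical-edge filter (an assumption for this sketch).
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(IMAGE, VERTICAL_EDGE)
for row in feature_map:
    print(row)  # each row prints [0, 3, 3, 0]
```

The feature map is zero over the uniform regions and peaks where the window straddles the dark-to-bright boundary, which is how early layers flag edges before deeper layers combine them into shapes.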

Time Series Analysis

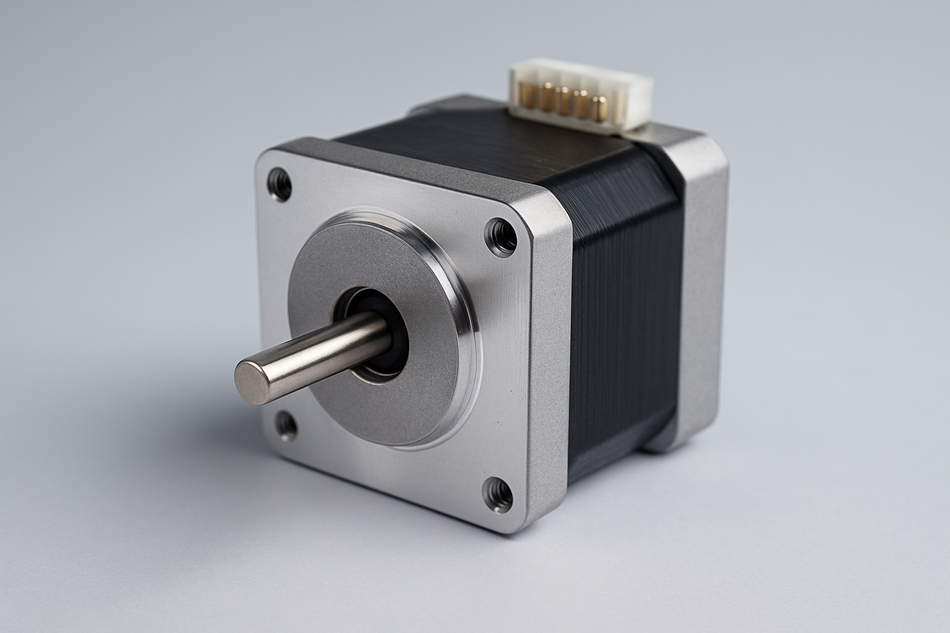

Time series analysis plays a critical role across AI applications such as predictive maintenance and risk assessment. Models in this category focus on analyzing sequential data, such as sensor readings from industrial equipment or financial transaction logs, to identify patterns, predict failures, or assess risks. This subset of AI is essential in any application for which past behavior is a predictor of future events, enabling forecasts based on prior data or anomaly detection when events deviate from expected patterns. For instance, in predictive maintenance, AI models trained on historical data can forecast when a machine is likely to fail, enabling preemptive repairs that save costs and reduce downtime. While AI-powered time series analysis often relies on deep learning techniques that automatically learn complex temporal patterns, traditional ML methods based on statistical principles also play a significant role.

Time series analysis typically begins with initial data preprocessing to clean and transform the data, which is a critical step for both traditional and deep learning approaches. This might involve normalizing values, resampling data at consistent intervals, or removing outliers that could skew the results. Depending on the task and the available data, different models are selected. For simpler tasks or when data are scarce, statistical models, like autoregressive integrated moving average (ARIMA), are often used for anomaly detection or short-term forecasting, owing to their efficiency in capturing linear relationships. In contrast, deep learning models, such as long short-term memory (LSTM) networks or gated recurrent units, excel at recognizing complex, nonlinear patterns in longer sequences of data.
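As a rough illustration of the statistical end of this spectrum, the sketch below fits a first-order autoregressive model, AR(1), by least squares and uses it for a one-step forecast and simple anomaly flagging. This is only the autoregressive component of ARIMA (no differencing or moving-average terms), the sensor readings and the threshold are made-up assumptions, and preprocessing is assumed to have been done already.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = c + phi * x[t-1]; returns (c, phi)."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    c = mean_curr - phi * mean_prev
    return c, phi


def flag_anomalies(series, c, phi, threshold):
    """Return indices whose one-step-ahead forecast error exceeds threshold."""
    return [
        t
        for t in range(1, len(series))
        if abs(series[t] - (c + phi * series[t - 1])) > threshold
    ]


# Hypothetical, already-cleaned sensor history that decays toward a baseline.
HISTORY = [10.0, 7.0, 5.5, 4.75, 4.375]
c, phi = fit_ar1(HISTORY)

# Short-term forecast: predict the next reading from the last observed one.
forecast = c + phi * HISTORY[-1]

# New readings containing one spike that breaks the learned pattern.
NEW_READINGS = [4.375, 4.1875, 9.0, 6.5]
anomalies = flag_anomalies(NEW_READINGS, c, phi, threshold=1.0)
print(anomalies)  # [2]
```

Only the spike at index 2 is flagged; the reading after it is consistent with the model given the spike, so it passes. A deep learning model would replace `fit_ar1` with a learned, nonlinear predictor but could keep the same forecast-and-compare structure.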

These recurrent models are trained in a manner similar to CNNs, with layers that sequentially process input data to extract relevant features. However, they include specialized mechanisms, such as the memory cells of LSTMs and the gating structures found in both LSTMs and gated recurrent units, that allow them to retain and prioritize information from earlier points in a sequence, effectively “remembering” critical patterns over time. This makes them particularly powerful for capturing dependencies across multiple time steps, such as seasonal trends or long-term correlations.
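The gating idea can be sketched with a single gated recurrent unit step on scalar values. The weight names and the hand-set weights below are hypothetical and chosen only to show the two extremes of the update gate; the blend `(1 - z) * h_prev + z * h_cand` follows one common convention (some references swap the roles of `z` and `1 - z`), and real networks use learned weight matrices rather than scalars.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h_prev, w):
    """One GRU update for a scalar input x and scalar hidden state h_prev.

    w is a dict of scalar weights (names are assumptions for this sketch).
    """
    z = sigmoid(w["wz"] * x + w["uz"] * h_prev + w["bz"])  # update gate
    r = sigmoid(w["wr"] * x + w["ur"] * h_prev + w["br"])  # reset gate
    # Candidate state: the reset gate scales how much of the past is used.
    h_cand = math.tanh(w["wh"] * x + w["uh"] * (r * h_prev) + w["bh"])
    # Blend old state and candidate; z near 0 keeps the old state.
    return (1.0 - z) * h_prev + z * h_cand


# Update gate forced almost fully closed: the unit "remembers" h_prev.
HOLD = {"wz": 0.0, "uz": 0.0, "bz": -20.0,
        "wr": 0.0, "ur": 0.0, "br": 0.0,
        "wh": 1.0, "uh": 0.0, "bh": 0.0}

# Update gate forced almost fully open: the unit overwrites its state.
OVERWRITE = dict(HOLD, bz=20.0)

h_hold = gru_step(x=1.0, h_prev=0.8, w=HOLD)
h_over = gru_step(x=1.0, h_prev=0.8, w=OVERWRITE)
print(round(h_hold, 4), round(h_over, 4))  # 0.8 0.7616
```

During training, the gate weights are learned so that the unit decides, per time step, how much earlier information to carry forward.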

Machine Learning

Traditional ML also underpins many AI applications. Unlike deep learning, which often requires large datasets, these less complex statistical methods can work effectively with smaller, structured datasets, making them suitable for more niche domains like biomedical research or drug discovery. Traditional ML encompasses a range of techniques, from supervised learning models like support vector machines and random forests to unsupervised methods such as k-means clustering and principal component analysis. Supervised learning algorithms rely on labeled datasets to predict outcomes, enabling applications such as biomarker discovery, where clinical factors can be used to predict outcomes like immunotherapy response. Unsupervised learning, by contrast, identifies hidden patterns or groupings in data, such as clustering customers based on purchasing behavior without predefined categories. These approaches are particularly valuable when computational efficiency and interpretability are key requirements.
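The customer-clustering example can be sketched with a plain-Python version of k-means (Lloyd's algorithm). The customer data, the two features, and the starting centroids are all hypothetical; production code would normally scale the features first and use a library implementation with better initialization.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: returns (centroids, labels) after a fixed number of rounds."""
    labels = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])  # leave an empty cluster in place
        centroids = new_centroids
    return centroids, labels


# Hypothetical customers: (orders per month, average basket value).
CUSTOMERS = [(1, 20), (2, 22), (1, 25), (8, 90), (9, 95), (10, 100)]

# Starting centroids picked by hand for reproducibility (an assumption).
centroids, labels = kmeans(CUSTOMERS, centroids=[(1, 20), (10, 100)])
print(labels)        # [0, 0, 0, 1, 1, 1]
print(centroids[1])  # (9.0, 95.0)
```

No labels are supplied; the two groups (occasional low spenders and frequent high spenders) emerge from the data alone.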

ML uses mathematical algorithms to extract patterns from data and make predictions or decisions based on those patterns. For instance, random forests, a popular ensemble learning method, combine multiple decision trees to improve prediction accuracy and reduce overfitting, making them robust for tasks like fraud detection. The training process for most ML models involves splitting data into training and test sets, optimizing the algorithm on the training set, and validating its performance on unseen data. Unlike deep learning, these methods are often faster to train, require fewer computational resources, and can provide insights into feature importance, making them a versatile choice for many business and scientific use cases.
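The train, test, and ensemble ideas above can be sketched in a few lines. This is a deliberately simplified stand-in for a random forest: each "tree" is a one-split decision stump trained on a bootstrap sample, and predictions are made by majority vote. The transaction amounts, the fraud rule used to label them, and the every-fourth-record holdout are assumptions chosen to keep the example deterministic; real projects would shuffle before splitting and use many features.

```python
import random


def fit_stump(xs, ys):
    """Find the threshold t with the fewest errors for the rule: predict 1 if x > t."""
    values = sorted(set(xs))
    # Candidate thresholds: midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])] or [values[0]]

    def errors(t):
        return sum((x > t) != bool(y) for x, y in zip(xs, ys))

    return min(candidates, key=errors)


def fit_bagged_stumps(xs, ys, n_stumps=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    stumps = []
    for _ in range(n_stumps):
        sample = rng.choices(range(len(xs)), k=len(xs))
        stumps.append(fit_stump([xs[i] for i in sample], [ys[i] for i in sample]))
    return stumps


def predict(stumps, x):
    """Majority vote across all stumps."""
    votes = sum(x > t for t in stumps)
    return int(votes * 2 > len(stumps))


# Hypothetical data: transaction amounts, labeled fraud (1) when above 500.
amounts = [25 * i + 10 for i in range(40)]
is_fraud = [int(a > 500) for a in amounts]

# Hold out every fourth record as unseen test data.
train_x = [a for i, a in enumerate(amounts) if i % 4 != 3]
train_y = [y for i, y in enumerate(is_fraud) if i % 4 != 3]
test_x = [a for i, a in enumerate(amounts) if i % 4 == 3]
test_y = [y for i, y in enumerate(is_fraud) if i % 4 == 3]

stumps = fit_bagged_stumps(train_x, train_y)
accuracy = sum(predict(stumps, x) == y for x, y in zip(test_x, test_y)) / len(test_x)
print(f"test accuracy: {accuracy:.2f}")
```

Because accuracy is measured only on the held-out records, it reflects how the ensemble behaves on data it never saw during training, which is the purpose of the split described above.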

Reinforcement Learning

RL represents a distinct AI approach focused on decision-making in dynamic environments, where an agent learns to achieve specific goals by interacting with its surroundings and receiving feedback in the form of rewards or penalties. Unlike supervised learning, which relies on labeled data, RL explores sequences of actions to maximize long-term rewards, making it well suited for applications that require adaptive strategies. The RL process begins by defining an environment that models the problem domain and specifying states, actions, and reward structures. Agents are trained through iterative trial and error, often using techniques like Q-learning or policy gradient methods.

During training, data are collected as the agent interacts with the environment, creating an experience dataset that is often stored in replay buffers for optimization. The agent’s policy, a function mapping states to actions, is updated continually to improve performance—balancing exploration of new actions with exploitation of known strategies. This approach enables RL to excel in tasks like predictive maintenance, where agents can learn optimal repair schedules, or in drug discovery to optimize molecular design. Fields like robotics, gaming, and autonomous systems also lean heavily on this subset of AI.
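A minimal sketch of tabular Q-learning shows the loop described above: act, observe a reward, and update the value estimates. The five-state corridor environment, the reward of 1 at the goal, and all hyperparameter values are assumptions made for illustration. For brevity, each transition is used once as it occurs, so no replay buffer is included.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the goal (terminal)
ACTIONS = (0, 1)  # 0 = step left, 1 = step right


def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _step in range(100):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore a random action
            else:
                best = max(q[state])          # exploit, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy: the highest-valued action in each non-terminal state.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1], i.e. always step right toward the goal
```

The `epsilon` parameter controls the balance between exploration and exploitation mentioned above; the learned policy is simply a lookup from each state to its highest-valued action.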

Conclusion

Generative AI might grab headlines, but beyond the hype of LLMs, AI’s true power lies in its diverse tool kit, which can address the multifaceted challenges businesses face across industries. Computer vision enables systems to process and analyze visual data, transforming fields like healthcare and manufacturing. Time series analysis excels in applications that depend on patterns in sequential data, such as predictive maintenance and financial forecasting. ML offers statistical precision and efficiency, which are ideal for structured datasets in tasks like biomedical research and drug discovery. Meanwhile, RL thrives in dynamic environments, crafting adaptive strategies for fields like robotics and gaming. Examining these varied applications makes clear that effective AI solutions exist beyond the narrow scope of language-based generative AI. In a rapidly evolving technological landscape, businesses that adopt the right combination of AI tools stand to unlock real value and innovation.

This article was originally published in “Methods: Confronting AI,” an e-magazine by Mouser Electronics. It has been substantially edited by the Wevolver team and Ravi Y Rao for publication on Wevolver. Upcoming pieces in this series will continue to explore key themes including AI adoption, embedded intelligence, and the ethical dilemmas surrounding emerging technologies.