

Latest Posts

Turning Offices with Hot Desking into the Ideal Space - Case Study of Hot Desking Using the Pifaa Seating Management System

For this article, we interviewed Niwa and Omura, who are responsible for the design, development, and operation of the system, as well as Toda, who requested its development and is also a user.

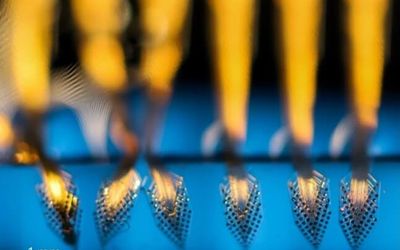

Harnessing scalability, adaptability, and efficiency of biological neural networks through flexible brain-computer interfaces

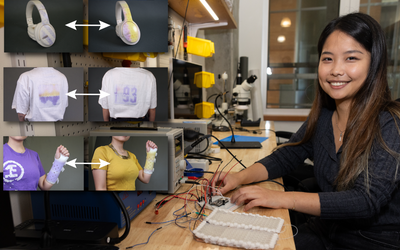

Liu and team receive NSF EFRI grant for biocomputing research

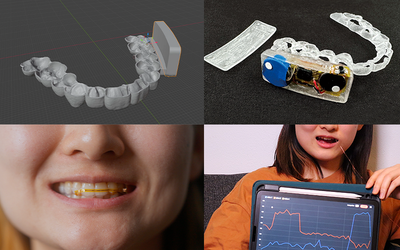

Interactive mouthpiece opens new opportunities for health data, assistive technology, and hands-free interactions

When you think about hands-free devices, you might picture Alexa and other voice-activated in-home assistants, Bluetooth earpieces, or asking Siri to make a phone call from your car. You might not imagine using your mouth to remotely control other devices, such as a computer or a phone.