Project LUMINOUS: The next level of augmented reality

The DFKI technology collects countless impressions, interprets them and can suggest an appropriate action using a multimodal large language model (MLLM).

This article was first published on www.dfki.de.

In a constantly changing world saturated with impressions, it can sometimes be difficult to keep track. After all, impressions not only have to be perceived but also interpreted, and that interpretation opens up a wide range of possible actions. This is where the LUMINOUS (Language Augmentation for Humanverse) system comes into play: it collects these impressions, interprets them and uses an MLLM to suggest an appropriate action.

"Through our developed technology, virtual worlds become increasingly intelligent. The intuitive interaction (via text) with the system and automatic generation of complex behaviors and processes through 'generative AI' or so-called 'Multi-Modal Large Language Models' enable us not only to experience them, but also to test them. To achieve this, at project LUMINOUS we are working in parallel on several approaches such as automatic code generation, the rapid input of new data and other solutions."

System observes, interprets – and refines itself

In the new LUMINOUS project, DFKI is working on next-generation extended reality (XR) systems. In the future, generative multimodal large language models (MLLMs) will complement the existing technical extensions of our visually perceived reality, such as overlaid texts, animations or inserted virtual objects, and redefine how we interact with XR technology.

Muhammad Zeshan Afzal, researcher in the 'Augmented Vision' department at DFKI, explains what this might look like in practice:

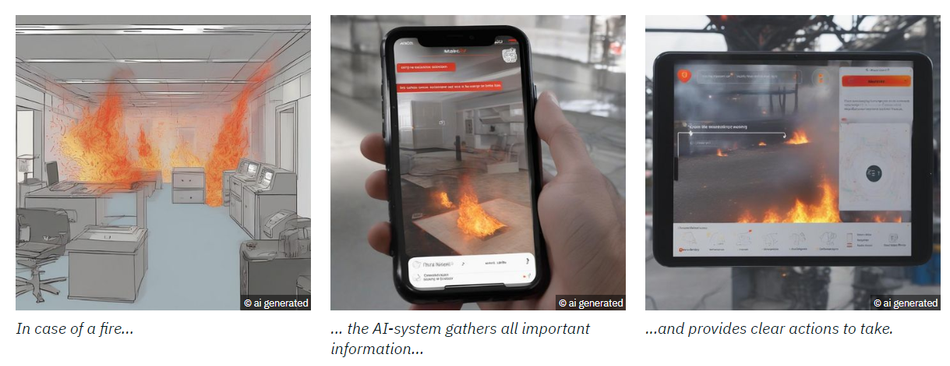

"Let's take the following scenario: a fire breaks out in a room. In this case, our system first determines where the person equipped with our technology is currently located. It then collects relevant data from their immediate surroundings, such as the presence of a fire extinguisher or an emergency exit, and passes this on to the generative multimodal language model. The model then determines a suitable recommendation for action, such as using a fire extinguisher to put out the fire, closing windows, or getting to safety."
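The article does not describe how LUMINOUS implements this. As a rough illustration of the three steps Afzal names (locate the user, gather relevant nearby objects, hand them to the model), here is a minimal Python sketch. `SceneObject`, `nearby_objects`, `build_prompt`, `assist`, the 10 m radius and the `query_mllm` callable are all hypothetical; a real system would take positions from spatial tracking and send the prompt to an actual MLLM.

```python
from dataclasses import dataclass
from math import dist
from typing import Callable


@dataclass
class SceneObject:
    label: str                      # e.g. "fire extinguisher" or "emergency exit"
    position: tuple[float, float]   # assumed 2D floor-plan coordinates in metres


def nearby_objects(user_pos, scene, radius=10.0):
    """Keep only objects within `radius` metres of the user, closest first."""
    close = [o for o in scene if dist(user_pos, o.position) <= radius]
    return sorted(close, key=lambda o: dist(user_pos, o.position))


def build_prompt(event, user_pos, objects):
    """Turn the event and the nearby objects into a plain-text prompt for the model."""
    lines = [f"Event: {event}", f"User position: {user_pos}"]
    for o in objects:
        lines.append(f"- {o.label} at {dist(user_pos, o.position):.1f} m")
    lines.append("Recommend one safe action for the user.")
    return "\n".join(lines)


def assist(event, user_pos, scene, query_mllm: Callable[[str], str], radius=10.0):
    """Locate, gather context, ask the model; `query_mllm` stands in for a real MLLM call."""
    context = nearby_objects(user_pos, scene, radius)
    return query_mllm(build_prompt(event, user_pos, context))
```

To try it without any model, pass an echo function such as `lambda prompt: prompt` as `query_mllm` and inspect the prompt. Filtering by distance before prompting keeps the model's input short and limited to what the user can actually reach.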

Learning from descriptions creates flexibility

Until now, R&D endeavours have been largely limited to the spatial tracking of users and their environment. The result: very specific, limited and non-generalisable representations, as well as predefined graphic visualizations and animations. "Language Augmentation for Humanverse" aims to change this in the future.

To achieve this, the researchers at DFKI are developing a language-supported platform that adapts to individual, non-predefined user needs and to previously unknown augmented reality environments. The concept builds on zero-shot learning (ZSL), an approach in which an AI model learns to recognize and categorize objects and scenarios without having seen any examples of them during training.

This approach allows LUMINOUS to learn and continually expand its knowledge beyond its original training data. By making names and text descriptions available to the LLM, LUMINOUS will use its database of image descriptions to build up a flexible image-and-text vocabulary that makes it possible to recognize even previously unseen objects or scenes.
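The article does not say which model or embeddings LUMINOUS uses. One common way to recognize objects from names and descriptions alone is CLIP-style matching: embed the image and each text description in a shared vector space and pick the closest description. The Python sketch below illustrates that idea with hand-made three-dimensional vectors; the function names, labels and numbers are purely hypothetical.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_vec, vocabulary):
    """Return the label whose description embedding is closest to the image embedding."""
    return max(vocabulary, key=lambda label: cosine(image_vec, vocabulary[label]))


# Toy, hand-made embeddings; a real system would obtain both image and text
# vectors from a jointly trained image-text encoder.
vocabulary = {
    "fire extinguisher": [0.9, 0.1, 0.0],
    "emergency exit": [0.1, 0.9, 0.1],
}
image_vec = [0.2, 0.1, 0.95]  # embedding of an object the vocabulary has no entry for yet

# Extending the vocabulary only needs a new description embedding, no retraining.
vocabulary["first-aid kit"] = [0.0, 0.2, 0.9]
print(zero_shot_classify(image_vec, vocabulary))  # prints: first-aid kit
```

The key design point is that the classifier itself never changes: recognizing a new kind of object only requires adding its description to the vocabulary.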

Interactive support in real time

The areas of application for augmented reality systems such as LUMINOUS go beyond situational assistance. "We are currently investigating possible applications in the everyday care of sick people, the implementation of training programmes, performance monitoring and motivation," says Zeshan Afzal.

Acting as a kind of translator, the LUMINOUS LLM should be able to describe everyday activities on command and convey them to users via a voice interface or an avatar. The resulting visual assistance and recommended actions will then support users in their everyday activities in real time.

LUMINOUS in application

The results of the project are being tested in three pilot projects focussing on neurorehabilitation (support for stroke patients with speech disorders), immersive safety training in the workplace and the testing of 3D architectural designs.

In the neurorehabilitation of stroke patients with severe communication deficits (aphasia), realistic virtual characters (avatars) help patients initiate conversations using image-directed models. These models are based on natural language and allow generalization to other activities of daily life. Objects in the scene (including people) are recognized in real time using eye tracking and object recognition algorithms. Patients can then ask the avatar or the MLLM to articulate either the name of an object or its first phoneme. To be able to use the language models in their own environment, patients undergo personalised, intensive XR-supported training.
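How LUMINOUS links gaze to recognized objects is not described in the article. A minimal Python sketch under simple assumptions: an object detector has already produced labelled bounding boxes, the eye tracker supplies a gaze point in the same image coordinates, and a small pronunciation lexicon provides phonemes. `Detection`, `LEXICON`, `gazed_object` and `naming_cue` are hypothetical names, and in practice the cue would be spoken by the avatar rather than returned as text.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    box: tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in image pixels


# Hypothetical mini pronunciation lexicon using ARPAbet-style phoneme symbols.
LEXICON = {
    "cup": ["K", "AH", "P"],
    "table": ["T", "EY", "B", "AH", "L"],
    "chair": ["CH", "EH", "R"],
}


def gazed_object(gaze, detections):
    """Return the smallest detected box that contains the gaze point, or None."""
    x, y = gaze
    hits = [d for d in detections
            if d.box[0] <= x <= d.box[2] and d.box[1] <= y <= d.box[3]]
    if not hits:
        return None
    # A cup standing on a table lies inside both boxes; the smaller box is the likelier target.
    return min(hits, key=lambda d: (d.box[2] - d.box[0]) * (d.box[3] - d.box[1]))


def naming_cue(label, mode):
    """'name' gives the whole word; 'phoneme' gives only its first phoneme as a hint."""
    if mode == "name":
        return label
    if mode == "phoneme":
        return LEXICON[label][0]
    raise ValueError(f"unknown mode: {mode}")
```

For example, with a table box `(0, 0, 400, 300)` and a cup box `(100, 50, 160, 120)`, a gaze point at `(120, 80)` selects the cup, and `naming_cue("cup", "phoneme")` yields the first phoneme `"K"` as a hint.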

The LUMINOUS technology captures the movements and style of the human trainer with a minimal number of sensors, enabling three-dimensional avatars to be modelled and instantiated. The aim is to rely exclusively on kinematic information derived from the headset input, namely the position of the head and hands during training.

Future users of these new XR systems will be able to interact seamlessly with their environment through language models while having access to constantly updated global and domain-specific knowledge sources. New XR technologies of this kind could be used, for example, in distance learning and education, entertainment or healthcare services.

Partners:

- German Research Centre for Artificial Intelligence GmbH (DFKI)

- Ludus Tech SL

- Mindesk Società a Responsabilità Limitata

- Fraunhofer Society for the Advancement of Applied Research

- Universidad del País Vasco/Euskal Herriko Unibertsitatea

- Fundación Centro de Tecnologías de Interacción Visual y Comunicaciones Vicomtech

- University College Dublin

- National University of Ireland

- Hypercliq IKE

- Ricoh International B.V. – Branch Office Germany

- MindMaze SA

- Centre Hospitalier Universitaire Vaudois

- University College London

The project is funded by the European Union. Project LUMINOUS (Language Augmentation for Humanverse) is in no way related to the AI language model Luminous developed by Aleph Alpha.