The pandemic of deepfakes: A rising threat bigger than identity theft

What may have started as a technology to have fun with may well change the way we tackle data protection and identity fraud.


The world isn’t done dealing with fake news yet, and another form of misinformation has already emerged in force: deepfakes. Created by Artificial Intelligence (AI) systems, deepfakes typically manipulate facial expressions and speech, or face-swap an individual into a video. One might recall the deepfakes of prominent figures like Barack Obama, Donald Trump, and Mark Zuckerberg that made national news.

Mark Zuckerberg in a deepfake video

A few of these might be akin to fun memes, but deepfakes have a dark side. They can be used to manipulate political speeches for misinformation campaigns, threatening the fabric of democracies, or to damage an individual’s reputation by fabricating statements and speeches.

While fact-checking agencies have cropped up to tackle the menace of fake news, the gravest concern with deepfakes is that they are often difficult to detect unless tagged as such. And since self-learning AI algorithms will make deepfakes more sophisticated in the near future, any check put in place today may soon be irrelevant.

The threat is real

What may have started as a technology to have fun with may well change the way we tackle data protection and identity fraud. Politicians, business leaders, and celebrities are not the only victims of this misuse of AI algorithms. The general public and organizations on the ground are also being targeted for financial fraud and manipulation.

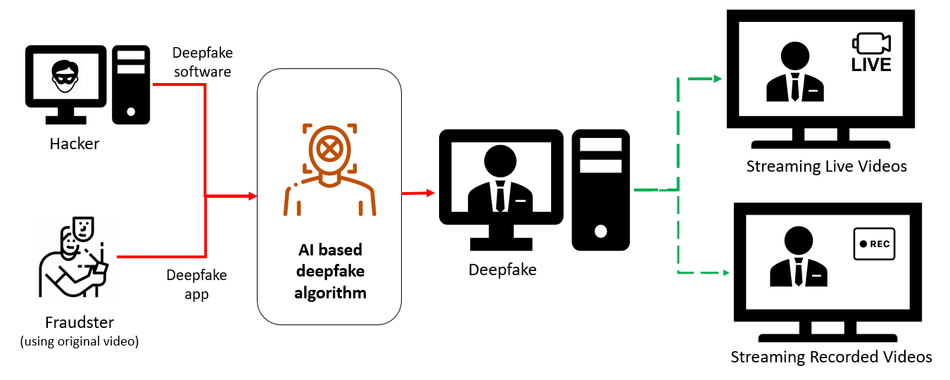

Below is a brief representation of how perpetrators can use simple software for online video streaming or video recordings to commit cybercrimes:

Many organizations have now begun to see deepfakes as an even bigger risk than identity theft, especially during the current pandemic. The concern is validated by the latest threat report from University College London (UCL), which ranks deepfake technology as one of the biggest threats we face today, even as it spills out of the dark web.


Dealing with issues

The big question now is how we can effectively tackle the ill effects of deepfakes. Technology companies have taken various measures in this regard. Attempting to keep pace with the rapidly evolving nature of deepfakes, several businesses have strengthened their employee interactions through video conferencing: holding live panel discussions and job interviews, and using video testimonials to establish credentials. Banks have started using video KYC to approve loan applications. Courts have implemented virtual hearings, with no need for participants to appear in person. Even online dating apps, such as Tinder and Lolly, have started encouraging users to add videos to their profiles, or to link their profiles to video-sharing social networking apps, as a safeguard against the spread of deepfakes.

While video verification has long been accepted as a safe and reliable means of customer data verification under Know Your Customer (KYC) protocols, deepfakes bring several new challenges for organizations. To start, deepfakes can escape detection even with a video verification layer in place. Banks and crypto exchanges may end up approving loan applications or transactions for fraudsters. A company might interview one person and onboard another. Judges may end up sentencing innocent victims for life, or someone’s public video could be doctored to humiliate them in public. With free-to-download deepfake apps easily available today, this once-niche breed of hackers is only growing.

By now it’s clear that deploying yet another counter-technology will not suffice against a problem as elusive as deepfakes. In our view, an effective solution will require a combination of stringent laws, strict rules and regulations, severe punishment, and cutting-edge technologies. All this, combined with efforts to educate people on how best to use their personal information, should be the prime focus.

Burdened with the task of maintaining the sanctity of information posted by millions of users, social media platforms, which are typically used to spread deepfakes, are now leveraging AI to tackle the menace. While WeChat started blocking video posts created via the Zao app, Facebook has created a repository of realistic deepfake videos to be used as a benchmark for building effective detection tools. The social media platform, which owns Instagram and WhatsApp, has also partnered with Microsoft, MIT, the University of Oxford, and UC Berkeley to encourage research on alternative ways to identify and prevent deepfakes.

Whichever path we choose to avoid falling victim to the menace of deepfakes, we need a dynamic solution that can keep up with the evolving capabilities of AI. And we must be quick, because who knows: once we are done defeating deepfakes, something else might crop up.

This article is written by Arun Rishi Kapoor, Senior Lead Analyst, and Anand Chandrashaker, Senior Domain Principal at Infosys BPM.