Sensor Fusion: Everything You Need to Know

Introducing sensor fusion, how it works, and why you should use sensor fusion in your next system.

Sensor fusion refers to the ability to combine input from multiple radars, LiDARs, and cameras to create a single picture of the environment around a vehicle.

Such a process is required because sensor imperfections are a certainty. No real system has the luxury of operating under pristine, lab-grade conditions where disturbances never happen.

Aside from external disturbances in working conditions, there are also the sensor’s own issues—drifting, inaccuracies, or just plain crazy black box errors.

Sensor fusion is one of the components you’ll use to design a ‘better’ system, one that copes with these imperfections.

What is sensor fusion?

You might be more familiar with sensor fusion if I call it “data fusion,” “information fusion,” or even “multi-sensor integration.”

These phrases are often mentioned like they’re the same thing.

Spoiler alert: they’re not.

But a sensor fusion system can involve all these principles at the same time.

By definition, sensor fusion is the process of combining measurement data from multiple measurements, whether they come from different sensors or from different instances, to get better data than you would by relying on a single sensor as your source of truth.

‘Better’ in this sense can be something as simple as a more accurate representation, more complete data, or even inferred information that isn’t directly measured by current sensors.

4 reasons to use sensor fusion

Let’s face it:

Issues come up when you use sensors.

They may be caused by imperfections of the sensor itself, like noise and drift, or by external factors, like extraordinary operating conditions.

Certain as they are, you can side-step these issues by strengthening your data stream with multiple sensors, whether they’re duplicates or heterogeneous sources.

Let’s say you want to measure the vibration of a bridge when a vehicle passes.

You leave an accelerometer on the bridge overnight, transmitting its data live to a computer in your office.

When you look at the data the next morning, you notice an outlier: a sudden spike in the accelerometer’s readings.

What happened there?

Did a particularly big truck just happen to pass by? Is it just plain sensor craziness? Or is there a cyclist who knocked the accelerometer out of its position?

Unfortunately, since you’re only using one sensor in this case, you’ll never know. And you’ll never know if the data your sensor recorded is accurate either.

This is where using multiple sensors and a sensor fusion algorithm helps.

- Adding a second (and third) sensor lets you compare the data. This improves the accuracy of your measurement results, so you can hypothesize about what happened at the bridge that night.

- If there are outside interferences disturbing your sensor’s performance, you can use a second sensor that isn’t affected by this disturbance, thus maintaining the consistency of your data stream.

- If by any chance your sensor breaks down in the middle of the operation, you don’t have to suffer from data loss, as there are backup sensors available.

- Get additional insights using the same set of sensors, reducing the complexity, number of components, and cost of operation for your system.

The margin of error for your system gets smaller when you use sensor fusion to merge sensory data from multiple sources, as opposed to trusting a single sensor to perform exceptionally well throughout the entire operation.
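To see why the margin of error shrinks, here is a minimal sketch of one common way to merge redundant readings: inverse-variance weighting. It assumes the sensors measure the same quantity with independent noise and that you know each sensor’s noise variance. The function name and the readings are made up for illustration.

```python
def fuse_inverse_variance(readings):
    """Fuse (value, variance) pairs from independent sensors.

    Each sensor is weighted by 1 / variance, so noisier sensors count less.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings from two accelerometers on the same bridge:
# (measured value, noise variance of that sensor)
readings = [(0.52, 0.04), (0.47, 0.01)]
fused_value, fused_variance = fuse_inverse_variance(readings)
print(fused_value, fused_variance)
```

Under these assumptions the fused variance is always smaller than the variance of the best individual sensor, which is the "smaller margin of error" in numbers.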

Types of sensor fusion systems

When observed from a high level, the setup you’ll need for a sensor fusion network is fairly simple.

You have the sensors, which give an input of raw data to the estimation framework, where the algorithm will transform your raw data into a specific output.

Depending on the algorithm you’re using, the output could be an entirely unique set of information, or it could be a more accurate version of your raw data.

However, the algorithm, the architecture of the overall system, and the sensor setup will depend on what sensors you have and what information you want to get out of this sensor fusion system.

Let’s start with the easier part and get to know the types of sensor fusion systems, based on the setup, architecture type, and levels of fusion.

Sensor setup

Arguably the simplest categorization is by sensor setup, with three commonly used configurations: competitive (or redundant), cooperative, and complementary. (Durrant-Whyte, 1990)

- Competitive

In a competitive or redundant setup, your sensors observe the same object. By having multiple sensors set up to observe the same state, you can improve the reliability and accuracy of your system, and the confidence level of your measurement data.

- Cooperative

Just like a competitive setup, cooperative systems also observe the same object. Rather than using the data to decide which data stream should be passed on, you can use a cooperative setup to get new insights into your observed environment by combining the raw data.

- Complementary

In a complementary setup, each sensor observes a different object. Similar to a cooperative setup, merging the data lets you form a global point of view of the observed environment.
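As a rough illustration of the competitive case only, the sketch below takes the median of redundant readings and flags any sensor that strays too far from it. The function name, the tolerance, and the readings are assumptions for this example, and median voting is just one simple option, not the only way to fuse redundant sensors.

```python
from statistics import median


def fuse_redundant(readings, tolerance):
    """Fuse redundant readings of the same quantity by taking their median.

    Returns (fused_value, suspects), where suspects holds the indices of
    sensors whose reading differs from the median by more than tolerance.
    """
    center = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - center) > tolerance]
    return center, suspects


# Hypothetical snapshot: three accelerometers on the same bridge span,
# where the third one reports a sudden spike.
fused, suspects = fuse_redundant([0.51, 0.49, 3.20], tolerance=0.5)
print(fused, suspects)
```

With three sensors, a single misbehaving unit no longer decides the result, and you also learn which unit to inspect.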

Architecture type

Based on its architecture, there are three main types of sensor fusion systems: centralized, decentralized, and distributed. (Castanedo, 2013)

There is also a hierarchical type, which combines the decentralized and distributed system through a hierarchy, but let’s stick to the three main types for this brief explanation.

- Centralized

Within a centralized architecture, the data fusion process is done directly on the control system. This simplifies the architecture, but puts a strain on the control system, making it slower and less efficient.

- Decentralized

In a decentralized architecture, your measurement data is combined at a data fusion node, with feedback from your local network of sensors and other data fusion nodes in your system. While this is more efficient than a centralized system, your communication line gets complicated quickly if you use a lot of sensors and data fusion nodes in your system.

- Distributed

A distributed system processes your data locally at the source before sending it into an intermediate fusion node, which then produces a global view of your observed environment.
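The difference between centralized and distributed processing can be sketched with a toy averaging task. In the centralized version, every raw reading travels to one node. In the distributed version, each sensor first compresses its readings into a small summary, and the fusion node only combines the summaries. All function names and data here are hypothetical, and the decentralized case (nodes exchanging feedback with each other) is left out to keep the sketch short.

```python
def centralized_fusion(sensor_streams):
    """Central node receives every raw reading and averages them all."""
    flat = [reading for stream in sensor_streams for reading in stream]
    return sum(flat) / len(flat)


def local_summary(stream):
    """Runs at the sensor: compress raw readings into (sum, count)."""
    return sum(stream), len(stream)


def distributed_fusion(summaries):
    """Fusion node combines local summaries into one global average."""
    total = sum(partial_sum for partial_sum, _ in summaries)
    count = sum(n for _, n in summaries)
    return total / count


# Hypothetical readings from three sensors with different sample counts
sensor_streams = [[1.0, 1.2, 0.8], [1.1, 0.9], [1.0, 1.0, 1.0, 1.0]]

central = centralized_fusion(sensor_streams)
distributed = distributed_fusion([local_summary(s) for s in sensor_streams])
print(central, distributed)
```

Both routes arrive at the same global estimate for this simple task. What changes is where the work happens and how much data has to cross the network.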

Levels of data fusion

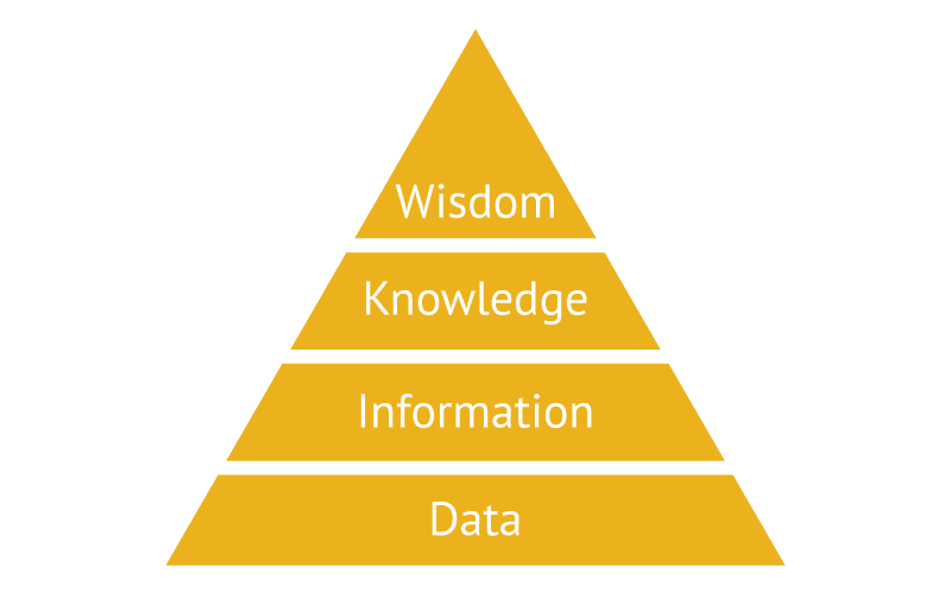

Depending on your application, your sensor measurement data will transform into different levels of data as it goes through your system. Ackoff states that the hierarchy of your data can take the form of data, information, knowledge, and wisdom.

In this pyramid, data is the raw input from your measurement system (numbers, symbols, signals, or anything else) that you can’t really make sense of just yet.

The next level, information, is still data but this time tagged with the relevant context so it becomes more useful and easily visualized.

Knowledge is obtained by assembling the pieces of information and relating them to what you want to find out. In short, knowledge is where the data starts to become useful for you.

The top of our pyramid, wisdom, is a little harder to define. Like everything before it, it builds on the level below, in this case knowledge, to ask more abstract questions like ‘why’ and eventually reach a decision.

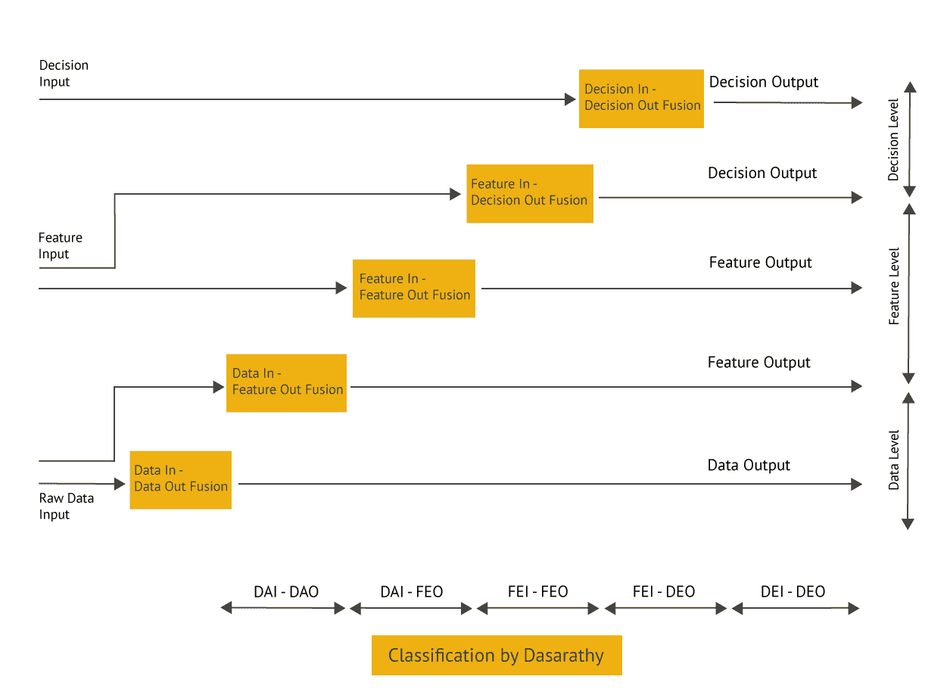

This DIKW hierarchy ties in neatly with the three main levels of data fusion. Based on the levels of data fusion, there are three categories of sensor fusion systems:

- Low-level fusion moves at a data level. It takes your raw data as input and returns a more accurate version of your raw data as output.

- Mid-level fusion moves at the feature level. It takes observed features as input and produces a new feature as output. Here, features are just information inferred from a collection of raw data, including contextual information processed from various sensors or a priori information.

- High-level fusion moves at the decision level. It takes decisions from several sources as input and produces a more accurate decision as output.

As you can see, this categorization assumes that the input and output of a system sit at the same level. The problem comes when you have a system that crosses levels, for example one that takes raw data in and produces features out. Here, you can use Dasarathy’s categorization, which classifies systems by the format of their input and output data.
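To make the levels a bit more tangible, here is a toy sketch with one function per input/output pairing, labeled with the abbreviations used in Dasarathy’s scheme (DAI = data in, FEO = feature out, DEO = decision out, and so on). The function names, the width threshold, and the vehicle scenario are invented for this illustration, so treat it as a sketch rather than a reference implementation.

```python
from collections import Counter


def low_level_fusion(raw_a, raw_b):
    """DAI-DAO: raw data in, raw data out (sample-wise average)."""
    return [(a + b) / 2 for a, b in zip(raw_a, raw_b)]


def extract_peak(raw):
    """DAI-FEO: raw data in, feature out. This one crosses levels."""
    return max(abs(sample) for sample in raw)


def mid_level_fusion(range_m, pixel_width, focal_length_px):
    """FEI-FEO: two features in, a new feature out.

    Combines a radar range and a camera bounding-box width into an object
    width in metres, using the pinhole camera model.
    """
    return range_m * pixel_width / focal_length_px


def classify_vehicle(width_m, threshold_m=2.2):
    """FEI-DEO: feature in, decision out (threshold is a made-up value)."""
    return "truck" if width_m > threshold_m else "car"


def high_level_fusion(decisions):
    """DEI-DEO: decisions in, one decision out (majority vote)."""
    return Counter(decisions).most_common(1)[0][0]


width = mid_level_fusion(range_m=20.0, pixel_width=90.0, focal_length_px=1000.0)
print(classify_vehicle(width), high_level_fusion(["car", "truck", "car"]))
```

`extract_peak` and `classify_vehicle` are the kind of cross-level steps that the three-level view has no name for, which is where classifying by input and output format becomes handy.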

How sensor fusion prevents cars from crashing

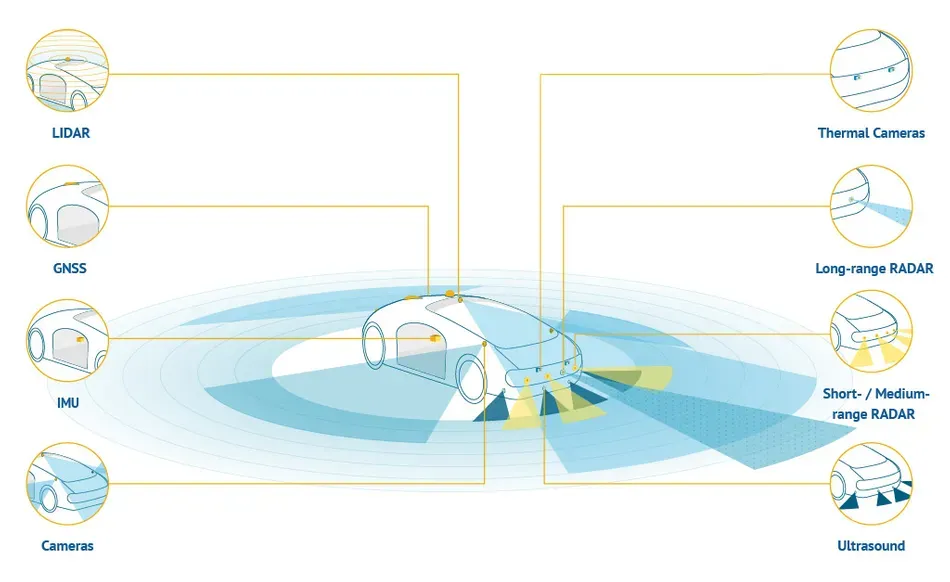

Autonomous vehicles need a lot of input to get a sense of their ever-changing environment.

To prevent crashes, the system needs a model of the external and internal state, which will need to be measured using a combination of several sensors, including radar, lidar, and cameras.

Naturally, each sensor has its own strengths and weaknesses. To get an accurate depiction of the environment and improve the robustness of the system, you’ll need to combine the data from each sensor.

For instance, you need cameras to get more context about the environment and classify objects. On the other hand, lidar and radar are better at determining the distance of the car from an object.

The table below depicts how these three sensors can benefit from each other.

| | RADAR | LiDAR | CAMERA | FUSION |
| --- | --- | --- | --- | --- |
| Object detection | + | + | O | + |
| Pedestrian detection | - | O | + | + |
| Weather conditions | + | O | - | + |
| Lighting conditions | + | + | - | + |
| Dirt | + | O | - | + |
| Velocity | + | O | O | + |
| Distance - accuracy | + | + | O | + |
| Distance - range | + | O | O | + |
| Data density | - | O | + | + |
| Classification | - | O | + | + |
| Packaging | + | - | O | + |

Legend: + = Strength; O = Capability; - = Weakness
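One way to read the table is that the fused system can lean on whichever sensor is strongest for each task. The sketch below encodes the table and checks that reading. The numeric scores and function names are assumptions for illustration, and "take the best sensor" is an optimistic simplification of what a real fusion algorithm does.

```python
# Ratings copied from the table: (radar, lidar, camera)
SENSORS = ("radar", "lidar", "camera")
TABLE = {
    "Object detection": ("+", "+", "O"),
    "Pedestrian detection": ("-", "O", "+"),
    "Weather conditions": ("+", "O", "-"),
    "Lighting conditions": ("+", "+", "-"),
    "Dirt": ("+", "O", "-"),
    "Velocity": ("+", "O", "O"),
    "Distance - accuracy": ("+", "+", "O"),
    "Distance - range": ("+", "O", "O"),
    "Data density": ("-", "O", "+"),
    "Classification": ("-", "O", "+"),
    "Packaging": ("+", "-", "O"),
}

# Hypothetical numeric scores so the symbols can be compared
SCORE = {"+": 2, "O": 1, "-": 0}


def fused_rating(ratings):
    """Best rating any single sensor achieves for this task."""
    return max(ratings, key=SCORE.get)


def strongest_sensors(task):
    """Names of the sensors rated '+' for the given task."""
    return [name for name, rating in zip(SENSORS, TABLE[task]) if rating == "+"]


for task, ratings in TABLE.items():
    print(f"{task}: fusion={fused_rating(ratings)}, via {strongest_sensors(task)}")
```

Every row has at least one sensor rated as a strength, which matches the all-plus FUSION column.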

Sensor fusion has many more applications in autonomous vehicles. It’s also needed for many other safety features required by an autonomous vehicle, such as 3D object detection, tracking, and occupancy mapping. (Lundquist, 2011)

Want to use sensor fusion in your next project?

So now that you’ve peeked into the world of sensor fusion, there’s one more topic we haven’t covered yet, simply because it’s so broad: your sensor fusion algorithm, the core of your sensor fusion system.

It will largely depend on what you’re trying to do. The algorithm you’ll need to get more precise data for your lab-contained robots will most likely differ from the one needed to prevent crashes in autonomous cars.

After you’ve decided on the requirements of your system and which sensors to use, you can start thinking about which algorithm you’ll use to create a sensor fusion system that matches your needs.

To get a basic idea about what kind of algorithm you need, you can start with one of the most popular filters for sensor fusion: the Kalman filter, an estimation framework widely used for its predictive capabilities.
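For a first feel of how it works, here is a minimal one-dimensional Kalman filter. It assumes the quantity you are tracking is roughly constant, that the measurement noise variance is known, and that a small process noise term covers slow drift. The function name, parameters, and sample data are illustrative choices, and a real system would usually track a multi-dimensional state with matrices.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a (nearly) constant state.

    measurements: noisy readings of the state
    meas_var:     variance of the measurement noise
    process_var:  variance added per step to allow for drift
    x0, p0:       initial estimate and its variance
    Returns (estimates, final_variance).
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state stays the same, but uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates, p


# Hypothetical noisy distance readings in metres
readings = [5.1, 4.9, 5.2, 4.8, 5.0, 5.1, 4.9]
estimates, final_var = kalman_1d(readings, meas_var=0.04, process_var=0.001, x0=5.0)
print(estimates[-1], final_var)
```

The gain decides how much to trust each new reading: it is high while the filter is still uncertain and settles down as the estimate firms up.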

Bibliography

Castanedo, Federico. 2013. “A Review of Data Fusion Techniques.” The Scientific World Journal 2013, no. 704504 (October): 19. https://doi.org/10.1155/2013/704504.

Durrant-Whyte, H.F. 1990. “Sensor Models and Multisensor Integration.” In Autonomous Robot Vehicles, 73-89. N.p.: Springer. https://doi.org/10.1007/978-1-4613-8997-2_7.

Galar, Diego, and Uday Kumar. 2017. “Chapter 1 - Sensors and Data Acquisition.” In eMaintenance: Essential Electronic Tools for Efficiency, 1-72. N.p.: Academic Press. https://doi.org/10.1016/B978-0-12-811153-6.00001-4.

Lundquist, Christian. 2011. “Sensor Fusion for Automotive Applications.” PhD diss., Linköping University Electronic Press.