
Tagged with

Edge AI

Organizations Shaping the Industry

Berkeley Artificial Intelligence Research

Research

The Berkeley Artificial Intelligence Research (BAIR) Lab brings together UC...


Latest Posts

webinar | JAN 15, 2025

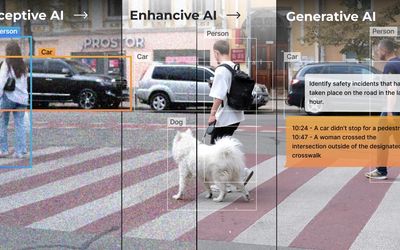

Join us for the webinar "The Rise of Generative AI at the Edge: From Data Centers to Devices" on Wednesday, January 15th.