SoftBank Robotics - Pepper

A humanoid robot designed to interact with humans.

Technical Specifications

| Specification | Value | Imperial |
| --- | --- | --- |
| Height | 120 cm | 47.2 in |
| Length | 42.5 cm | 16.7 in |
| Width | 48.5 cm | 19 in |
| Weight | 28 kg | 62 lb |
| Speed | 3 km/h | 1.7 mph |
| Sensors | Head with two HD 5-megapixel cameras (mouth and forehead), 3D sensor (behind eyes), four microphones, and three touch sensors. Torso with gyroscope. Hands with two touch sensors. Leg/mobile base with two sonars, six lasers, three bumper sensors, and a gyroscope. | |
| Actuators | 20 DC motors | |
| Power | 30-Ah lithium-ion battery, 12 hours of operation | |
| Computing | Intel Atom E3845 computer | |
| Speakers | Two speakers | |
| Tablet | 10.1-inch tablet on the robot's chest | |
| Connection | Bluetooth, Ethernet, Wi-Fi | |
| Software | NAOqi operating system. Choregraphe software development kit (SDK) and Pepper SDK for Android Studio. Support for Python, C++, Java, and JavaScript. ROS interface. | |
| Degrees of freedom (DoF) | 19 (Head: 2 DoF; Shoulder: 2 DoF x 2; Elbow: 2 DoF x 2; Wrist: 1 DoF x 2; Hand: 1 DoF x 2; Hip: 2 DoF; Knee: 1 DoF; Mobile base: 2 DoF) | |

Overview

Problem / Solution

Long-standing interest in robotics has driven research in artificial intelligence, and AI-enabled robots are becoming increasingly common. Robots are now used in the military, medicine, exploration, and even entertainment, all with the aim of making life easier. AI lets robots navigate independently, make decisions, and adapt to varying conditions. This creates a need for methods that handle navigation, interaction with people, and unexpected situations that demand complex semantic understanding.

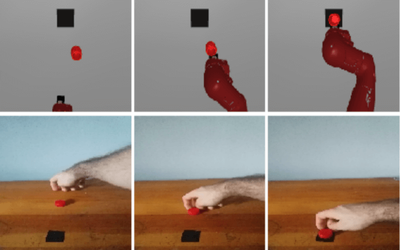

Friendly humanoid Pepper can serve as a companion in homes and assist customers in retail stores: it speaks, gestures, and is designed to make people smile. Pepper is a social robot that recognizes faces and basic human emotions. It can also interact with people and hold conversations, aided by its touch screen. Pepper is widely used in businesses and schools; more than 2000 companies have adopted it as an assistant for welcoming, informing, and guiding people.

Design

With its curvy design, Pepper is safe to be around and achieves a high level of user acceptance. Its 19 degrees of freedom enable natural and expressive movements. The head has 2 DoF; each shoulder and each elbow has 2 DoF; each wrist and each hand has 1 DoF; the hip has 2 DoF, the knee 1 DoF, and the mobile base 2 DoF. Pepper is driven by 20 DC motors and powered by a 30-Ah lithium-ion battery for approximately 12 hours of operation. Equipped with an Intel Atom E3845 computer, the robot displays information, highlights messages, and supports speech via the 10.1-inch tablet on its chest. It has two speakers and connects via Bluetooth, Ethernet, and Wi-Fi.
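The joint breakdown and battery figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the per-joint counts and battery values listed in the specifications; the variable names are illustrative, and the average-current figure assumes the full rated capacity is drawn evenly over the stated 12 hours, which is a simplification.

```python
# Degrees of freedom per joint group, taken from the specification table.
# Paired joints (left and right) are written as "per joint x 2".
DOF_PER_GROUP = {
    "head": 2,
    "shoulders": 2 * 2,
    "elbows": 2 * 2,
    "wrists": 1 * 2,
    "hands": 1 * 2,
    "hip": 2,
    "knee": 1,
    "mobile_base": 2,
}

total_dof = sum(DOF_PER_GROUP.values())

# Battery figures from the specification table.
BATTERY_CAPACITY_AH = 30.0
RUNTIME_HOURS = 12.0

# Assumption: the whole rated capacity is consumed evenly over the runtime.
average_current_a = BATTERY_CAPACITY_AH / RUNTIME_HOURS

print("Total DoF:", total_dof)
print("Average current draw (A):", average_current_a)
```

The tally matches the stated total of 19 DoF, and the battery figures imply an average draw of about 2.5 A under the even-discharge assumption.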

Pepper can recognize 15 languages, including but not limited to English, French, Spanish, German, Italian, Arabic, Japanese, and Dutch.

Sensors

At 120 cm tall, Pepper perceives its environment and starts a conversation when it notices a person. Touch sensors, LEDs, and microphones make multimodal interaction possible. The bumpers, infrared sensors, 2D and 3D cameras, and sonars allow for omnidirectional and autonomous navigation. The head has two HD 5-megapixel cameras (in the mouth and forehead), a 3D sensor behind the eyes, four microphones, and three touch sensors. The torso has a gyroscope, and the hands have two touch sensors. The leg/mobile base has two sonars, six lasers, three bumper sensors, and a gyroscope. With its perception modules, Pepper recognizes and interacts with people.

Software

Pepper runs on an open, programmable platform built on the NAOqi operating system. It supports Python, C++, Java, and JavaScript, and offers a ROS interface. Development tools include the Choregraphe software development kit (SDK) and the Pepper SDK for Android Studio. In addition to more than 20 software engines for awareness, motion, and dialogue, Pepper’s emotion engine attempts to interpret users’ feelings from facial expressions, voice tone, and speech, so that it can respond appropriately.
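As a rough illustration of the Python support, the sketch below asks the robot to speak through NAOqi's `ALTextToSpeech` module using the `ALProxy` class from the NAOqi Python SDK. The function name `say_text`, the `proxy_factory` parameter (added here so the function can be exercised without a robot), and the example IP address are assumptions for illustration; the NAOqi SDK must be installed separately, and 9559 is the usual NAOqi port.

```python
def say_text(robot_ip, text="Hello, I am Pepper.", port=9559, proxy_factory=None):
    """Ask the robot to speak `text` via NAOqi's ALTextToSpeech module.

    `proxy_factory` is a hypothetical hook for testing; when omitted,
    ALProxy from the NAOqi Python SDK is used.
    """
    if proxy_factory is None:
        # Imported lazily so the function can be defined without the SDK.
        from naoqi import ALProxy
        proxy_factory = ALProxy

    # Connect to the text-to-speech module running on the robot.
    tts = proxy_factory("ALTextToSpeech", robot_ip, port)
    tts.say(text)
    return text


# Example call (placeholder address; replace with the robot's actual IP):
# say_text("192.0.2.10", "Welcome to the store!")
```

Passing the proxy class as a parameter keeps the network dependency out of the core logic, which makes the function easy to try with a stand-in object before running it against a real robot.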
