Implementing AI in IoT Endpoints: Balancing Performance, Feasibility, and Data Constraints

Article 1 of the Bringing Intelligence to the Edge Series: With the introduction of AI, IoT devices can become more intelligent and less reliant on external systems, but not without trade-offs in performance and cost. Understanding how to weigh those trade-offs is key to deciding whether AI belongs on a given device.

This is the first article in a six-part series, "Bringing Intelligence to the Edge." The series looks at the transformative power of AI in embedded systems, with special emphasis on how advancements in AI, embedded vision, and microcontroller units are shaping the way we interact with technology across a myriad of applications. This series is sponsored by Mouser Electronics. Through its sponsorship, Mouser Electronics shares its passion and support for engineering advancements that enable a smarter, cleaner, safer manufacturing future.

Introduction

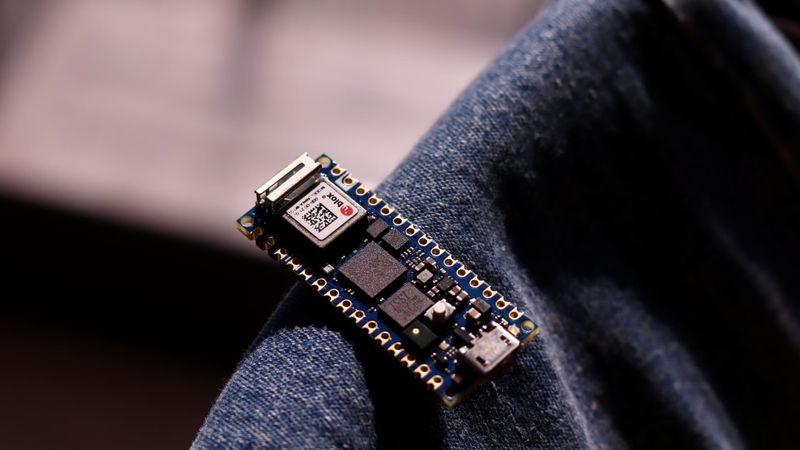

Artificial intelligence (AI) is rapidly transforming the ever-expanding Internet of Things (IoT) as manufacturers rush to embed the technology into their devices, from smart watches and home monitors to self-driving cars and manufacturing robots. Using AI in IoT endpoints enables powerful devices that can make decisions without outside assistance, but this capability comes with trade-offs.

To determine when AI should be introduced to IoT endpoints, design engineers should carefully assess the return on investment (ROI), evaluate the feasibility of the project within existing constraints, and consider the data requirements and any data engineering that may be needed. Deliberately weighing the costs and benefits of introducing AI to an IoT device helps ensure the project's success and maximizes the impact of smart devices in our increasingly digitized world.

This article examines the factors that design engineers must weigh when considering the integration of AI into IoT devices. It explores the performance trade-offs, the balance between improved intelligence and device performance, the feasibility of supporting AI within device constraints, and potential hurdles in data engineering. With a clearer understanding of these elements, engineers and stakeholders can make more informed decisions and implement AI in IoT endpoints successfully.

Making Smart Devices Smarter, but Not without Trade-Offs

AI is revolutionizing the way smart devices operate, enabling them to make decisions without relying on external systems. This brings several benefits. The data can stay on the device, which is safer and more private. The devices can also act on the data without needing immediate connectivity, which helps endpoints that have only sporadic access to a network connection.

However, this comes with trade-offs. Depending on their architecture and size, AI models can be slower to run and consume more power. This can be a major limiting factor at the edge: for machine learning (ML) to be effective in edge applications, a model must be small and fast enough to fit within the device’s memory, compute, and power budgets. Therefore, although AI-enabled devices can be more capable, engineers must weigh the trade-offs that come with them.
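To make "small enough" concrete, a rough estimate of how much storage a model's weights need at different numeric precisions can be compared against a memory budget. This is a minimal sketch under stated assumptions: the layer sizes, the 8 KiB budget, and the helper names are hypothetical, and the estimate counts parameters only, ignoring activations, runtime code, and the wider (often int32) biases that many int8 quantization schemes keep.

```python
# Hypothetical fully connected model: (inputs, outputs) for each dense layer.
LAYERS = [(64, 32), (32, 16), (16, 4)]

FLASH_BUDGET = 8 * 1024  # hypothetical bytes available for model weights


def param_count(layers):
    """Weights plus biases for a stack of dense layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in layers)


def weight_bytes(layers, bytes_per_param):
    """Parameter storage only; assumes every parameter uses the same width."""
    return param_count(layers) * bytes_per_param


for label, width in [("float32", 4), ("int8", 1)]:
    size = weight_bytes(LAYERS, width)
    verdict = "fits" if size <= FLASH_BUDGET else "does not fit"
    print(f"{label}: {size} bytes, {verdict} in {FLASH_BUDGET} bytes")
```

Under these assumptions, the float32 weights exceed the budget while the int8 version fits, which is one reason quantization is a common first step when targeting edge hardware.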

Balancing Improved Intelligence with Impact on Performance

Design engineers should consider the ROI when deciding whether to add AI to an IoT endpoint. Before committing to embedding ML in an endpoint, the team should define and quantify the expected value as precisely as possible. Doing so not only shows how the costs stack up against the expected gains but also informs the project's success criteria (e.g., model accuracy or some other metric). This makes it easier to determine whether the value of the AI outweighs its impact on the device’s performance.
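A minimal sketch of quantifying that value, assuming a simple per-device model in which ROI is (benefit - cost) / cost. The cost categories, the figures, and the `AiCostModel` name are hypothetical placeholders; a real analysis would use the project's own estimates and time horizon.

```python
from dataclasses import dataclass


@dataclass
class AiCostModel:
    # All figures are hypothetical, per device over its service life.
    expected_benefit: float  # e.g., value of avoided failures or service calls
    added_hardware: float    # extra BOM cost for a more capable MCU or accelerator
    added_energy: float      # extra energy or battery-replacement cost
    dev_cost_share: float    # development and maintenance cost amortized per device

    def total_cost(self) -> float:
        return self.added_hardware + self.added_energy + self.dev_cost_share

    def roi(self) -> float:
        """Return on investment: (benefit - cost) / cost."""
        cost = self.total_cost()
        return (self.expected_benefit - cost) / cost


model = AiCostModel(expected_benefit=30.0, added_hardware=4.0,
                    added_energy=2.0, dev_cost_share=6.0)
print(f"cost = {model.total_cost():.2f}, ROI = {model.roi():.0%}")
```

Writing the estimate down this way also makes it easy to see which assumption (for example, the expected benefit) the decision is most sensitive to.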

In most cases, ML algorithms require more computing power and more energy, which can shorten the device’s battery life or noticeably increase its latency. If the device is already running at maximum capacity, the ML algorithms may not run at a satisfactory level. Design engineers should therefore examine the device’s use case carefully to determine whether AI would be beneficial without placing too much additional strain on the device’s resources. For devices with constant access to a power source, the added cost of on-device computation becomes more of a concern than battery life.
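The battery-life impact can be estimated with duty-cycle arithmetic: average current is the time-weighted mix of active and sleep current, and idealized battery life is capacity divided by that average. The sketch below assumes hypothetical figures (a 1000 mAh battery, one wake-up per second, 10 mA active, 10 µA asleep, roughly 20 ms of extra active time per inference) and that inference draws the same current as the device's other active work.

```python
def average_current_ma(active_ma, active_ms, sleep_ma, period_ms):
    """Duty-cycled average current over one wake period."""
    sleep_ms = period_ms - active_ms
    return (active_ma * active_ms + sleep_ma * sleep_ms) / period_ms


def battery_life_hours(capacity_mah, avg_ma):
    """Idealized life: ignores self-discharge, temperature, and voltage cutoff."""
    return capacity_mah / avg_ma


CAPACITY_MAH = 1000.0          # hypothetical battery
PERIOD_MS = 1000.0             # device wakes once per second
ACTIVE_MA, SLEEP_MA = 10.0, 0.01

baseline = average_current_ma(ACTIVE_MA, 5.0, SLEEP_MA, PERIOD_MS)   # read sensor and process
with_ml = average_current_ma(ACTIVE_MA, 25.0, SLEEP_MA, PERIOD_MS)   # plus ~20 ms of inference

for label, avg in [("baseline", baseline), ("with ML", with_ml)]:
    hours = battery_life_hours(CAPACITY_MAH, avg)
    print(f"{label}: {avg:.4f} mA average, about {hours:.0f} h")
```

With these numbers, the extra inference time raises average current by more than a factor of four and cuts idealized battery life by the same factor, which illustrates why inference latency and energy matter so much on battery-powered endpoints.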

In addition to evaluating ROI versus device performance, design engineers should examine whether the task can be achieved with ML at all. Given the hype around AI, it is tempting to apply it to every use case without thorough investigation.

However, researching the prevailing methods in the field, as well as the applications where ML has yet to deliver satisfactory solutions, can reveal potential issues before any work begins on adding AI to IoT endpoints.

At this point, design engineers should also determine whether the desired capability can be achieved without ML and whether the use case is better suited to an AI-enabled device or a simpler alternative. Typically, the first step in this process is to review the literature and identify which methods have been used successfully before. Then, using that information as a guide, build a baseline proof of concept using simple, rule-based approaches or less sophisticated algorithmic implementations. This baseline shows how well a non-ML solution might work; if it does not meet the success criteria, progressing to an ML-based solution may be in order.
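As an illustration of the baseline step, the sketch below evaluates a rule-based detector (flag a vibration window when its RMS amplitude exceeds a fixed threshold) on a handful of labeled windows and checks the result against a success criterion. The data, threshold, and 95% target are hypothetical; in practice the criterion would come from the ROI analysis and the evaluation set would be far larger.

```python
import math


def rms(window):
    """Root-mean-square amplitude of one window of sensor samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def rule_based_detector(window, threshold=1.0):
    """Flag a window as anomalous when its RMS exceeds a fixed threshold."""
    return rms(window) > threshold


# Hypothetical labeled sensor windows: (samples, is_anomaly)
LABELED = [
    ([0.1, -0.2, 0.15, -0.1], False),
    ([0.3, -0.25, 0.2, -0.3], False),
    ([1.5, -1.4, 1.6, -1.5], True),
    ([0.9, -1.2, 1.1, -1.0], True),
    ([1.1, -1.0, 1.2, -1.1], False),  # heavy but normal load: hard for a fixed rule
]


def accuracy(detector, data):
    correct = sum(detector(window) == label for window, label in data)
    return correct / len(data)


TARGET_ACCURACY = 0.95  # hypothetical success criterion
baseline_acc = accuracy(rule_based_detector, LABELED)
print(f"baseline accuracy: {baseline_acc:.2f}")
if baseline_acc >= TARGET_ACCURACY:
    print("Rule-based baseline meets the target; ML may be unnecessary.")
else:
    print("Baseline falls short; an ML approach may be worth evaluating.")
```

Here the fixed threshold misclassifies the heavy-but-normal window, so the baseline reaches 80% accuracy and misses the target; a gap like that is what would justify moving on to an ML-based solution.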

Assessing Feasibility among All the Constraints

Assuming that the value expected from introducing ML outweighs the costs and potential performance impacts, the next step in the decision-making process is to assess whether the full ML lifecycle is feasible within the current technical or physical constraints of the devices. IoT endpoints that are not normally Wi-Fi enabled and that produce vast quantities of unlabeled data, stored at the edge for only short periods, will score much lower on the feasibility scale than those with cloud access, where data can be pooled and annotated more easily and compute power is more readily available.
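One way to make the feasibility scale explicit is a weighted checklist of ML-lifecycle requirements. The sketch below is hypothetical: its criteria mirror the constraints discussed in this section, but the weights and the `EndpointProfile` fields are illustrative choices, not an established scoring standard.

```python
from dataclasses import dataclass


@dataclass
class EndpointProfile:
    has_connectivity: bool           # regular Wi-Fi or other link to the cloud
    data_can_be_pooled: bool         # no privacy, storage, or connectivity barrier to central training
    data_is_labeled: bool            # annotations exist or are cheap to obtain
    data_retained_long_enough: bool  # raw data kept long enough to train and monitor
    can_push_updates: bool           # new model versions can be deployed to the device


# Hypothetical weights in points out of 100; a real team would set its own.
WEIGHTS = {
    "has_connectivity": 25,
    "data_can_be_pooled": 25,
    "data_is_labeled": 20,
    "data_retained_long_enough": 15,
    "can_push_updates": 15,
}


def feasibility_score(profile: EndpointProfile) -> int:
    """Sum the points for each lifecycle requirement the endpoint satisfies (0 to 100)."""
    return sum(points for name, points in WEIGHTS.items() if getattr(profile, name))


offline_sensor = EndpointProfile(False, False, False, False, False)
cloud_connected = EndpointProfile(True, True, False, True, True)

print("offline sensor:", feasibility_score(offline_sensor))
print("cloud-connected device:", feasibility_score(cloud_connected))
```

The value of such a checklist lies less in the exact number than in forcing each lifecycle question (training, labeling, monitoring, updating) to be answered explicitly before committing.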

In particular, because an ML model must be trained on enough data to learn from, how and where that training will happen is a key aspect of feasibility. For example, putting ML in an endpoint, though not impossible, is significantly less feasible if the data are distributed across devices and cannot be pooled for training because of privacy, storage, or connectivity constraints. In these cases, training could occur in a federated fashion, with each device training locally and sharing only model updates, but the cost and complexity of doing so could outweigh the benefits.
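For reference, the most widely described federated approach, federated averaging (FedAvg), has each device train on its own data and send only model parameters to a server, which averages them weighted by each device's sample count. The sketch below applies it to a toy linear model; the datasets, learning rate, and round counts are hypothetical, and a real deployment would add secure communication, device selection, and handling for devices that drop out.

```python
def local_train(weights, data, lr=0.05, epochs=20):
    """Gradient descent on one device's private (x, y) pairs for the model y ~ w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates):
    """FedAvg step: average device models, weighted by each device's sample count."""
    total = sum(n for _, n in updates)
    w = sum(model[0] * n for model, n in updates) / total
    b = sum(model[1] * n for model, n in updates) / total
    return (w, b)


# Hypothetical per-device datasets that never leave their devices (true relation: y = 2x + 1)
DEVICES = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(0.5, 2.0), (1.5, 4.0)],
    [(2.5, 6.0), (3.0, 7.0), (0.2, 1.4), (1.2, 3.4)],
]

global_model = (0.0, 0.0)
for _ in range(50):  # communication rounds
    # Each device starts from the current global model and trains locally;
    # only (model, sample_count) pairs are sent to the server.
    updates = [(local_train(global_model, data), len(data)) for data in DEVICES]
    global_model = federated_average(updates)

print(f"w = {global_model[0]:.2f}, b = {global_model[1]:.2f}")
```

Because every device's data follow the same relationship here, the averaged model converges to roughly w = 2, b = 1. With data that differ substantially between devices, convergence is typically slower and less stable, which is part of the cost and complexity mentioned above.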

The impact of these constraints often extends beyond the initial data annotation and model training phases of the ML lifecycle. Model performance monitoring and updates are key: if performance declines, the model must be retrained on new data, and connectivity is needed to push the new version to devices. Engineers should plan to monitor both the model and the data, so assessing feasibility includes determining whether drift between the training data and newly measured data can be detected. For example, monitoring is more difficult for endpoints that store data only briefly, which may affect the final decision about whether to introduce ML.
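When raw data are kept only briefly, one option is to summarize readings on the device as they arrive and compare that summary with the training distribution. The sketch below keeps a streaming histogram of bin counts and computes the Population Stability Index (PSI), a common drift metric. The bin edges, training proportions, readings, and the 0.25 threshold (a frequently cited rule of thumb) are assumptions for illustration.

```python
import bisect
import math


class StreamingHistogram:
    """Keeps only bin counts, so each raw reading can be discarded after it is added."""

    def __init__(self, edges):
        self.edges = sorted(edges)                 # interior bin boundaries
        self.counts = [0] * (len(self.edges) + 1)

    def add(self, value):
        # bisect_right places a value equal to an edge in the bin above that edge
        self.counts[bisect.bisect_right(self.edges, value)] += 1

    def proportions(self):
        total = sum(self.counts)
        return [c / total for c in self.counts]


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions of equal length."""
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)            # guard against log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score


EDGES = [20.0, 25.0, 30.0]                   # hypothetical temperature bin edges (deg C)
TRAINING_PROPORTIONS = [0.1, 0.4, 0.4, 0.1]  # bin shares recorded from the training data

hist = StreamingHistogram(EDGES)
for reading in [26, 27, 31, 32, 33, 29, 31, 34, 28, 35]:  # hypothetical field readings
    hist.add(reading)  # the reading itself need not be stored afterwards

score = psi(TRAINING_PROPORTIONS, hist.proportions())
# Rule of thumb (assumption): PSI above about 0.25 suggests significant drift
print(f"PSI = {score:.3f}:", "drift detected" if score > 0.25 else "no major drift")
```

Only four integer counts need to persist between checks, so this kind of monitoring can work even when the raw readings are discarded after processing.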

Addressing the Age-Old Question of Data

Regardless of the final application, one of the most common questions when deciding whether to embark on an ML project is whether enough data exist. This goes hand in hand with labeling requirements: for example, whether the task requires annotated data for a supervised training approach and whether the data are currently in the required state. In the IoT context, if the data cannot be pooled in the cloud, design engineers must determine whether enough data exist on each device for the task at hand and whether they need to be labeled. Data quality is essential for successful ML projects, so engineers should also evaluate whether the noise and heterogeneity of the sensor data can be managed to an acceptable standard.
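A per-device check of data volume and labeling can be sketched as follows, assuming readings arrive as `(device_id, label)` pairs with `None` marking unlabeled data. The `sufficiency_report` helper, the class names, and the 50-example minimum are hypothetical; the appropriate minimum depends heavily on the task and the model.

```python
from collections import Counter, defaultdict


def sufficiency_report(samples, classes, min_per_class):
    """Per device: fraction of readings that are labeled, and the classes short of data.

    samples: iterable of (device_id, label) pairs; label is None for unlabeled readings.
    """
    per_device = defaultdict(list)
    for device_id, label in samples:
        per_device[device_id].append(label)

    report = {}
    for device_id, labels in per_device.items():
        counts = Counter(lbl for lbl in labels if lbl is not None)
        labeled_fraction = sum(counts.values()) / len(labels)
        short = sorted(c for c in classes if counts[c] < min_per_class)
        report[device_id] = (labeled_fraction, short)
    return report


# Hypothetical data: device A has plenty of labels, device B is mostly unlabeled.
SAMPLES = (
    [("A", "normal")] * 120 + [("A", "fault")] * 60
    + [("B", "normal")] * 40 + [("B", None)] * 400
)

report = sufficiency_report(SAMPLES, ["normal", "fault"], min_per_class=50)
for device, (fraction, short) in report.items():
    status = "sufficient" if not short else f"short on {short}"
    print(f"{device}: {fraction:.0%} labeled, {status}")
```

Device B illustrates the case the text warns about: plenty of raw data, but too few labels per class to train on that device alone.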

Data engineering depends on the device configuration and how the data are stored. Proper data engineering is essential to the success and accuracy of any ML project, and IoT applications are no exception. It includes understanding the format, type, and quality of the data, as well as how the data can be accessed and cleaned (e.g., granting access for initial data exploration and deploying processes that transform the data into a usable form). Data engineering is typically performed by data engineers, who need access to the data they work with; where access is not permitted because of device location, lack of connectivity, or privacy concerns, the project's chances of success may decrease.
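As a small example of transforming raw data into a usable form, the sketch below harmonizes readings from two hypothetical sensor models that report temperature in different units, drops missing values, and suppresses isolated spikes with a median filter. The record format and filter width are assumptions; a real pipeline would also need to handle timestamps, sampling rates, and calibration.

```python
import statistics

# Hypothetical raw records from two sensor models that report temperature differently.
RAW = [
    {"device": "s1", "temp_c": 21.5},
    {"device": "s1", "temp_c": None},   # dropped reading
    {"device": "s2", "temp_f": 71.6},
    {"device": "s1", "temp_c": 85.0},   # isolated spike, likely noise
    {"device": "s2", "temp_f": 72.5},
    {"device": "s1", "temp_c": 22.0},
]


def to_celsius(record):
    """Normalize heterogeneous formats into one unit; return None if unusable."""
    if record.get("temp_c") is not None:
        return record["temp_c"]
    if record.get("temp_f") is not None:
        return (record["temp_f"] - 32.0) * 5.0 / 9.0
    return None


def median_filter(values, k=3):
    """Replace each point with the median of its k-wide neighborhood (truncated at the ends)."""
    half = k // 2
    out = []
    for i in range(len(values)):
        window = values[max(0, i - half): i + half + 1]
        out.append(statistics.median(window))
    return out


readings = [t for t in (to_celsius(r) for r in RAW) if t is not None]
cleaned = median_filter(readings)
print([round(t, 1) for t in cleaned])
```

A median filter is used here rather than a moving average because a single extreme value shifts an average but usually does not shift a median, so the spike is removed instead of smeared across neighboring points.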

Conclusion

When deciding whether to introduce AI to IoT endpoints, design engineers must take a variety of considerations into account: assessing the expected value of the AI against its cost and impact on device performance, evaluating the feasibility of the ML lifecycle within existing constraints, and understanding the data requirements and any data engineering that might be needed. With the right approach and research, design engineers can make an informed decision that weighs the costs and benefits of introducing AI to an IoT device, helping to ensure the success of the project.

This article is based on the e-magazine Bringing Intelligence to the Edge by Mouser Electronics and Renesas Electronics Corporation. It has been substantially edited by the Wevolver team and electrical engineer Ravi Y Rao. It is the first article in the Bringing Intelligence to the Edge series. Future articles will introduce readers to more of the trends and technologies shaping the future of edge AI.

This introductory article unveils the "Bringing Intelligence to the Edge" series, exploring the transformative potential of AI at the edge.

The first article examines the challenges and trade-offs of integrating AI into IoT devices, emphasizing the importance of balancing performance, ROI, feasibility, and data considerations for successful implementation.

The second article explores the role of endpoint AI and embedded vision in technology applications, discussing their potential, their challenges, and advances in processing data at the source.

The third article examines TinyML, emphasizing its potential in edge computing and highlighting four crucial metrics (accuracy, power consumption, latency, and memory requirements) that influence its development and optimization.

The fourth article covers data science and AI-driven real-time analytics, showing how AI's precision and efficiency in processing big data in real time, and in recognizing patterns and inconsistencies, are transforming industries.

The fifth article looks at the integration of voice user interface technology into microcontroller units and its transformative potential.

The sixth article discusses the impact of edge AI on system optimization, maintenance, and anomaly detection across diverse industries.

About the sponsor: Mouser Electronics

Mouser Electronics is a worldwide leading authorized distributor of semiconductors and electronic components for over 1,200 manufacturer brands. They specialize in the rapid introduction of new products and technologies for design engineers and buyers. Their extensive product offering includes semiconductors, interconnects, passives, and electromechanical components.