Bringing Intelligence to the Edge Series

Introducing the Bringing Intelligence to the Edge Series: Exploring how Artificial Intelligence is moving to embedded systems, transforming technology across various applications.

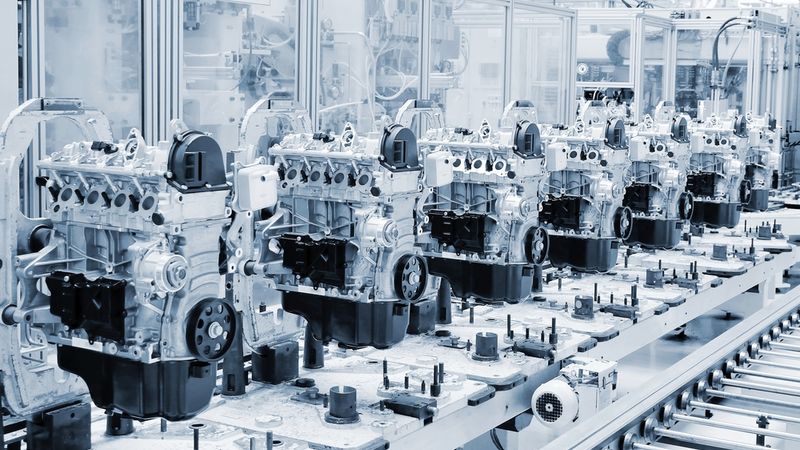

This is the introductory article to a six-part series on "Bringing Intelligence to the Edge". The series looks at the transformative power of AI in embedded systems, with special emphasis on how advances in AI, embedded vision, and microcontroller units are shaping the way we interact with technology across a wide range of applications. This series is sponsored by Mouser Electronics. Through its sponsorship, Mouser Electronics shares its passion and support for engineering advancements that enable a smarter, cleaner, safer manufacturing future.

The evolution of Artificial Intelligence (AI) has primarily been fueled by the power of centralized computing. Running complex algorithms and training large models on extensive datasets are tasks that have traditionally been delegated to powerful servers in massive data centers. But as billions of connected devices generate an unprecedented volume of data, a compelling question comes into the spotlight:

Should we always rely on the cloud for processing this data, or is there a more efficient way?

This question brings us, quite literally, to the edge of a new frontier in AI: Artificial Intelligence at the Edge.

We are excited to unveil our brand-new series, "Bringing Intelligence to the Edge," sponsored by Mouser Electronics, which dives into the fascinating realm of AI and embedded systems. Over the course of six articles, we will explore how this intersection is transforming technology across various applications.

What is AI at the Edge?

At its core, AI at the Edge is about shifting data processing from the cloud to the devices that generate the data: the "edge" devices. These could be anything from your smartphone to a drone, a self-driving car, or a sensor in a vast Internet of Things (IoT) network. With edge AI, the raw data produced by these devices is processed locally, which reduces how much data must be transmitted and, consequently, both latency and bandwidth usage.
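
To give a rough sense of the bandwidth savings, the short Python sketch below compares streaming uncompressed camera frames to the cloud with sending only small inference results from the device. The resolution, frame rate, and 100-byte message size are hypothetical values chosen for illustration. Real systems usually compress video, so the gap in practice is smaller than this worst case.

```python
# Hypothetical figures for illustration only: a 640x480 RGB camera at 30 fps,
# versus an edge device that sends one 100-byte event message per second
# after running inference locally.

def raw_video_bytes_per_second(width: int, height: int, channels: int, fps: int) -> int:
    """Uncompressed video bandwidth: every byte of every frame is transmitted."""
    return width * height * channels * fps

def event_bytes_per_second(message_bytes: int, messages_per_second: float) -> float:
    """Bandwidth when only inference results leave the device."""
    return message_bytes * messages_per_second

raw = raw_video_bytes_per_second(640, 480, 3, 30)
events = event_bytes_per_second(100, 1.0)

print(f"cloud (raw frames): {raw / 1e6:.1f} MB/s")
print(f"edge (events only): {events:.0f} B/s")
print(f"reduction factor:   {raw / events:,.0f}x")
```

With these assumed numbers, raw frames need about 27.6 MB/s, while the event stream needs 100 B/s.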

Driving Factors for Edge AI

The shift towards Edge AI is not a random trend but the result of deliberate and compelling reasons. One of these is growing concern about data privacy. With traditional cloud-based AI models, data must be transmitted to remote servers for processing. Moving and storing data outside the local device or network inherently increases the risk of privacy breaches, especially when sensitive data is involved. By processing data directly on the edge device, Edge AI offers a significant advantage: raw data can stay local instead of being sent to a remote server. This reduces the opportunities for unwanted access and data breaches.

Yet privacy is not the only factor fueling the move towards Edge AI. Latency, the delay between capturing data and acting on the result, is critical in many applications. Imagine a self-driving car navigating traffic or a robotic surgeon performing a delicate procedure: in these situations, near-instantaneous data processing is not just desirable but essential. Sending data to the cloud and waiting for a response adds a network round trip whose duration depends on connectivity, which is often unacceptable in such time-critical scenarios. Here again, Edge AI has the advantage, providing the low, predictable latency that is critical to the safe and effective operation of these applications.
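
A quick back-of-the-envelope calculation shows why delay matters for a moving vehicle. The sketch below computes how far a car travels while waiting for a decision. The 150 ms cloud round trip and 20 ms on-device inference time are assumed figures for illustration, not measurements; real latencies vary widely with the network, the model, and the hardware.

```python
# Assumed, illustrative delays; not measured values.

def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres a vehicle travels while waiting delay_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * delay_ms / 1000   # ms -> s

for label, delay_ms in [("cloud round trip (assumed)", 150.0),
                        ("on-device inference (assumed)", 20.0)]:
    metres = distance_during_delay(100.0, delay_ms)
    print(f"{label:30s} {delay_ms:6.0f} ms -> {metres:.2f} m at 100 km/h")
```

Under these assumptions, the car covers roughly 4.17 m during the cloud round trip versus about 0.56 m with local inference.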

Challenges of Implementing AI at the Edge

Despite the clear advantages of Edge AI, the path to wide-scale implementation is not without hurdles. One of the foremost challenges lies in the constraints of edge devices themselves, which are often limited in processing power, memory, and energy. Running resource-intensive deep learning models on such devices, let alone training them there, can therefore be a complex task.
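
Memory limits can be made concrete with simple arithmetic: the weights alone occupy roughly (number of parameters × bits per parameter / 8) bytes. In the sketch below, the 250,000-parameter model and the 512 KB memory budget are hypothetical values, and the estimate ignores activations, code, and runtime overhead. The lower bit widths anticipate the quantization technique discussed later in this article.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Approximate storage for the weights alone (ignores activations and overhead)."""
    return num_params * bits_per_param // 8

# Hypothetical example values, chosen only to illustrate the arithmetic.
NUM_PARAMS = 250_000
MEMORY_BUDGET = 512 * 1024  # bytes available for the model (assumed)

for bits in (32, 16, 8):
    size = model_size_bytes(NUM_PARAMS, bits)
    verdict = "fits" if size <= MEMORY_BUDGET else "does not fit"
    print(f"{bits:2d}-bit weights: {size / 1024:7.1f} KB -> {verdict} "
          f"in {MEMORY_BUDGET // 1024} KB")
```

With these assumptions, the 32-bit model (about 976.6 KB) exceeds the budget, while the 16-bit (about 488.3 KB) and 8-bit (about 244.1 KB) versions fit.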

Beyond that, there's the issue of model efficiency. Given the resource constraints of edge devices, AI models need to be designed with efficiency in mind. Techniques to compress these models, while retaining their functionality and performance, become crucial.

Finally, device management and security at scale are not trivial concerns. With a multitude of distributed Edge AI devices, managing the network efficiently and delivering timely firmware updates and bug fixes are all challenges that must be addressed. Moreover, edge devices are often physically accessible, which makes them more susceptible to physical attacks than servers in a data center and raises the need for robust, comprehensive cybersecurity measures.

Overcoming the Challenges: Techniques and Strategies

Addressing the challenges of implementing AI at the edge necessitates ingenious techniques and strategies. Some of the key methodologies developed and honed for this purpose include model pruning, quantization, and knowledge distillation.

One way to think of model pruning is as carving a sculpture from a block of stone: the goal is to remove the excess without compromising the final form. Similarly, model pruning eliminates parameters that contribute little to a model's output. This reduces the model's size and can reduce its computational demands, making it more compatible with resource-constrained edge devices.
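
Magnitude pruning is one common way to decide which parameters "contribute little": it assumes the weights closest to zero matter least. The Python sketch below applies that criterion to a flat list of weights. The function name, the example weights, and the 50% sparsity target are illustrative assumptions, not a specific framework's API. Zeroed weights only save memory or computation when the runtime stores and executes the model sparsely, or when pruning removes whole neurons or channels.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Unstructured magnitude pruning: weights with the smallest absolute values
    are assumed to contribute least and are set to 0.0.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:num_to_prune])
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]

# Hypothetical weights for a tiny layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, -0.4, 0.1]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, -0.4, 0.0]
```

In practice, a pruned model is typically fine-tuned afterwards to recover some of the lost accuracy.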

Quantization reduces the precision of the numerical values used within a model. By using lower-precision numbers (for example, 8-bit or 16-bit integers instead of 32-bit floating-point numbers), we can decrease the size of the model and speed up its computations. In many cases this reduction in precision has only a small impact on model accuracy, which makes quantization a valuable tool for optimizing AI models for the edge.
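
The sketch below illustrates one common scheme, affine (asymmetric) quantization, which maps a range of floats onto signed 8-bit integers through a scale and a zero point. It is a simplified, per-tensor illustration: the function names and example weights are hypothetical, and production toolchains add details such as calibration data and per-channel scales.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Affine quantization of floats to signed 8-bit integers.

    Maps the observed range onto [-128, 127] using
    q = round(x / scale) + zero_point, clamped to the int8 range.
    """
    qmin, qmax = -128, 127
    lo = min(min(values), 0.0)  # the range must include 0 so that 0.0 is exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero input
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point

def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Approximate reconstruction: x ≈ scale * (q - zero_point)."""
    return [scale * (v - zero_point) for v in q]

# Hypothetical weights for a tiny layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, -0.4, 0.1]
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.5f} zero_point={zp} max_error={max_error:.5f}")
print(f"storage: {4 * len(weights)} bytes as float32 -> {len(q)} bytes as int8")
```

Each weight now occupies one byte instead of four, and the reconstruction error stays on the order of the scale (here about 0.0055).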

Knowledge distillation is an approach in which a smaller, more resource-efficient model (often called the "student") is trained to emulate the behavior of a larger, more complex model (the "teacher"). The objective is a compact model that retains much of the larger model's performance while requiring significantly fewer resources, which is ideal for deployment on edge devices.
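
Distillation is often implemented by training the student on a blend of two losses: a soft-target term that matches the teacher's temperature-softened output distribution, and an ordinary cross-entropy term on the true label. The sketch below follows that widely used formulation, including the T² factor that keeps the soft-target term's gradient scale comparable across temperatures. The temperature of 4.0, the weighting `alpha` of 0.5, and the example logits are illustrative assumptions.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Softmax with temperature; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      label: int, temperature: float = 4.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Soft-target term: how far the student's softened outputs are from the teacher's.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Hard-target term: standard cross-entropy against the true class.
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard_ce

# Hypothetical logits for a 3-class problem where class 0 is correct.
teacher = [6.0, 2.0, 1.0]
student_close = [5.5, 2.2, 0.8]
student_far = [1.0, 5.0, 2.0]
print(distillation_loss(student_close, teacher, label=0))
print(distillation_loss(student_far, teacher, label=0))
```

The student whose logits resemble the teacher's receives the lower loss, so minimizing this objective pushes the student toward the teacher's behavior.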

These techniques, among others, play a crucial role in making AI at the edge not just a theoretical concept but a practical reality across industries and applications. The upcoming articles in this series will explore more of these methodologies and their real-world applications.

Conclusion

As we move towards an increasingly interconnected future, the need for secure, efficient, and fast data processing becomes paramount. AI at the Edge is a promising answer to these growing demands. It pushes us to rethink the structure of our AI models, moving away from the comfort of the cloud and venturing towards the edge. While challenges persist, advances in techniques like pruning, quantization, and knowledge distillation are closing the gap, making edge AI more viable each day. The road ahead is complex, but one thing is clear: the future of AI lies not only in the cloud but also at the edge, where data is born.

This is the introductory article in the Bringing Intelligence to the Edge Series. Future articles will introduce readers to more of the trends and technologies shaping the future of Edge AI.

This introductory article unveils the "Bringing Intelligence to the Edge" series, exploring the transformative potential of AI at the Edge.

The first article examines the challenges and trade-offs of integrating AI into IoT devices, emphasizing the importance of balancing performance, ROI, feasibility, and data considerations for successful implementation.

The second article explores the transformative role of Endpoint AI and embedded vision in technology applications, discussing their potential, their challenges, and the advances in processing data at the source.

The third article examines the intricacies of TinyML, emphasizing its potential in edge computing and highlighting four crucial metrics (accuracy, power consumption, latency, and memory requirements) that influence its development and optimization.

The fourth article turns to data science and AI-driven real-time analytics, showing how AI's precision and efficiency in processing big data in real time are transforming industries by recognizing patterns and inconsistencies.

The fifth article covers the integration of voice user interface technology into microcontroller units, emphasizing its transformative potential.

The sixth article explores the profound impact of edge AI on system optimization, maintenance, and anomaly detection across diverse industries.

About the sponsor: Mouser Electronics

Mouser Electronics is a leading worldwide authorized distributor of semiconductors and electronic components for over 1,200 manufacturer brands. It specializes in the rapid introduction of new products and technologies for design engineers and buyers. Its extensive product offering includes semiconductors, interconnects, passives, and electromechanical components.