Four Metrics You Must Consider When Developing TinyML Systems

Article 3 of Bringing Intelligence to the Edge Series: Balancing the critical metrics of accuracy, power consumption, latency, and memory requirements is key to unlocking the potential of Tiny Machine Learning (TinyML) in low-power microcontrollers and edge computing.

This is the third article in a 6-part series featuring articles on "Bringing Intelligence to the Edge". The series looks at the transformative power of AI in embedded systems, with special emphasis on how advancements in AI, embedded vision, and microcontroller units are shaping the way we interact with technology in a myriad of applications. This series is sponsored by Mouser Electronics. Through their sponsorship, Mouser Electronics shares its passion and support for engineering advancements that enable a smarter, cleaner, safer manufacturing future.

In the rapidly evolving landscape of Artificial Intelligence (AI), the emergence of Tiny Machine Learning (TinyML) signals an exciting shift towards efficient, localized computation. The field has the potential to revolutionize edge computing, enabling AI functionality on low-power, resource-constrained devices.

The successful development of TinyML systems hinges on the consideration and optimization of four critical metrics: accuracy, power consumption, latency, and memory requirements. Given the specific challenges and objectives intrinsic to TinyML, an in-depth comprehension and strategic management of these parameters are key to realizing a robust TinyML system.

This article explores these metrics: why each one matters, how they interact, and how they can be managed effectively during TinyML system development.

Understanding the Four Key Metrics in TinyML System Development

Recent advances in machine learning (ML) have split the field into two scales: traditional large-scale ML (cloud ML), where models keep growing to achieve the best possible accuracy, and the nascent field of tiny machine learning (TinyML), where models are shrunk to fit into constrained devices and run at ultra-low power.

Before going further into the characteristics and challenges of TinyML, it is worth taking a closer look at the four metrics that drive the development and optimization of TinyML applications. Each plays a distinct role in shaping the performance and feasibility of these systems. The discussion that follows explores how these metrics are balanced in TinyML and how the field is innovating to operate effectively within such demanding constraints.

Accuracy has been the main performance metric for ML models over the last decade, with larger models tending to outperform their smaller predecessors. Accuracy is also critical in TinyML systems, but it has to be balanced against the other metrics far more carefully than in cloud ML.

Power consumption is a critical consideration because TinyML systems are expected to run for prolonged periods on batteries, with power budgets typically on the order of milliwatts. The power a TinyML model draws depends on the hardware instruction sets available. For example, an Arm® Cortex®-M85 processor is significantly more energy efficient than an Arm Cortex-M7 processor, thanks to the Helium instruction set. It also depends on the software used to run the model (i.e., the inference engine); for example, using the CMSIS-NN library improves performance drastically compared with reference kernels.

Latency is important as well. Because TinyML systems run inference at the endpoint and do not require cloud connectivity, they avoid the network round trip, so their response times are significantly better than those of cloud-based systems. Furthermore, in some use cases, inference latency on the order of milliseconds is critical for production readiness. Like power consumption, latency depends on the underlying hardware and software.
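Power and latency are linked through energy: a device that wakes up, runs one inference, and goes back to sleep spends roughly active power times latency on each inference. The sketch below estimates average power and battery life for such a duty-cycled device. The function names, the simple wake/sleep model, and all numbers are assumptions made for illustration; they are not measurements of any particular microcontroller.

```python
def average_power_mw(active_mw, sleep_mw, latency_ms, period_ms):
    """Average power when the device wakes once per period, runs one
    inference lasting latency_ms, and sleeps for the rest of the period."""
    if not 0 < latency_ms <= period_ms:
        raise ValueError("latency_ms must be positive and no longer than period_ms")
    duty_cycle = latency_ms / period_ms
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


def energy_per_inference_uj(active_mw, latency_ms):
    """Energy of a single inference in microjoules (mW * ms = uJ)."""
    return active_mw * latency_ms


def battery_life_hours(capacity_mah, voltage_v, avg_power_mw):
    """Idealized battery life: ignores self-discharge, regulator losses,
    and reduced usable capacity at low temperature or high current."""
    return (capacity_mah * voltage_v) / avg_power_mw


# Hypothetical numbers, for illustration only.
avg = average_power_mw(active_mw=30.0, sleep_mw=0.03, latency_ms=20.0, period_ms=1000.0)
print(f"average power: {avg:.3f} mW")
print(f"battery life:  {battery_life_hours(220.0, 3.0, avg):.0f} h")
```

Under this simplified model, when sleep power is negligible, halving the inference latency at the same active power roughly halves the average power, which is one reason faster kernels and instruction sets also tend to save energy.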

Memory is a big hurdle in TinyML, as designers squeeze ML models into size-constrained microcontrollers (often with less than 1 MB). Reducing memory requirements is therefore a persistent challenge, and techniques such as pruning and quantization are widely used during model development. The underlying software also plays a large role, as better inference engines optimize models more effectively through better memory management and optimized libraries for executing layers.
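To make the effect of quantization concrete, the sketch below maps floating-point weights to 8-bit integers and compares the storage needed. It uses a common affine (scale and zero-point) int8 scheme as an assumption; the article does not specify which quantization scheme is meant, and production toolchains add per-channel scales, calibration data, and other refinements not shown here. All names and values are hypothetical.

```python
def quantize_int8(values):
    """Affine quantization of a list of floats to int8.
    Returns the quantized integers plus the scale and zero point
    needed to map them back to approximate float values."""
    lo = min(min(values), 0.0)  # keep 0.0 inside the represented range
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    quantized = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from int8 values."""
    return [(q - zero_point) * scale for q in quantized]


def weights_kib(num_params, bytes_per_param):
    """Storage needed for the weights alone, in KiB."""
    return num_params * bytes_per_param / 1024.0


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical weights
q, scale, zp = quantize_int8(weights)
print(q, dequantize(q, scale, zp))

# A hypothetical 250,000-parameter model: float32 versus int8 weights.
print(weights_kib(250_000, 4), "KiB as float32")
print(weights_kib(250_000, 1), "KiB as int8")
```

Storing each weight in one byte instead of four cuts the weight storage to a quarter, at the cost of a rounding error per weight of at most about one quantization step.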

“The system metric requirement will vary greatly depending on the use case being developed.”

The Interplay of the Four Metrics: A Delicate Balance

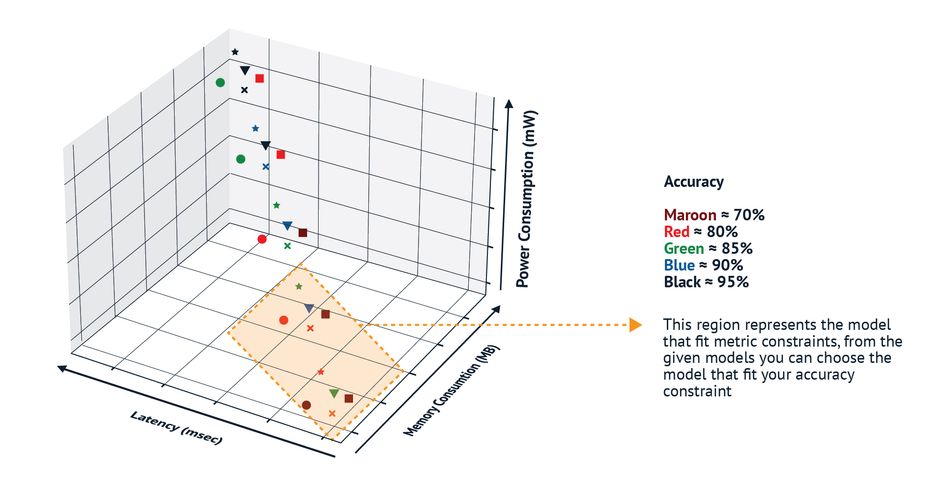

The four metrics are correlated: higher accuracy tends to come at the cost of a larger memory footprint, while memory, latency, and power consumption tend to rise and fall together, so improving one metric can degrade the others. This needs careful consideration when developing a TinyML system. A general rule is to first define the model accuracy that the use case requires and then compare the candidate models against the other three metrics. Figure 1 illustrates this with a dummy example of a variety of trained models.

The marker shapes represent different model architectures with different hyperparameters; accuracy tends to improve as the architecture grows, at the expense of the other three metrics. A typical region of interest, determined by the system-defined use case, is shown. Within that region, only one model reaches 90% accuracy. If higher accuracy is required, the entire system should be reconsidered to accommodate the increase in the other metrics.
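The selection rule described above can be sketched in a few lines: fix the accuracy the use case needs, discard every candidate that falls outside the memory, latency, and power budgets, and rank whatever remains. The candidate models, their numbers, the budget values, and the choice to rank by energy per inference are all hypothetical and serve only to illustrate the procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float    # fraction of correct predictions, 0.0 to 1.0
    memory_kb: float   # memory footprint of the model
    latency_ms: float  # time for one inference
    power_mw: float    # active power during inference


def select(candidates, min_accuracy, max_memory_kb, max_latency_ms, max_power_mw):
    """Return the candidates inside the region of interest,
    ordered by energy per inference (power * latency), lowest first."""
    feasible = [
        c for c in candidates
        if c.accuracy >= min_accuracy
        and c.memory_kb <= max_memory_kb
        and c.latency_ms <= max_latency_ms
        and c.power_mw <= max_power_mw
    ]
    return sorted(feasible, key=lambda c: c.power_mw * c.latency_ms)


# Dummy models: accuracy grows with size, and so do the other three metrics.
CANDIDATES = [
    Candidate("small", 0.82, 60, 5, 8),
    Candidate("medium", 0.90, 180, 18, 12),
    Candidate("large", 0.93, 480, 45, 20),
    Candidate("xlarge", 0.95, 1400, 130, 35),
]

budget = dict(max_memory_kb=512, max_latency_ms=50, max_power_mw=25)
print([c.name for c in select(CANDIDATES, min_accuracy=0.90, **budget)])
print([c.name for c in select(CANDIDATES, min_accuracy=0.95, **budget)])
```

With these dummy numbers, raising the accuracy requirement to 95% leaves no feasible model inside the budgets, which is the situation in which the system constraints themselves have to be revisited.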

Benchmarking TinyML Models

Benchmarks are necessary tools that set a reproducible standard for comparing different technologies, architectures, software, etc. In AI/ML, accuracy is the key metric for benchmarking different models. In embedded systems, common benchmarks include EEMBC’s CoreMark and ULPMark, which measure performance and power consumption, respectively. In the case of TinyML, the MLPerf Tiny benchmark from MLCommons has been gaining traction as the industry standard, where the four metrics discussed previously are measured. Because TinyML systems are heterogeneous, fairness is ensured by using four AI use cases with four different AI models, each of which has to achieve a certain level of accuracy to qualify for the benchmark. Renesas benchmarked two of its microcontrollers, the RA6M4 and RX65N, using TensorFlow Lite for Microcontrollers as the inference engine, and has published the results.
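Reproducibility starts with measuring the same way every time. As a rough illustration of the idea, the sketch below times a model on a development host by discarding a few warm-up runs and reporting the median and worst case over repeated runs. It is not the harness used by any official benchmark, and on a real microcontroller one would typically read a hardware timer or cycle counter instead; `measure_latency_ms` and the stand-in workload are hypothetical.

```python
import statistics
import time


def measure_latency_ms(run_inference, warmup=5, repeats=50):
    """Time a zero-argument callable that performs one inference.
    Warm-up runs are executed but not recorded, so one-off costs
    such as cache fills do not skew the result."""
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}


def fake_inference():
    """Stand-in workload; replace with a call into the real model."""
    return sum(i * i for i in range(10_000))


print(measure_latency_ms(fake_inference))
```

Reporting the median alongside the maximum separates typical latency from occasional outliers, which matters when a use case has a hard response-time limit.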

Conclusion

The emergence of TinyML underscores the potential for AI in low-power microcontrollers. The critical metrics of accuracy, power consumption, latency, and memory requirements provide a roadmap for evaluation and optimization. However, each metric interplays with others, and understanding their trade-offs is essential. Adapting these parameters to specific use cases is vital, and benchmarking models using industry standards aids in development. As TinyML advances, innovative model architectures, optimization techniques, and hardware advancements will drive its evolution, redefining the boundaries of embedded systems and edge computing.

This article is based on an e-magazine: Bringing Intelligence to the Edge by Mouser Electronics and Renesas Electronics Corporation. It has been substantially edited by the Wevolver team and Electrical Engineer Ravi Y Rao. It's the third article from Bringing Intelligence to the Edge Series. Future articles will introduce readers to some more trends and technologies shaping the future of Edge AI.

The introductory article unveils the "Bringing Intelligence to the Edge" series, exploring the transformative potential of AI at the Edge.

The first article examines the challenges and trade-offs of integrating AI into IoT devices, emphasizing the importance of balancing performance, ROI, feasibility, and data considerations for successful implementation.

The second article delves into the transformative role of Endpoint AI and embedded vision in tech applications, discussing its potential, challenges, and the advancements in processing data at the source.

The third article delves into the intricacies of TinyML, emphasizing its potential in edge computing and highlighting the four crucial metrics - accuracy, power consumption, latency, and memory requirements - that influence its development and optimization.

The fourth article delves into the realm of data science and AI-driven real-time analytics, showcasing how AI's precision and efficiency in processing big data in real-time are transforming industries by recognizing patterns and inconsistencies.

The fifth article delves into the integration of voice user interface technology into microcontroller units, emphasizing its transformative potential.

The sixth article delves into the profound impact of edge AI on system optimization, maintenance, and anomaly detection across diverse industries.

About the sponsor: Mouser Electronics

Mouser Electronics is a worldwide leading authorized distributor of semiconductors and electronic components for over 1,200 manufacturer brands. They specialize in the rapid introduction of new products and technologies for design engineers and buyers. Their extensive product offering includes semiconductors, interconnects, passives, and electromechanical components.