Entering the Age of AI in Engineering

And the Challenges of Meaningful Training Data

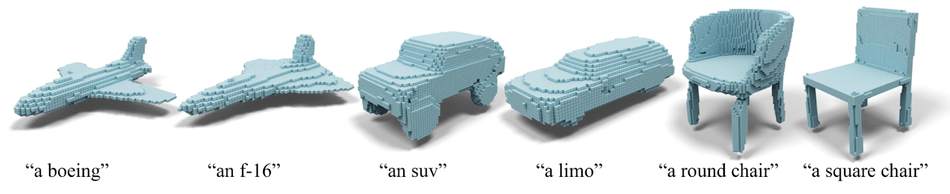

The rise of generative AI, as demonstrated by text-based applications like ChatGPT and Bard, as well as image generators like Midjourney and Stable Diffusion, has sparked a wave of anticipation in the engineering realm. The hope is that similar tools will emerge to assist in the 3D design space and help tackle complex engineering problems.

Emerging research on converting text to 2D, then to 3D point clouds or signed distance functions (SDFs), then to 3D meshes is fueling enthusiasm for shape generation possibilities. But the journey from creating something that merely 'looks' right to devising something that 'performs' to meet functional requirements is paved with many challenges. Designs that perform demand distinct training data, a very different approach to specifying the requirements that produce forms, and a different way of thinking about design.

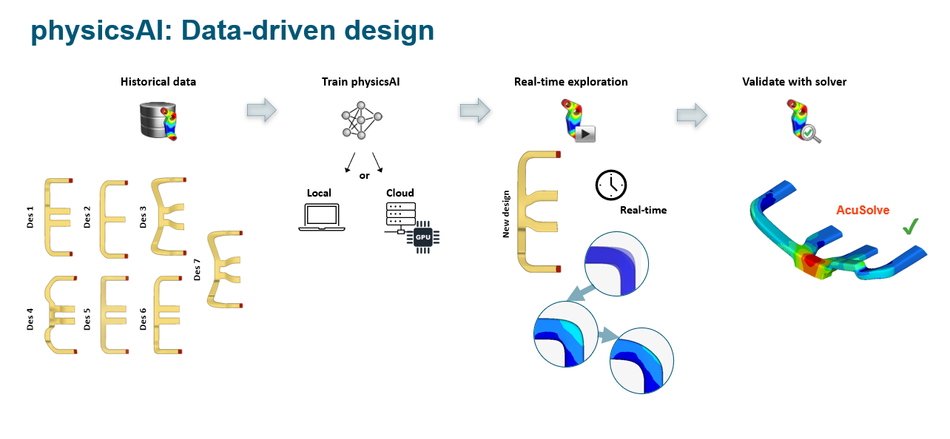

The engineering industry is beginning to see the first commercial AI-integrated software tools suitable for design and engineering. These include offerings from established software firms like Altair, with a rich history in 'simulation-driven design', and from startups like Monolith and PhysicsX, which are working to help engineers predict outcomes for high-performance problems while reducing reliance on simulation during design iteration (though not during validation).

Altair's designAI combines physics-based simulation-driven design and machine learning-based AI-driven design to create high-potential designs earlier in development cycles. Altair's physicsAI, meanwhile, leverages historical simulation data to deliver physics predictions in a fraction of the time traditional solvers take. PhysicsAI uses geometric deep learning to operate directly on meshes and CAD models, while RapidMiner, Altair’s data analytics and AI platform, enables users to develop data pipelines and machine learning models without needing in-house data science expertise.

Capturing and understanding design intent is crucial for developing machine learning models that can predict performance and optimize designs.

Jaideep Bangal - Director of Simulation Driven Design and Manufacturing, Altair

While AI's application in manufacturing for process development, optimization, and quality assurance is being quickly established, the design space still grapples with a lack of rich metadata for training ML algorithms. The use of simulation to synthesize training data, despite its computational expense, provides the prospect of exploring solutions beyond the realm of known possibilities.
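To make the idea of synthesizing training data with simulation concrete, here is a minimal sketch. Everything in it is an assumption made for illustration: the "solver" is the closed-form tip deflection of a rectangular cantilever beam standing in for an expensive finite element run, the surrogate is a simple log-linear regression rather than a neural network, and all function names and default values are hypothetical.

```python
import math
import random


def simulate_tip_deflection(height_m, force_n=1000.0, length_m=1.0,
                            width_m=0.05, youngs_pa=210e9):
    """Stand-in for an expensive solver (assumption): closed-form tip
    deflection of an end-loaded rectangular cantilever beam."""
    inertia = width_m * height_m ** 3 / 12.0  # second moment of area
    return force_n * length_m ** 3 / (3.0 * youngs_pa * inertia)


def synthesize_dataset(n_samples, seed=0):
    """Sample beam heights and label each one by running the 'simulation'."""
    rng = random.Random(seed)
    heights = [rng.uniform(0.02, 0.20) for _ in range(n_samples)]
    return [(h, simulate_tip_deflection(h)) for h in heights]


def fit_log_linear(samples):
    """Least-squares fit of log(deflection) = intercept + slope * log(height)."""
    xs = [math.log(h) for h, _ in samples]
    ys = [math.log(d) for _, d in samples]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def surrogate_predict(model, height_m):
    """Predict deflection from the fitted surrogate without calling the solver."""
    intercept, slope = model
    return math.exp(intercept + slope * math.log(height_m))


dataset = synthesize_dataset(200)
model = fit_log_linear(dataset)
prediction = surrogate_predict(model, 0.10)
```

Because the toy physics is an exact power law, the fitted slope recovers the cubic dependence on beam height. A real workflow would replace the formula with solver runs and the regression with a far more expressive model, which is where the computational expense comes from.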

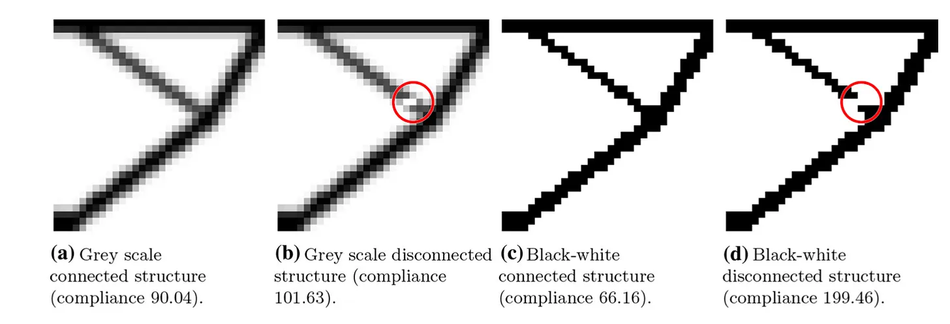

Evaluating generative models poses significant challenges, often necessitating a comparison of the distribution of generated designs with the training data. Such a comparison merely confirms whether the produced designs resemble the training data.

Including simulation in the training data and workflow offers the potential for a more objective form of synthesis and evaluation. It may help uncover designs that, despite differing from the training data, perform well.

Metrics for evaluating generative models are very tricky and often come down to comparing the distribution of generated designs with the training data. This only tells us if the generated design is like the training data.

But simulation allows us to evaluate more objectively, so we might be able to find designs that are unlike the training data but perform well, i.e. the network produces something that’s both novel and useful.

Karl D.D. Willis - Senior Research Manager, Autodesk Research AI Lab
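The contrast between similarity-based and simulation-based evaluation can be sketched as follows. This is an illustrative assumption, not a method from the researchers quoted: the distance to the nearest training design is used as a crude proxy for how unlike the training data a design is, `simulate_performance` is a hypothetical stand-in for a real solver, and the designs and thresholds are made-up values.

```python
import math


def nearest_training_distance(design, training_set):
    """Novelty proxy (assumption): Euclidean distance to the closest training design."""
    return min(math.dist(design, known) for known in training_set)


def simulate_performance(design):
    """Hypothetical stand-in for a solver: bending stiffness per unit mass
    of a rectangular section given as (width, height)."""
    width, height = design
    stiffness = width * height ** 3 / 12.0  # proportional to bending stiffness
    mass = width * height                   # proportional to mass per unit length
    return stiffness / mass


def evaluate(generated, training_set, novelty_threshold, performance_threshold):
    """Score each generated design on both novelty and simulated performance."""
    report = []
    for design in generated:
        novelty = nearest_training_distance(design, training_set)
        performance = simulate_performance(design)
        report.append({
            "design": design,
            "novelty": novelty,
            "performance": performance,
            "novel_and_useful": (novelty > novelty_threshold
                                 and performance > performance_threshold),
        })
    return report


# Illustrative (width, height) pairs in metres; not real data.
training_set = [(0.05, 0.10), (0.06, 0.10), (0.05, 0.12)]
generated = [(0.05, 0.11), (0.03, 0.20), (0.10, 0.04)]

report = evaluate(generated, training_set,
                  novelty_threshold=0.05, performance_threshold=0.002)
flags = [entry["novel_and_useful"] for entry in report]
```

A similarity metric alone would rank the first design highest because it sits closest to the training data. Only the simulated score separates the second design, which is unlike the training data and performs well, from the third, which is equally unfamiliar but performs poorly.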

Simulation, despite its promising potential in exploring novel solutions outside of the training data, can carry a hefty computational price tag.

Before the investment in establishing the dataset and training begins to pay dividends, a large number of optimization problems may need to be solved to provide solutions. Academic literature shows that, in the most favorable scenarios, this break-even number is in the thousands. In less favorable instances, it can reach hundreds of thousands, even for problems with a mere few thousand design variables.
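The break-even reasoning can be written as a short calculation. This is a sketch under stated assumptions: costs are expressed in compute hours, the parameter names are hypothetical, and the example figures are invented for illustration rather than taken from the literature.

```python
import math


def break_even_problems(dataset_cost_h, training_cost_h,
                        conventional_cost_h, ai_cost_h):
    """Number of optimization problems that must be solved before the upfront
    investment (dataset generation plus training) is recovered.

    Returns None when the AI-assisted route saves nothing per problem,
    because the investment is then never paid back.
    """
    saving_per_problem = conventional_cost_h - ai_cost_h
    if saving_per_problem <= 0:
        return None
    upfront = dataset_cost_h + training_cost_h
    return math.ceil(upfront / saving_per_problem)


# Illustrative figures only (assumptions, in compute hours).
n_break_even = break_even_problems(
    dataset_cost_h=5000.0,    # running the solver to label training samples
    training_cost_h=50.0,     # fitting the model
    conventional_cost_h=1.0,  # solving one optimization problem conventionally
    ai_cost_h=0.5,            # solving the same problem with AI assistance
)
```

The smaller the per-problem saving relative to the upfront cost, the larger the break-even count becomes, which is consistent with the wide range reported above.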

These approaches often lack generality, meaning their effectiveness is restricted to a narrow range of applications, boundary conditions, and other parameters. This limitation further complicates their implementation in real-world scenarios, as engineers must contend with a diverse array of conditions when designing and optimizing products.

Striking the right balance between leveraging simulation for exploring novel solutions and managing computational costs and constraints is crucial in the pursuit of AI-driven engineering advances. The development of more efficient and adaptable simulation techniques, alongside the intelligent integration of AI, will be key in overcoming these challenges and maximizing the potential of AI-assisted engineering solutions.

High resolution is required either to resolve local field properties like stress concentrations or to allow optimization of huge structures like airplane wings or bridge decks.

One finite element evaluation may take hours on supercomputers, making it infeasible to generate data enough for training an ANN.

Prof. Ole Sigmund - TopOpt Group, Technical University of Denmark

In the manufacturing domain, proprietary design, simulation, and manufacturing data is often considered the "secret sauce" of each company. This proprietary information is closely guarded, sometimes to the extent that it's not even shared internally.

Amassing a warehouse of meaningful data, both within and beyond these corporate "walled gardens," poses a formidable challenge. This protective stance towards data not only limits the potential for broad collaborative efforts, but also hampers the comprehensive training of machine learning algorithms, potentially stymieing the evolution of AI in engineering.

It underscores the need for new strategies and protocols that encourage data sharing while preserving intellectual property and competitive advantage.

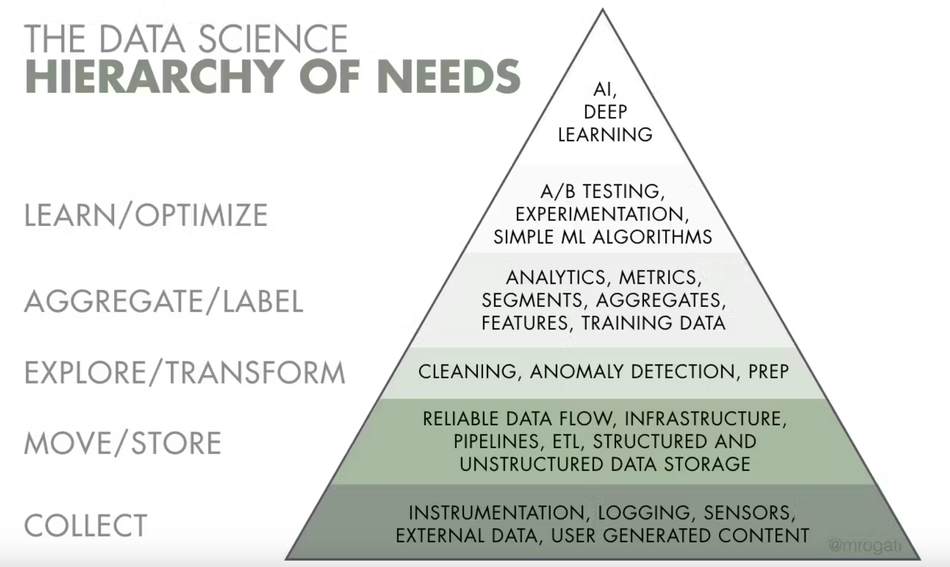

Most companies looking to integrate AI into their engineering workflows are still at the very earliest phase: data collection and distillation.

We all want to get to the top of the pyramid – deploying powerful AI models that revolutionize our businesses. However, many of us are still at the base of that pyramid, figuring out how to effectively log and clean data.

Chris McComb – Director, Human+AI Initiative, Carnegie Mellon University

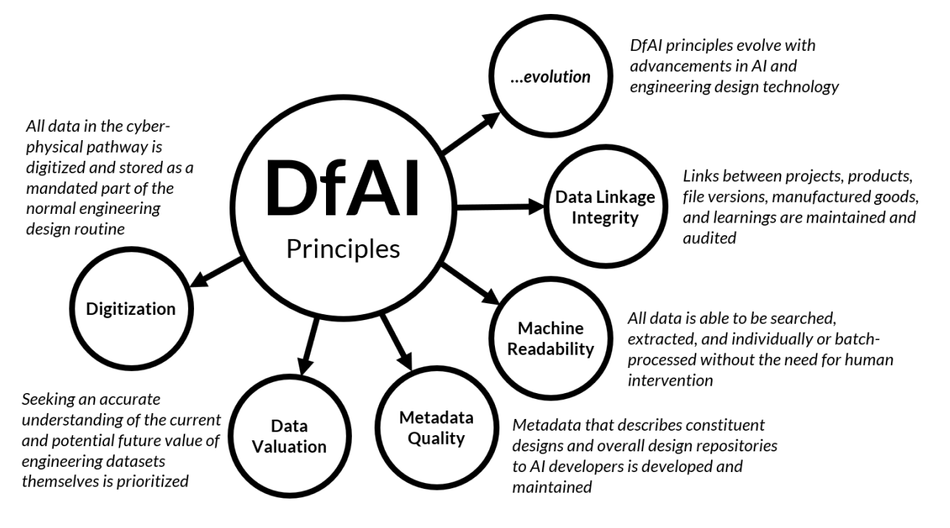

While many researchers are focusing on the task of integrating AI into engineering and addressing a multitude of data-related challenges, Chris McComb and his team at Carnegie Mellon University are taking a broader approach by examining not only the mechanical processes and individual approaches towards Design for Artificial Intelligence (DfAI), but also the dynamics of team-based collaboration.

Their research delves into the subtleties of how engineers interact with AI-enabled engineering software and, just as crucially, how they communicate with one another in that context.

By exploring both the technical and human aspects, their work aims to pave the way for more efficient and effective AI integration in the engineering process, fostering innovation and breakthroughs in the field.

One thing that I encourage people to keep in mind when working with AI is that we are not Teflon – just as we influence AI tools, the tools also influence us. Exactly how AI influences us depends on how we work with it, though.

Chris McComb – Director, Human+AI Initiative, Carnegie Mellon University

The utilization of AI as a tool prompts a shift in our focus, moving away from basic modeling operations and towards activities of higher value such as data distillation and problem framing.

By integrating AI as an equal collaborator within our teams, we foster a hybrid human/machine work environment that has the potential to outperform purely human teams.

However, while it might be tempting to believe that AI is an unwavering ally, it is important to note that it is not always beneficial. Much like any tool, AI's effectiveness is contingent on its appropriate application and use within the given context.

Chris and his team developed an AI Designer that surpassed its human counterparts in engineering performance.

However, mixed teams consisting of both human engineers and an AI designer didn't always fare as well as the individual AI or human entities.

This underscores the potential of AI to enhance design, while also revealing the risks of blindly trusting results from a black-box system.

As we embrace this new era, it's crucial to consider both the opportunities and challenges that integrating AI into our engineering practices presents.

Chris McComb, Karl D.D. Willis and Jaideep Bangal will be presenting alongside other experts in the field of computational design, engineering and AI at the CDFAM Computational Design (+DfAM) Symposium in NYC on June 14-15.