Why is Warehouse Automation So Important for AI Professionals?

The impact of artificial intelligence within warehouses will act as a crystal ball for AI and robotics in other industries

Photo by Ramon Cordeiro

Most robots used in factories today are powerful but blind. They are programmed to do specific tasks repeatedly with high precision and speed, but until now they have not been able to adapt to changes in their environment or to variation in the items they handle. Robot arms often cannot grasp an object if it is moved a few inches, let alone pick or sort millions of different products in a warehouse.

As a result, system integrators design shaker tables, feeders, conveyor belts, etc., so robots can be offered the same parts in the right orientation. Larger industrial robots need cages so they don’t accidentally hurt nearby people. Unlike human hands, which can perform tens of thousands of different tasks, robot arms can only be programmed for single tasks, and they need the proper end effectors.

Additionally, most robots cannot sense, unless you equip them with force or vision sensors. They don’t know if tasks are performed properly, because they cannot see or feel.

Traditional computer vision requires pre-registration of all objects, i.e., scanning and building computer-aided design (CAD) models for all items beforehand with expensive 3D cameras. The process is time-consuming and inflexible. Even if there were a box with exactly the same shape but a different size, a robot couldn’t automatically recognize the difference and figure out how to grasp the new item.

In addition, translucent packaging, reflective surfaces, and deformable objects (such as food and clothing) make robotic vision an even more difficult problem to solve. The additional non-robot hardware and integration end up costing four times more than a robot arm, which costs anywhere from $10,000 to $100,000.

Adding intelligence to robots

To solve these issues, deep reinforcement learning (DRL) is unlocking the potential of robots, giving them the ability to recognize and react to their surroundings and to handle product variations. Robots can now learn from experience using off-the-shelf cameras and deep neural networks.

With enough data and practice, robots can now teach themselves new abilities, much as AI systems learned to identify images and win video games. With every grasp and trial, a robot gets smarter and more adept at mastering the task. Furthermore, cloud-connected robots can share what they learn with one another. This is a tremendous shift, making robotics solutions more dexterous, flexible, and scalable.

Deep learning (DL) is widely used for tasks such as image classification. Deep neural networks learn representations from data rather than relying on task-specific algorithms.

Reinforcement learning (RL) is inspired by behavioral psychology. It learns control policies by trial-and-error through reward or punishment.

Deep Reinforcement Learning (DRL) combines these two approaches. It is currently suited for:

· Simple or simulated tasks

· Fault-tolerant tasks

· Just enough variation to make explicit modeling difficult

· Easy-to-define goals (reward functions)

· Semi-structured environments
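The trial-and-error loop behind these criteria can be illustrated with a deliberately small sketch. It is not DRL: there is no neural network and no camera input, only a table of estimated success rates for three hypothetical grasp strategies, learned with an epsilon-greedy rule. The strategy success rates, the function name, and the parameters are all assumptions made for illustration. The reward is the "easy-to-define goal" from the list above: 1 if the grasp succeeds, 0 if it fails.

```python
import random


def learn_grasp_policy(success_prob, trials=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over a fixed set of grasp strategies.

    success_prob holds the (hypothetical) true success rate of each strategy;
    the learner never reads it directly, it only observes rewards.
    Returns (estimated success rate per strategy, attempts per strategy).
    """
    rng = random.Random(seed)
    n = len(success_prob)
    values = [0.0] * n   # running estimate of each strategy's success rate
    counts = [0] * n     # how often each strategy has been tried
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.randrange(n)                         # explore
        else:
            action = max(range(n), key=lambda a: values[a])   # exploit
        # Reward function: 1 for a successful grasp, 0 for a drop.
        reward = 1.0 if rng.random() < success_prob[action] else 0.0
        counts[action] += 1
        # Incremental mean update of the estimate for the chosen strategy.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical success rates for three grasp strategies (illustrative only).
GRASP_SUCCESS = [0.35, 0.60, 0.85]
values, counts = learn_grasp_policy(GRASP_SUCCESS)
best = max(range(len(values)), key=lambda a: values[a])
print("estimated success rates:", [round(v, 2) for v in values])
print("attempts per strategy:", counts)
print("preferred strategy:", best)
```

A dropped item costs only one failed attempt here, which is why fault tolerance matters: the learner needs failures to find out which strategy works best.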

Warehouse as a testing ground for AI

Automation in warehouses is widely considered the “low-hanging fruit” for these technologies because tasks tend to be similar across warehouses, and the environment is more structured. For example, order picking represents more than 40% of operational costs across most warehouses. While tasks are repetitive, they involve some variation with products and packaging design, making it impossible to hard-program robots in advance.

Furthermore, labor costs account for up to 70% of a warehouse’s total budget. Pressured by e-commerce companies such as Amazon, retailers are looking to automation to address labor shortages and reduce costs. Customer demand, price competition, and fast delivery requirements are driving a strong business case for warehouses to be the first adopters of AI-enabled robots.

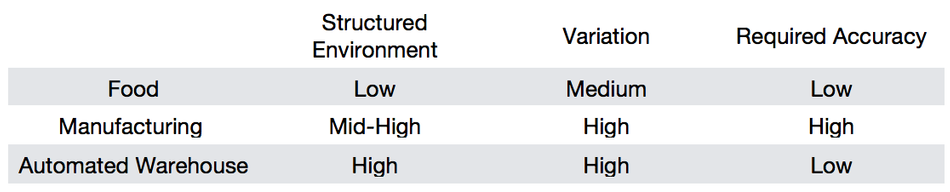

Chart: Why do we see AI-enabled robots in the warehouse first?

Most tasks in a warehouse are fault tolerant, unlike tasks on a production floor or in the automotive industry. If a robot accidentally drops an object in a warehouse, it can try again as long as it achieves the required speed and success rates without damaging the goods.
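The retry argument can be made concrete with a small calculation. This is a sketch under simplifying assumptions that do not come from the article: every attempt takes the same time, attempts succeed independently with the same probability, and a failed attempt does not damage the item. Under those assumptions the expected number of attempts per successful pick is 1/p, and throughput follows directly. The function names and example figures are hypothetical.

```python
def expected_attempts(success_rate: float) -> float:
    """Expected attempts per successful pick, assuming independent retries."""
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return 1.0 / success_rate


def picks_per_hour(success_rate: float, seconds_per_attempt: float) -> float:
    """Successful picks per hour when failed attempts are simply retried."""
    seconds_per_pick = seconds_per_attempt * expected_attempts(success_rate)
    return 3600.0 / seconds_per_pick


def meets_target(success_rate: float, seconds_per_attempt: float,
                 target_picks_per_hour: float) -> bool:
    """True if retries still leave enough throughput to hit the target."""
    return picks_per_hour(success_rate, seconds_per_attempt) >= target_picks_per_hour


# Illustrative numbers only: 80% grasp success, 4 seconds per attempt.
print(round(picks_per_hour(0.8, 4.0)), "picks per hour")
print("meets a 600 picks/hour target:", meets_target(0.8, 4.0, 600))
```

The same arithmetic shows why this does not carry over to tasks that are not fault tolerant: if a failure scraps a part or stops a line, the cost of a miss is no longer just one extra attempt.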

DRL is particularly suited for simple, fault-tolerant tasks (actions a human can perform within a few seconds) that involve enough variation in semi-structured environments. As the technology improves in environments like warehouses, it can then be applied in other industries, such as manufacturing, where tasks are more complicated and more uncertainties exist. Thus, the automated warehouse acts as an incubator, and the knowledge gained there is likely to spill over into many other market segments.

Piece-picking is the holy grail

Warehouse automation has been around for decades, in the form of automated storage and retrieval systems and mobile robots, like those from Kiva (now Amazon Robotics), that move items around.

But most of the pick-and-pack operations within a warehouse are still performed by humans, with labor costs accounting for 50 to 70% of the overall warehouse budget. Worker shortages have driven up wages by 6 to 8% every year, while robot costs have fallen considerably since the 1990s.
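To see what annual wage growth of 6 to 8% adds up to, the compounding can be worked out directly. This sketch assumes the growth rate stays constant from year to year, which the article does not claim. The function names are illustrative.

```python
import math


def wage_multiple(annual_growth: float, years: int) -> float:
    """Factor by which wages grow after compounding for the given years."""
    return (1.0 + annual_growth) ** years


def years_to_double(annual_growth: float) -> float:
    """Years of constant compound growth needed for wages to double."""
    return math.log(2.0) / math.log(1.0 + annual_growth)


for growth in (0.06, 0.08):
    print(f"{growth:.0%} a year: x{wage_multiple(growth, 10):.2f} after 10 years, "
          f"doubles in {years_to_double(growth):.1f} years")
```

At these rates, labor costs roughly double in 9 to 12 years, which is the other side of the falling robot costs mentioned above.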

Piece-picking has long been the holy grail of robotics. Year after year, incumbents such as Amazon and KUKA host robotics challenges for startups and academic teams to create machines that can identify, pick up, and stow goods. The emergence of deep learning allows robots to recognize, pick, and place hundreds or even thousands of items.

But the technology is not yet perfect. It’s still challenging for a machine to recognize tens of millions of objects and to manipulate deformable objects or items in transparent packaging. However, our interviews with Locus Robotics and Osaro indicate that many industry experts expect the technology to mature, and that within five years we will have robot vision systems that can recognize almost everything in a warehouse. If that happens, it will affect not just warehouses, but retail, delivery, and many other applications that we haven’t yet imagined.

Pushing towards standard models

Technology improvements in warehouse automation and beyond will also push the mature, but fragmented, robotics industry to become more standardized and modular. Given the nature of narrow AI, most Silicon Valley startups in the AI-enabled robotics industry choose to focus on specific vertical applications and collect proprietary data as a defensive advantage.

Piece-picking by robots is the best example. As the technology improves, we will see warehouse piece-picking startups enter manufacturing, food, and agriculture markets. Moving away from manually programming a robot, and instead having it learn and act autonomously on specific simple tasks and share what it learns, will become more important in the future.

However, it’s not easy to find scalable and technically feasible business cases like piece picking that customers are willing to pay for. For example, assembly tasks vary from factory to factory, making it difficult to get sufficient training data or scale a solution across different customers.

Given current technology constraints, there’s still a trade-off between precision and flexibility. Deep learning allows robots to handle variation, but these AI-enabled robots still cannot achieve the same precision and accuracy as traditional robots, at least not today.

Some startups are providing modules or technology stacks for other players in the ecosystem to mitigate the complexity and scalability issues mentioned above. New areas where startups are likely to enter and disrupt include teleoperation modules, controllers that allow for safer human-robot collaboration, human-centered AI protocols, and sensors that can quantify human behavior.

Eventually, we may see unified operating systems for AI-enabled robots similar to Windows or Android in the consumer electronics space, or standard user interfaces that will fundamentally transform the fragmented robotics industry and further accelerate its disruption.

Standard modules and interfaces will bring down the cost of entering the market and shorten the time to market. As DRL and robotics technology improve, we will see a boom across industries including agriculture, food processing, home, and even surgical robotics.

How technology will transform the industry landscape

Led by industry leaders like Amazon and Ocado, a British online grocery store, retailers see efficient and cost-effective supply chains as a key to success and are incentivized to automate their warehouses for lower cost, lower management complexity, and higher scalability. While Amazon keeps its Kiva mobile robots and automated warehouse solutions for in-house use only, Ocado launched its fulfillment platform to help other companies store, pick, and transport groceries. But most retailers still rely on material-handling companies and system integrators to build and maintain an automated warehouse.

Incumbent material-handling companies tend to exploit the current technology, focusing on high-revenue-potential retrofit projects. This gives startups an opportunity to enter from the lower-end markets, where incumbents are less interested and thus unlikely to respond immediately. Dozens of startups offer scalable and affordable solutions that address customer needs for robots that can handle high-variability, low-precision tasks, such as piece picking in warehouses.

On the other hand, large robotics companies have long focused on developing high-speed and more-precise solutions to serve their main customers in the automotive and manufacturing industries. The pursuit of absolute precision is contrary to the process of deep learning, where results improve over time through trial and error.

Some robotics companies saw an opportunity to enter new segments that require robots to handle high-variability, but lower-precision tasks, and began to build in-house AI teams and explore partnerships with startups developing deep neural network solutions for robots.

There are currently more than 20 warehouse piece-picking robot startups worldwide, including Osaro and RightHand Robotics. Most of these startups (between seed round and Series C funding) are working on pilot projects with incumbent material-handling, retail, and robotics companies.

Software and services will also capture more value, while premiums on hardware will decrease. With this power shift, as well as potential mergers and acquisitions, the landscape will change significantly over the next few years.

The future of AI-enabled robotics

Opinions vary on the effect that AI and DRL will have on the robotics industry. One scenario is that the robotics industry will consolidate because of the AI network effect: a model’s results improve as the amount of training data it is given increases. The idea is that whoever owns the most proprietary data will win the competition.

However, new research in areas such as transfer learning, meta-learning, and zero-shot learning makes it possible to learn from fewer and fewer examples and to generalize what is learned across different fields. Cloud-based AI and robotics will also help accelerate learning and diffusion.

As these technologies evolve, the landscape may face yet another significant change. It all starts in the warehouse!