Tactile sensors enable robots to carry unsecured loads

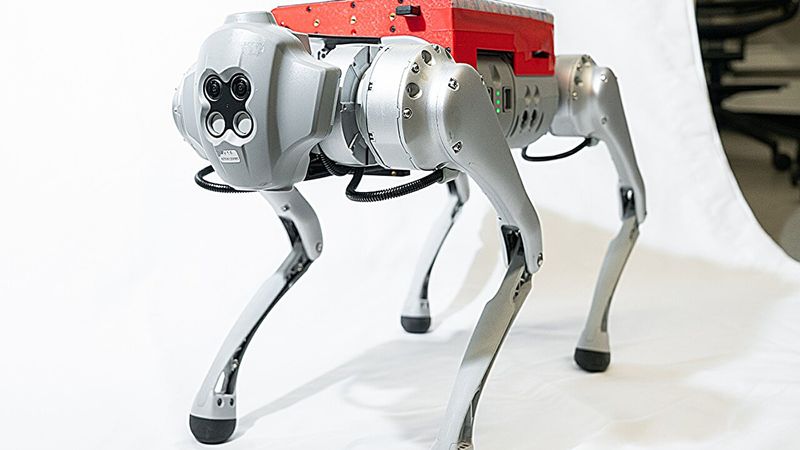

A new quadrupedal system with tactile sensing, LocoTouch, enables robot dogs to transport unsecured cylindrical objects on their backs for more than 60 meters while navigating around cones and over obstacles.

This article was first published on engineering.cmu.edu.

If you’ve ever moved into a new home, you know the challenge of packing a moving truck: it’s like solving a giant, three-dimensional puzzle. Everything needs to fit just right, and nothing can be left loose or unbalanced, or it risks shifting and breaking in transit. For humans, balancing objects, whether it’s a tray of food or a stack of moving boxes, comes naturally thanks to the coordination between our muscular and vestibular systems. But for robots, maintaining balance while carrying loads is far more complex. They must constantly track both the position of the object and their own body to make real-time adjustments to stay upright.

To overcome this challenge in robotics, researchers in the Department of Mechanical Engineering at Carnegie Mellon University have developed a tactile sensor that enables a four-legged robot to carry unsecured cylindrical objects on its back for long distances.

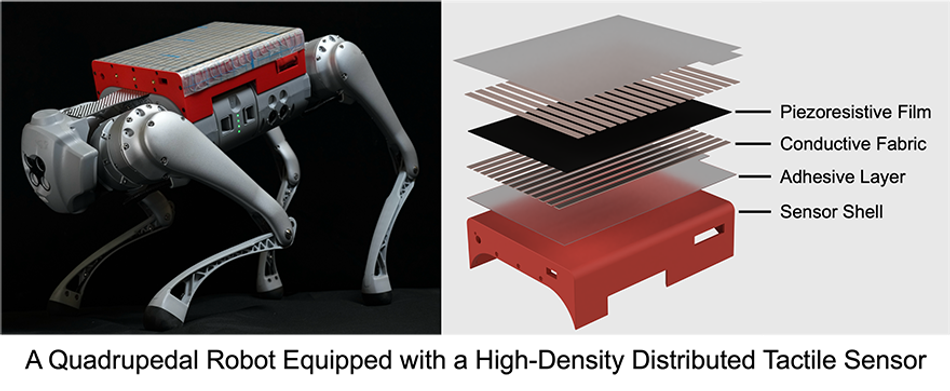

Historically, quadrupedal robots needed to rely on boxes to hold objects for transport, which limited the variety of objects that could be moved. With LocoTouch, a high-density distributed tactile sensor array that covers the entire back of the robot, the robot receives feedback about the pose of the object on its back and adjusts its own movement to keep the object onboard.

“The tactile sensor consists of a piezoresistive film, sandwiched between conductive electrodes made of conductive fabric,” said Changyi Lin, Ph.D. candidate in the Safe AI Lab. “Each sensing unit is housed at the intersection of conductive electrodes, so when the object moves and consequently deforms the piezoresistive film, the change in resistance is detected by the intersecting electrodes.”
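The article does not say how the grid of readings is turned into an object pose, so the following is only an illustrative sketch and not the team's method. It assumes the sensor readings arrive as a 2D grid of non-negative pressure values (one per electrode intersection) and estimates where an object rests and how it is oriented using pressure-weighted image moments. The function name `contact_pose`, the `threshold` parameter, and the grid sizes are all hypothetical.

```python
import math


def contact_pose(pressure, threshold=0.0):
    """Estimate contact centroid and orientation from a grid of taxel readings.

    pressure: list of rows, each a list of non-negative readings
              (hypothetical units; one value per electrode intersection).
    Returns (row_centroid, col_centroid, angle) or None if no reading
    exceeds the threshold. The angle is in radians, measured from the
    column axis toward the row axis.
    """
    total = sum_r = sum_c = 0.0
    for r, row in enumerate(pressure):
        for c, p in enumerate(row):
            if p > threshold:
                total += p
                sum_r += p * r
                sum_c += p * c
    if total == 0.0:
        return None  # nothing is touching the sensor

    # Pressure-weighted centroid of the contact patch.
    r0, c0 = sum_r / total, sum_c / total

    # Second central moments describe how the contact patch is spread out.
    m_rr = m_cc = m_rc = 0.0
    for r, row in enumerate(pressure):
        for c, p in enumerate(row):
            if p > threshold:
                m_rr += p * (r - r0) ** 2
                m_cc += p * (c - c0) ** 2
                m_rc += p * (r - r0) * (c - c0)

    # Principal-axis orientation from the standard image-moment formula.
    angle = 0.5 * math.atan2(2.0 * m_rc, m_cc - m_rr)
    return r0, c0, angle


# Hypothetical 4x4 patch with a line of contact along the diagonal.
grid = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
pose = contact_pose(grid)
```

A cylinder lying on the sensor would press along a roughly line-shaped patch, so the centroid indicates where it sits and the angle indicates which way it points. A real controller would likely need calibration, filtering, and tracking over time, none of which is shown here.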

Using reinforcement learning across more than 4,000 digital twins of the robot dog, the team trained the robot to adjust for nearly any movement an object on its back might make. The skills learned in simulation transferred to the real world with no need for fine-tuning. In the lab, the robot was able to circumvent cones, walk over obstacles, and adjust to external intervention (a person bumping the object around), all while transporting objects of varying shapes and sizes to a finish line more than 60 meters away.

“Robots should work for humans, so they need to be able to interact with our environment and perceive our world the way we do. This is the first time tactile sensing has been deployed in quadrupedal robots, but it won’t stop there,” said Ding Zhao, associate professor of mechanical engineering. “With this feedback loop, robots will be capable of more advanced tasks. We are working on scaling the sensors so that they can cover an entire robot, next.”

The team says that this technology brings us one step closer to home-helper robots. Beyond that, they plan to use it outdoors to carry sensors to hard-to-reach spots to monitor landslides. The technology can also transfer to hospitals, manufacturing floors, and who knows? Maybe one day it will be installed in the bed of a truck to make moving just a little bit easier.