Revolution of Endpoint AI in Embedded Vision Applications

Article 2 of Bringing Intelligence to the Edge Series: Advancements in AI and embedded vision technologies are revolutionizing many industries by enabling real-time decision-making, enhancing security, and facilitating automation across a wide range of applications.

This is the second article in a 6-part series featuring articles on "Bringing Intelligence to the Edge". The series looks at the transformative power of AI in embedded systems, with special emphasis on how advancements in AI, embedded vision, and microcontroller units are shaping the way we interact with technology in a myriad of applications. This series is sponsored by Mouser Electronics. Through their sponsorship, Mouser Electronics shares its passion and support for engineering advancements that enable a smarter, cleaner, safer manufacturing future.

Endpoint AI is a new frontier in the space of artificial intelligence (AI) that takes AI to the edge. It is a revolutionary way of managing information, accumulating relevant data, and making decisions locally on a device. Endpoint AI employs intelligent functionality at the edge of the network; in other words, it transforms the Internet of Things (IoT) devices that are used to compute data into smarter tools embedded with AI features. This in turn improves real-time decision-making capabilities and functionalities.

The goal is to bring machine-learning-based, intelligent decision-making physically closer to the source of the data. In this context, embedded vision shifts to the endpoint. Embedded vision incorporates more than breaking down images or videos into pixels—it is the means to understand pixels, make sense of what is inside, and support making a smart decision based on specific events that transpire. There have been massive endeavors at the research and industry levels to develop and improve AI technologies and algorithms.

In this article, we concentrate on Endpoint AI and its transformative role in embedded vision applications. We underscore its pivotal function in optimizing on-device decision-making and data management. We also evaluate how this evolution allows for refined interpretation of visual pixel data, assisting real-time intelligent decision-making.

What Is Embedded Vision?

Embedded computer vision is a technology that gives machines a sense of sight, enabling them to explore their environment with the support of machine-learning and deep-learning algorithms. Numerous applications across several industries rely on computer vision, which has made it an integral part of many technological processes. More precisely, computer vision is a field of AI that enables machines to extract meaningful information from digital multimedia sources and to take actions or make recommendations based on that information. Computer vision is, to some extent, akin to the human sense of sight.

However, the two differ in several respects. Human sight has an exceptional ability to understand a wide variety of things from what it sees. Computer vision, on the other hand, recognizes only what it has been trained on and designed to do, and even then with some error rate. AI in embedded vision trains machines to perform their intended functions with minimal processing time, and it has an edge over human sight in analyzing hundreds of thousands of images in a much shorter time.

Embedded vision is one of the leading technologies, with embedded AI used in smart endpoint applications across a wide range of consumer and industrial settings. Value-added use cases include counting products or analyzing their quality on a factory line, keeping a tally of people in a crowd, identifying objects, and analyzing the contents of a specific area in the environment.

Embedded vision applications that send data away from the endpoint for processing may face performance challenges. The data flow from the vision sensing device to the cloud for analysis and processing can be very large and may exceed the available network bandwidth. For instance, a 1920×1080 px camera operating at 30 frames per second (FPS) may generate about 190MB/s of uncompressed data. In addition to raising privacy concerns, this volume of data adds latency during the round trip from the edge to the cloud and back to the endpoint. These limitations could hinder the use of embedded vision technologies in real-time applications.
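The roughly 190MB/s figure can be reproduced with simple arithmetic. The sketch below assumes uncompressed 24-bit color (3 bytes per pixel), which the article does not state, and compares the result with a hypothetical 100 Mbit/s uplink chosen only for illustration. The function names are illustrative as well.

```python
def raw_video_rate(width, height, fps, bytes_per_pixel=3):
    """Uncompressed video data rate in bytes per second.

    bytes_per_pixel=3 is an assumption (24-bit RGB, no compression).
    """
    return width * height * bytes_per_pixel * fps


def fits_link(rate_bytes_per_s, link_bits_per_s):
    """True if the stream fits within the given link capacity."""
    return rate_bytes_per_s * 8 <= link_bits_per_s


rate = raw_video_rate(1920, 1080, 30)
print(f"{rate / 1e6:.1f} MB/s")        # 186.6 MB/s, i.e. roughly 190MB/s
print(f"{rate * 8 / 1e9:.2f} Gbit/s")  # 1.49 Gbit/s

# Hypothetical 100 Mbit/s uplink, chosen only for illustration.
print(fits_link(rate, 100_000_000))    # False
```

Under these assumptions a single raw stream is about 15 times larger than the example uplink can carry, which is why the data is either compressed or processed on the device.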

IoT security is also a concern in the adoption and growth of embedded vision applications in every segment. In general, all IoT devices must be secured. A critical concern in the use of smart vision devices is the possible misuse of sensitive images and videos. Unauthorized access to smart cameras, for example, is not only a breach of privacy but could also pave the way for more harmful outcomes.

Vision AI at the Endpoint

As we continue to explore the world of AI, we find ourselves at the intersection of AI and embedded vision, creating an exciting domain known as Vision AI. This term refers to the deployment of AI techniques in visual applications, leading to smart, efficient, and real-time image and video processing. An important aspect of Vision AI is its application at the edge of the network - the "endpoint" - where data is processed locally on the device itself. This approach is paving the way for faster, more efficient, and more secure operations in numerous sectors.

Here are some key facts about Vision AI:

Endpoint AI can enable image processing to infer complex insights from a large number of captured images.

AI applies machine-learning and deep-learning capabilities within smart imaging devices to check inputs against a large number of previously known cases.

For optimum performance, embedded vision requires AI algorithms to run on the endpoint devices rather than transmitting data to the cloud. The data is captured by the image recognition device, then processed and analyzed on that same device.

In applications where power consumption at the endpoint is limited, microcontrollers (MCUs) or microprocessors (MPUs) need to be more efficient to take on the high volumes of multiply-accumulate (MAC) operations that are required for AI processing.
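To give a sense of what "high volumes of MAC operations" means, here is a minimal sketch that shows a single multiply-accumulate loop and counts the MACs in one convolution layer. The layer dimensions are hypothetical and are not taken from any Renesas device or model; the count uses the standard formula for a dense 2D convolution.

```python
def dot_mac(weights, inputs):
    """Dot product written as explicit multiply-accumulate steps."""
    acc = 0
    for w, x in zip(weights, inputs):
        acc += w * x  # one MAC: multiply, then add into the accumulator
    return acc


def conv2d_macs(out_h, out_w, out_ch, in_ch, k_h, k_w):
    """Number of MACs in one dense 2D convolution layer."""
    return out_h * out_w * out_ch * in_ch * k_h * k_w


print(dot_mac([1, 2, 3], [4, 5, 6]))  # 32

# Hypothetical layer: 96x96 output, 3 input channels,
# 16 output channels, 3x3 kernel.
macs = conv2d_macs(96, 96, 16, 3, 3, 3)
print(macs)       # 3981312 MACs per frame
print(macs * 30)  # 119439360 MACs per second at 30 FPS
```

Even this small single layer needs on the order of a hundred million MACs per second at 30 FPS, and a full network stacks many such layers.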

Deployment of AI Vision Applications

There are countless real-world use cases for deploying AI in vision applications. Here are some examples where Renesas Electronics can provide comprehensive MCU- and MPU-based solutions, including all the necessary software and tools to enable quick development.

Smart Access Control

Security access control systems are becoming more valuable with the addition of voice and facial recognition features. Real-time recognition requires embedded systems with very high computational capabilities and on-chip hardware acceleration. To meet this challenge, Renesas offers a choice of MCUs and MPUs that combine very high computational power with key features critical to high-performance facial and voice recognition systems, such as built-in H.265 hardware decoding, 2D/3D graphics acceleration, and error correction code (ECC) on internal and external memory to eliminate soft errors and allow for high-speed video processing.

Industrial Control

Embedded vision has a major impact on industrial control, enhancing applications such as product safety, automation, and product sorting. AI techniques can perform multiple operations in the production process (such as packaging and distribution), helping to ensure quality and safety at every stage of production. Safety is especially needed in areas such as critical infrastructure, warehouses, production plants, and buildings that require a high level of human resources.

Transportation

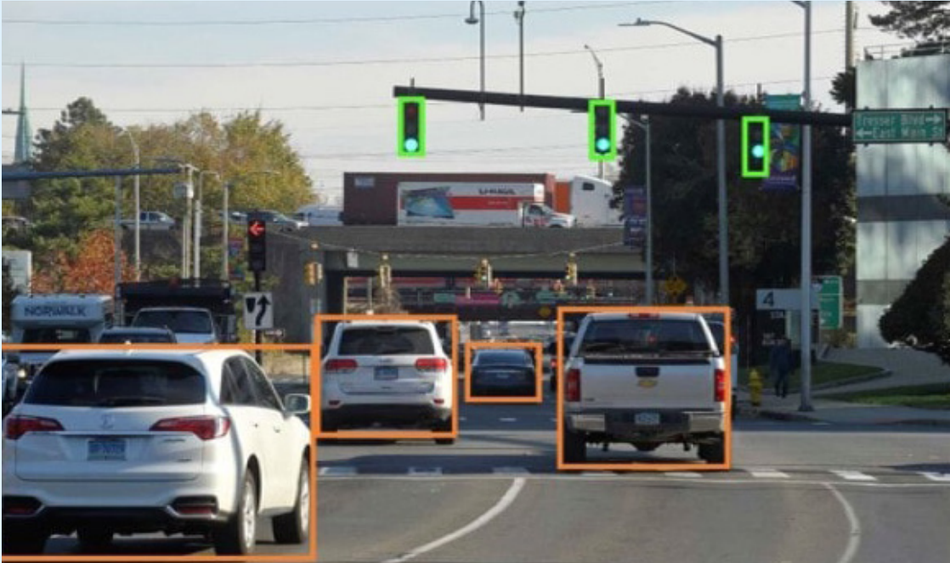

Computer vision offers many ways to improve transportation services. For example, in self-driving cars, computer vision is used to detect and classify objects on the road. It is also used to create 3D maps and estimate motion in the surroundings. Self-driving cars gather information from the environment using cameras and sensors, then interpret and analyze that data to choose the most suitable response, using vision techniques such as pattern recognition, feature extraction, and object tracking (Figure 1).

In general, embedded vision can serve many purposes once it has been customized and trained on datasets from the relevant domain. Functionalities include monitoring a physical area, recognizing intrusions, detecting crowd density, and counting people, objects, or animals. They also include identifying people, finding cars based on license plate numbers, detecting motion, and analyzing human behavior in different situations.

Case Study: Agricultural Plant Disease Detection

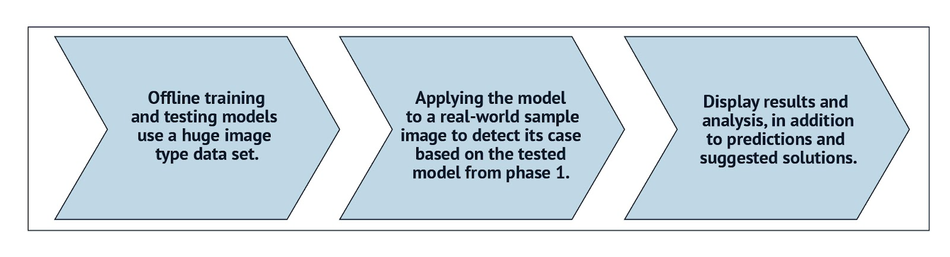

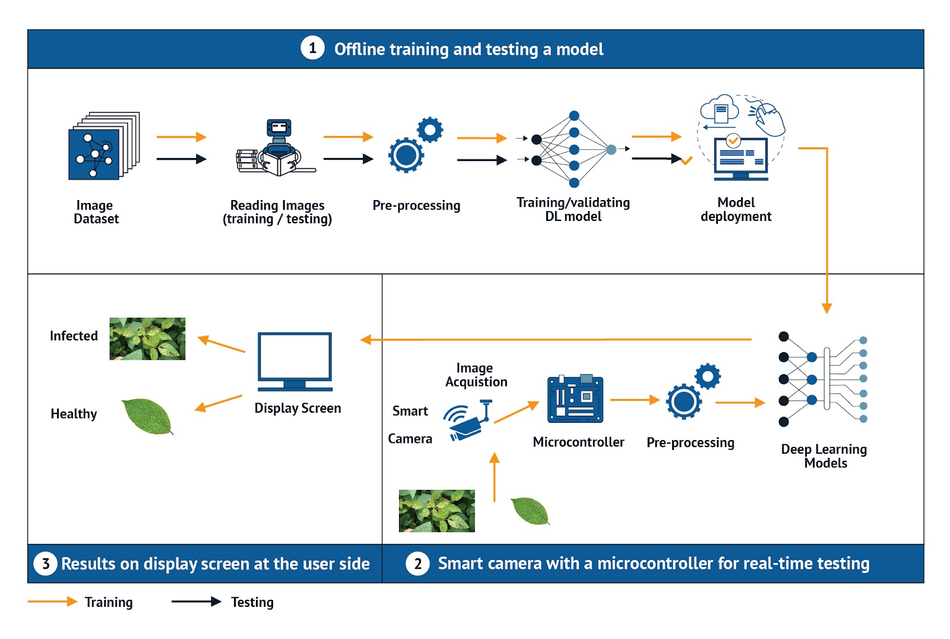

Vision AI and deep learning may be employed to detect various anomalies, such as plant disease. Deep learning algorithms, one family of AI techniques, are widely used for this purpose. According to research, computer vision gives more accurate, faster, and lower-cost results than previous methods, which are costly, slow, and labor-intensive. The process used in this case study can be applied to other detection tasks. There are three main steps for using deep learning in computer/machine vision (Figure 2).

Step one is performed on normal computers in the lab, whereas step two is deployed on a microcontroller at the endpoint, which can be on the farm. Results in step three are displayed on the screen on the user side. Figure 3 shows the process in general.
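The split between lab, endpoint, and user side can be sketched in a few lines. The example assumes that step one is training, step two is on-device inference, and step three is displaying the result; the figures may label the steps differently. A toy nearest-centroid classifier stands in for the deep learning model, and the two-value feature vectors and label names are hypothetical.

```python
# Toy stand-in for the three stages; nearest-centroid replaces the deep network.

def train(samples):
    """Stage 1 (lab): compute one mean feature vector per label."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def infer(model, features):
    """Stage 2 (endpoint): pick the label whose centroid is closest."""
    def dist2(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(model, key=lambda label: dist2(model[label]))


def report(label):
    """Stage 3 (user side): format the result for display."""
    return f"Leaf status: {label}"


# Hypothetical features extracted from leaf images.
training_data = [
    ([0.9, 0.1], "healthy"),
    ([0.8, 0.2], "healthy"),
    ([0.3, 0.7], "diseased"),
    ([0.2, 0.8], "diseased"),
]
model = train(training_data)
print(report(infer(model, [0.25, 0.75])))  # Leaf status: diseased
```

Only `infer` and the trained `model` need to live on the microcontroller in this arrangement; the training code and data stay in the lab.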

Vision AI Gateway Solution

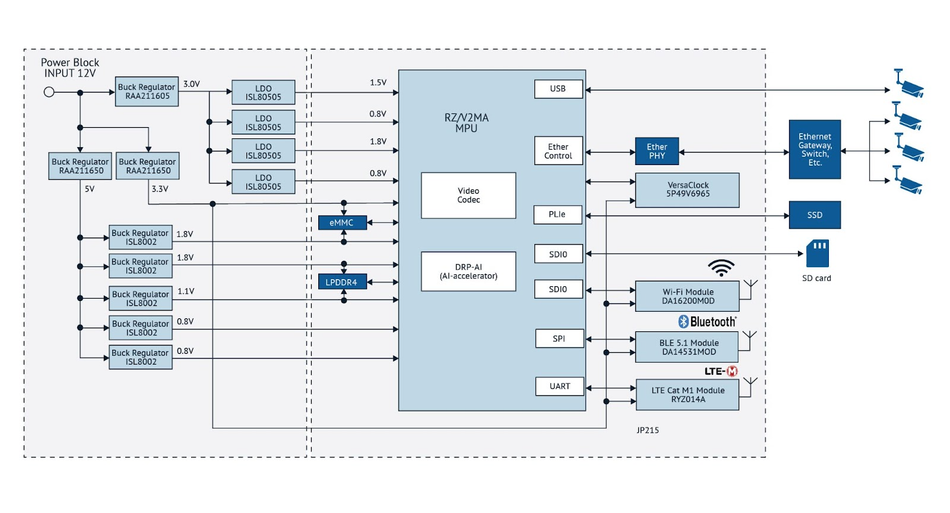

Renesas Electronics’ Vision AI Gateway solution efficiently handles vision information from multiple cameras and performs high-speed AI processing. It includes a Renesas RZ/V2MA AI-MPU with rich peripheral functions and an optimized power supply system.

Renesas Electronics RZ/V2L High Precision Entry-Level AI MPUs are general-purpose microprocessors equipped with Renesas' AI-dedicated accelerator (DRP-AI) for vision, a 1.2GHz dual-core Arm® Cortex®-A55 CPU, 3D graphics, and a video codec engine. The DRP-AI accelerator provides both real-time AI inference and image processing functions, including camera-support capabilities such as color correction and noise reduction. This enables users to implement AI-based vision applications without requiring an external image signal processor (ISP).

The solution realizes high performance and low power consumption at the same time. It delivers better value for gateway applications by integrating edge AI capability.

System Benefits:

Fast vision AI processing via decoding of video streams (H.264 or H.265) and handling multiple AI inferences with minimum switching overhead

Support for high-speed vision gateway functions over 100/1000Mbps Ethernet and a fast USB 3.1 Gen 1 (5Gbps) connection

Peripheral extension capabilities with PCI Express 2.0 (Gen 2, 2 lanes)

Built-in power sequencing control function of the RZ/V2MA, making power supply design easier and improving reliability

Integration of various communication devices, including a high-performance Wi-Fi module, a compact, high-performance Bluetooth® Low Energy module, and an LTE Cat-M1 cellular IoT module

Conclusion

We are experiencing a revolution in high-performance smart vision applications across a number of segments. The trend is well supported by the growing computational power of microcontrollers and microprocessors at the endpoints, opening up great opportunities for exciting new vision applications. Renesas Vision AI solutions can help you to enhance overall system capability by delivering embedded AI technology with intelligent data processing at the endpoint.

Renesas’s advanced image processing solutions at the edge are provided through a unique combination of low-power, multimodal, and multi-feature AI inference capabilities. Take the chance now and start developing your vision AI application with Renesas Electronics.

This article is based on an e-magazine: Bringing Intelligence to the Edge by Mouser Electronics and Renesas Electronics Corporation. It has been substantially edited by the Wevolver team and Electrical Engineer Ravi Y Rao. It is the second article in the Bringing Intelligence to the Edge series. Future articles will introduce readers to more trends and technologies shaping the future of Edge AI.

This introductory article unveils the "Bringing Intelligence to the Edge" series, exploring the transformative potential of AI at the Edge.

The first article examines the challenges and trade-offs of integrating AI into IoT devices, emphasizing the importance of balancing performance, ROI, feasibility, and data considerations for successful implementation.

This second article delves into the transformative role of Endpoint AI and embedded vision in tech applications, discussing its potential, challenges, and the advancements in processing data at the source.

The third article delves into the intricacies of TinyML, emphasizing its potential in edge computing and highlighting the four crucial metrics - accuracy, power consumption, latency, and memory requirements - that influence its development and optimization.

The fourth article delves into the realm of data science and AI-driven real-time analytics, showcasing how AI's precision and efficiency in processing big data in real-time are transforming industries by recognizing patterns and inconsistencies.

The fifth article delves into the integration of voice user interface technology into microcontroller units, emphasizing its transformative potential.

The sixth article delves into the profound impact of edge AI on system optimization, maintenance, and anomaly detection across diverse industries.

About the sponsor: Mouser Electronics

Mouser Electronics is a worldwide leading authorized distributor of semiconductors and electronic components for over 1,200 manufacturer brands. They specialize in the rapid introduction of new products and technologies for design engineers and buyers. Their extensive product offering includes semiconductors, interconnects, passives, and electromechanical components.