MultiSense SLB

A tri-modal high-resolution, high-data-rate, and high-accuracy 3D range sensor.

Technical Specifications

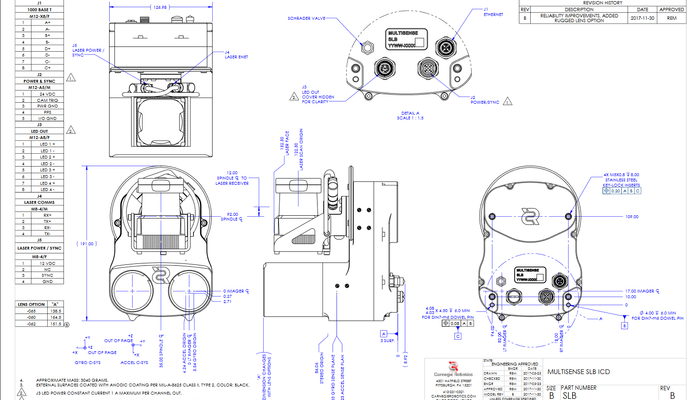

| Dimension | Value |
|---|---|
| Height | 19.1 cm |
| Width | 12.7 cm |
| Depth | 13.0 cm |

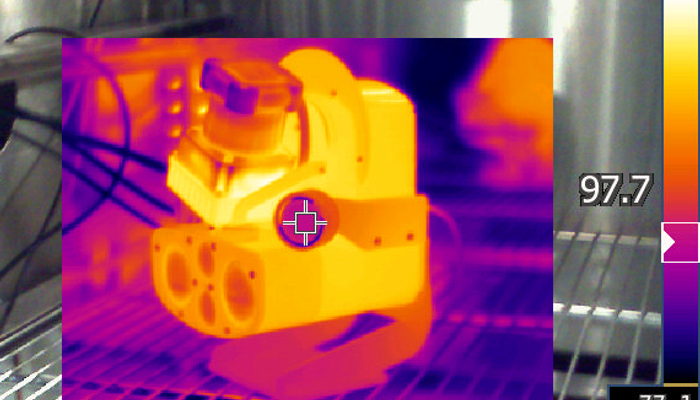

| Specification | Value |
|---|---|
| Operating temperature | -10 to +50 °C |
| Input voltage | 24 V nominal (18 - 47 V range) |
| Power draw | 20 W (up to 75 W) |
| Interfaces | |
| **Stereo details** | |
| Algorithm | |
| Range | 0.4 - 10 m |
| Standard lens FOV | |
| Focal length | |
| Imager | CMOSIS CMV2000, mono or color Bayer |
| Depth resolution @ 1 m | |
| Depth resolution @ 10 m | |
| **Laser details** | |
| Model | Hokuyo UTM-30LX-EW |
| Laser wavelength | 905 nm |
| Scan rate | 40 Hz |
| Field of view | 270° |
| Angular resolution | 0.25° |
| Detection range | 0.1 - 30 m |
| Accuracy | ±30 mm (0.1 - 10 m); ±50 mm (10 - 30 m) |
| Multi-echo | |
| IP rating | |
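The laser figures in the table are consistent with the roughly 43,000 points per second quoted for the Hokuyo in the overview: a 270° field of view sampled every 0.25° gives 1,080 samples per scan, and at 40 scans per second that is about 43,200 points per second. The short sketch below does this arithmetic. It assumes one return per sample (multi-echo output, if enabled, would multiply the count) and treats samples per scan as field of view divided by angular resolution; counting both endpoints of the sweep adds one sample per scan, which does not change the estimate meaningfully.

```python
def laser_points_per_second(fov_deg: float, resolution_deg: float,
                            scan_rate_hz: float, echoes: int = 1) -> float:
    """Estimate laser returns per second from spec-sheet values.

    Assumes samples per scan = field of view / angular resolution, with
    `echoes` returns recorded for each sample.
    """
    samples_per_scan = fov_deg / resolution_deg
    return samples_per_scan * scan_rate_hz * echoes


# Values taken from the laser details table above.
rate = laser_points_per_second(fov_deg=270.0, resolution_deg=0.25, scan_rate_hz=40.0)
print(f"{rate:,.0f} points/s")  # 43,200 points/s
```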

Overview

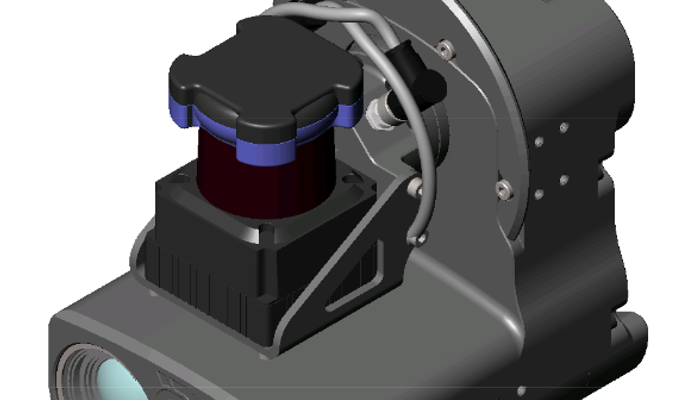

The MultiSense SLB is suitable for a wide variety of robotics, automation, and sensing applications, such as autonomous vehicles, 3D mapping, and workspace understanding. It is packaged in a rugged, compact housing together with a low-power FPGA processor, and it is pre-calibrated at the factory.

The original MultiSense SL was the sensor of choice for the Atlas humanoid robots in the DARPA Robotics Challenge (DRC). As the “head” of the humanoid, the SL provided most of the perceptual data used for teleoperation as well as for automated control.

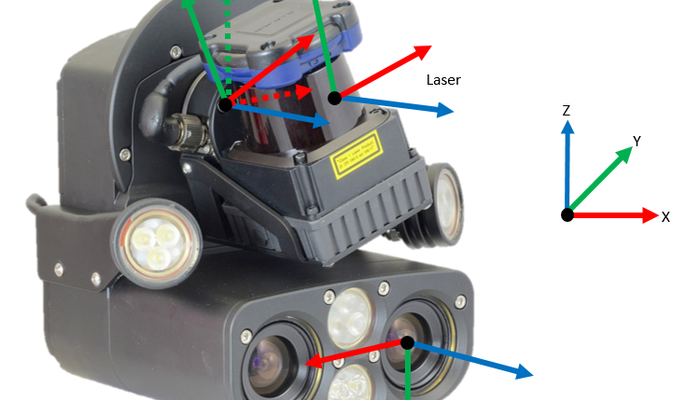

The MultiSense SLB produces 3D point clouds from both the spinning laser and the stereo camera; the two clouds are accurately aligned and colorized onboard the sensor. The stereo camera provides extremely dense “full frame” range data at high frame rates, complemented by high-accuracy data, at lower rates, from the spinning laser. The sensor can also output standard color video.
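Colorizing a point cloud amounts to projecting each 3D point into the color image and sampling the pixel it lands on. The sketch below shows that step with an idealized pinhole camera and nearest-pixel sampling. The intrinsics `fx`, `fy`, `cx`, `cy` and the assumption that points are already expressed in the color camera's frame are illustrative only; this is not the sensor's onboard implementation or its calibration model, which also accounts for lens distortion.

```python
import numpy as np


def colorize_points(points_xyz, image_rgb, fx, fy, cx, cy):
    """Attach an RGB color to each 3D point by pinhole projection.

    points_xyz: (N, 3) points in the color camera frame (z forward, meters).
    image_rgb:  (H, W, 3) uint8 image from the same camera.
    Returns (colors, valid): an (N, 3) uint8 array and an (N,) boolean mask of
    points that land inside the image. Colors of invalid points stay zero.
    """
    points_xyz = np.asarray(points_xyz, dtype=float)
    h, w = image_rgb.shape[:2]
    x, y, z = points_xyz[:, 0], points_xyz[:, 1], points_xyz[:, 2]
    in_front = z > 0
    # Substitute a dummy depth for points behind the camera; they are masked out.
    z_safe = np.where(in_front, z, 1.0)
    u = np.round(fx * x / z_safe + cx).astype(int)
    v = np.round(fy * y / z_safe + cy).astype(int)
    valid = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    colors = np.zeros((len(points_xyz), 3), dtype=np.uint8)
    colors[valid] = image_rgb[v[valid], u[valid]]
    return colors, valid


# Tiny example: a 4x4 image with one red pixel at row 2, column 3.
image = np.zeros((4, 4, 3), dtype=np.uint8)
image[2, 3] = (255, 0, 0)
pts = np.array([[0.5, 0.0, 1.0],    # projects to pixel (u=3, v=2)
                [0.0, 0.0, -1.0]])  # behind the camera, so no color
colors, valid = colorize_points(pts, image, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
```

Doing this on the sensor, as the SLB does, avoids shipping the full color image and cloud to a host just to join them.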

The MultiSense SL combines a Hokuyo UTM-30LX-EW laser and an updated Carnegie Robotics MultiSense S7 high-resolution stereo camera into a single fused GigE device. The Hokuyo, which outputs 43,000 points per second, is rotated about its axis on a spindle at a user-specified speed. The stereo camera has a 7 cm baseline and can be configured with either a 2 or 4 megapixel sensor, making it the highest-resolution commercial stereo camera available to date. On-board processing handles image rectification, stereo processing, time synchronization of laser data with a spindle encoder, spindle motor control, lighting timing, and the GigE interface. The GigE output includes time-synced laser and stereo data in a ready-to-use format. This architecture has two advantages: no powerful external computer is needed for stereo processing, and the user does not need to supply stereo processing algorithms or a stereo/laser calibration. Each MultiSense sensor ships fully calibrated from the factory.
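Because the laser spins on a spindle, each planar return only becomes a 3D point once it is paired with the spindle angle at the instant it was measured, which is why the onboard time synchronization with the spindle encoder matters. The sketch below shows the idealized geometry under stated assumptions: the scan plane is the laser's x-y plane, beam angles are measured from +x, the spindle rotates that plane about the x axis, and there are no mounting offsets. The function names are hypothetical, and the real device applies its factory laser calibration rather than this simplified model.

```python
import numpy as np


def spindle_angle_at(t, encoder_times, encoder_angles):
    """Interpolate the spindle angle (rad) at laser timestamps `t`.

    encoder_times must be increasing. Encoder angles may wrap at 2*pi, so they
    are unwrapped before linear interpolation.
    """
    return np.interp(t, encoder_times, np.unwrap(encoder_angles))


def laser_to_3d(ranges, beam_angles, spindle_angles):
    """Convert planar laser returns to 3D points in an idealized spindle frame.

    Each return at range r and beam angle theta lies at (r cos theta,
    r sin theta, 0) in the scan plane; the plane is then rotated about the
    x axis by the spindle angle phi.
    """
    r = np.asarray(ranges, dtype=float)
    theta = np.asarray(beam_angles, dtype=float)
    phi = np.asarray(spindle_angles, dtype=float)
    in_plane_x = r * np.cos(theta)
    in_plane_y = r * np.sin(theta)
    # Rotation of (x, y, 0) about the x axis by phi.
    return np.stack([in_plane_x,
                     in_plane_y * np.cos(phi),
                     in_plane_y * np.sin(phi)], axis=-1)


# Encoder reads 0 rad at t=0 s and pi/2 rad at t=0.1 s; a return at t=0.05 s
# therefore gets a spindle angle of pi/4.
phi = spindle_angle_at(0.05, [0.0, 0.1], [0.0, np.pi / 2])
point = laser_to_3d([2.0], [np.pi / 2], [phi])[0]
```

Interpolating the encoder angle per return, rather than using one angle per scan, matters because the spindle keeps turning during the 25 ms it takes to complete each scan.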

The stereo portion of the MultiSense SLB can find over 11 million feature matches every second. A precise calibration process provides the information needed to convert these pixel-level matches into accurate range measurements, while also compensating for lens distortion, small variations in lens alignment, and other manufacturing tolerances. Overlaying color image data onto the stereo point cloud produces compelling, very low-latency, life-like 3D data sets.
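For a rectified stereo pair, the standard relation between a match and its range is Z = f·B/d, where f is the focal length in pixels, B is the baseline, and d is the disparity in pixels. Differentiating gives the depth resolution, ΔZ ≈ Z²·Δd/(f·B), which grows with the square of range; that is why the specifications list depth resolution separately at 1 m and 10 m. The sketch below uses the 7 cm baseline from the overview. The 1000-pixel focal length is a hypothetical value chosen only for illustration, since the lens focal length is not given here.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Range (m) of a point from its rectified-stereo disparity (pixels): Z = f*B/d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(range_m: float, focal_px: float, baseline_m: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate range change (m) for a disparity change of `disparity_step_px`
    at `range_m`, from dZ ~= Z**2 * dd / (f * B)."""
    return range_m ** 2 * disparity_step_px / (focal_px * baseline_m)


BASELINE_M = 0.07   # 7 cm stereo baseline, from the overview
FOCAL_PX = 1000.0   # hypothetical focal length in pixels, for illustration only

for z in (1.0, 10.0):
    dz = depth_resolution(z, FOCAL_PX, BASELINE_M)
    print(f"at {z:4.1f} m: {dz:.3f} m per 1 px of disparity")
```

With these assumed numbers, a one-pixel disparity step corresponds to about 1.4 cm of range at 1 m but about 1.4 m at 10 m. Subpixel matching shrinks both figures by the same factor without changing the quadratic growth.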

A ROS-based API and tools let you view live image and 3D range data; adjust laser, camera, and stereo parameters; log data; play back logs; check the unit's calibration; and change the sensor's IP address. An open-source C++ library and Gigabit Ethernet interface make it easy to integrate live data into your robot, vehicle, mobile equipment, lab environment, or other application.
