The evolution of hardware and software console testing automation

Developing a tool that evaluates button and switch devices from both hardware (through physical input) and software perspectives is the next logical step in the evolution of device testing automation.

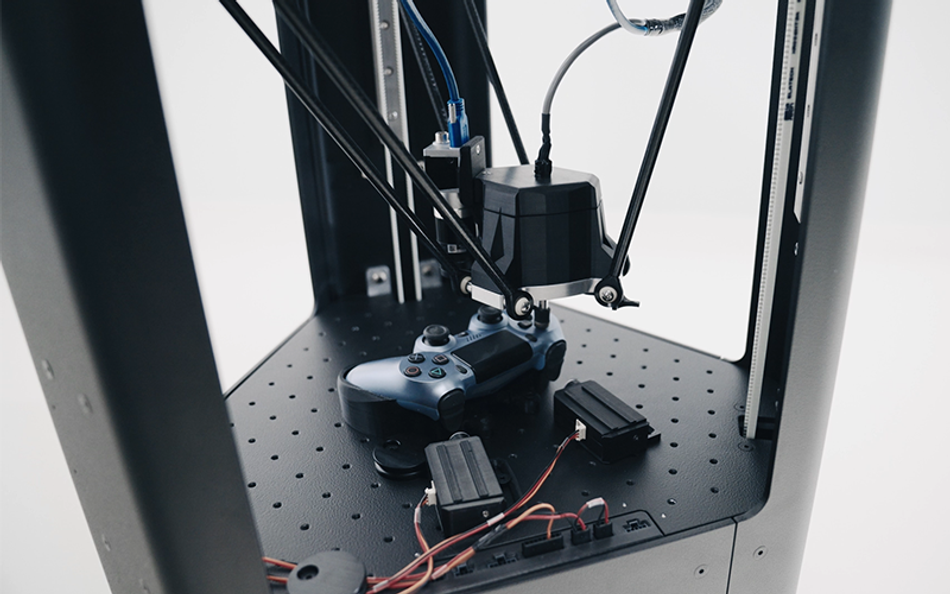

MATT interacting with both top and lateral buttons of the controller

Automation of device testing, covering both the hardware and software elements of the devices, is a steadily growing trend. Touchscreen device testing is the leading use case, with a large number of existing solutions available. However, replicating human interaction on devices with physical buttons and switches is more complex, and fewer solutions are available.

The use case

The case of MATT, a physical input device testing robot, performing test scenarios on a gaming console did not originate from a need to test the console, its controller, or a video game. It came from one client’s need to interact with those elements in order to test network security and related parameters. The use case can be summed up as executing simple commands in a video game to generate movement and thereby send network packets over Wi-Fi.

To develop the use case and integrate easily with the device, multiple add-ons had to be implemented so that MATT could accurately interact not only with the controller, but with the whole console setup.

Two technical challenges arose while creating the solution. The first was interacting with the controller’s side buttons in addition to the top ones. The second was connecting to the monitor displaying the console’s output and creating a system to monitor and analyze the captured video stream and determine whether what is shown on the screen is the correct response to the executed action.

Planning

From the beginning of the development process, the goal was for MATT to use the gaming console as a human would and to simulate an online player in a video game. This meant that the testing robot needed to navigate the console’s menu, adjust the desired settings, choose a character, and enter a game. Additionally, because MATT was not actually playing through the story line, completing missions, or following specific instructions, a series of game-specific actions was executed to prevent the servers from determining that it was not an active player.

Making MATT replicate, as closely as possible, how a human manual QA tester behaves during testing with the same requirements meant that the robot needed the dexterity, observation, and visual abilities of a person. For this reason, the plan was to create a system that would give the device testing robot the ability to recognize console-specific elements and interact with them with the precision of a real player: from menu navigation, game selection, and online game launch to acting like an active player in a platform-specific game (e.g. Mario Kart, Call of Duty, Elder Scrolls, Pokémon).

Challenges and Solutions

Building the controller and console set-up

MATT’s platform was built to physically support mobile devices (phones, tablets, or smartwatches), all mounted using the same system. Because controllers differ from everything tested until then in shape, size, utility, and way of interaction, special cradles and mechanisms were developed to hold them in place and operate them. The mechanical side of the interaction also needed to accommodate multiple console models (PlayStation, Nintendo, Xbox). To handle the diversity in shape and button placement, 3D-printed cradles were created for each console type so that its specific controller could be placed on MATT’s platform. For each controller, the position of the side buttons (L and R buttons) was analyzed, and actuators were designed and set into place for optimal interaction. Real-life operability of the consoles was ensured by actuators acting on the lateral buttons and MATT’s fingers pressing the controller’s top buttons (joystick, directional pad, menu button, menu and option selection buttons). All button presses on the controllers were executed and controlled by MATT’s software.

Using the specially designed mechanisms and cradles, the controllers and actuators were placed on MATT’s platform, and a TV monitor was set up for the console’s output. To interact accurately with the controllers, their button placement was mapped out using the calibration process included in MATT’s API. Because a gaming console’s actions can also consist of complex button combinations involving both top and lateral buttons (for example, L and the left arrow button to ‘throw’ in GTA5 on a PS4), high-level functions were implemented that automatically translate into movements of the respective actuator and MATT’s fingers. This made it possible to write test cases replicating human interaction with the console.
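To illustrate the idea of a high-level combination function, here is a minimal sketch in Python. It is not MATT’s actual API: the button names, the calibration coordinates, the actuator channel numbers, and the `Step` and `plan_combo` names are all hypothetical, chosen only to show how one combination could be expanded into ordered finger and actuator movements.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical calibration output (not real MATT data):
# top buttons map to platform coordinates in mm for MATT's fingers,
# lateral buttons map to an actuator channel.
TOP_BUTTONS: Dict[str, Tuple[float, float]] = {
    "dpad_left": (42.0, 61.5),
    "cross": (118.0, 58.0),
}
LATERAL_BUTTONS: Dict[str, int] = {"L1": 0, "R1": 1}


@dataclass(frozen=True)
class Step:
    device: str     # "finger" or "actuator"
    action: str     # "press" or "release"
    target: object  # (x, y) for a finger, channel index for an actuator


def plan_combo(buttons: List[str]) -> List[Step]:
    """Translate a button combination into ordered low-level steps.

    Buttons are pressed in the order given and released in reverse order,
    so earlier buttons stay held while later ones are pressed.
    """
    presses: List[Step] = []
    for name in buttons:
        if name in LATERAL_BUTTONS:
            presses.append(Step("actuator", "press", LATERAL_BUTTONS[name]))
        elif name in TOP_BUTTONS:
            presses.append(Step("finger", "press", TOP_BUTTONS[name]))
        else:
            raise KeyError(f"button not calibrated: {name}")
    releases = [Step(s.device, "release", s.target) for s in reversed(presses)]
    return presses + releases


# Example: hold a lateral button, tap left on the directional pad, release both.
for step in plan_combo(["L1", "dpad_left"]):
    print(step)
```

Separating the planning of steps from their execution keeps test cases readable and lets the same combination be replayed on a different controller once its cradle has been calibrated.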

Capturing and analyzing the console's output

For touchscreen testing use cases, MATT’s computer vision API and a variety of other algorithms were used to take pictures of the device’s screen and verify whether an icon selection or a gesture was executed correctly, based on the response shown on the device’s screen.

The difficulty in the gaming console case was the response output. It did not appear on the device mounted on MATT’s platform, as it does with touchscreen devices, but on a separate TV screen. The challenge was therefore approached by first finding a method to integrate with a TV monitor and then capturing the video feed sent to the TV and performing the computer vision analysis on the console’s video stream.

The first step was to establish navigation through the console’s menu. Since gaming consoles output HDMI signals that are transmitted directly to a monitor, integrating an HDMI capture card into the computer used to run the test case turned out to be the ideal solution. This allowed real-time image recognition to be performed on the captured video stream. Using computer vision, selected menu or in-game elements were recognized, and commands were then sent to MATT to navigate further through the console’s or game’s interface: for example, MATT pressing the center of the directional pad to confirm a setting choice, or an actuator pressing the R2 side button to access the right menu tab.
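The recognition step can be pictured as template matching: a small reference image of a menu element is searched for inside each captured frame. The sketch below is an assumption about how such a check might look, not MATT’s computer vision API. It uses plain Python lists as grayscale images so that it runs without dependencies; on real capture-card frames one would more likely rely on a library routine such as OpenCV’s `cv2.matchTemplate`. The function name `find_template` and the `max_mean_diff` threshold are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def find_template(
    frame: Frame, template: Frame, max_mean_diff: float = 10.0
) -> Optional[Tuple[int, int]]:
    """Return (row, col) of the best match, or None if nothing is close enough.

    Slides the template over the frame and scores every position by the
    mean absolute pixel difference (lower is better).
    """
    fh, fw = len(frame), len(frame[0])
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    for r in range(fh - th + 1):
        for c in range(fw - tw + 1):
            diff = sum(
                abs(frame[r + i][c + j] - template[i][j])
                for i in range(th)
                for j in range(tw)
            )
            score = diff / (th * tw)
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos if best_score <= max_mean_diff else None


# Tiny synthetic example: a 2x2 "icon" pasted into a 4x5 black frame.
icon = [[200, 50], [50, 200]]
frame = [[0] * 5 for _ in range(4)]
for i in range(2):
    for j in range(2):
        frame[1 + i][2 + j] = icon[i][j]

print(find_template(frame, icon))
```

A returned position tells the test script that the expected element is on screen and where it is; `None` means the expected screen has not appeared.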

The aim of the developed mechanism was to create close synergy between what was actually shown on the TV screen and the computer vision analysis, so that accurate commands could be passed to MATT for execution and feedback loops could be constructed and monitored effectively. Whenever a button was not pressed, was pressed incorrectly, or a press was not registered, the mistake was detected and the command automatically repeated. As a result, menu navigation and joining a real game were achieved reliably, as was performing preliminary actions (charge ammo, choose character attributes, move left-right, jump, crouch) to simulate a player starting the game and prevent the servers from expelling the ‘MATT player’ from a game with human players. In the end, the system accomplished what was aimed for from the beginning: behaving and reacting as a human tester would.
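The feedback loop described above can be sketched as a press-and-verify routine that repeats a command until the screen confirms it. This is a simplified illustration under stated assumptions rather than the actual implementation: `press_until_confirmed`, its parameters, and the `FakeConsole` stand-in are hypothetical, and a real setup would also wait for the interface to settle before checking the captured frame.

```python
from typing import Callable


def press_until_confirmed(
    send_press: Callable[[], None],
    screen_shows_expected: Callable[[], bool],
    max_attempts: int = 3,
) -> int:
    """Repeat a press until the screen confirms it; return the attempts used.

    Raises RuntimeError if the expected screen never appears.
    """
    for attempt in range(1, max_attempts + 1):
        send_press()
        if screen_shows_expected():
            return attempt
    raise RuntimeError(f"press not confirmed after {max_attempts} attempts")


class FakeConsole:
    """Stand-in for robot plus capture card: ignores the first N presses."""

    def __init__(self, missed_presses: int) -> None:
        self.missed = missed_presses
        self.presses = 0

    def press(self) -> None:
        self.presses += 1

    def confirmed(self) -> bool:
        return self.presses > self.missed


console = FakeConsole(missed_presses=1)
attempts = press_until_confirmed(console.press, console.confirmed)
print(f"confirmed after {attempts} attempt(s)")
```

Bounding the number of retries keeps a test from looping forever when the console is in an unexpected state, and the raised error gives the test case a clear failure point.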

Conclusion

The client’s final use case was to generate enough Wi-Fi traffic by creating movement inside a video game, with the purpose of capturing and analyzing the network packets sent between the gaming console and the Wi-Fi access point. At its core, the use case was based mostly on security aspects and Wi-Fi security protocols, but its design makes it applicable to other situations that require human input for navigating a menu or console controls, such as testing protocols for game designers, joystick manufacturers, and software developers.

In the end, using MATT’s computer vision API and the mechanisms specifically developed for console and controller interaction, actions and in-console or in-game element recognition are performed with the precision of a human, simulating real-life player interaction.