Optimizing Cloud and Visualization Technologies for Faster and More Accurate Simulation Solutions

The potential of simulation solutions will be unlocked by combining cloud-based services, VR/AR innovation, and Lightfield technology.

Together with Ansys, a developer of simulation software, we are creating a series of content about the technologies that drive innovation. This article was written by engineer Nicolas Sierra Sarmiento with editing from Wevolver.

Simulation Demands Intuitive 3D Visualizations

Simulation technology, made up of various software packages, enables designers and engineers to build, test, and analyze objects, systems, and ideas. Simulation software has improved dramatically over the last decade, and there is now a growing need for supporting technologies that make better use of its potential. This article examines emerging visualization technologies that will enable faster and more accurate simulation solutions. It also discusses the rise of cloud computing, which will be necessary to deliver seamless interactive experiences.

The rapid evolution of simulation technologies drives innovation in how people interact with the detailed models and data that simulation delivers. As the simulation sector continues to change rapidly, supporting mechanisms are needed to meet its demanding technical requirements and to provide better and faster data processing, tracking, measuring, and mapping of environments.

One answer to these challenges is Assistive Technologies (ATs). ATs are innovations developed to support or enhance the performance and technical capabilities of the equipment used for design, accelerated data processing, and rendering of 3D and virtual environments. Because these and other tasks require higher computational performance, computing-based ATs are becoming essential for bringing simulation technologies to more applications and devices without running into software and hardware limits. ATs include virtual reality (VR) and augmented reality (AR), as well as other emerging hardware such as 3D-enabled tablets.

Basics of Virtual Reality

In principle, VR is a computer-generated digital environment experienced through sensory stimuli presented in 2D or 3D[1]. VR offers an interactive, multi-sensory experience of a fictitious world in an enclosed environment, where the user's actions determine how the simulated world responds. Users typically experience the simulated environments by putting on immersive head-mounted displays (HMDs).

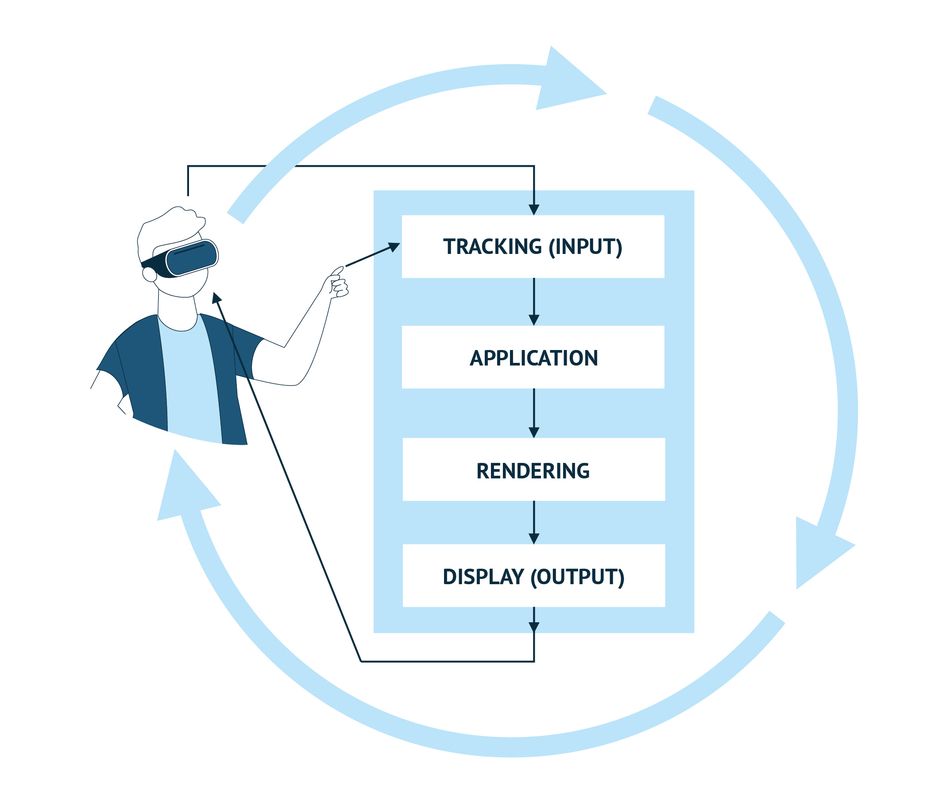

The user sends information into the system, and the system returns feedback to the user in the form of particular stimuli. Data such as eye position, user location, and hand movements is collected from the user and transmitted to the application to update geometry, user inputs, and other simulation features (Figure 02). Rendering then transforms the computer's internal representation into a form the user can perceive, delivering visual, auditory, and haptic outputs.
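
The loop described above (collect user data, update the application, render outputs) can be sketched in a few lines of Python. This is a minimal illustration only, not how any particular VR runtime works: the `UserInput` and `Scene` types, their fields, and the `update_scene`, `render`, and `run_loop` functions are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class UserInput:
    """Tracking data sampled from the user (hypothetical fields)."""
    head_position: Vec3
    hand_position: Vec3


@dataclass
class Scene:
    """Application state that is updated from the user's input."""
    camera_position: Vec3 = (0.0, 0.0, 0.0)
    cursor_position: Vec3 = (0.0, 0.0, 0.0)
    frame: int = 0


def update_scene(scene: Scene, user_input: UserInput) -> Scene:
    """Application step: fold the tracking data into the simulated world."""
    return Scene(
        camera_position=user_input.head_position,
        cursor_position=user_input.hand_position,
        frame=scene.frame + 1,
    )


def render(scene: Scene) -> Dict[str, str]:
    """Rendering step: turn scene state into one output per sense."""
    return {
        "visual": f"frame {scene.frame} drawn from camera at {scene.camera_position}",
        "audio": f"sound positioned relative to {scene.camera_position}",
        "haptic": f"feedback at hand position {scene.cursor_position}",
    }


def run_loop(inputs: List[UserInput]) -> List[Dict[str, str]]:
    """Run one update-and-render cycle per input sample."""
    scene = Scene()
    outputs = []
    for user_input in inputs:
        scene = update_scene(scene, user_input)
        outputs.append(render(scene))
    return outputs
```

Each pass through `run_loop` corresponds to one trip around the loop: input in, state updated, stimuli out. A real system would run this cycle many times per second and drive actual display, audio, and haptic hardware instead of returning strings.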

Basics of Augmented Reality

In contrast to VR, where the environment is fictitious, AR is a set of technologies that helps users see the real world differently. With the aid of computer-generated images, a processor, and remote (hosted in the cloud or downloaded directly over the internet) or local data sources, a device can augment the real world by superimposing virtual objects that can be seen or perceived in real time[2]. AR can be created anytime and anywhere, using either fixed systems (e.g., rooms) or movable systems (e.g., phones). Combined with cloud computing, real-time AR simulation can be experienced in low-compute environments and on low-compute devices.
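
One common way to superimpose a virtual object on a real image is alpha blending, where each output pixel is a weighted mix of the camera pixel and the virtual pixel. The sketch below illustrates that general technique on tiny images stored as nested lists; it is not taken from any AR product, and the names `blend_pixel` and `superimpose` are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def blend_pixel(real: Pixel, virtual: Pixel, alpha: float) -> Pixel:
    """Mix one camera pixel with one virtual pixel.

    alpha = 0.0 keeps the real pixel, alpha = 1.0 shows only the virtual one.
    """
    return tuple(round(alpha * v + (1.0 - alpha) * r) for r, v in zip(real, virtual))


def superimpose(frame: Image, overlay: Image, alpha_mask: List[List[float]]) -> Image:
    """Blend a virtual overlay onto a camera frame using a per-pixel alpha mask."""
    return [
        [blend_pixel(r, v, a) for r, v, a in zip(frame_row, overlay_row, alpha_row)]
        for frame_row, overlay_row, alpha_row in zip(frame, overlay, alpha_mask)
    ]


# A 1x3 grey camera frame with a red virtual object covering the right side.
camera_frame = [[(100, 100, 100), (100, 100, 100), (100, 100, 100)]]
virtual_layer = [[(255, 0, 0), (255, 0, 0), (255, 0, 0)]]
mask = [[0.0, 0.25, 1.0]]

augmented = superimpose(camera_frame, virtual_layer, mask)
print(augmented)  # [[(100, 100, 100), (139, 75, 75), (255, 0, 0)]]
```

The mask controls where the virtual object appears: the left pixel stays fully real, the middle pixel is a partial mix, and the right pixel is fully virtual.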

The Next Generation of VR/AR Solutions

Ansys has developed a physics-based, real-time solution for design evaluation called VRXPERIENCE Perceived Quality[3]. It uses VR technologies that allow interactive tracing and configuration of prototype features while aiming for high accuracy. Prototypes can be significantly improved with predictive and validating options that help control the impact of light, color, and material variation. This enables engineers and designers to make better product design decisions using virtual prototypes.

Ansys VRXPERIENCE allows users to import CAD models and refine them using light, color, and material libraries. Because it is based on the physics of light, it can deliver accurate rendering through optical propagation capabilities that can be manipulated in 360° sets. The service enables scalable rendering, with calculations designed to find the best balance between accuracy and interactivity. HMD-based visualization can guide users to improve their designs efficiently through different simulations. As a result, productivity and prototype quality can increase considerably once VR is used for prototyping.

The Lume Pad Tablet

To date, most devices used to generate 3D images are based on stereoscopy. This technique creates an impression of depth on a flat screen but does not produce images vivid enough to replicate what the human eye sees, which is one of the factors severely limiting the optical delivery of VR/AR services. Lightfield technology is one solution to this challenge. It captures the radiance of light rays coming from different directions and uses the angular information obtained at different distances and positions in the scene to provide rich, intense visual experiences[4].

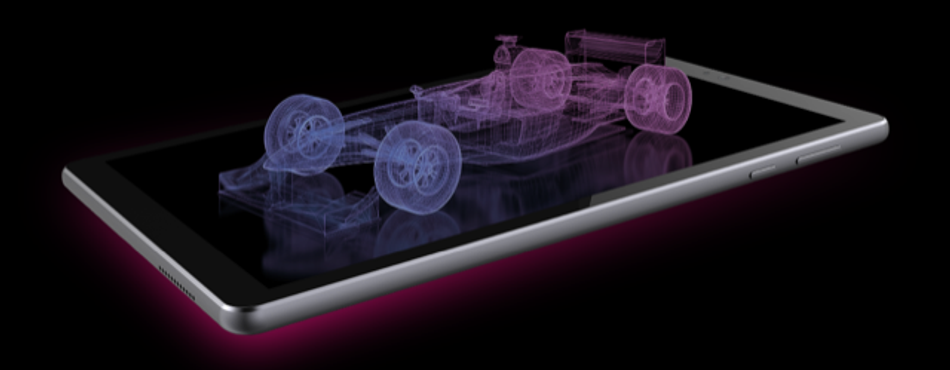

California-based company Leia has built upon Lightfield’s characteristics and developed a patented technology called Diffractive Lightfield Backlighting (DLB™), which uses finely tuned nano-structures to project a dense Lightfield from a transparent, edge-lit substrate. The Lume Pad is Leia’s keystone product. It can send variable amounts of light in different directions, resulting in content that can be examined in high-fidelity 3D without the use of glasses or eye-tracking.

With DLB™, the tablet offers a 2D resolution of 2560×1600, while in Lightfield mode about 16 images are sent to the display simultaneously, each Lightfield view having a resolution of 640×400. In addition to the DLB™ technology, Leia relies on other software and hardware to achieve the overall performance of the Lume Pad, in particular nanotechnology, manufacturing, video processing tools, and the development of its own Lightfield (LF) content. The Lume Pad is helping advance the way simulation can be experienced and potentially broadening the way simulation is delivered to different applications. One exciting example is the possibility for DLB™ to offer a much more interactive and intuitive way to view and understand the digital twins of smart buildings or public infrastructure, opening this critical tool to more users.
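
The Lightfield figures quoted above can be put in perspective with simple arithmetic. The sketch below multiplies the per-view resolution by the number of views to get the total number of pixels sent to the display at once. The function name is illustrative, and the inputs are the per-view resolution and view count given in the text.

```python
def lightfield_pixel_budget(view_width: int, view_height: int, num_views: int) -> int:
    """Total pixels needed to show all Lightfield views simultaneously."""
    return view_width * view_height * num_views


pixels_per_view = lightfield_pixel_budget(640, 400, 1)
total_pixels = lightfield_pixel_budget(640, 400, 16)

print(pixels_per_view)  # 256000
print(total_pixels)     # 4096000
```

In other words, showing 16 views of 640×400 at the same time takes roughly 4.1 million pixels, with each individual view receiving one sixteenth of that budget.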

Technological Convergence to Deliver the Future of Simulation

Graphics processing units (GPUs) work alongside central processing units (CPUs) to enhance visualization on 2D surfaces[5]. They take over functions off-loaded from the CPU that have a high degree of parallelism, for example, evaluating several mathematical functions or processing many data points simultaneously. High-performance computing (HPC) and deep learning are among the technologies that take advantage of modern GPUs. However, traditional GPUs might not be enough to meet the requirements of new simulation tools. Common limiting factors are equipment availability (including price), processor performance, data storage, the growth of digital collaborative work, and run times for data processing. High-end 3D visualizations require other types of infrastructure.

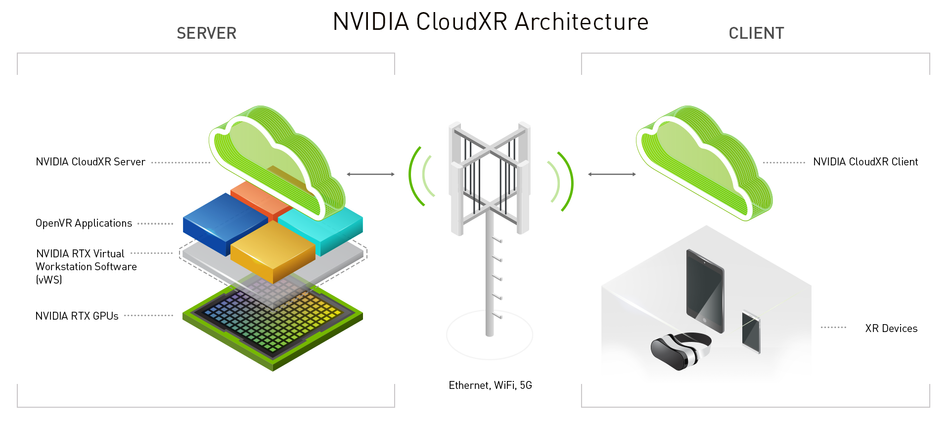

For data processing, cloud-based GPUs offer a way to handle heavy data loads and stream visualizations back to remote devices in real time. An example of cloud-based immersive streaming is NVIDIA’s CloudXR, which delivers graphics-intensive VR/AR experiences over wireless or Ethernet networks. Simulation-focused companies such as Ansys are integrating NVIDIA cloud services into some of their products.

To improve graphical processing, Ansys uses cloud-based GPU virtualization to accelerate its simulation and rendering. When run in a virtualized environment, NVIDIA CloudXR leverages NVIDIA RTX Virtual Workstation (RTX vWS) to stream fully accelerated immersive graphics to a remote device such as an HMD or the Lume Pad. By running their simulations remotely on powerful GPUs and streaming them with CloudXR and RTX vWS, Ansys users can visualize and interact with their designs without interrupting their simulation.
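
To get a feel for the data volumes involved in streaming rendered graphics to a remote device, it helps to estimate the raw data rate of an uncompressed video stream. The sketch below is a back-of-the-envelope calculation only. The resolution, frame rate, and bit depth are assumed values chosen for illustration, not CloudXR or RTX vWS specifications, and the function name is hypothetical.

```python
def raw_stream_mbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed video data rate in megabits per second."""
    bits_per_second = width * height * fps * bits_per_pixel
    return bits_per_second / 1_000_000


# Assumed example: a 1920x1080 stream at 60 frames per second, 24-bit color.
rate = raw_stream_mbps(1920, 1080, 60)
print(f"{rate:.1f} Mbit/s")  # 2986.0 Mbit/s
```

At roughly 3 Gbit/s before compression for even this modest example, it becomes clear why streamed frames are typically compressed and why network quality matters for remote visualization.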

Conclusion

As simulation becomes increasingly integrated into design, monitoring, testing, and other activities across all industries, assistive technologies that enable us to engage more richly with simulation will continue to appear. With the aid of these technologies, simulation will keep expanding as a method of design, research, monitoring, and testing.

References

1. Jerald J. The VR Book: Human-Centered Design for Virtual Reality. 1st ed. Özsu MT, Hart JC, editors. Morgan & Claypool Publishers; 2016. 636 p.

2. Furht B, Carmigniani J, Hugues O, Fuchs P, Nannipieri O, Kalkofen D, et al. Handbook of Augmented Reality. Furht B, editor. New York: Springer; 2011. 769 p.

3. Ansys. VRXPERIENCE Perceived Quality. © 2020 ANSYS, Inc. All Rights Reserved.

4. Mahmoudpour S, Schelkens P. On the performance of objective quality metrics for lightfields. Signal Process Image Commun [Internet]. 2021;93(January):116179. Available from: https://doi.org/10.1016/j.image.2021.116179

5. Parker M. Digital Signal Processing 101. 2nd ed. Jonathan Simpson; 2017. 411 p.