How CAMS, the Cameras for Allsky Meteor Surveillance Project, detects long-period comets through machine learning

Designed to monitor the whole sky for meteors, which can be traced back to their cometary origins, the CAMS project has recently received a major upgrade to its detection and visualization pipeline, with the SpaceML project bringing citizen scientists into the mix.

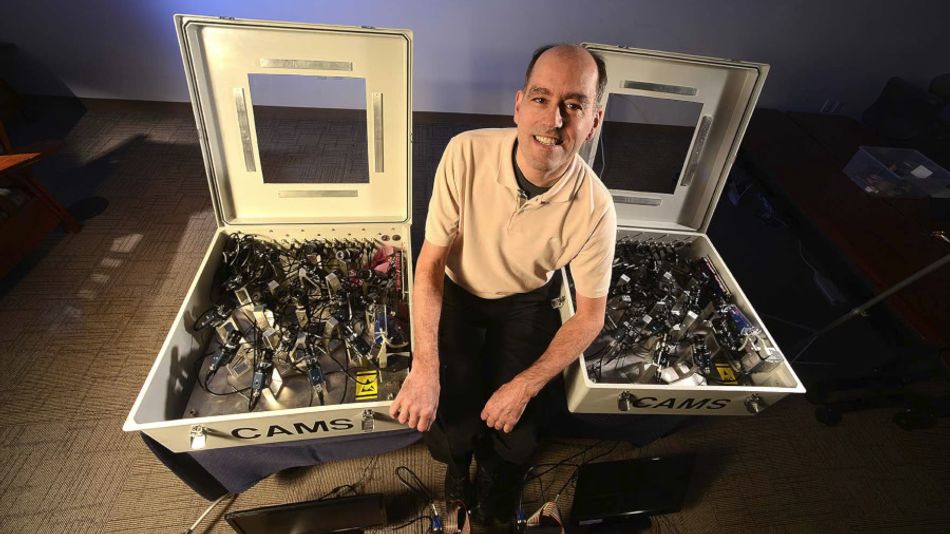

The CAMS project uses affordable low-light camera arrays to monitor the skies, with an AI-equipped pipeline for automated detection and tracking.

Long-period comets (LPCs) are both hard to find and a considerable risk to the Earth’s ecosphere. Scientific evidence points to the impact of an object around 10 km (about 6.2 miles) across as the cause of the mass extinction that wiped out most dinosaur species, so there’s considerable interest in providing as much early warning of a potential impact as possible. That is where the CAMS project comes in.

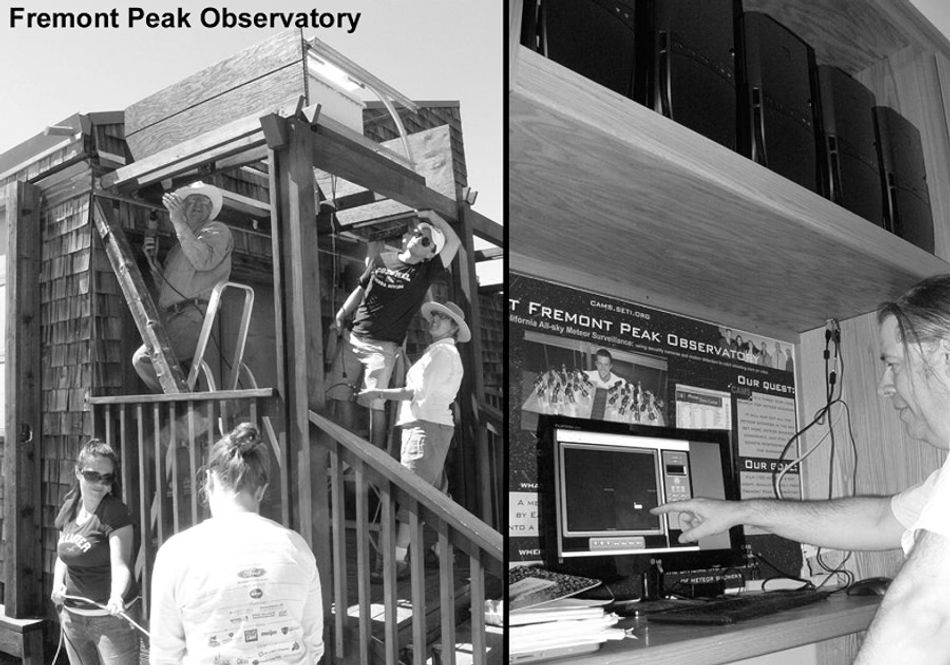

The Cameras for Allsky Meteor Surveillance (CAMS) project went live in October 2010 with the installation of low-light camera systems at the Fremont Peak Observatory in California. By recording imagery from these cameras with a specially developed compression system and passing it through software designed to detect meteors, the project offered a chance to spot evidence of long-period comets that other observation approaches could miss. As additional monitoring stations went online, the number of meteors it found, and could tie back to previously unknown long-period comets, increased accordingly.

Despite software assistance, however, the monitoring process required considerable human intervention, to the point where data was only collected from the sites on a bimonthly basis. Daily updates, in which a night’s observations would be available to the scientific community for analysis the very next day, would need something different: The application of machine learning.

The CAMS AI pipeline

Designed to reduce the amount of work required of human operators, the current artificial intelligence pipeline available to CAMS sites was outlined in a paper presented at the International Meteor Conference (IMC) 2017 and published in its proceedings.

“We set out to improve and automate the classification of meteors from non-meteors using machine learning and deep learning approaches,” Marcelo De Cicco and colleagues explain in the paper. The goal: The complete removal of the human element from the CAMS data handling pipeline, without any reduction in the accuracy of the processed results.

The result is a new, six-stage pipeline. First, the local machines at each operator site, which capture the sky data, process it on the spot to determine whether a flagged object is a meteor or a non-meteor; the latter category includes clouds, planes, birds, and other objects that could trigger a false detection in the system.

For this, the team developed three models: a random forest classifier offering a binary meteor or non-meteor classification, a convolutional neural network (CNN) that outputs a probability score for a series of image frames, and a long short-term memory (LSTM) network designed to predict the likelihood that a light curve tracklet corresponds to a meteor. Precision and recall scores in the high-eighty to low-ninety per cent range were recorded.
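The paper’s figures are precision and recall scores, but the article does not say how the three models’ outputs are combined. As a minimal sketch, assuming a simple soft-voting scheme (average the three per-detection meteor probabilities and threshold at 0.5, both assumptions of ours), the snippet below shows how combined predictions would be scored. The function names and all numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def soft_vote(prob_lists: Sequence[Sequence[float]], threshold: float = 0.5) -> List[bool]:
    """Average each detection's meteor probability across models and threshold it.

    Hypothetical combination scheme: the source does not say how CAMS merges
    the random forest, CNN, and LSTM outputs.
    """
    n = len(prob_lists[0])
    if any(len(p) != n for p in prob_lists):
        raise ValueError("all models must score the same detections")
    return [sum(p[i] for p in prob_lists) / len(prob_lists) >= threshold for i in range(n)]


def precision_recall(predicted: Sequence[bool], actual: Sequence[bool]) -> Tuple[float, float]:
    """Precision = TP / (TP + FP), recall = TP / (TP + FN); 'meteor' is the positive class."""
    tp = sum(p and a for p, a in zip(predicted, actual))
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up probabilities for five flagged detections from three models
forest = [0.90, 0.20, 0.70, 0.40, 0.80]
cnn = [0.80, 0.10, 0.60, 0.70, 0.30]
lstm = [0.95, 0.30, 0.40, 0.60, 0.20]
predicted = soft_vote([forest, cnn, lstm])
actual = [True, False, True, False, True]  # hypothetical human-verified labels
precision, recall = precision_recall(predicted, actual)
```

Precision penalizes non-meteors (planes, birds, clouds) that slip through as meteors, while recall penalizes real meteors that are thrown away, which is why the two are usually reported together.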

The second stage is to retrieve the data from the remote site, a step which previously relied on in-person bimonthly visits and the pickup of physical DVD media on which the data had been burned. In its place: An automated data transmission via the File Transfer Protocol (FTP), placing data from multiple sites onto a single server for further processing.
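A minimal sketch of the upload side using Python’s standard `ftplib`, assuming a per-station, per-night directory on the central server. The directory layout, station ID, and function name are hypothetical; the article only says that FTP replaced the DVD pickups. The function accepts any object with `ftplib.FTP`’s `mkd`, `cwd`, and `storbinary` methods, so it can be exercised without a live server.

```python
import ftplib
from pathlib import Path
from typing import Iterable, List


def upload_night(ftp: ftplib.FTP, station_id: str, night: str, files: Iterable[Path]) -> List[str]:
    """Upload one night's capture files into <station_id>/<night>/ on the server.

    The directory scheme is a hypothetical example, not the actual CAMS layout.
    Returns the remote file names that were stored.
    """
    for part in (station_id, night):
        try:
            ftp.mkd(part)  # create the directory if it does not exist yet
        except ftplib.error_perm:
            pass  # many servers reply with a 5xx error when it already exists
        ftp.cwd(part)
    stored = []
    for path in files:
        with open(path, "rb") as fh:
            # STOR transfers the file in binary mode under its base name
            ftp.storbinary(f"STOR {path.name}", fh)
        stored.append(path.name)
    return stored


# Example usage (placeholder host and credentials, not a real CAMS server):
# with ftplib.FTP("ftp.example.org") as ftp:
#     ftp.login("station-user", "password")
#     upload_night(ftp, "station42", "2021-08-12", sorted(Path("captures").glob("*.bin")))
```

Sending each station’s files into its own directory keeps data from multiple sites on one server without name collisions, which the later processing stages can then pick up per station and per night.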

That processing, the third pipeline stage, is handled by a series of Python scripts that interface with and automate CAMS’ existing software stack, including MeteorCal, which integrates additional information such as details of the site, the cameras installed, and star measurements.
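The article does not list the individual scripts, but the general pattern of driving an existing command-line toolchain from Python can be sketched with the standard `subprocess` module. The step names and commands below are placeholders (MeteorCal’s actual command-line interface is not described in the source): each step runs in order, and the batch stops at the first failure so that a broken night is not passed downstream.

```python
import subprocess
import sys
from typing import List, Optional, Sequence, Tuple


def run_steps(steps: Sequence[Tuple[str, List[str]]], workdir: Optional[str] = None) -> List[str]:
    """Run each (name, command) pair in order; raise on the first non-zero exit.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, cmd in steps:
        result = subprocess.run(cmd, cwd=workdir, capture_output=True, text=True)
        if result.returncode != 0:
            raise RuntimeError(
                f"step {name!r} failed with exit code {result.returncode}: {result.stderr.strip()}"
            )
        completed.append(name)
    return completed


# Hypothetical stand-ins for the real CAMS tools: each just runs a trivial Python command
steps = [
    ("calibrate", [sys.executable, "-c", "print('calibration stand-in')"]),
    ("detect", [sys.executable, "-c", "print('detection stand-in')"]),
]
```

Failing fast, rather than continuing past an error, is a design choice for this sketch: later stages such as coincidence calculation depend on earlier outputs being complete.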

The fourth stage is coincidence calculation, which takes confirmed meteors and combines information from multiple cameras to generate a trajectory, identifying deviations in the video capture that could lead to errors and correcting them automatically. As with the verification stage, the automated approach, based on classifiers that look at light curve shape and maximum errors in geographic position, is designed to considerably reduce the human labor involved in the process.
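As a simplified illustration of why multiple cameras are needed, the sketch below treats two stations’ views of the same meteor point as straight lines of sight in a shared Cartesian frame (hypothetical coordinates in km) and finds where the lines pass closest to each other. This is a textbook closest-approach calculation, not the trajectory method CAMS itself uses. The miss distance it returns is the kind of position error that a quality check could compare against a maximum.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(p: Vec, d: Vec, t: float) -> Vec:
    return (p[0] + t * d[0], p[1] + t * d[1], p[2] + t * d[2])


def triangulate(p1: Vec, d1: Vec, p2: Vec, d2: Vec) -> Tuple[Vec, float]:
    """Closest approach of the lines p1 + s*d1 and p2 + t*d2.

    p1, p2 are camera positions; d1, d2 are viewing directions.
    Returns (midpoint between the closest points, miss distance).
    """
    w0 = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) <= 1e-12 * a * c:
        raise ValueError("lines of sight are (nearly) parallel or degenerate")
    s = (b * e - c * d) / denom  # parameter of the closest point on line 1
    t = (a * e - b * d) / denom  # parameter of the closest point on line 2
    q1, q2 = _along(p1, d1, s), _along(p2, d2, t)
    midpoint = ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2, (q1[2] + q2[2]) / 2)
    return midpoint, math.dist(q1, q2)


# Two hypothetical stations whose sight lines miss each other by 2 km
midpoint, miss_km = triangulate((0, 0, 0), (1, 0, 0), (5, -1, 2), (0, 1, 0))
```

A single camera only gives a direction; with two or more, the intersection fixes the meteor’s position in space, and a large miss distance flags a measurement that needs correction or rejection.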

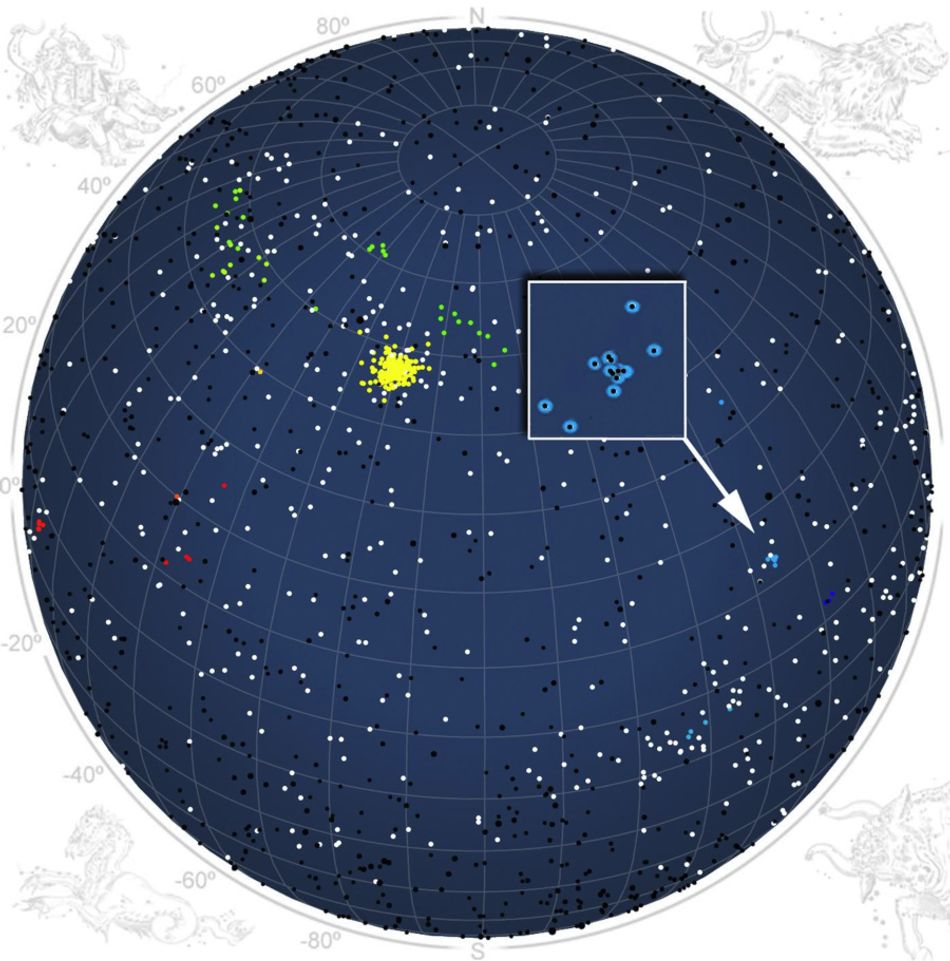

The fifth stage is data clustering: identifying outbursts and new showers that could indicate the presence of a previously unknown long-period comet. The pipeline first processes the meteor parameters with t-distributed Stochastic Neighbor Embedding (t-SNE), an unsupervised machine learning technique for dimensionality reduction, and then applies density-based spatial clustering of applications with noise (DBSCAN) to identify groupings. This lets it spot both previously unidentified meteor shower groups and potential meteor outbursts.
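Implementing t-SNE by hand is beyond a short example, so the sketch below assumes the t-SNE step has already produced 2-D coordinates and shows only the DBSCAN grouping, in plain Python. The `eps` and `min_samples` values are illustrative, not the pipeline’s settings, and the points are made up. In practice a library implementation such as scikit-learn’s `DBSCAN` would normally be used; this version uses the same convention of labelling noise as `-1`.

```python
import math
from typing import List, Optional, Sequence, Tuple

NOISE = -1


def dbscan(points: Sequence[Tuple[float, float]], eps: float, min_samples: int) -> List[int]:
    """Label each point with a cluster index (0, 1, ...) or NOISE (-1).

    A point is a core point if at least `min_samples` points (itself included)
    lie within distance `eps`; clusters grow outward from core points.
    O(n^2) neighbour search, fine for a small illustration.
    """
    def neighbours(i: int) -> List[int]:
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        cluster += 1
        labels[i] = cluster
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbours(j)
            if len(more) >= min_samples:
                seeds.extend(more)  # j is also a core point: keep expanding
    return labels  # every entry has been assigned by now


# Made-up 2-D embedding: two tight groups and one isolated meteor
embedded = [(0, 0), (0, 0.1), (0.1, 0), (5, 5), (5, 5.1), (5.1, 5), (10, -10)]
labels = dbscan(embedded, eps=0.5, min_samples=3)
```

DBSCAN suits this task because it does not need the number of showers in advance and leaves sporadic meteors unassigned as noise rather than forcing them into a group.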

Accessible visualization

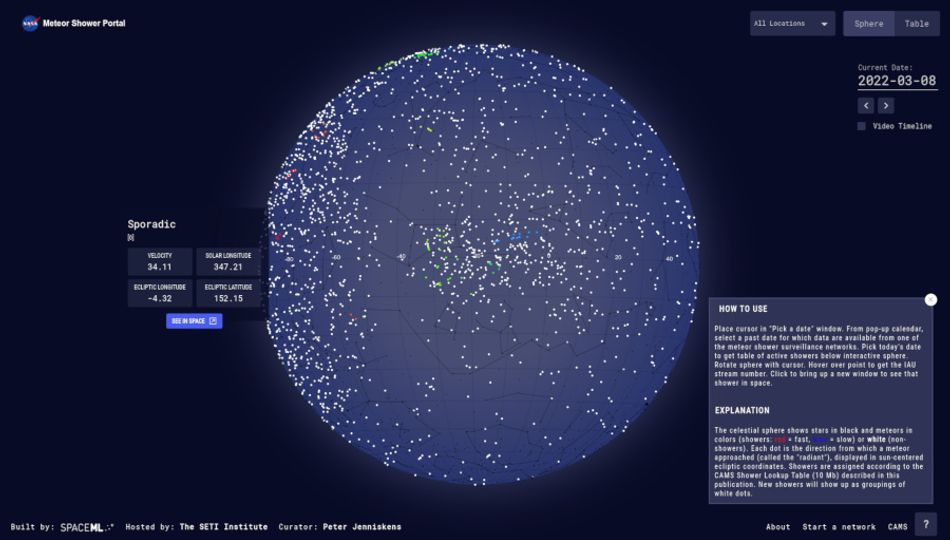

The sixth stage, meanwhile, is the most visible. CAMS data which has been through all five of the previous stages is, in its raw form, accessible only to scientists trained in its interpretation. To make it more broadly available, it has to be rendered into a more accessible form: A visualization step.

This final stage takes the data and, using custom-written JavaScript, renders it onto a freely rotatable sphere viewable in any modern web browser, even on smartphones and tablets. Users, whether investigating the data for scientific purposes or out of pure curiosity, can examine the whole sky, either using the freshest data gathered from each station’s overnight capture or walking backwards through time all the way to CAMS’ original captures in 2010.
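The portal’s renderer is written in JavaScript and its internals are not described here. The Python sketch below only shows the standard geometry such a view rests on: converting a radiant’s angular coordinates (assumed here to be a longitude/latitude pair in degrees, in whatever sky frame the portal uses) to a point on a unit sphere, and rotating that sphere about its polar axis as a user dragging the view would. The function names are hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def to_unit_vector(lon_deg: float, lat_deg: float) -> Vec:
    """Convert a longitude/latitude pair in degrees to a point on the unit sphere."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def rotate_z(v: Vec, angle_deg: float) -> Vec:
    """Rotate a point counter-clockwise about the sphere's polar (z) axis."""
    a = math.radians(angle_deg)
    x, y, z = v
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a), z)


# A hypothetical radiant, placed on the sphere and then spun by 30 degrees
radiant = to_unit_vector(37.0, -12.0)
spun = rotate_z(radiant, 30.0)
```

Working with unit vectors keeps every radiant on the sphere’s surface however the view is rotated, so a renderer only has to apply the current rotation before projecting points to the screen.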

This ability to compare current activity to previous data provides an easy way to visualize unusual activity and new showers. More than this, however, it has the potential to make the subject accessible to a far broader audience.
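The article does not say how unusual activity is judged. One simple way to compare a night against the archive, shown here as a hypothetical sketch, is to flag a shower whose nightly meteor count rises more than `k` standard deviations above its historical mean for the same period; the threshold `k = 3`, the function name, and the counts are all illustrative assumptions.

```python
import statistics
from typing import Sequence


def is_unusual(tonight: int, history: Sequence[int], k: float = 3.0) -> bool:
    """Flag tonight's count if it exceeds the historical mean by more than k standard deviations.

    Hypothetical outburst test; not the criterion used by CAMS.
    """
    if len(history) < 2:
        raise ValueError("need at least two past nights to estimate the spread")
    mean = statistics.mean(history)
    spread = statistics.stdev(history)  # sample standard deviation
    return tonight > mean + k * spread


# Made-up nightly counts for one shower on the same dates in earlier years
past_counts = [10, 12, 11, 9, 10, 13, 11]
outburst = is_unusual(40, past_counts)
quiet = is_unusual(12, past_counts)
```

A fixed-sigma rule like this is easy to explain but sensitive to how much history is available; with only a few past nights, the estimated spread is unreliable.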

“This new method of visualizing meteor radiants,” the team notes, “will improve public interest in meteor science, as well as allowing a novel approach to studies of new meteor showers.”

For CAMS, that’s a key feature. While the project launched with only a small number of professionally operated stations, it has since opened its doors to the wider citizen science community, pulling in data from observation stations with as few as one or two cameras and processing it through the same automated pipeline to increase the sky surveillance coverage available to the project.

SpaceML and citizen science

A key contributor to the CAMS AI pipeline, and the CAMS project more broadly, is SpaceML, an extension of the NASA Frontier Development Lab AI accelerator founded with the goal of distributing open-source research and attracting citizen scientists to contribute to project development and deployment.

Built around the idea of applying startup and agile software development principles to scientific research, as a means of reducing time-to-success, the SpaceML program takes selected projects from the Frontier Development Lab — including, but not limited to, the CAMS AI pipeline — and provides extended development with a view towards real-world deployment rather than pure research.

A notable aspect of the program is its open-source nature, which aims to lower the barrier to entry for would-be citizen scientists. FDL researchers provide SpaceML volunteers with small “extension projects,” often focused on nice-to-have features rather than core functionality, along with guidance, access to cloud computing resources via Google Colab, and, once a project has reached sufficient maturity, mentorship.

“SpaceML has a focused aim of converting research into product,” a paper presented at the COSPAR 2021 Cross-Disciplinary Workshop on Cloud Computing explains, “reaching the highest NASA Technology Readiness Level (TRL 9).”

SpaceML’s pilot run in 2020 attracted high school students from across the world, none of whom had any prior background in AI projects. Despite this, they contributed to research on topics including self-supervised learning on unlabelled satellite imagery, unlabelled data balancing, and multi-resolution satellite data search, as well as improvements to the CAMS AI pipeline beyond the state of the art detailed in the project’s 2017 paper.

SpaceML community members, working on projects identified by Siddha Ganju, SpaceML’s CAMS AI project lead and a co-author of the original CAMS machine learning paper, have helped optimise the pipeline and make the data even more accessible.

Burcin Bozkaya, director of the applied data science graduate program at the New College of Florida, fielded seven students for CAMS projects under SpaceML. “Two teams working on a dataset and a problem for 13 weeks did pay off,” Bozkaya writes of the experience.

“The teams were able to improve the earlier prediction results obtained by SpaceML by a significant margin, thanks to two genuine ideas: a) a parallel LSTM model that is much better suited to the dataset provided, because the data represent multiple cameras and their associated data records, and b) a CNN-LSTM model that proved to be quite effective and also much faster, thanks to the CNN layers’ ability to extract critical features for the LSTM to take advantage of.”

Another contributor, Sahyadri Krishna, worked on an effort to cover a blind spot in the CAMS camera network over India. “My journey with the CAMS project started with the intention of helping to build techniques that would bridge this gap in observations,” he writes. “As I embarked on the Indian extension of the program, I learnt valuable techniques and gained significant experience working with peers internationally.”

Yet another SpaceML community contributor, this time located in Nigeria, improved both the pipeline and the interactive visualization tool, which now includes the ability to zoom in on individual meteor showers, displays constellations as a visual reference, and provides data export functionality, all of it developed at a local cyber-cafe.

Onwards and upwards

Between all the parties involved, the CAMS project has gone from strength to strength. In 2015, the camera network helped researchers retrieve a meteorite that landed in California, tracing its potential origin to the Gefion asteroid family; that same year, it identified a new meteor shower called the Volantids; and in 2016, it guided researchers to another asteroid fragment in the Apache White Mountain reservation, whose origin was traced to the asteroid belt between Mars and Jupiter.

In 2019, a meteor shower was detected and potentially tied to a comet described in the book Histories of the Wars from 533 AD; later that year an outburst was identified and used to refine the orbital period of the Grigg-Mellish comet originally observed in 1907; and in 2020 a shower led to the discovery of a previously-unknown long-period comet, to name just some of the project’s achievements.

By 2020, improvements and enhancements to the CAMS project had led to new and unusual meteor showers being reported almost monthly from a network that has now grown to over 600 cameras globally, and there’s no sign of that slowing down. The project recently oversaw the creation of a student-run observation station in India, in partnership with the IISER Tirupati Astronomy Club CELESTIC, to cover the current observation gap in the northeastern hemisphere, and is raising funds for its operation and further expansion.

More information on CAMS is available on the SETI Institute website, while SpaceML has its own site covering its CAMS work and more, thanks to the work of contributors Alfred Emmanuel, Julia Nuygen, Sahyadri Krishna, Chicheng Ren, Jesse Lash, Chad Roffey, Amartya Hatua, and Siddha Ganju.

References

Peter Jenniskens, Peter S. Gural, Loren Dynneson, B. J. Grigsby, Kevin E. Newman, Mike Borden, Mike Koop, David Holman: CAMS: Cameras for Allsky Meteor Surveillance to establish minor meteor showers, Icarus Vol. 216 Iss. 1. DOI 10.1016/j.icarus.2011.08.012.

Marcelo De Cicco, Susana Zoghbi, Andres P. Stapper, Antonio J. Ordoñez, Jack Collison, Peter S. Gural, Siddha Ganju, José-Luis Galache, and Peter Jenniskens: Artificial intelligence techniques for automating the CAMS processing pipeline to direct the search for long-period comets, Proceedings of the International Meteor Conference 2017. ui.adsabs.harvard.edu/abs/2018pimo.conf…65D

Anirudh Koul, Siddha Ganju, Meher Kasam, and James Parr: SpaceML: Distributed Open-source Research with Citizen Scientists for the Advancement of Space Technology for NASA, COSPAR 2021 Workshop on Cloud Computing for Space Sciences. arXiv:2012.10610 [cs.CV].

Peter Jenniskens and Siddha Ganju: Cameras for Allsky Meteor Surveillance (CAMS), SETI Institute. www.seti.org/cams

Peter Jenniskens and SpaceML Contributors: NASA Meteor Shower Portal, SETI Institute. meteorshowers.seti.org/

Burcin Bozkaya: A Practical Data Science Approach to Detecting Meteors with CAMS, SETI Institute, Feb. 2022. www.seti.org/practical-data-science-approach-detecting-meteors-cams

Sahyadri Krishna: My experience with the SpaceML mentorship program for CAMS, SpaceML, Dec. 2021. medium.com/spaceml-mentorship-programme-for-cams/my-experience-with-the-spaceml-mentorship-programme-for-cams-846962abb65