Applying large-scale language models outside language: Examples from materials discovery, cybersecurity, and building management

You've probably heard about the amazing capabilities of some recent AI models, such as GPT, AI21, or BLOOM. Perhaps you use one of these models yourself, either directly or through another product like Wordtune, YouWrite, Jasper, or ChatGPT. It turns out that while these models are trained on language data, they can be used for other applications as well. In my latest article, you can read more about applications in materials discovery, cybersecurity, and even building management.

Language models outside language. Image generated by Stable Diffusion.

This article was first published on www.wevolver.com.

You’ve probably heard or read about the amazing capabilities of some recent AI models, specifically large-scale language models like GPT (GPT-3 at the time of this writing), AI21, or BLOOM. Perhaps you’ve tried one of these models yourself, either directly or as part of another product, for example a writing assistant like Wordtune, YouWrite, or Jasper, or, more recently, ChatGPT.

As their name suggests, large-scale language models (LLMs) were developed and trained with language data. But it turns out that they can be applied to other use cases, outside language. GitHub Copilot is probably one of the best-known examples of this. It’s a tool that helps you write software. You describe to the model what you want to do, and it suggests code.

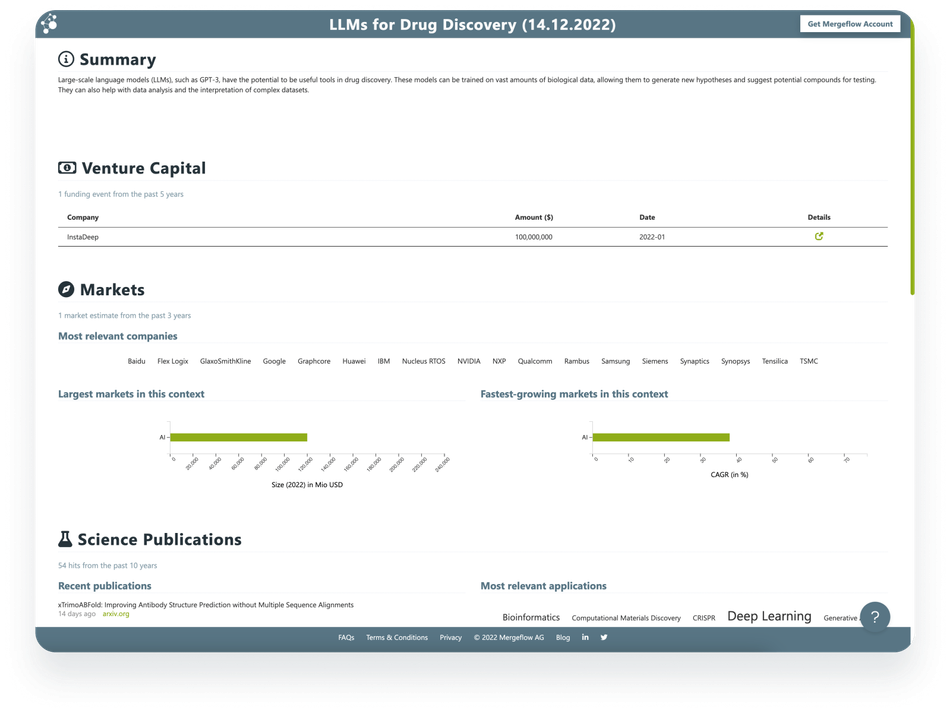

It’s also no big secret anymore that these models are starting to be used for drug discovery.

Probably because of these applications outside language, more and more people refer to these models as foundation models, rather than language models: They are the foundation for many use cases, not just language-related ones.

So, what are some other non-language examples where LLMs, or foundation models, are currently being explored? I used Mergeflow to find out.

You’ll notice that all my examples below are research papers. This might be an indicator of how nascent the field of “doing things with foundation models” is. But even if you’re a “product person”, or a “company person”, I encourage you to take a look: the time it takes to get from research paper to product can be really short when it comes to LLMs.

Materials discovery

Materials discovery is the process of identifying new materials and understanding their properties. It can help inform decisions about which materials are best suited for particular applications. And it helps accelerate the development process by providing insights into how materials behave under different conditions.

I used Mergeflow to research foundation models for materials discovery, or computational chemistry, and then zoomed in on research papers that didn’t deal with language. For example, I excluded papers on materials-related information extraction from text.

This paper, for instance…

Materials Transformers Language Models for Generative Materials Design: a benchmark study

…used data from materials databases (ICSD, OQMD, and Materials Project) to predict new materials. The authors verified the materials using DFT calculations. DFT (Density Functional Theory) calculations are used to estimate the energy and electronic properties of a molecule or crystal (I’m not a materials scientist, but I assume that this is basically like doing a plausibility check for a hypothesized material).

And this paper…

Composition based oxidation state prediction of materials using deep learning

…uses LLMs to predict oxidation states of inorganic materials. The oxidation state of an atom is the charge it would carry if all of its bonds were treated as fully ionic. An atom with a positive oxidation state has formally given up electrons, while an atom with a negative oxidation state has formally gained them. According to Mergeflow’s data and analytics, oxidation states play a role in battery and fuel cell technology, and in catalyst design.
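To make the idea of oxidation states a bit more concrete, here is a minimal sketch of the classic rule-based check: try the common oxidation states of each element and keep the combinations where the charges add up to zero. This is not the method from the paper, which uses a learned model. The `COMMON_STATES` table and the function name `neutral_assignments` are made up for this example, and the table is deliberately tiny.

```python
from itertools import product

# Illustrative table of common oxidation states (an assumption for this
# sketch; real elements can take more states than listed here).
COMMON_STATES = {
    "Li": [1],
    "Na": [1],
    "O": [-2],
    "Cl": [-1],
    "Fe": [2, 3],
    "Co": [2, 3],
}


def neutral_assignments(composition):
    """Return all charge-neutral assignments for a composition.

    composition maps element symbol -> number of atoms in the formula.
    Each element gets exactly one oxidation state, so mixed-valence
    compounds are out of scope for this simple check.
    """
    elements = list(composition)
    results = []
    # Try every combination of one candidate state per element.
    for states in product(*(COMMON_STATES[e] for e in elements)):
        total_charge = sum(s * composition[e] for s, e in zip(states, elements))
        if total_charge == 0:
            results.append(dict(zip(elements, states)))
    return results


print(neutral_assignments({"Fe": 2, "O": 3}))           # Fe2O3
print(neutral_assignments({"Li": 1, "Co": 1, "O": 2}))  # LiCoO2
print(neutral_assignments({"Fe": 3, "O": 4}))           # Fe3O4, mixed valence
```

For Fe2O3 and LiCoO2 the check finds one neutral assignment each (Fe and Co at +3). For Fe3O4, where iron occurs in two different oxidation states, it finds none. Cases like this, where simple rules run out, are presumably part of why a learned model is attractive here.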

Cybersecurity

In cybersecurity, an attack packet is a piece of data that is used to attack a computer or network. Attack packets can be used to perform a variety of attacks, including denial of service, man-in-the-middle, phishing, buffer overflow, SQL injection, and spoofing.

Imagine you’re in charge of the security of a system, and you see attack packets hitting your system. And based on what kinds of attack packets you see, you have to infer what kind of attack you might be dealing with.

When you do this, chances are you’ll see lots of such packets every day. For example, the authors of this paper…

Attack Tactic Identification by Transfer Learning of Language Model

…used data from a honeypot (= a system that aims to attract attackers and learn more about their tactics and tools) with 227,000 packets per day. Obviously, you need some kind of analytics to help you interpret such volumes of data.

And this is where foundation models come in: the authors used LLMs to learn attack patterns from (text) descriptions of known attacks, and then used the model to classify previously unseen attack packets. This is a “borderline case”: yes, the model is trained on language, but it is then used to classify data that’s not language, i.e. the attack packets.
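The general idea of matching packets against text descriptions of attacks can be sketched in a few lines. Note that this sketch swaps the language model for something much simpler, namely word-count vectors compared by cosine similarity, so it only illustrates the "compare packet to description" step, not the transfer learning from the paper. The tactic names, descriptions, and payloads below are all invented for this example.

```python
import math
import re
from collections import Counter

# Hypothetical tactic descriptions, written for this example only.
TACTIC_DESCRIPTIONS = {
    "sql_injection": (
        "attacker injects sql statements such as select union or drop "
        "into a query parameter"
    ),
    "directory_traversal": (
        "attacker requests files outside the web root using dot dot slash "
        "sequences like etc passwd"
    ),
    "brute_force_login": (
        "attacker repeatedly tries many username and password combinations "
        "against a login form"
    ),
}


def embed(text):
    """Stand-in for a language model embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(payload):
    """Return the best-matching tactic and the score for every tactic."""
    vec = embed(payload)
    scores = {
        tactic: cosine(vec, embed(description))
        for tactic, description in TACTIC_DESCRIPTIONS.items()
    }
    return max(scores, key=scores.get), scores


for payload in (
    "GET /item?id=1 UNION SELECT name FROM users",
    "GET /../../etc/passwd HTTP/1.1",
):
    tactic, _ = classify(payload)
    print(payload, "->", tactic)
```

Word overlap breaks down quickly on real traffic, because attackers rarely use the same words as the documentation. A language model helps precisely because it can place a description and a payload near each other even when they share few literal tokens.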

Building management

Building management refers to the process of overseeing the day-to-day operations of a building. It involves managing the maintenance of the building systems, such as heating, ventilation and air conditioning (HVAC), electrical systems, plumbing, and fire suppression systems. It also includes other aspects, such as tenant lease management, but the focus here is on the infrastructure aspects.

Building management is quite an active area of technology, as a report from Mergeflow shows.

Perhaps a bit similar to the cybersecurity example above, building management systems produce large amounts, or streams, of data. The challenge then is to interpret the data. For example, given data streams from all kinds of building systems, how do you know where you could optimize energy consumption?

The problem is, you need labeled data. In other words, you need to know what certain kinds of data mean (e.g. what do certain combinations of weather and other sensor data mean with respect to energy consumption?). And in order to substantially reduce the amount of hand-labeled data needed for a good model, this paper…

Semantic-Similarity-Based Schema Matching for Management of Building Energy Data

…uses a combination of foundation models and active learning. Active learning is a machine learning technique in which the algorithm itself chooses which samples should be labeled next, in order to get an accurate model with as few labeled samples as possible. It starts with a small labeled subset, asks for the labels of the most informative remaining samples (for example, the ones it is least sure about), and then uses the answers to choose the next set of samples to query. This allows the algorithm to quickly home in on a good model without every data point having to be labeled up front.
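Here is a minimal sketch of such an active learning loop, using uncertainty sampling on a toy problem. Everything in it is an assumption made for illustration: the data are evenly spaced temperature readings, the "annotator" is a function with a made-up cut-off of 24.0 above which energy consumption counts as high, and the model is a single threshold. The paper's actual setup is far richer than this.

```python
def oracle(x):
    """Stand-in for a human annotator; the 24.0 cut-off is a made-up value."""
    return x >= 24.0


def fit_threshold(labeled):
    """Put the boundary midway between the highest 'low' and lowest 'high'."""
    negatives = [x for x, is_high in labeled.items() if not is_high]
    positives = [x for x, is_high in labeled.items() if is_high]
    return (max(negatives) + min(positives)) / 2


def active_learning(pool, budget):
    """Label at most `budget` extra points, always the most uncertain one."""
    # Seed with the two extremes; this assumes they fall in different classes.
    labeled = {min(pool): oracle(min(pool)), max(pool): oracle(max(pool))}
    for _ in range(budget):
        threshold = fit_threshold(labeled)
        unlabeled = [x for x in pool if x not in labeled]
        if not unlabeled:
            break
        # Uncertainty sampling: the point closest to the current boundary
        # is the one the model is least sure about.
        query = min(unlabeled, key=lambda x: abs(x - threshold))
        labeled[query] = oracle(query)
    return fit_threshold(labeled), len(labeled)


pool = [i * 0.5 for i in range(81)]  # readings from 0.0 to 40.0
threshold, n_labels = active_learning(pool, budget=6)
accuracy = sum((x >= threshold) == oracle(x) for x in pool) / len(pool)
print(f"labels used: {n_labels} of {len(pool)}, accuracy: {accuracy:.2f}")
```

In this toy case the loop needs 8 labels out of 81 points to classify the whole pool correctly, because each query lands where the model is least certain instead of at a random point. Real building data is noisier and higher-dimensional, so the savings will be less clean, but the principle is the same.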