A Practical Guide to Understanding Sensor Fusion with Edge Impulse: Building a Smart Running Jacket

Edge AI and sensor fusion enable fast, informed decisions by analyzing data from multiple sensors directly on the device. This article explores both by building a Smart Running Jacket with the Sony Spresense and SensiEDGE CommonSense boards on the Edge Impulse platform.

Introduction

Edge AI and sensor fusion are transforming how devices understand and interact with the world. By embedding AI into devices at the network's edge and combining data from various sensors, these technologies enable real-time, efficient decision-making across diverse fields. Together, they make possible smart, responsive systems capable of sophisticated analytics and autonomous actions directly where data is collected.

This article explores these concepts through a practical application: the development of a Smart Running Jacket. Using the Sony Spresense and SensiEDGE CommonSense boards, we demonstrate how to harness sensor fusion and the Edge Impulse platform to create a wearable that distinguishes between physical activities and ambient lighting conditions and controls integrated LED lighting for improved safety and visibility.

Understanding Edge AI and Sensor Fusion

Edge AI refers to executing AI algorithms directly on a device, enabling real-time data processing without needing cloud connectivity. This approach significantly enhances the responsiveness of devices, reduces latency, and maintains user data privacy. For wearable technologies, Edge AI facilitates immediate decision-making, such as activating safety features based on the user's current activity and surrounding conditions.

Sensor Fusion involves integrating data from multiple sensors to achieve a comprehensive understanding of the device's environment. By intelligently combining inputs from various sources, such as accelerometers, gyroscopes, and light sensors, wearables can accurately interpret complex scenarios beyond the capability of any single sensor.

The Smart Running Jacket Concept

The Smart Running Jacket combines wearable technology with practical utility and is designed to enhance the safety and convenience of runners, particularly in low-light conditions. By harnessing the capabilities of the Sony Spresense board alongside the SensiEDGE CommonSense sensor suite, this jacket exemplifies the potential of Edge AI and sensor fusion in crafting responsive, user-centric wearable devices.

At its core, the jacket combines motion detection and ambient light sensing to determine the wearer's activity and the surrounding lighting conditions. During daytime or in well-lit environments, the jacket functions as normal athletic wear. However, when the ambient light diminishes, indicating dusk, dawn, or nighttime conditions, and the wearer's movement matches a running pattern, the jacket activates its built-in LED lighting system. This makes the runner more visible and alerts nearby vehicles and pedestrians to their presence.

The development process for the Smart Running Jacket presents a practical application of sensor fusion, where data from various sensors are integrated to make informed decisions. By combining inputs from an accelerometer, a light sensor, and potentially other sensors available on the CommonSense board, the jacket can accurately discern the wearer's state and adjust its features dynamically.
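To make the idea of combining inputs more concrete, here is a minimal sketch of one generic data-level fusion step: putting two sensors on a common time base. It assumes the light sensor reports less often than the accelerometer and attaches the most recent light reading to each accelerometer sample (a zero-order hold). The function name, tuple layouts, and sample values are hypothetical and are not taken from the Edge Impulse firmware.

```python
from bisect import bisect_right

# Illustrative sketch: the function name and data layout are hypothetical.


def align_zero_order_hold(imu_samples, light_samples):
    """Attach the most recent light reading to each IMU sample.

    imu_samples:   list of (t_ms, ax, ay, az) tuples, sorted by time
    light_samples: list of (t_ms, lux) tuples, sorted by time
    Returns a list of (t_ms, ax, ay, az, lux) tuples. IMU samples recorded
    before the first light reading are dropped.
    """
    light_times = [t for t, _ in light_samples]
    fused = []
    for t, ax, ay, az in imu_samples:
        # Index of the latest light reading taken at or before time t
        i = bisect_right(light_times, t) - 1
        if i < 0:
            continue  # no light reading available yet
        fused.append((t, ax, ay, az, light_samples[i][1]))
    return fused


# Usage: accelerometer every 10 ms, light sensor every 20 ms (made-up values)
imu = [(0, 0.1, 0.0, 9.8), (10, 0.3, 0.1, 9.7), (20, 0.2, 0.0, 9.9), (30, 0.4, 0.2, 9.6)]
light = [(5, 120.0), (25, 3.0)]
fused = align_zero_order_hold(imu, light)
```

Because ambient light changes slowly compared with arm motion, holding the last value is usually an acceptable approximation; interpolation would be the alternative for faster-changing signals.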

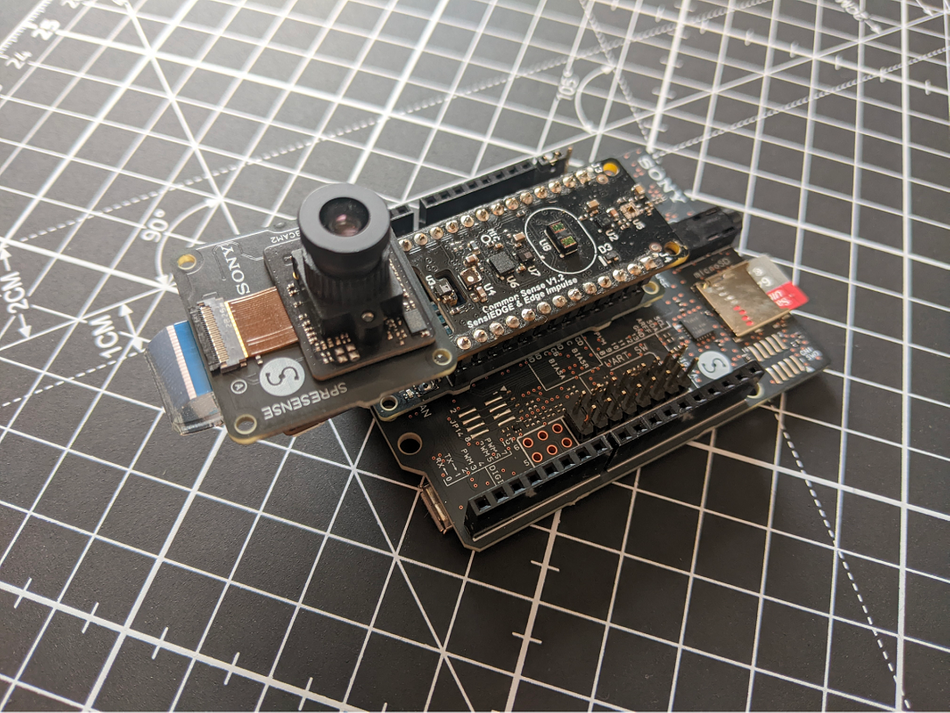

The Hardware: Sony Spresense and SensiEDGE CommonSense

The Smart Running Jacket is built on the Sony Spresense board and the SensiEDGE CommonSense sensor extension, a powerful combination for wearable technology. The Sony Spresense features a high-performance Sony CXD5602 processor with six Arm® Cortex-M4F cores, optimized for low power consumption and high computational efficiency, which makes it well suited to Edge AI tasks. It supports extensive digital I/O options for versatile connectivity and includes 1.5 MB of SRAM and 8 MB of flash memory for implementing complex algorithms.

The SensiEDGE CommonSense complements the Spresense with a rich sensor array, including air quality, accelerometer, magnetometer, gyroscope, temperature, microphone, proximity, humidity, pressure, and light sensors, among others. This broad spectrum of sensors collects the diverse environmental and motion data on which the sensor fusion behind the jacket's lighting response depends.

Suggested reading: Redefining Sensor Fusion with CommonSense addon for Sony Spresense and Edge Impulse

Project Design Flow

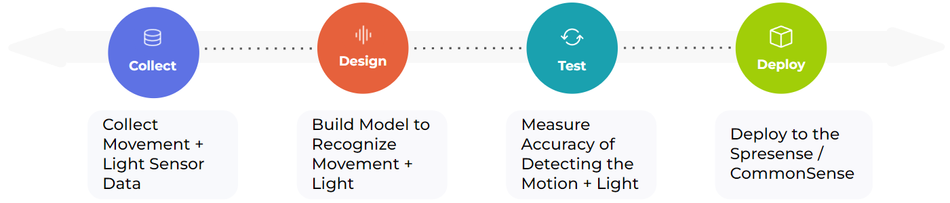

The development of the Smart Running Jacket through the Sony Spresense and SensiEDGE CommonSense involves a streamlined machine learning (ML) project design flow, essential for crafting an effective Edge AI application. This process comprises four stages:

Collect: Data collection is the first crucial step, focusing on gathering movement and ambient light data to differentiate running from other motions in both bright and dark conditions.

Design: In this phase, the machine learning model is crafted to identify running patterns and ambient light levels, utilizing algorithms suitable for the Sony Spresense's capabilities.

Test: The model's accuracy is validated against unseen data, ensuring it reliably triggers the jacket's LED lights under the correct conditions.

Deploy: The trained model is then deployed onto the Spresense board, enabling real-time decision-making and control of the jacket's safety features based on sensor inputs.

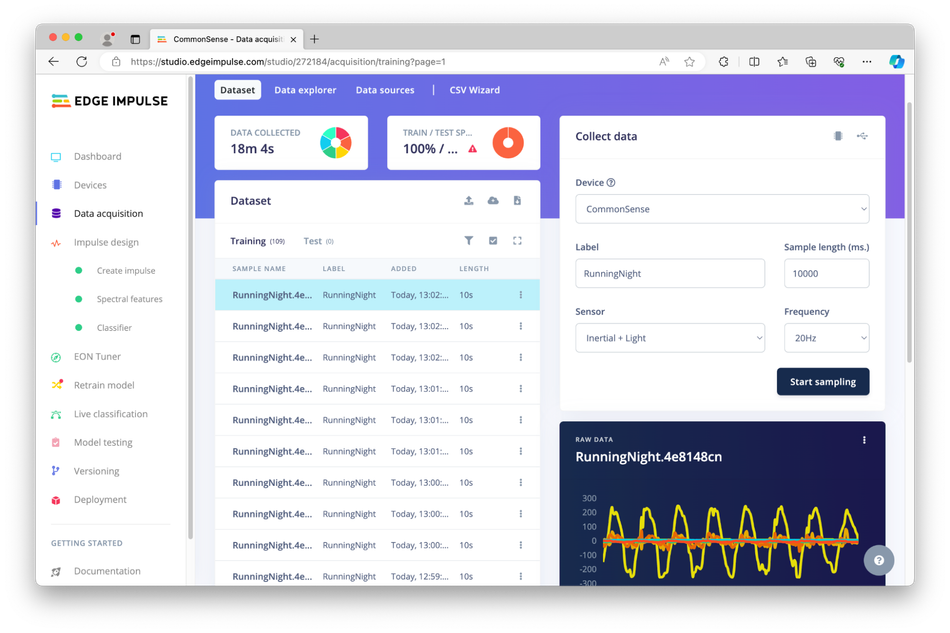

Collecting the Dataset

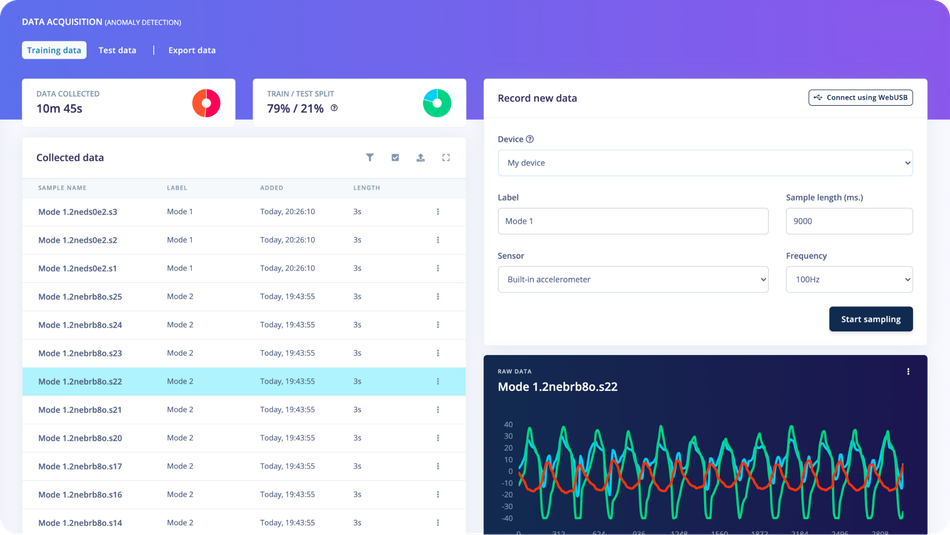

The foundation of the Smart Running Jacket project is the meticulous collection of a comprehensive dataset, utilizing the IMU (Inertial Measurement Unit) and Light Sensor on the SensiEDGE CommonSense board. This process is facilitated through Edge Impulse Studio, a robust platform designed to streamline the creation and management of machine learning datasets for edge devices.

Data Collection for Different Scenarios

The dataset aims to cover a range of activities and lighting conditions to ensure the model can accurately interpret the wearer's environment and actions. The scenarios include:

Running motion in daylight

Running motion in darkness

Idle or other motions in daylight

Idle or other motions in darkness

The critical condition for activating the jacket's built-in lighting is identified as running motion in darkness, necessitating a dataset that precisely captures this activity among others.
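Since only one of the four scenarios should switch the lights on, the classifier's output has to be reduced to a single on/off decision. The sketch below assumes the model returns one score per label. Apart from "Running in Dark", which the article uses as an example label, the label names and the 0.8 confidence threshold are hypothetical placeholders.

```python
# Hypothetical labels for the four scenarios. Only "Running in Dark" appears
# in the article; the other names are placeholders.
LABELS = ("Running in Daylight", "Running in Dark", "Idle in Daylight", "Idle in Dark")
LED_TRIGGER_LABEL = "Running in Dark"


def leds_should_be_on(scores, min_confidence=0.8):
    """Return True only if the top-scoring label is the trigger label and its
    score reaches min_confidence (a hypothetical threshold)."""
    unknown = set(scores) - set(LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    top_label = max(scores, key=scores.get)
    return top_label == LED_TRIGGER_LABEL and scores[top_label] >= min_confidence


# Usage with made-up classifier scores
night_run = {"Running in Daylight": 0.05, "Running in Dark": 0.90,
             "Idle in Daylight": 0.03, "Idle in Dark": 0.02}
print(leds_should_be_on(night_run))
```

Requiring a minimum confidence, rather than just the top label, is one way to keep the lights off when the model is unsure.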

Dataset creation

Edge Impulse Studio offers versatile methods for dataset creation, including:

Uploading existing files directly into the studio

Capturing new data from within the studio interface

Uploading files via Command Line Interface (CLI)

Connecting to cloud storage solutions like Amazon S3 or Google Cloud Storage

Cloning existing Public Projects or Demos, which come with their datasets, and using the Ingestion Service

Generating synthetic data using advanced tools like Nvidia Omniverse

Suggested reading: Revolutionizing Edge AI Model Training and Testing with Nvidia Omniverse Virtual Environments

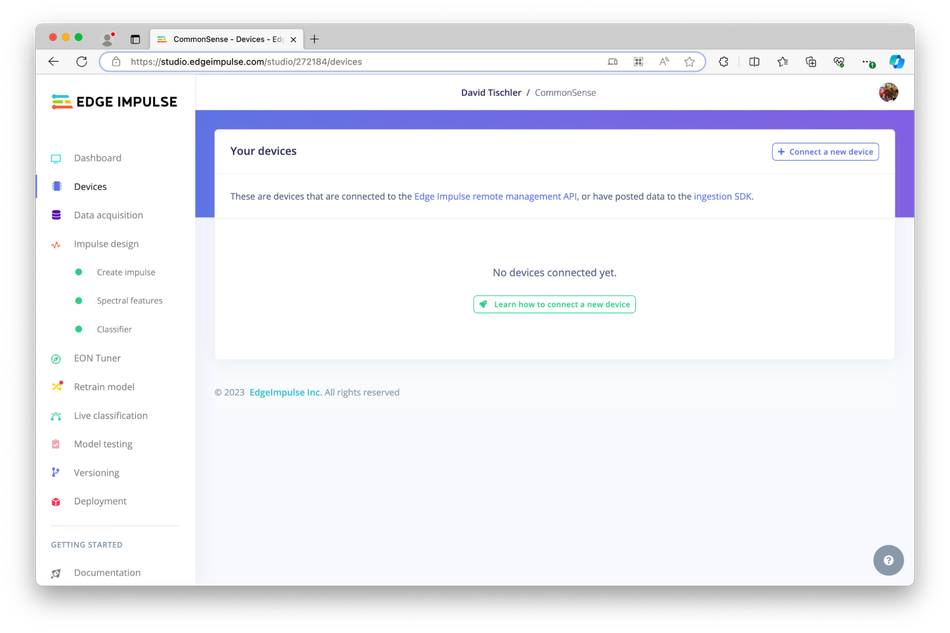

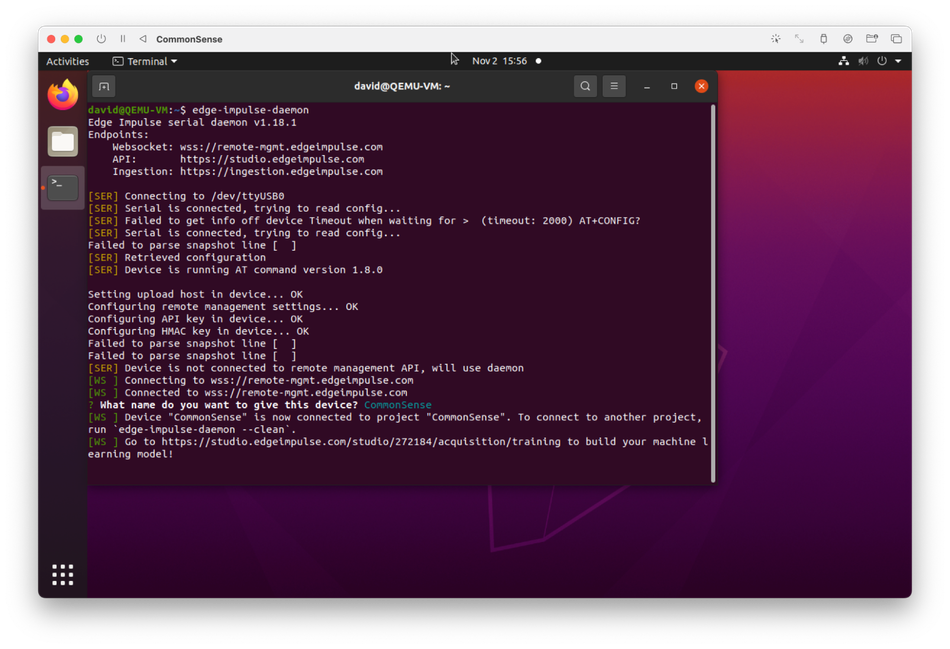

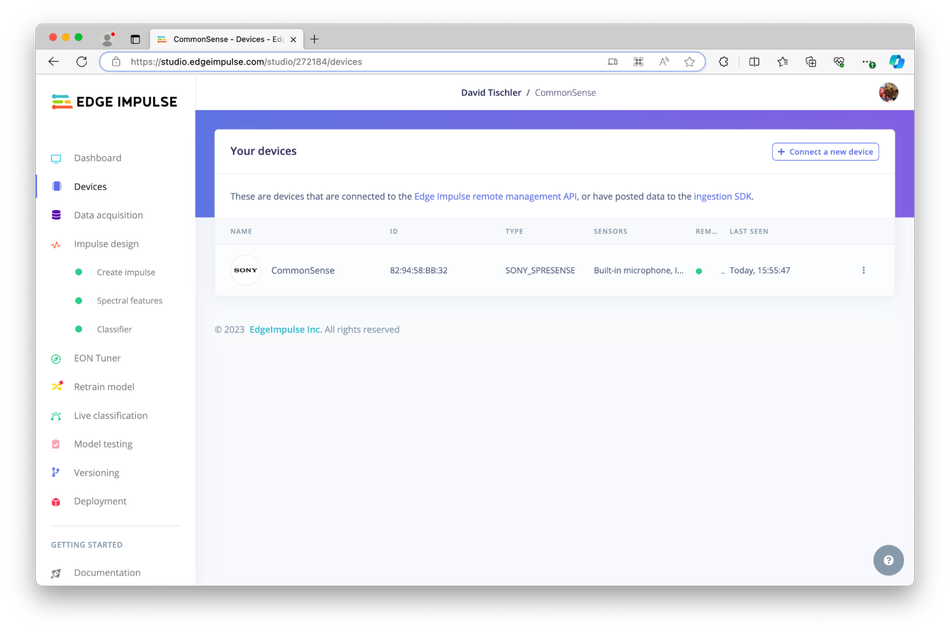

For this project, data is captured directly from the CommonSense board. To connect the Sony Spresense to Edge Impulse Studio, the following steps are required:

Installation of Edge Impulse CLI tooling, compatible with Mac, Windows, and Linux systems.

Installation of Edge Impulse firmware on the Spresense board, utilizing the provided flashing utility.

Running the Edge Impulse daemon, which establishes a connection between the Spresense board and Edge Impulse Studio.

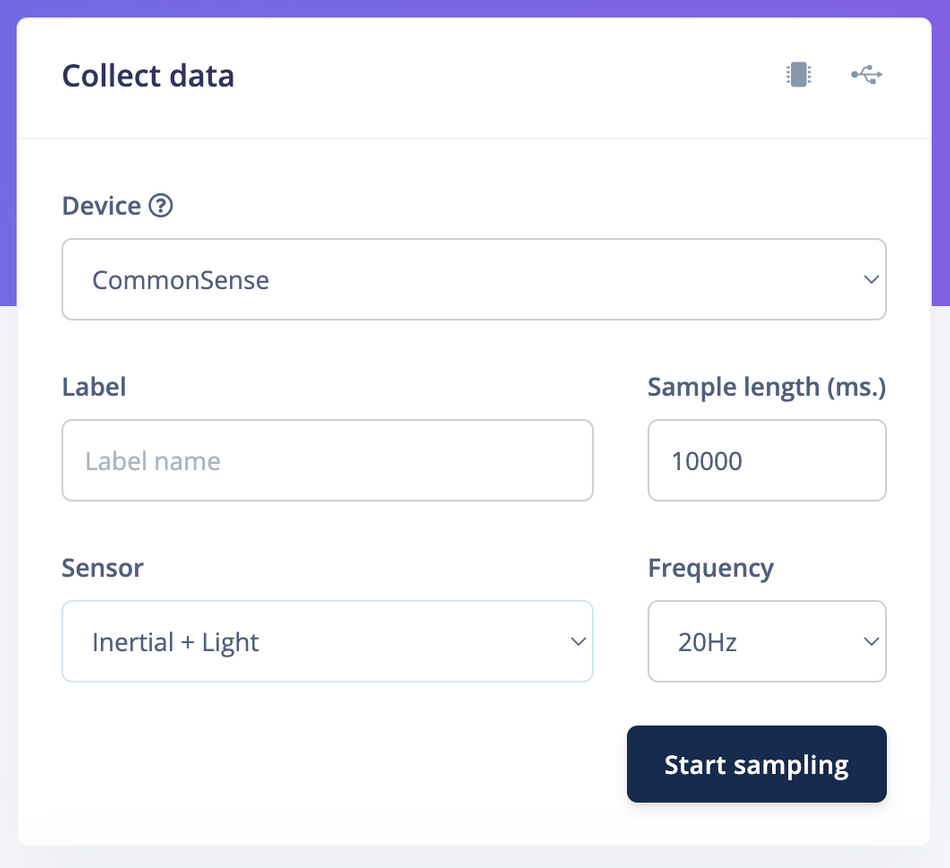

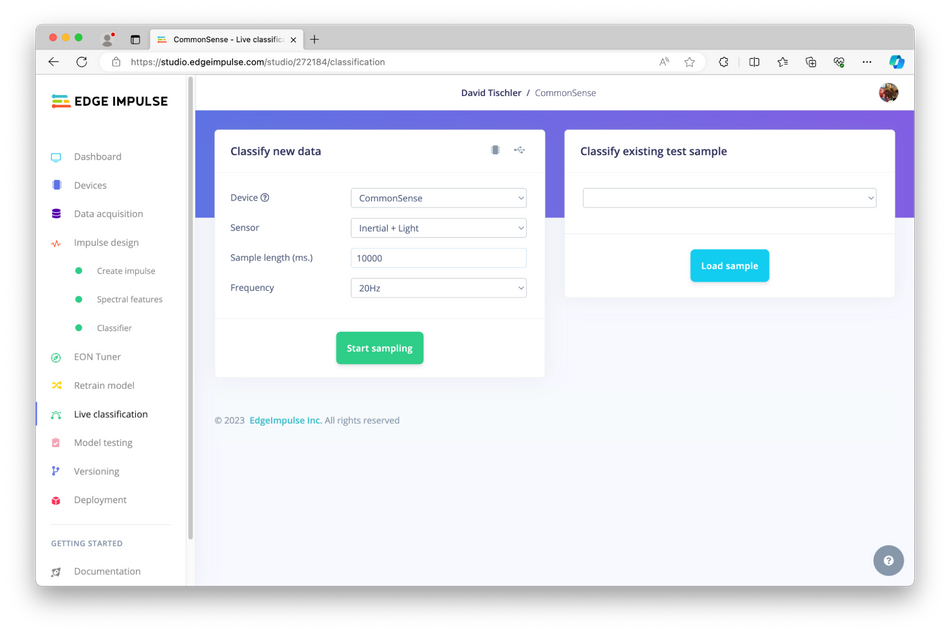

Data Sampling Process

The data sampling process begins by connecting the Spresense device to the computer as described in the documentation. In Edge Impulse Studio, users select the relevant sensor(s), assign a label to each data type (e.g., "Running in Dark"), and then start recording with the "Start Sampling" command. Alternative data sources, such as a smartphone or computer, can also be used to capture additional or supplementary training data.

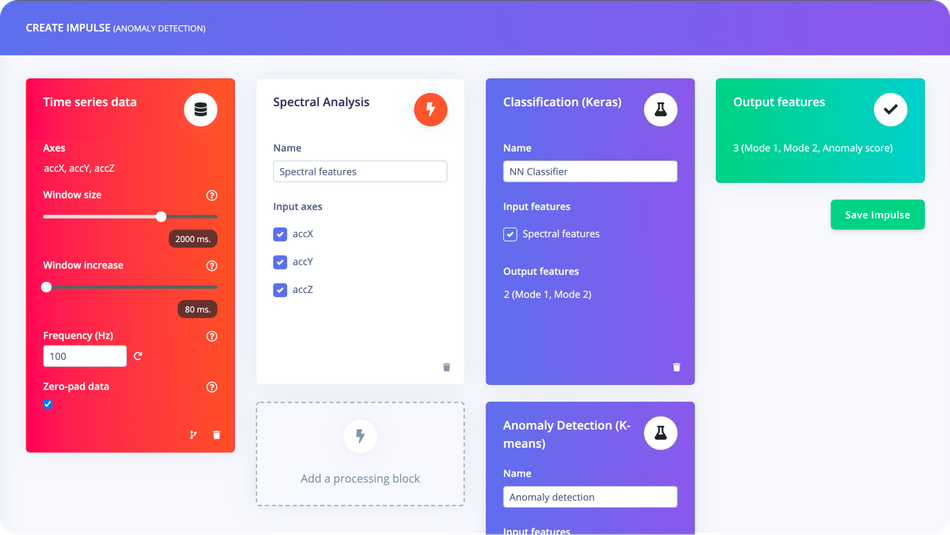

Building an Impulse

An Impulse constitutes a complete machine learning pipeline, tailored for edge devices, and comprises three fundamental building blocks: the input block, the processing block, and the learning block. This structure is designed to efficiently process and learn from sensor data, facilitating the creation of a responsive and intelligent wearable device. Check out the official documentation on building an Impulse to read more about the process.
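The input block slices the recorded stream into fixed-length windows before any features are computed. Below is a minimal sketch of that slicing, assuming an evenly sampled stream. The parameters loosely mirror the window size and window increase settings of the Studio's time-series input block, but the function itself is a hypothetical illustration. Each window would then go to the processing block for feature extraction, and the resulting features to the learning block.

```python
# Illustrative sketch of the input block's windowing; the function name is hypothetical.


def sliding_windows(samples, interval_ms, window_size_ms, window_increase_ms):
    """Split an evenly sampled multi-axis stream into (possibly overlapping) windows.

    samples:            list of per-timestep tuples, e.g. (ax, ay, az, lux)
    interval_ms:        time between consecutive samples
    window_size_ms:     length of each window
    window_increase_ms: offset between the starts of consecutive windows
    Trailing samples that do not fill a complete window are ignored.
    """
    win = window_size_ms // interval_ms       # samples per window
    step = window_increase_ms // interval_ms  # samples between window starts
    if win <= 0 or step <= 0:
        raise ValueError("window size and increase must span at least one sample")
    return [samples[start:start + win]
            for start in range(0, len(samples) - win + 1, step)]


# Usage: 10 samples at 100 ms intervals, 400 ms windows that start every 200 ms
stream = [(i, 0.0, 9.8, 50.0) for i in range(10)]
windows = sliding_windows(stream, interval_ms=100,
                          window_size_ms=400, window_increase_ms=200)
```

A window increase smaller than the window size produces overlapping windows, which yields more training examples from the same recording.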

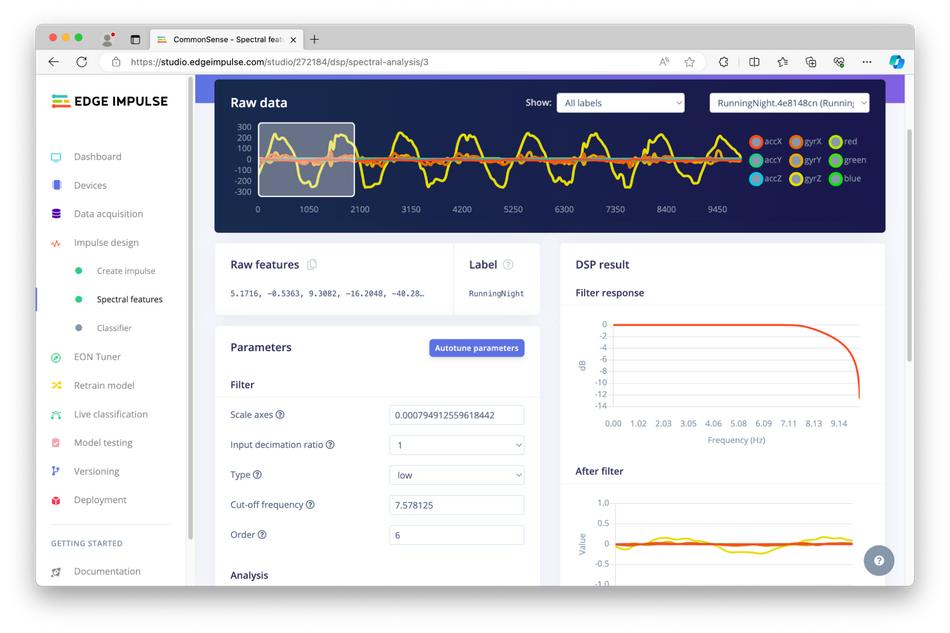

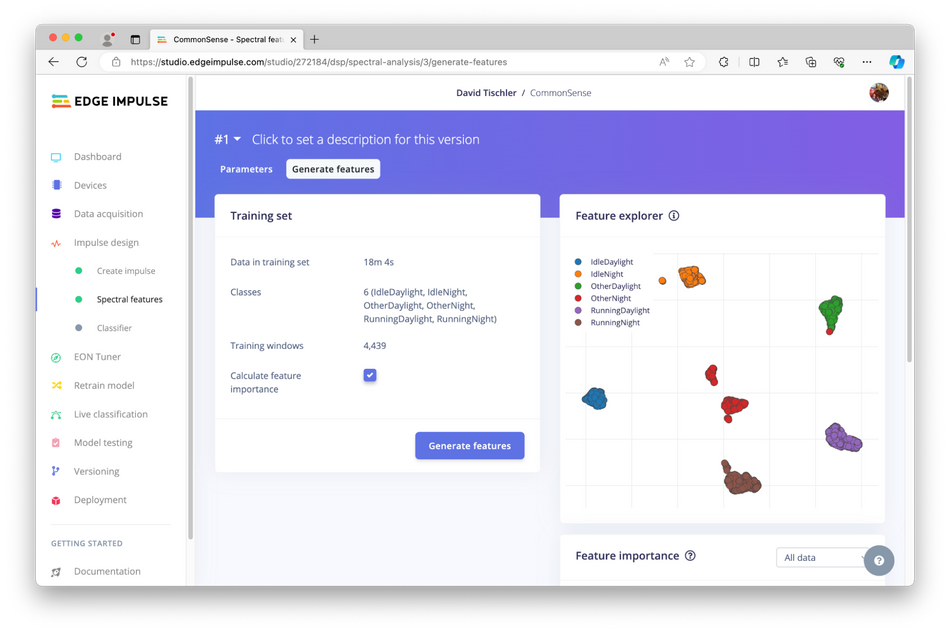

Feature Generation

Feature generation takes place in the "Spectral Features" processing block, where the digital signal processing (DSP) results are analyzed. In this stage, raw sensor data is transformed to extract the features the model needs for classification.

By clicking "Generate Features" within Edge Impulse Studio, developers can visualize how these features distribute and cluster based on the different activities and lighting conditions captured in the dataset. This visualization aids in refining the feature extraction process, ensuring that the model can distinguish between the various states effectively.
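To give a feel for what a spectral processing block computes, the sketch below derives a few features per axis: the RMS of the mean-removed signal and the spectral power in a handful of frequency bands. It uses a naive DFT so that it needs only the standard library. It is a simplified stand-in, not Edge Impulse's Spectral Features implementation, which offers further options such as filtering before the spectral analysis. The function names and the three-band split are assumptions.

```python
import cmath
import math

# Simplified, hypothetical feature extractor; not Edge Impulse's implementation.


def spectral_features(axis, n_bands=3):
    """Per-axis features: RMS of the mean-removed signal followed by the
    spectral power summed over n_bands groups of DFT bins."""
    n = len(axis)
    mean = sum(axis) / n
    centered = [x - mean for x in axis]
    rms = math.sqrt(sum(x * x for x in centered) / n)

    # One-sided power spectrum for bins 1..n//2 via a naive DFT
    # (bin 0 is the DC component, which is ~0 after mean removal)
    power = []
    for k in range(1, n // 2 + 1):
        coeff = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power.append(abs(coeff) ** 2 / n)

    # Group the bins into n_bands bands; the last band absorbs any remainder
    band_len = max(1, len(power) // n_bands)
    bands = [sum(power[b * band_len:(b + 1) * band_len]) for b in range(n_bands - 1)]
    bands.append(sum(power[(n_bands - 1) * band_len:]))
    return [rms] + bands


def window_features(window):
    """Concatenate the features of every axis (e.g. ax, ay, az, lux) of one window."""
    return [f for axis in zip(*window) for f in spectral_features(list(axis))]


# Usage: a 2-axis window, a sine on the first axis and a constant on the second
window = [(math.sin(2 * math.pi * t / 12), 50.0) for t in range(12)]
features = window_features(window)  # 4 features per axis
```

Periodic motion such as running concentrates power in the bands that contain the stride frequency, while a steady light level contributes almost nothing; differences like these are what the feature explorer makes visible.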

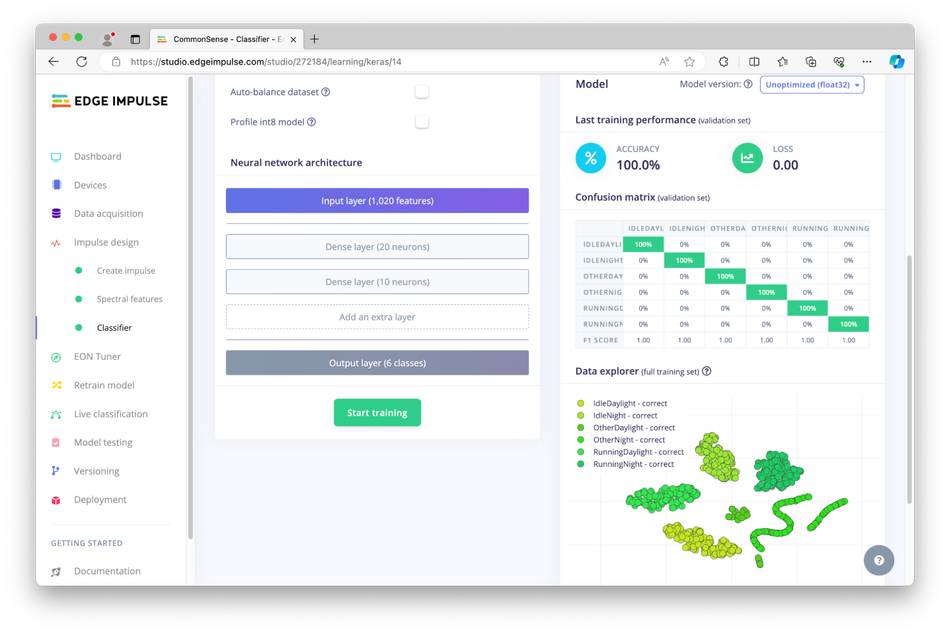

Neural Network Classifier

With the features generated, the next step is to train the neural network classifier. Edge Impulse Studio offers a wide array of options and settings, including an "Expert Mode" for advanced customization of the model, as explained in the official documentation. Training a neural network is a compute-intensive process that may vary in duration depending on the dataset's size and complexity.

Once the "Start training" button is clicked, the platform trains the neural network on the generated features, learning the patterns associated with each activity and lighting condition.
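Once trained, the classifier maps a feature vector to one probability per class. The sketch below shows that inference step for a small fully connected network with ReLU hidden layers and a softmax output. The layer layout, function names, and toy weights are hypothetical: the real architecture and learned weights come from the training run in Edge Impulse Studio, and this is not the code Edge Impulse generates.

```python
import math

# Toy forward pass for illustration; layer layout and weights are hypothetical.


def dense(inputs, weights, biases):
    """Fully connected layer: weights[j][i] connects input i to output j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(logits):
    m = max(logits)  # subtract the largest logit for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features, layers):
    """Forward pass: ReLU hidden layers, then a softmax output layer.

    layers: list of (weights, biases) pairs; the last pair is the output layer.
    """
    x = features
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))
    out_weights, out_biases = layers[-1]
    return softmax(dense(x, out_weights, out_biases))


# Usage with hand-picked toy weights (2 features -> 2 hidden units -> 2 classes)
toy_layers = [
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),  # hidden layer
    ([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]),  # output layer
]
probs = classify([1.0, -1.0], toy_layers)
```

Training adjusts the weights and biases so that, for each labeled window, the probability of the correct class is as high as possible.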

Upon completion, the platform reports the model's accuracy and shows how well it separates the different states. In our case, the model reached 100% accuracy, indicating a well-separated dataset in which each motion and lighting condition is distinctly recognized.

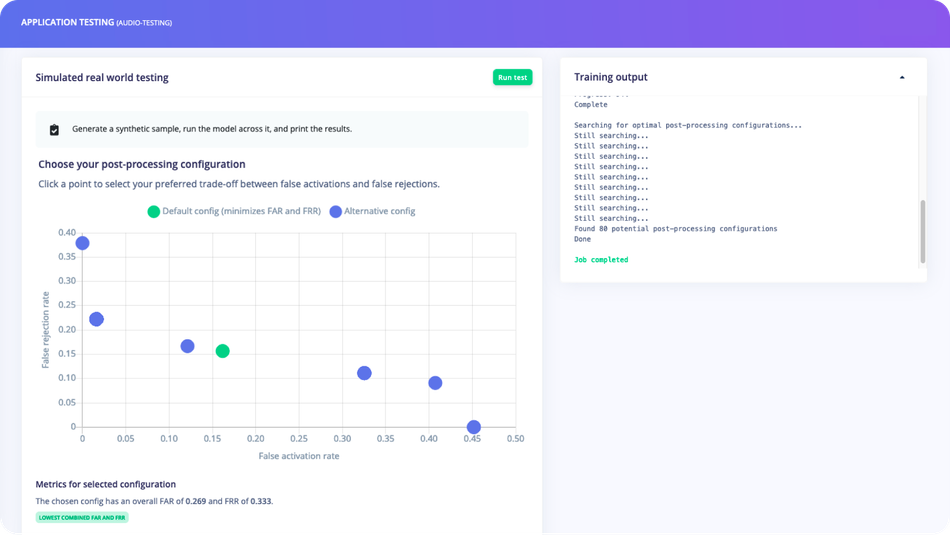

Model Testing

Model testing is a crucial phase in validating the Smart Running Jacket's neural network classifier. It involves evaluating the model's performance using unseen data and live conditions to ensure it accurately predicts activity and lighting states.

This process helps identify any necessary refinements, utilizing metrics like accuracy and precision. Iterative improvements are made based on test results, ensuring the model's reliability in real-world scenarios, ultimately confirming the jacket's intelligent response system functions as intended for user safety and convenience.
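Accuracy and precision, the metrics mentioned above, can be computed from the true and predicted labels of the test samples. Below is a minimal sketch, assuming plain label lists. Per-class precision is the share of samples predicted as a class that actually belong to it. The function name and the label strings in the usage example are hypothetical.

```python
from collections import Counter

# Minimal metrics sketch; the function name and example labels are hypothetical.


def accuracy_and_precision(y_true, y_pred):
    """Return overall accuracy and a dict of per-class precision.

    Precision for a class = correct predictions of that class / all predictions
    of that class. Classes that were never predicted are omitted.
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("expected two non-empty label lists of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    predicted_counts = Counter(y_pred)
    true_positives = Counter(p for t, p in zip(y_true, y_pred) if t == p)
    precision = {label: true_positives[label] / count
                 for label, count in predicted_counts.items()}
    return correct / len(y_true), precision


# Usage with made-up test results
truth = ["Running in Dark", "Running in Dark", "Idle in Daylight", "Idle in Daylight"]
preds = ["Running in Dark", "Idle in Daylight", "Idle in Daylight", "Idle in Daylight"]
acc, prec = accuracy_and_precision(truth, preds)
```

For this jacket, precision on the running-in-darkness class is worth watching: low precision means the LEDs would switch on when the wearer is not actually running in the dark.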

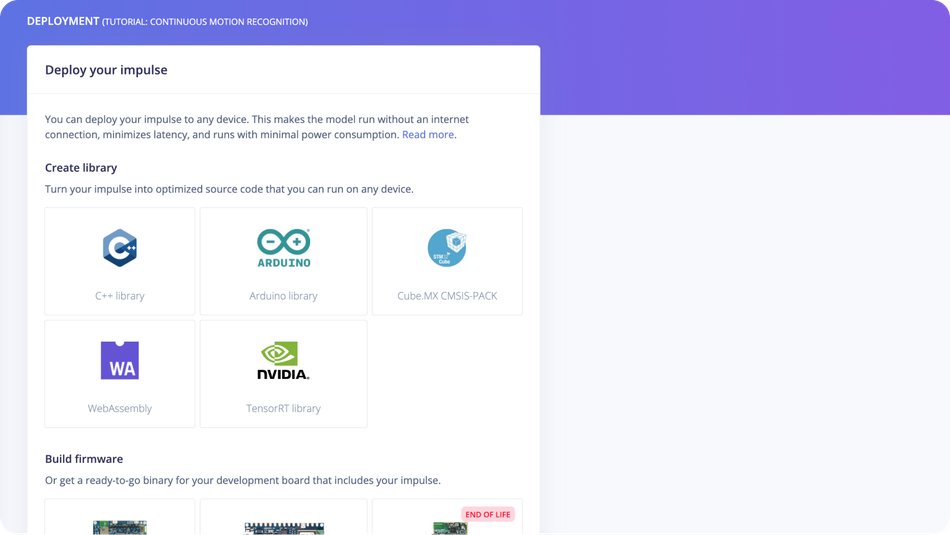

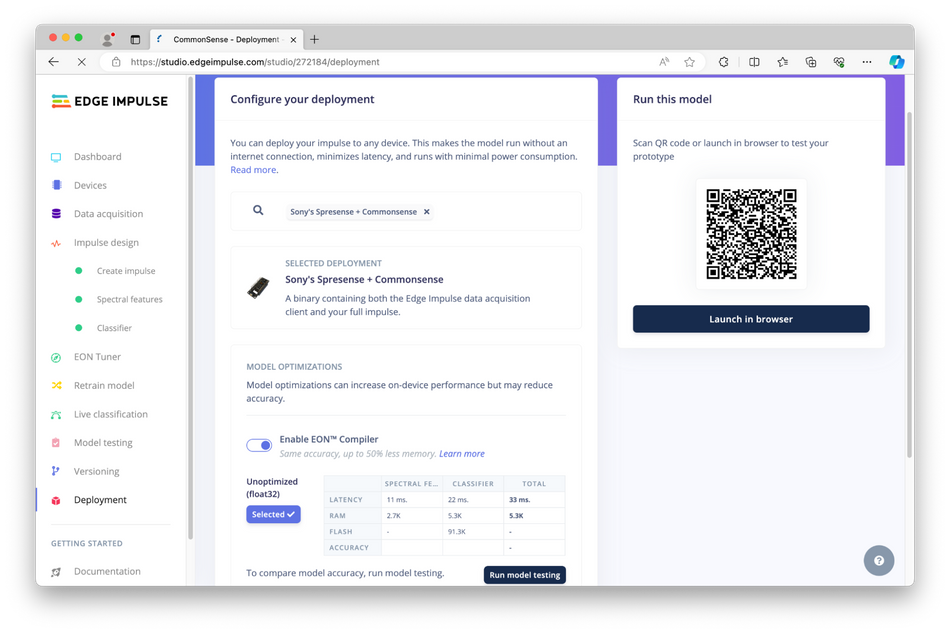

Deployment

The deployment phase turns the Smart Running Jacket from a prototype into a functional device. It starts with selecting the deployment option for the Sony Spresense and CommonSense setup and building firmware that runs inference and prints the results to the CLI.

Other deployment options, such as an Arduino library or a C++ library, cater to different integration needs within larger applications.

After clicking "Build Model" in Edge Impulse, the firmware is downloaded. Once the archive is unzipped, the Sony Spresense is connected via USB and flashed using the script for the host operating system. Inference is then started by running the edge-impulse-run-impulse command in the CLI, the final step in bringing the Smart Running Jacket's intelligent features to life.

Trying out the Smart Jacket

The final stage of the Smart Running Jacket's development involves rigorous practical testing to ensure its sensors and algorithms perform accurately under real-world conditions.

During daylight, the light sensor registered varying levels of illumination, reflecting natural fluctuations in light intensity caused by the jacket's movement.

Conversely, nighttime conditions yielded significantly lower light intensity readings, aligning with expectations for the jacket's LED activation feature. Moreover, the accelerometer and gyroscope data showed distinct patterns when the wearer was walking or running, with a repeating motion pattern indicative of hand movement.

These observations confirmed the jacket's capability to differentiate between different levels of activity and ambient light conditions, essential for its intelligent operation.

With an expanded dataset, the jacket could also be trained to recognize other conditions, such as driving.

Prototype to Production

Transitioning the Smart Running Jacket from prototype to production involves key firmware and hardware considerations. While the initial phase utilized a binary firmware download, options exist to utilize a library or modify the open-source base firmware available on GitHub.

On the hardware front, although Sony Spresense and SensiEDGE CommonSense serve as developer boards, there's potential to design custom PCBs using the CXD5602 processor or streamline the CommonSense board. Integration includes connecting LED lighting to available pins and incorporating a battery for power, marking significant steps towards a market-ready product.
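Before the LED output is wired to a pin, it can help to smooth the per-window classifications so that a single misclassified window does not make the lights flicker. The sketch below uses a majority vote over the last five windows. The class name, window count, and label are hypothetical, and driving the actual GPIO pin is left out because it depends on the chosen firmware and hardware.

```python
from collections import deque

# Hypothetical smoothing logic; driving the real LED pin is intentionally omitted.


class LedController:
    """Switch the LEDs on only while a majority of the last `history_len`
    window classifications match the trigger label."""

    def __init__(self, trigger_label="Running in Dark", history_len=5):
        self.trigger_label = trigger_label
        self.history = deque(maxlen=history_len)
        self.leds_on = False

    def update(self, top_label):
        """Record the latest top-scoring label and return the new LED state."""
        self.history.append(top_label)
        hits = sum(label == self.trigger_label for label in self.history)
        # Majority of the full history length, so a short burst at start-up
        # is not enough to turn the LEDs on
        self.leds_on = hits > self.history.maxlen // 2
        return self.leds_on


# Usage: three "Running in Dark" windows turn the LEDs on; one stray window does not turn them off
ctl = LedController()
states = [ctl.update(label) for label in
          ["Running in Dark"] * 3 + ["Idle in Dark"] * 3]
```

A longer history makes the lights steadier but slower to react when the wearer starts or stops running, so the window count is a trade-off to tune on real data.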

The electronics can be seamlessly integrated into the jacket, with LED systems sewn into the fabric and the charging port hidden inside a pocket. Cabling can be discreetly routed between fabric layers or along seams, ensuring functionality without compromising aesthetics or comfort.

This holistic approach encapsulates the journey from an innovative concept to a wearable technology product ready for the consumer market.

Conclusion

Using the example of a Smart Running Jacket, we showcased the transformative potential of Edge AI and sensor fusion in wearable technology. This project, from concept through to practical application, shows how these technologies can be harnessed to enhance user safety and experience.