The Scalability Challenge: Focusing on Hybrid AI in the Future of Generative AI Models

How on-device and cloud AI will foster the advancement and scalability of next-generation generative AI experiences

This article was discussed in our Next Byte podcast.


This is the second article in a short series.

Introduction

Generative AI is a rapidly advancing field of artificial intelligence (AI) focused on creating novel data such as images, text, or music. Generative models are trained on extensive datasets, which enables them to produce new data resembling what they were trained on. The field's rapid growth owes much to several key factors: the availability of large datasets, improved computing hardware, and the evolution of novel machine learning (ML) algorithms and architectures.

Chatbots based on large language models (LLMs), such as ChatGPT, which can generate coherent, contextually relevant text and even images, have captured the interest and imagination of researchers, businesses, and the public. Generative models have demonstrated remarkable capabilities across a range of applications, from natural language understanding and text generation to creative content creation and virtual assistants. However, as these models grow in complexity and sophistication, they face increasingly pressing scalability challenges in both training and inference.

These challenges include the need for significant computational resources, ever-larger models, and rising costs. Latency and real-time responsiveness relate primarily to the inference phase, while ethical considerations, interoperability, privacy, energy consumption, and cost management matter throughout the entire lifecycle of AI systems, from training to inference.

On-device AI is one promising response to these challenges. Deploying AI models directly on user devices (such as smartphones and tablets) offers multiple advantages. It reduces reliance on cloud resources, cutting computational and energy costs. It improves privacy by processing data locally, so sensitive information does not need to be sent to the cloud. It also lowers latency, supports offline functionality, and aligns with sustainability goals by reducing energy consumption.

What is Hybrid AI?

To define hybrid AI properly, we first need to introduce on-device AI. On-device AI, also known as edge AI or local AI, refers to deploying AI algorithms and models directly on user devices (smartphones, tablets, IoT devices, autonomous vehicles, etc.) without heavy reliance on cloud-based infrastructure. It enables real-time processing and decision-making on the device itself, reducing the need for constant data transmission to remote servers. Hybrid AI, in turn, combines local devices and cloud infrastructure, strategically dividing AI computations between them to optimize both user experience and resource efficiency.

In device-centric setups, AI processing occurs predominantly on the device, which delegates tasks to the cloud as needed. In cloud-centric setups, devices opportunistically offload AI workloads from the cloud where their capabilities allow. Adopting a hybrid AI architecture, or relying solely on on-device AI, yields numerous advantages, including cost savings, improved energy efficiency, better performance, stronger privacy and security, and personalized experiences at global scale.

Within a hybrid AI framework, on-device processing delivers consistent performance that can rival or surpass cloud-based alternatives, particularly when cloud servers are congested or network connectivity is poor. High demand for generative AI queries in the cloud can produce long queues and elevated latency and, in extreme cases, service disruptions. Redistributing computational workloads to edge devices can mitigate these problems.
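
To make the congestion argument concrete, here is a minimal Python sketch of a latency-based offload decision. It assumes the device can estimate its own inference time and receives some estimate of the cloud queue wait, network round-trip time, and server compute time. `CloudStatus`, `choose_target`, and all millisecond figures are hypothetical illustrations, not part of any real hybrid AI API.

```python
from dataclasses import dataclass

@dataclass
class CloudStatus:
    """Hypothetical snapshot of cloud conditions reported to the device."""
    queue_wait_ms: float    # time a request waits before a server picks it up
    network_rtt_ms: float   # round-trip time between device and data center
    compute_ms: float       # server-side inference time once the request runs
    available: bool = True  # False models an outage or service disruption

def estimated_cloud_latency_ms(status: CloudStatus) -> float:
    """Total latency the user would see for a cloud request."""
    return status.queue_wait_ms + status.network_rtt_ms + status.compute_ms

def choose_target(on_device_ms: float, status: CloudStatus) -> str:
    """Pick whichever execution target is expected to respond sooner."""
    if not status.available:
        return "device"
    return "device" if on_device_ms <= estimated_cloud_latency_ms(status) else "cloud"

# Quiet cloud: fast servers beat a slower local model.
quiet = CloudStatus(queue_wait_ms=20, network_rtt_ms=60, compute_ms=150)
# Congested cloud: a long queue tips the balance toward the device.
busy = CloudStatus(queue_wait_ms=3000, network_rtt_ms=60, compute_ms=150)

print(choose_target(on_device_ms=900, status=quiet))  # cloud
print(choose_target(on_device_ms=900, status=busy))   # device
```

The same local model "loses" when the cloud is idle and "wins" once queueing dominates, which is exactly the situation the paragraph describes.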

Furthermore, on-device processing capabilities in a hybrid AI architecture let generative AI applications keep working in locations with limited or no connectivity. In a device-centric hybrid AI setup, the device is the central point of operation, and cloud resources are used mainly for tasks that exceed the device's processing capacity. Because many generative AI models can run efficiently on the device, edge devices can handle the bulk of the processing load. As on-device AI continues to develop, it holds significant potential to revolutionize how AI services are deployed and experienced, while balancing performance with ethical considerations.
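
A device-centric policy can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: it treats available memory as the only measure of "processing capacity" and a single boolean as connectivity. `Task`, `DeviceState`, `route`, and the memory figures are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Task:
    """Hypothetical description of a generative AI request."""
    name: str
    model_memory_mb: int  # memory the model needs at inference time

@dataclass
class DeviceState:
    """Hypothetical snapshot of the device's current resources."""
    free_memory_mb: int
    online: bool

def route(task: Task, device: DeviceState) -> str:
    """Device-centric policy: run on the device whenever the task fits,
    and delegate to the cloud only when it exceeds local capacity."""
    if task.model_memory_mb <= device.free_memory_mb:
        return "device"       # fits locally, so it works even offline
    if device.online:
        return "cloud"        # too large for the device: delegate
    return "unavailable"      # too large and offline: cannot run now

phone = DeviceState(free_memory_mb=3000, online=False)
print(route(Task("image-edit", 1200), phone))         # device
print(route(Task("large-chat-model", 40000), phone))  # unavailable
```

Checking local capacity first is what makes the setup device-centric: the cloud is only a fallback, so anything that fits on the phone keeps working without a connection.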

Unique Benefits of On-Device AI

On-device AI offers several unique benefits, including:

Cost Efficiency

By processing data locally, on-device AI reduces the need for extensive cloud infrastructure, which can be expensive to maintain and scale, and it avoids sending data to the cloud, a process that can be both costly and time-consuming. For instance, on-device image generation can produce high-quality images in real time without relying on cloud-based servers. A mobile photography application equipped with generative AI can apply artistic filters, improve image quality, or create artistic effects directly on the user's device.

This lets users edit and generate images quickly and seamlessly without uploading them to a remote server, which both preserves privacy and reduces latency. It also enables offline image generation, so users can create and edit images even in locations with poor or no internet connectivity. On-device image generation of this kind can significantly improve the user experience and provide powerful content-creation tools without compromising data privacy or speed.

Energy Savings

On-device AI mitigates energy consumption by performing computations locally. This is particularly important for devices with constrained battery life, such as smartphones and wearables. When the power efficiency of running generative AI models on edge devices is compared with running them in the cloud, edge devices offer superior performance per watt and lower energy consumption, potentially yielding environmental and cost benefits.
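
"Performance per watt" reduces to simple arithmetic: energy per inference is average power multiplied by time, and throughput divided by power equals inferences per joule. The Python sketch below shows that calculation. The power and latency values are invented placeholders chosen only to exercise the formulas; they are not measurements of any real device or data center, and a real comparison would need measured figures for each platform.

```python
def energy_per_inference_j(power_w: float, latency_s: float) -> float:
    """Energy for one inference in joules: average power (W) x time (s)."""
    return power_w * latency_s

def inferences_per_joule(power_w: float, latency_s: float) -> float:
    """Performance per watt: (inferences per second) / watts,
    which simplifies to inferences per joule."""
    throughput = 1.0 / latency_s  # inferences per second
    return throughput / power_w

# Placeholder figures, NOT measurements of any real hardware.
targets = {
    "edge NPU (hypothetical)": (4.0, 2.0),           # 4 W for 2.0 s per inference
    "cloud GPU share (hypothetical)": (250.0, 0.2),  # 250 W for 0.2 s per inference
}
for name, (power_w, latency_s) in targets.items():
    print(f"{name}: {energy_per_inference_j(power_w, latency_s):.1f} J/inference, "
          f"{inferences_per_joule(power_w, latency_s):.3f} inferences/J")
```

Note that a slower device can still win on energy if its power draw is proportionally lower; the comparison depends on the product of power and time, not on speed alone.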

Performance and Low Latency

By processing tasks locally, on-device AI minimizes the communication and computing delays associated with cloud-based AI. This is particularly important in real-time applications such as autonomous vehicles, medical devices, and interactive content, where even milliseconds of delay can significantly affect functionality and user experience.

Enhanced Privacy and Security

Keeping data on the device reduces the risk of data breaches and unauthorized access during data transfer and cloud storage. Users can be more confident that their personal information remains on their devices, which reinforces privacy and security, especially for applications handling sensitive data such as financial transactions and medical information.

Personalization and User Experience

On-device models can adapt to the user's context and needs, improving the experience by recommending relevant products or services based on the user's preferences and current circumstances. For example, a shopping application could use on-device AI to recommend products similar to past purchases, or factor in the user's location and weather conditions. Real-time personalization boosts relevance, engagement, and customer satisfaction.
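
The shopping example can be sketched as a small on-device recommender. This Python sketch assumes, hypothetically, that the app ships precomputed item embeddings and simple weather tags; it ranks unpurchased items by cosine similarity to the average of the user's past purchases and adds a fixed boost for items matching the current weather. The catalog, tags, and `weather_boost` value are illustrative, and real systems typically use learned models rather than this heuristic.

```python
import math

# Hypothetical item embeddings stored on the device.
CATALOG = {
    "rain jacket":  [0.9, 0.1, 0.0],
    "umbrella":     [0.8, 0.0, 0.2],
    "sunglasses":   [0.0, 0.9, 0.1],
    "hiking boots": [0.6, 0.3, 0.4],
}
# Hypothetical tags marking which items suit which weather.
WEATHER_TAGS = {"rain jacket": "rain", "umbrella": "rain", "sunglasses": "sun"}

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def recommend(past_purchases, weather, k=2, weather_boost=0.2):
    """Rank items the user has not bought by similarity to their history,
    boosting items that match the current weather. No data leaves the device."""
    vectors = [CATALOG[item] for item in past_purchases]
    # Average the purchase embeddings into a simple user profile.
    profile = [sum(dim) / len(vectors) for dim in zip(*vectors)]
    scores = {}
    for item, vec in CATALOG.items():
        if item in past_purchases:
            continue
        score = cosine(profile, vec)
        if WEATHER_TAGS.get(item) == weather:
            score += weather_boost
        scores[item] = score
    return sorted(scores, key=scores.get, reverse=True)[:k]

print(recommend(["rain jacket"], weather="rain"))  # ['umbrella', 'hiking boots']
```

Because both the purchase history and the weather signal stay local, this kind of personalization does not require uploading the user's profile to a server.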

Case Studies: On-Device AI in Action

The following case studies illustrate the transformative potential and benefits of on-device AI across different domains.

Automotive

In the automotive industry, AI-driven cockpits are revolutionizing the driving experience. Much like smartphones and PCs, these systems are starting to use in-vehicle digital assistants powered by generative AI to create highly personalized, hands-free interactions for drivers and passengers.

These digital assistants draw on personal data and vehicle sensor data, including from cameras and microphones, to improve the journey. Third-party services can also integrate seamlessly, improving navigation, offering proactive assistance, and enabling in-car commerce such as ordering food. The cockpit experience is further improved through individual recognition, customized media content, and the rise of in-vehicle augmented reality. Vehicle maintenance becomes proactive as AI digital assistants analyze data to predict maintenance needs, communicate with users in natural language, and provide precise repair guidance. This proactive approach not only improves reliability but also reduces costs.

Qualcomm leads the field in cockpit and in-vehicle infotainment solutions, with numerous global automakers choosing Snapdragon® Cockpit Platforms. These platforms power digital cockpits, many of which are already in production or in the design stage. Partners include Honda, Mercedes, Renault, Volvo, and others. The latest iteration of the Snapdragon Cockpit Platform focuses on superior in-vehicle user experiences, safety, comfort, and reliability, setting new standards for connected-car digital cockpit solutions. Qualcomm's Snapdragon Ride™ Platform complements it with scalable automated-driving SoC platforms for advanced driver-assistance systems (ADAS), featuring vision perception, parking, and path planning.

Extended Reality

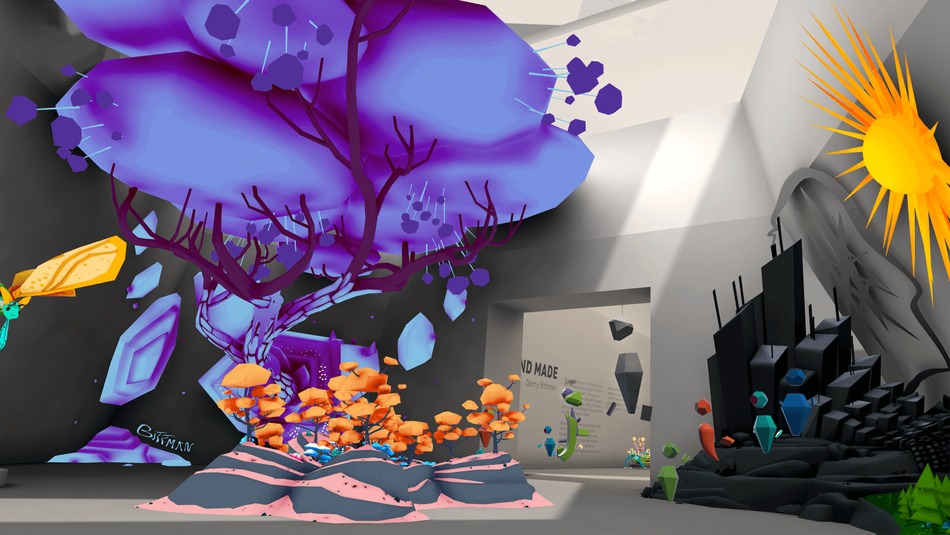

For extended reality (XR), generative AI has the potential to democratize 3D content creation. In the near future, AI rendering tools will let content creators generate 3D objects and entire virtual worlds from inputs such as text, speech, images, or video. Text-to-text models will also enable lifelike conversations with avatars, transforming the way we interact with XR content. Despite these exciting possibilities, timelines for widespread adoption remain uncertain, although significant progress is expected soon. Immersive XR experiences will benefit from text-to-image models that generate realistic textures, which are likely to be available on smartphones and XR devices within a year.

These capabilities will involve distributed processing, with headsets handling perception and rendering, while generative AI models run on paired smartphones or in the cloud. In a few years, text-to-3D and image-to-3D models will likely produce high-quality 3D objects, evolving to generate entire scenes and rooms. Eventually, XR may enable users to immerse themselves in virtual worlds constructed from their imagination. Virtual avatars will undergo a similar evolution, utilizing text-to-text models for natural conversations and text-to-image models for outfits. Over time, avatars may become increasingly photorealistic, fully animated, and mass-producible, driven by image-to-3D and encoder/decoder models. This progression promises to revolutionize how we create and experience immersive XR content, with continual advancements anticipated in the years ahead.

Challenges and Solutions

The technical challenges specific to hybrid AI are being actively addressed through ongoing research and innovation:

Model Size

Model size is a notable challenge, particularly on resource-constrained devices, because large models are difficult to store and run efficiently. One potential solution is to apply compression and quantization techniques that shrink models while maintaining accuracy. Qualcomm is actively researching improved quantization techniques, specifically aiming to quantize 32-bit floating-point neural network parameters to 8-bit or 4-bit integers without sacrificing accuracy. As part of Qualcomm AI Research's ongoing efforts, this work spans various model quantization methods, such as Bayesian learning, adaptive rounding, and transformer quantization.
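
The core idea of quantizing FP32 parameters to INT8 or INT4 can be shown with the simplest scheme, symmetric per-tensor round-to-nearest quantization. This is a minimal Python sketch for illustration only; it is not AIMET's implementation, nor any of the research methods named above, which choose rounding and bit widths more carefully to preserve accuracy. The weight values are arbitrary.

```python
def quantize_symmetric(weights, num_bits=8):
    """Symmetric, per-tensor, round-to-nearest quantization of float
    weights to signed integers that fit in num_bits bits."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Divide by the scale, round, and clamp into [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.12, -0.5, 0.33, 0.9, -0.07]
for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"INT{bits}: q={q}, max error={max_err:.4f}")
```

With only 15 usable levels, INT4 shows a visibly larger reconstruction error than INT8 here, which is why more sophisticated techniques are needed to keep 4-bit models accurate.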

For instance, the Qualcomm AI Research team used the AI Model Efficiency Toolkit (AIMET) to quantize the 32-bit floating-point Stable Diffusion model to an 8-bit integer format with nearly the same accuracy. By optimizing and scaling the model for INT8 operations instead of FP32, Qualcomm enabled Stable Diffusion to run efficiently on handheld devices without internet connectivity, significantly reducing the model's size and improving performance on low-power Snapdragon AI accelerators.
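
A back-of-the-envelope estimate shows why INT8 matters for fitting such a model on a handheld device. It assumes roughly one billion parameters, an order-of-magnitude figure rather than an exact count, counts weight storage only (ignoring activations and runtime overhead), and takes 1 GB as $10^9$ bytes.

```latex
\[
  \text{weight storage} \approx N \times \frac{b}{8}\ \text{bytes},
  \qquad N \approx 10^{9}\ \text{parameters},\quad b = \text{bits per parameter}
\]
\[
  \underbrace{10^{9} \times \tfrac{32}{8}}_{\text{FP32}} = 4\ \text{GB},
  \qquad
  \underbrace{10^{9} \times \tfrac{8}{8}}_{\text{INT8}} = 1\ \text{GB},
  \qquad
  \underbrace{10^{9} \times \tfrac{4}{8}}_{\text{INT4}} = 0.5\ \text{GB}
\]
```

Moving from FP32 to INT8 cuts weight storage, and the memory traffic needed to read those weights, by a factor of 4; INT4 halves it again.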

Computational Power

Large AI models can place heavy computational demands on devices, particularly those with limited processing capabilities. One potential solution is to use specialized hardware, such as AI accelerators, to boost AI model performance on these devices. Snapdragon 8 Gen 2, announced in 2022, brought what Qualcomm describes as groundbreaking AI, integrated across the entire system and powered by its fastest, most advanced Qualcomm AI Engine to date. Users can experience faster natural language processing with multi-language translation, or have fun with AI cinematic video capture.

The latest Hexagon Processor introduced a dedicated power delivery system that adapts power to the workload. Specialized hardware improves group convolution, accelerates activation functions, and boosts the performance of the Hexagon Tensor Accelerator. Support for micro-tile inferencing and INT4 hardware acceleration provides even higher performance while reducing power and memory traffic. Transformer acceleration dramatically speeds up inference for multi-head attention, which is used throughout generative AI, delivering up to 4.35x higher AI performance in certain use cases with MobileBERT.
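
To show what transformer acceleration speeds up, here is a minimal pure-Python sketch of multi-head attention. It deliberately omits the learned query, key, value, and output projections of a real transformer layer, uses no batching or masking, and says nothing about how Hexagon hardware implements the operation; it only shows the per-head dot products, softmax, and weighted sums that make attention expensive.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for a single head.
    q, k, v are lists of per-token vectors of equal length d."""
    d = len(q[0])
    out = []
    for qi in q:
        # One score per key; across all queries this forms an n x n matrix.
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out

def multi_head_attention(q, k, v, num_heads):
    """Split the feature dimension into num_heads equal slices, run attention
    in each head independently, then concatenate the per-head outputs."""
    d_model = len(q[0])
    if d_model % num_heads:
        raise ValueError("feature dimension must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        sl = slice(h * d_head, (h + 1) * d_head)
        heads.append(attention([t[sl] for t in q], [t[sl] for t in k], [t[sl] for t in v]))
    # Concatenate the heads' outputs token by token.
    return [[x for head in heads for x in head[i]] for i in range(len(q))]

tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(tokens, tokens, tokens, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

The score computation grows with the square of the sequence length in every head, which is why dedicated hardware support for this pattern pays off across generative AI workloads.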

These ongoing research efforts are tackling the technical challenges of hybrid AI, which are expected to be overcome as the technology matures and sees wider adoption.

Conclusion

On-device AI plays a critical role in addressing the future scalability challenges of generative AI models. It has the potential to revolutionize numerous industries and significantly enhance user experiences. By enabling local processing and real-time personalization, on-device AI reduces dependence on cloud resources and improves the privacy, security, and efficiency of AI applications. This approach not only supports the responsible development of AI technologies but also opens new possibilities for scalable, personalized AI solutions across diverse sectors, from healthcare to entertainment, promising a brighter future for businesses and users alike.
