Bringing Cognitive Intelligence to the Edge: A Conversation with Synaptics' John Weil

Wevolver spoke with John Weil, Vice President of IoT and Edge AI Processor Business at Synaptics, to understand the challenges of bringing AI to the edge, how the Synaptics Astra™ AI-Native platform enables cognitive computing in real-world devices, and what the future holds for edge intelligence.

Edge AI has long promised smarter, faster, and more private device intelligence. But as AI moves beyond the data center, it faces a fundamental challenge: implementation grows more complex the closer it gets to the end user. Between fragmented hardware, evolving models, and growing performance expectations, developers are often stuck navigating an overcrowded, under-supported, and often non-interoperable ecosystem.

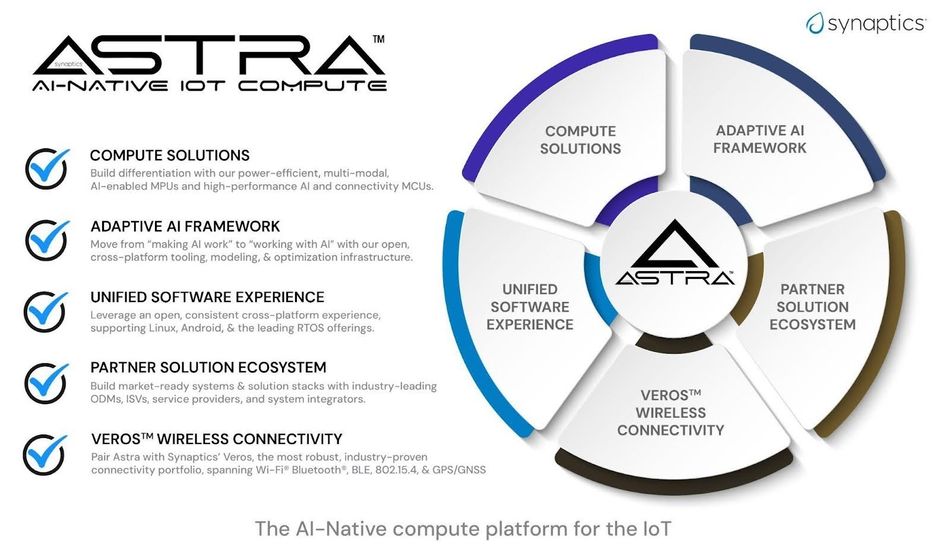

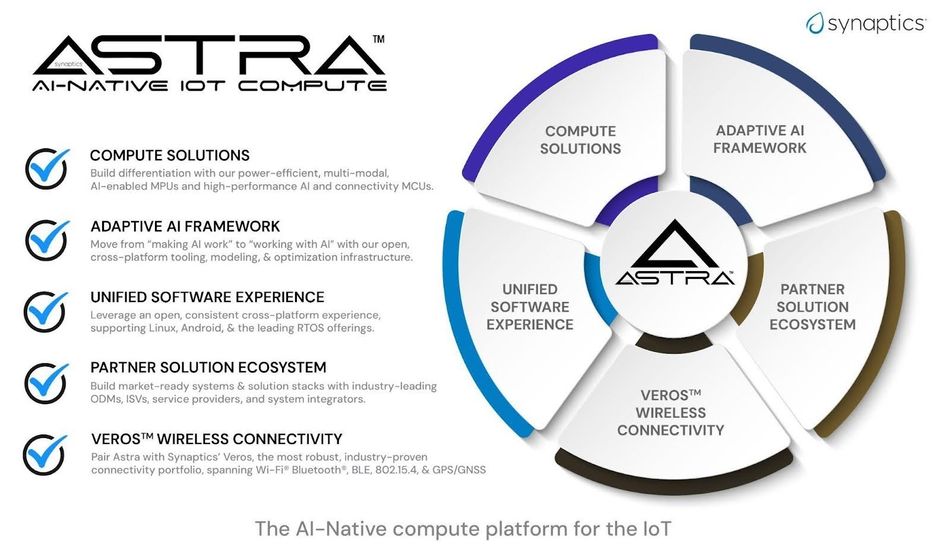

That’s where Synaptics comes in. With a focus on delivering cognitive computing at the edge, the company’s AI-native Astra platform was built from the ground up to address the unique challenges of deploying AI in smaller, more customised use cases where compute and power are constrained.

To unpack what that means and how Synaptics is tackling edge intelligence differently, Wevolver sat down with John Weil, Vice President of IoT and Edge AI Processor Business at Synaptics. With decades of experience in embedded systems, Weil has a clear vision for where cognitive edge computing is headed and how Synaptics is helping shape that future.

The push to bring AI closer to the end user creates pressure around hardware choices, development tools, and future-proofing. From your perspective, how would you describe the current edge AI landscape?

John Weil: First you have to understand the spectrum of how AI can be deployed, and you can look at it in three segments.

On one end, you’ve got the cloud side: Here there are huge compute resources with the ability to run very high-end, high-performance applications. This is critical to many aspects of our lives and to how we will use computing going forward.

On the other end, there’s the tiny edge: These are products with a small footprint, often application-specific standard products (ASSPs) with some amount of AI compute. You see these in things like small cameras embedded in consumer products or intelligent sensors. They’re moving from just traditional machine learning to making decisions based on that data.

Then there’s the middle, that big untapped center of the market that is critical to how AI can enhance a broad range of products in our homes, in workplaces, and in cars, and really deliver more personalized, intuitive experiences to users. This is where IoT really starts to fulfil its purpose. These are devices that are more intelligent than standard ASSPs and offer contextual awareness in a way that even cloud-based systems can’t. It’s also where multiple modalities tend to come together.

Interestingly, we’ve got all this research moving fast on the cloud side (new models, new toolsets), so now the challenge is helping customers in the embedded space take advantage of that progress. Things are moving quickly in this middle zone, and it’s chaotic. So the question becomes: how do we make it easier for developers to deal with that speed, the tools, the models, and the complexity of multiple modalities all at once?

You describe Astra as "AI-native." What does that mean, and how does this design approach shape the platform?

John Weil: Our thinking around AI-native is that you don’t just add AI to a processor and call it done. You start from the ground up by designing the AI compute elements—what many people know as neural processing units—as part of the core chip architecture.

It is similar to how you think about building security into a product. You don’t bolt on security after you’ve built something. You design it in from the start. That’s what we do with AI. In Astra, we integrate those neural processors tightly into other parts of the system, such as the audio and video pipelines, so that AI can operate on that data right as it comes in.

So if you’re streaming video into the product, you can run AI models on it directly. That could mean recognizing a face, detecting a gesture, or understanding speech, and doing that all on the chip without needing to pass everything through the CPU or GPU. That’s what we mean when we say AI-native: it's built in from the start and stitched into the way the system processes information.

Multimodal interaction is a big part of cognitive computing. How does Astra support this, and can you give us an example of what that looks like in practice?

John Weil: When we talk about bringing AI to embedded systems, we always come back to the idea of starting with a valid "why." Why are you adding AI to a product? What problem are you solving?

And often, it comes back to trying to make machines feel a bit more human in how they sense and interact. With Astra, we can combine multiple modalities (audio, video, vision…) on the device. We can do that at the right price and performance point, which is a critical need in embedded products.

One example is the espresso machine. You can now have a device that sees you walk up, detects your intent based on where you're looking or whether you're holding a coffee mug, and understands when you say something like, "I'd like two shots of espresso, and I want them long." It doesn't need to be connected to the cloud. It can interpret your voice, gaze, and presence, and respond in an intuitive way.

We've also done demos where the system handles speech with slang or changes in tone, and still gets it right. It knows you're talking to it and understands what you mean. That’s the kind of interaction Astra enables with multimodal support.
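To make the espresso example concrete, here is a minimal editorial sketch of how vision and speech cues might be fused into one decision. It is not Synaptics code and does not use any Astra API: the `Observation` fields, `is_addressed`, `parse_order`, and `decide` are hypothetical names, and the model outputs are assumed to arrive as plain booleans and a transcript string.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """One fused snapshot of hypothetical on-device model outputs."""
    person_present: bool        # assumed output of a presence detector
    looking_at_machine: bool    # assumed output of a gaze estimator
    holding_mug: bool           # assumed output of an object detector
    transcript: Optional[str]   # assumed on-device speech-to-text, None if silent


def is_addressed(obs: Observation) -> bool:
    """Treat speech as directed at the device only if vision cues agree."""
    return obs.person_present and (obs.looking_at_machine or obs.holding_mug)


def parse_order(text: str) -> Optional[dict]:
    """Very small keyword parser; a real product would use a trained model."""
    words = re.findall(r"[a-z']+", text.lower())
    if "espresso" not in words:
        return None
    counts = {"one": 1, "single": 1, "two": 2, "double": 2}
    shots = next((counts[w] for w in words if w in counts), 1)
    return {"drink": "espresso", "shots": shots, "long": "long" in words}


def decide(obs: Observation) -> Optional[dict]:
    """Return an order only when someone is present, engaged, and speaking."""
    if obs.transcript is None or not is_addressed(obs):
        return None
    return parse_order(obs.transcript)


order = decide(Observation(True, True, False,
                           "I'd like two shots of espresso, and I want them long."))
print(order)
```

The point of the sketch is the gating: speech is acted on only when the visual cues suggest the person is addressing the machine, which is one simple way to combine modalities without any cloud round trip.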

These interactions raise questions about privacy, latency, and power. How does Astra manage those requirements, especially in consumer devices?

John Weil: Surprisingly, power isn’t the hardest part. The heating element in a coffee machine, for example, uses more energy than the compute we’re talking about. As long as you’re in the single-digit watt range, you're in good shape for embedded AI.

The bigger challenge is often price. How do you add that level of intelligence without driving up cost? That’s where doing AI at the edge makes sense. You can skip adding large touchscreens and offload the interaction to voice and vision, which can be more intuitive anyway.

Privacy and latency also benefit. If the device processes everything locally, you get instant responses and keep user data inside the device. That’s especially helpful when you want to do things like child detection—say, making sure a kid can’t turn on the oven—without needing to send anything to the cloud.

And these devices know what they are. A coffee maker with Astra isn’t going to try to answer questions unrelated to its core function, for example, what the capital of France is. It knows its job, and it does it intelligently and reliably.

The edge landscape is fragmented: different processors, different toolchains, and different requirements across products. How does Astra help developers deal with that complexity?

John Weil: Right now, there’s no perfect standard that brings everything together. Your toaster and your coffee maker don’t automatically talk to each other. Everyone has their own ecosystem, whether it’s Google, Amazon, Apple, or others. And they’re not necessarily working together because everyone wants to own the data.

What we are working on, and what you’ll start seeing, is a concept called an AI hub. This device sits locally in your home, and different smart products can share information with it. The hub makes decisions locally, like, “Hey, someone’s at the front door, let me check the backyard camera too.” So now you have context across devices, without needing to send data to the cloud.

The first versions will likely be ecosystem-specific, but the direction is clear. The idea is to keep things private, fast, and smart across the whole environment. And yes, you could imagine an Astra chip running in that hub, and we also pair it with our connectivity solutions to handle that device-to-device communication.
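The hub idea described above can be illustrated with a small editorial sketch. Everything here is an assumption made for illustration: `LocalHub`, its `register`, `add_rule`, and `on_event` methods, and the device names are hypothetical and do not reflect any Synaptics or ecosystem API. Devices are modeled as simple callables that return their current state.

```python
from typing import Callable, Dict, List, Tuple

DeviceReader = Callable[[], dict]


class LocalHub:
    """Hypothetical in-home hub that gathers cross-device context locally."""

    def __init__(self) -> None:
        self.devices: Dict[str, DeviceReader] = {}
        # Maps (source device, event name) to other devices worth consulting.
        self.rules: Dict[Tuple[str, str], List[str]] = {}

    def register(self, name: str, read_state: DeviceReader) -> None:
        self.devices[name] = read_state

    def add_rule(self, source: str, event: str, consult: List[str]) -> None:
        self.rules[(source, event)] = consult

    def on_event(self, source: str, event: str) -> Dict[str, dict]:
        """Collect state from related devices; nothing leaves the hub."""
        context: Dict[str, dict] = {}
        for name in self.rules.get((source, event), []):
            reader = self.devices.get(name)
            if reader is not None:  # skip devices that are not registered
                context[name] = reader()
        return context


hub = LocalHub()
hub.register("backyard_camera", lambda: {"motion": False})
hub.add_rule("front_door", "person_detected", ["backyard_camera"])
print(hub.on_event("front_door", "person_detected"))
```

In this sketch, a front-door detection triggers a local check of the backyard camera, mirroring the example in the answer. A real hub would also need device discovery, authentication, and a transport such as the wireless links discussed later in the interview.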

Synaptics recently announced a partnership with Google. What does this collaboration bring to Astra, and why is it important?

John Weil: The vision behind this partnership is to keep Astra aligned with where the AI ecosystem is headed, at every level. That means designing AI into the DNA of our chips while staying closely connected to research and tool development. It's not enough to build powerful hardware. You need to bring that together with the tools and models coming out of universities, research labs, and companies pushing the boundaries.

We break it down into three layers: hardware, software tools, and models. You either invest massively across all three, or you partner with the best. That’s where Google comes in. They've been doing AI research longer than most. Before people were even talking about AI models and cloud compute, they were publishing foundational work on tensors and compilers.

Through our collaboration, we’re integrating some of Google’s open-source compute elements directly into our future roadmap products. On the software side, we’re contributing alongside them to frameworks like MLIR and the IREE compiler. MLIR came out of the same lineage as LLVM and Clang, open-source compiler frameworks that changed how modern compilers are built. IREE is an AI compiler that can handle and optimize complex models across different compute blocks. These tools help us bridge the gap between fast-moving research and practical deployment on embedded systems.

We partnered with Google because they’ve been consistent leaders in open-source AI, and because it lets us bring new capabilities to developers without having to build everything in isolation.

Astra focuses on AI processing, but connectivity is also critical to edge intelligence. How does Synaptics approach that?

John Weil: Complementing our AI efforts, we also provide wireless connectivity solutions under our Veros™ brand, including Wi-Fi, Bluetooth, and 802.15.4 for Thread or Zigbee. These are often used right alongside Astra in smart home products.

It’s important because you don’t just need intelligence at the edge; you need devices that can talk to each other. With Astra plus Veros™, we’re enabling that machine-to-machine communication, and connecting to the cloud when needed. It’s part of how we help customers build complete, efficient systems, whether they are controlling an appliance locally or coordinating across a broader smart home setup.

Final Thoughts

As edge AI moves from promise to practice, the complexity of building real-world systems becomes more apparent. What Synaptics offers with Astra is a tightly integrated, scalable platform built for context, responsiveness, and privacy. Whether it's enabling voice and vision interaction in a coffee machine or forming the core of an AI hub coordinating multiple devices, Astra shows what’s possible when AI is built into the fabric of a product.

To explore the tools, SDKs, and resources that power Astra, visit the Synaptics Developer Portal: https://developer.synaptics.com