Automated ocean waste retrieval the target for new, specialized open-source EfficientDets

With growing concern over microplastics from ocean waste, autonomous underwater vehicles — AUVs — have been proposed as a tool for cleaning up our seas, but only if they can pick the plastics out from the fish: Enter these tweaked EfficientDets, boosting accuracy for the task.

Plastic waste running from rivers to the seas is a growing problem, which will take a major clean-up to solve. Enter the AUVs.

Microplastics are of growing concern, both for human health and the broader ecology. With millions of tonnes of plastic waste entering the marine ecosystem every year, solutions are needed urgently. Initiatives to prevent the waste from entering the ecosystem in the first place, by moving away from single-use plastics and implementing stronger recycling requirements, are a vital step, but they do nothing for the waste already floating around our oceans.

Autonomous underwater vehicles (AUVs), however, can help address the latter. Equipped with suitable grabbers, AUVs could roam the seas to locate plastic waste and remove it for recycling or proper disposal, but only if they can recognize it. That’s where a team of researchers, working with the United Kingdom’s Natural Environment Research Council, aims to help: Improving computer vision systems to give AUVs better skills in recognizing plastic waste in underwater environments.

Smarter vision

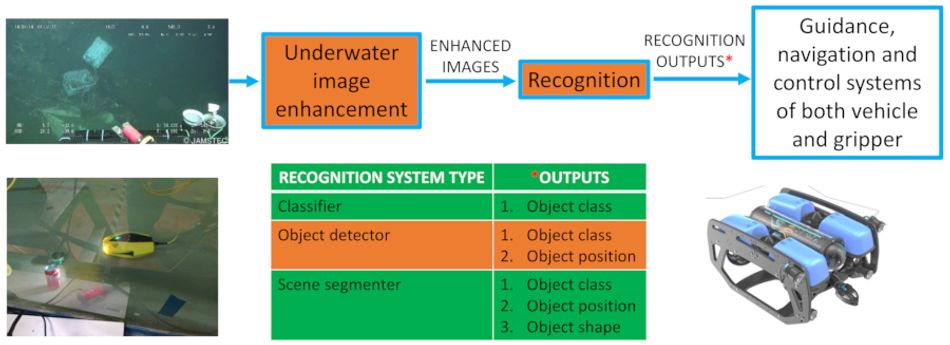

The researchers envision the control system of a plastic-hunting AUV as three blocks: An image enhancement block, specifically designed for the murky low-light conditions found under the sea; a recognition block, capable of distinguishing plastic waste from other underwater objects and locating it accurately; and a guidance block, which navigates the vehicle to the waste and coordinates its collection.
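The three-block layout described above can be sketched in a few lines of Python. This is only an illustration of how the blocks hand data to one another: the `Pipeline` and `Detection` types, the stub functions, and the "steer toward" command string are all hypothetical names invented for the example, not anything taken from the team's code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Illustrative frame type: a grayscale image as rows of 0-255 values.
Image = List[List[int]]


@dataclass
class Detection:
    label: str                       # e.g. "plastic", "bio", "rov"
    score: float                     # detector confidence in [0, 1]
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Pipeline:
    enhance: Callable[[Image], Image]            # image enhancement block
    detect: Callable[[Image], List[Detection]]   # recognition block
    guide: Callable[[Detection], str]            # guidance block

    def step(self, frame: Image) -> Optional[str]:
        """Run one frame through all three blocks; None if no plastic is seen."""
        enhanced = self.enhance(frame)
        plastics = [d for d in self.detect(enhanced) if d.label == "plastic"]
        if not plastics:
            return None
        target = max(plastics, key=lambda d: d.score)
        return self.guide(target)


# Hypothetical stand-ins for the real blocks.
def stub_enhance(frame: Image) -> Image:
    return frame


def stub_detect(frame: Image) -> List[Detection]:
    return [
        Detection("bio", 0.9, (0, 0, 4, 4)),
        Detection("plastic", 0.7, (10, 20, 30, 40)),
    ]


def stub_guide(target: Detection) -> str:
    x_min, y_min, x_max, y_max = target.box
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    return f"steer toward ({cx:.0f}, {cy:.0f})"


pipeline = Pipeline(stub_enhance, stub_detect, stub_guide)
print(pipeline.step([[0]]))
```

Keeping each block behind a plain callable means the enhancement step can be swapped out without touching the detector or the guidance logic, which is the separation the article describes.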

Seeing the potential for boosted performance, first author Federico Zocco and colleagues concentrated their efforts on the image enhancement and recognition blocks. The team started by applying a state-of-the-art object detector class, EfficientDets, to the task of recognizing plastic waste and differentiating it from other undersea objects like fish.

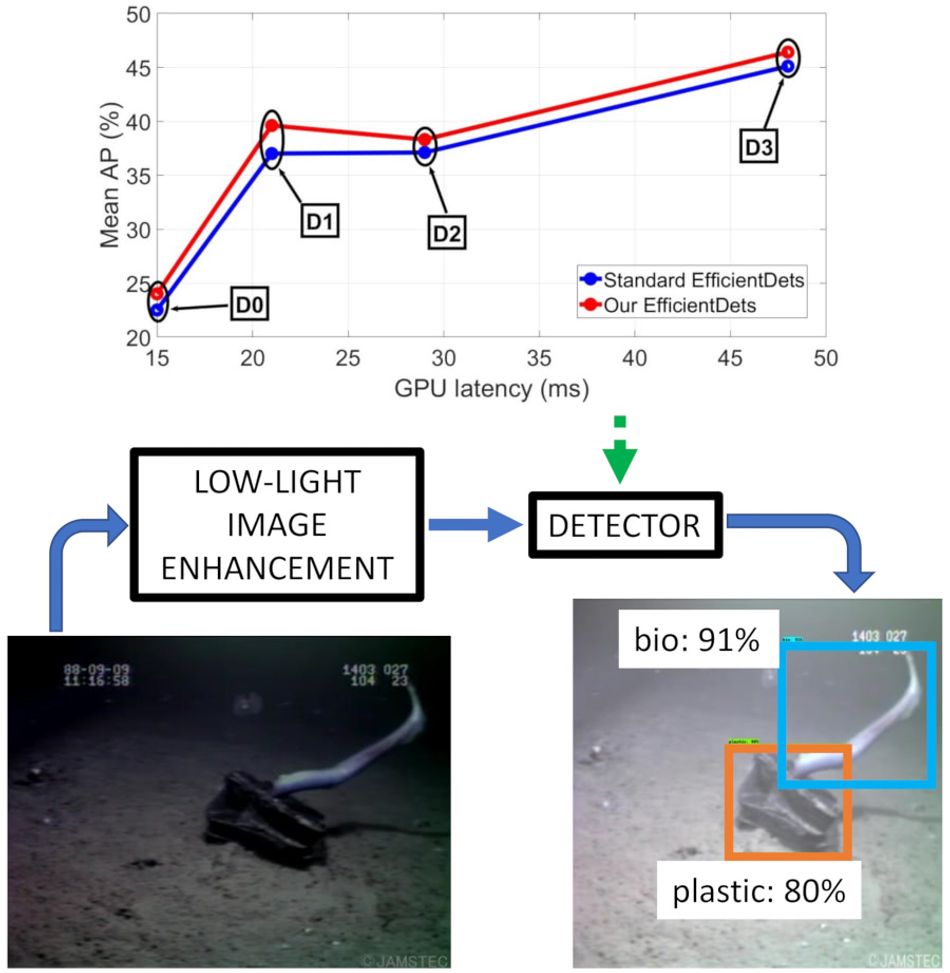

Created by a team working at Google Research, EfficientDets are designed to offer accurate object detection at low latency, with up to a ninefold reduction in model size and a forty-twofold drop in computational requirements compared with previous approaches. Zocco and colleagues, however, believed it possible to improve upon this, particularly in terms of specializing EfficientDets for the task at hand.

Initially, the team concentrated on architectural modifications: Changing the number of weighted bi-directional feature pyramid network (BiFPN) layers, the number of convolutional layers, and scaling up parent detectors in order to find the best balance between latency and accuracy. Then, the resulting improved EfficientDets were trained on the Trash-ICRA19 dataset with biological, plastic, and remotely operated vehicle classes, before being trained again on a custom dataset, In-Water Plastic Bags and Bottles (WPBB), with 900 fully-annotated images of the target items recorded in a testing pool by a Chasing Dory drone.
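For readers wondering what makes a BiFPN "weighted": the original EfficientDet paper fuses feature maps from different resolutions using learnable, non-negative weights that are normalized to sum to roughly one, a scheme it calls fast normalized fusion. The snippet below is a minimal sketch of that idea on flat lists of numbers rather than real feature maps. The function name and the list-based representation are assumptions made for the example, and it is not drawn from the modified detectors discussed here.

```python
from typing import List, Sequence


def fast_normalized_fusion(
    features: Sequence[Sequence[float]],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> List[float]:
    """Fuse equally sized feature vectors as sum_i (w_i / (eps + sum_j w_j)) * f_i."""
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per input feature")
    # A ReLU keeps every weight non-negative before normalization.
    positive = [max(0.0, w) for w in weights]
    total = sum(positive) + eps
    length = len(features[0])
    fused = [0.0] * length
    for feature, weight in zip(features, positive):
        if len(feature) != length:
            raise ValueError("all features must have the same length")
        for i, value in enumerate(feature):
            fused[i] += (weight / total) * value
    return fused


# Two inputs with equal weights fuse to (almost exactly) their average.
print(fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
```

Each BiFPN layer repeats this kind of fusion across several resolution levels, so adding or removing layers trades accuracy against latency.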

Better vision

In addition to improving the object detection block, the team also investigated how the images themselves can be improved, taking the low-light captures of an AUV’s camera feed and making them better-suited to object detection and classification.

The team took the L2UWE framework detailed by Tunai Porto Marques and Alexandra Branzan Albu in 2020, designed for efficient enhancement of low-light underwater imagery, and applied it to a subset of Trash-ICRA19 images which had been modified for simulated low-light conditions. While an improvement in accuracy was noted, it came at a considerable cost: “L2UWE is too computationally demanding for real-time applications,” the team wrote, with the inference time per image reaching over 11 seconds when the framework was applied as a pre-processing step.

A simpler approach was then tried: Just boosting the brightness of the image on a per-pixel basis, with no thought to enhancement at all. Doing so showed a notable increase in accuracy, though without analyzing each image and determining the amount of brightening required, it’s a rough approach at best.
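As a rough illustration, a per-pixel brightness boost might look like the following. The sketch assumes an 8-bit grayscale image stored as nested lists and a single additive offset `c` clipped to the 0 to 255 range; whether the paper applies the boost in exactly this way is an assumption, and the `brighten` function is a hypothetical name.

```python
from typing import List

# Illustrative image type: rows of 8-bit grayscale pixel values.
Image = List[List[int]]


def brighten(image: Image, c: int) -> Image:
    """Add the same offset c to every pixel, clipping to the valid 0-255 range."""
    return [[min(255, max(0, pixel + c)) for pixel in row] for row in image]


dark_frame = [[0, 100, 250], [5, 10, 15]]
print(brighten(dark_frame, 20))
```

Because the operation is one addition and one clip per pixel, it adds almost nothing to per-frame processing time, which is its appeal compared with a full enhancement framework.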

“A more efficient approach,” the team notes, “is to tune the value of c [the increase in brightness applied to images] offline using genetic algorithms or particle swarm optimization and subsequently use it for low-light real-time marine debris detection within an automation pipeline.”
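To give a feel for what offline tuning could involve, here is a small one-dimensional particle swarm optimizer searching for a brightness value `c`. It is a generic textbook-style sketch, not the team's procedure: the `tune_c_pso` function, its coefficients, and in particular `toy_score` are hypothetical. In practice the score would be something like detection accuracy measured on a validation set after brightening by `c`.

```python
import random
from typing import Callable, Tuple


def tune_c_pso(
    score: Callable[[float], float],
    bounds: Tuple[float, float] = (0.0, 255.0),
    particles: int = 8,
    iterations: int = 30,
    seed: int = 0,
) -> float:
    """Return the c in bounds with the highest score found by a global-best PSO."""
    rng = random.Random(seed)  # seeded so repeated runs give the same answer
    lo, hi = bounds
    pos = [rng.uniform(lo, hi) for _ in range(particles)]
    vel = [0.0] * particles
    best_pos = pos[:]                       # each particle's best position so far
    best_val = [score(p) for p in pos]
    g = max(range(particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g], best_val[g]  # swarm-wide best so far

    for _ in range(iterations):
        for i in range(particles):
            r1, r2 = rng.random(), rng.random()
            # Inertia plus pulls toward the personal best and the swarm best.
            vel[i] = (0.5 * vel[i]
                      + 1.5 * r1 * (best_pos[i] - pos[i])
                      + 1.5 * r2 * (g_pos - pos[i]))
            pos[i] = min(hi, max(lo, pos[i] + vel[i]))
            val = score(pos[i])
            if val > best_val[i]:
                best_pos[i], best_val[i] = pos[i], val
            if val > g_val:
                g_pos, g_val = pos[i], val
    return g_pos


def toy_score(c: float) -> float:
    """Hypothetical stand-in for validation accuracy; peaks at c = 60."""
    return -(c - 60.0) ** 2


best_c = tune_c_pso(toy_score)
print(f"tuned c: {best_c:.1f}")
```

The tuning runs once, ahead of deployment; at sea the vehicle only applies the fixed value it produced, so the real-time pipeline pays no extra cost.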

The team’s overall results show promise. With EfficientDets already considered state-of-the-art, a reported 1.2 to 2.6 per cent increase in average precision for underwater plastic waste recognition, with no increase in latency, is a notable achievement. The team has also made it possible for others to improve the approach still further by publishing both the WPBB dataset and the project’s source code on GitHub under the permissive MIT license.

The team’s next step: Implementing the proposed detection pipeline on a physical AUV, integrated with the navigation and gripper control system for automated waste collection.

The paper detailing the team’s work has been made available on Cornell’s arXiv preprint server under open-access terms.

References

Federico Zocco, Ching-I Huang, Hsueh-Cheng Wang, Mohammad Omar Khyam, and Mien Van: Towards More Efficient EfficientDets and Low-Light Real-Time Marine Debris Detection, to be submitted to IEEE Robotics and Automation Letters. Preprint arXiv:2203.07155 [cs.CV].

Mingxing Tan, Ruoming Pang, and Quoc V. Le: EfficientDet: Scalable and Efficient Object Detection, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2020. DOI 10.1109/CVPR42600.2020.01079.

Tunai Porto Marques and Alexandra Branzan Albu: L2UWE: A Framework for the Efficient Enhancement of Low-Light Underwater Images Using Local Contrast and Multi-Scale Fusion, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 2020. DOI 10.1109/CVPRW50498.2020.00277.