CHAPTER 1

Industry Trends Driving Edge AI Adoption

The transformative power of edge AI lies in its ability to deliver localized intelligence where it is most critical, redefining how industries operate. From enabling real-time decisions in autonomous vehicles to driving predictive maintenance in manufacturing and ...

CHAPTER 2

The Role of Edge AI in Transforming Industry Trends

In 2018, Gartner predicted that by 2025, 75% of enterprise-generated data would be created and processed outside a traditional centralized ...

CHAPTER 3

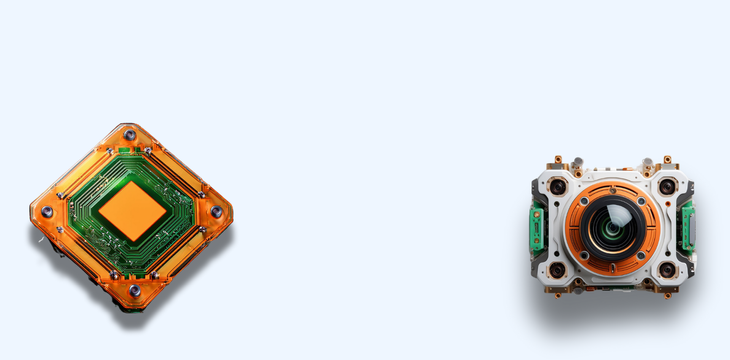

The Technological Enablers of Edge AI

Deploying and operating AI systems and models at the edge brings many benefits for industrial organizations, yet it still poses a host of challenges. For instance, challenges posed by the limited processing power of edge devices, compared to conventio ...

CHAPTER 4