Three Edge AI Architectures That Demand Smarter Memory, and Why


Introduction

AI is one of the biggest growth drivers in the semiconductor industry today, but so far only a few players, chiefly hyperscalers and operators of large data centers, have benefited from the trend. That is changing as more companies enter the second wave of artificial intelligence, a movement called “AI Everywhere,” in which intelligence shifts to the edge: into robots, medical devices, industrial controllers, and more. In this new reality, real-time inference, local decision-making, and data privacy aren’t optional; they’re essential.

The good news? This edge-centric AI trend doesn’t rely on high-bandwidth, high-cost memory. Instead, it can thrive using today’s available memory technologies, particularly emerging options like MRAM and FeRAM, which have long been waiting for the right moment to break through. “AI Everywhere” might be that moment.

In this article, we’ll explore how three classes of edge AI architecture, namely robotics, system-on-modules (SoMs), and medical devices, are driving this shift, and how MRAM and FeRAM are enabling smarter, more resilient memory at the edge.

From Cloud to Edge: The Next Frontier of AI

In the early stages of AI adoption, most processing occurred in cloud-based data centers. Data was collected by endpoint devices, transmitted to the cloud for processing, and the results were sent back. But for many industries, that’s no longer fast or reliable enough.

Autonomous robots need to make real-time decisions without depending on the cloud.

Wearables and diagnostic devices must perform inference instantly, even in rural or offline conditions.

Industrial gateways in factories or infrastructure must function regardless of bandwidth availability.

This new era of edge AI demands localized, real-time inference. It brings the AI closer to where data is generated, and as a result, introduces a unique set of challenges related to latency, connectivity, privacy, and power efficiency. Memory lies at the center of all these concerns.

Why Memory Is Critical for Edge AI

While much attention is paid to edge processors, such as MCUs and NPUs, memory plays an equally important role. In edge environments, every millisecond and every milliwatt counts. Memory determines how quickly a device wakes from sleep, how long it can operate on limited power, and how often it can be updated without failure.

The key architectural constraints include:

Limited space and thermal budgets: Edge systems must fit in compact designs without excessive heat output.

Fast wake-up times: Devices should power on and start inference in under a second.

Endurance: Frequent data logging and model updates require memory that tolerates a high number of write cycles.

Security and resilience: Data must be preserved during outages and protected from EMI and radiation.

Modern edge SoCs often combine MCUs, NPUs, and a complex memory hierarchy that includes volatile SRAM and non-volatile Flash or EEPROM. However, legacy memory technologies are beginning to show their age in edge applications.

Comparative Analysis: Memory Tech at the Edge

Traditional memories come with inherent limitations. In contrast, MRAM (Magnetoresistive RAM) and FeRAM (Ferroelectric RAM) offer the best of both worlds: non-volatility with high-speed access. They also provide significantly higher endurance and lower power consumption.

| Memory Type | Non-Volatile | Write Speed | Write Endurance | Power Usage |
| --- | --- | --- | --- | --- |
| Flash | Yes | ~100 ns | ~10⁴ cycles | Moderate |
| EEPROM | Yes | ~1 ms | ~10⁵ cycles | Moderate |
| SRAM | No | ~10 ns | Unlimited | High |
| MRAM | Yes | ~10 ns | >10⁶ cycles | Low |
| FeRAM | Yes | <50 ns | Up to 10¹² cycles | Ultra Low |

These advantages make MRAM and FeRAM ideally suited for edge AI environments, where performance, endurance, and energy efficiency are non-negotiable.
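To make the endurance column more concrete, the following is a minimal sketch that converts the table's write-endurance figures into an approximate time to wear-out. It assumes a hypothetical logger that rewrites the same memory location at a fixed rate with no wear leveling; the function name and the 10 writes per second rate are illustrative assumptions, not figures from any datasheet.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

# Write-endurance figures copied from the comparison table (cycles per location).
ENDURANCE_CYCLES = {
    "Flash": 1e4,
    "EEPROM": 1e5,
    "MRAM": 1e6,    # the table gives this as a lower bound (>10^6)
    "FeRAM": 1e12,  # the table gives this as an upper bound (up to 10^12)
}


def years_until_wearout(endurance_cycles, writes_per_second):
    """Years until a single location reaches its endurance limit.

    Assumes every write hits the same location (no wear leveling).
    """
    if writes_per_second <= 0:
        raise ValueError("writes_per_second must be positive")
    return endurance_cycles / writes_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    rate = 10.0  # hypothetical: one log record every 100 ms to the same address
    for name, cycles in ENDURANCE_CYCLES.items():
        print(f"{name:7s} {years_until_wearout(cycles, rate):.3g} years")
```

Under these assumptions, a location rated for 10⁴ cycles would reach its limit after about 1,000 seconds of logging, while one rated for 10¹² cycles would take on the order of thousands of years. Real devices differ in how they define and specify endurance, so this is only an order-of-magnitude illustration.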

Case Studies: Three Architectures Driving Smarter Memory

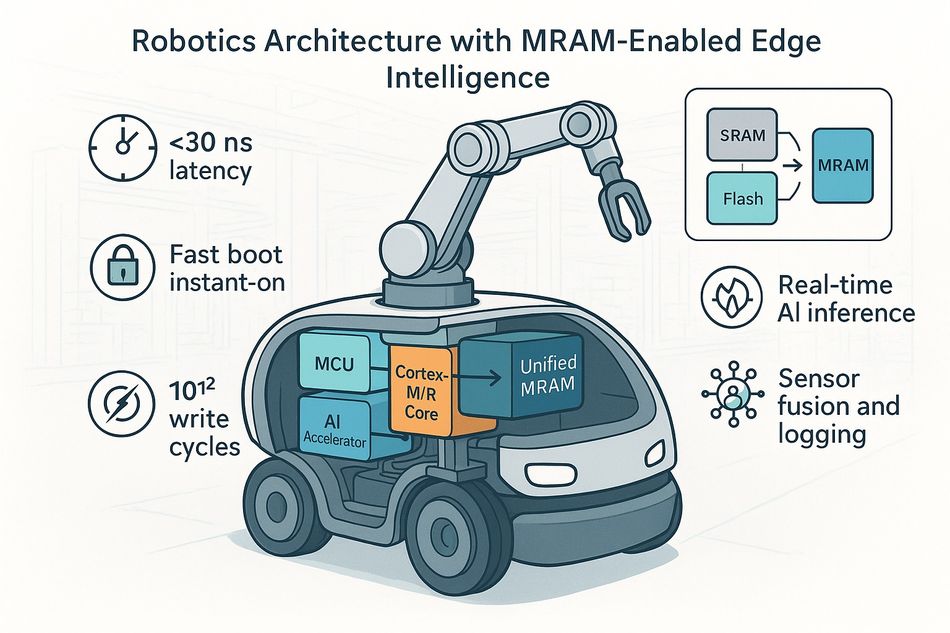

Robotics: Fast Boot and Sensor Fusion

Robotic platforms, such as autonomous mobile robots (AMRs) and collaborative arms, require real-time decision-making, often under tight power and thermal constraints. These systems rely on heterogeneous SoCs combining low-power AI accelerators (e.g., NPUs), Cortex-M/R cores for motor control, and MCUs for sensor fusion. In these hybrid architectures, MRAM offers a significant advantage by unifying code and data in a single memory block, thereby replacing both SRAM and embedded Flash.

Typical MRAM implementations (4–16 MB) operate at 100–200 MHz with <30 ns latency and >10¹² write cycles, making them ideal for storing AI weights, environment maps, and log buffers. This supports frequent updates and continuous edge inference without performance loss. One of the key design requirements across robotic systems is eliminating boot delays and reducing unnecessary power cycling.

The instant-on capability of MRAM enables faster system recovery and real-time responsiveness. Its robustness and endurance further outperform legacy memory in demanding robotics applications, making it essential for scalable, embedded AI at the edge.

System‑on‑Modules (SoMs): OTA Updates and Secure Boot

SoMs deployed in industrial gateways and smart infrastructure are built around modular processors, such as NXP i.MX 8M Plus or NVIDIA Jetson Nano, supporting embedded AI workloads. These modules combine high-speed LPDDR4 RAM (1–8 GB) with non-volatile memory for persistent storage. While NOR Flash (128–512 MB) remains common for firmware and secure boot, FeRAM is emerging as a preferred solution for write-intensive operations such as OTA updates and real-time logging.

FeRAM offers <100 ns write speeds, endurance of up to 10¹² write cycles, and densities between 256 KB and 4 MB. Its ability to execute in place (XiP) via SPI or memory-mapped interfaces significantly reduces boot time. In applications like predictive maintenance or anomaly detection, where continuous logging is required, FeRAM eliminates the wear-leveling complexity that EEPROM or NAND requires. This supports system integrity, uptime, and faster recovery, which makes FeRAM a strong fit for robust SoM architectures operating in dynamic environments.
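The wear-leveling point can be illustrated with a toy model. The sketch below is not a real flash translation layer; it assumes a hypothetical `RotatingLog` that spreads record writes evenly across a ring of slots, and a hypothetical helper `slots_needed` that estimates how many slots a low-endurance memory would need to absorb a given number of writes.

```python
class RotatingLog:
    """Toy wear-leveling model: each write goes to the next slot in a ring."""

    def __init__(self, num_slots):
        if num_slots < 1:
            raise ValueError("num_slots must be at least 1")
        self.write_counts = [0] * num_slots
        self.next_slot = 0

    def write(self):
        """Record one write and return the slot index that absorbed it."""
        slot = self.next_slot
        self.write_counts[slot] += 1
        self.next_slot = (slot + 1) % len(self.write_counts)
        return slot

    def max_wear(self):
        """Highest write count seen by any single slot."""
        return max(self.write_counts)


def slots_needed(target_writes, endurance_cycles):
    """Slots required so that no slot exceeds its endurance (ceiling division)."""
    return -(-target_writes // endurance_cycles)


if __name__ == "__main__":
    leveled = RotatingLog(num_slots=100)
    direct = RotatingLog(num_slots=1)  # models overwriting one fixed address
    for _ in range(1000):
        leveled.write()
        direct.write()
    print(leveled.max_wear(), direct.max_wear())
    print(slots_needed(10**12, 10**5))
```

In this model, a memory rated for about 10⁵ cycles would need roughly 10⁷ rotating slots to absorb 10¹² record writes, while a memory rated for 10¹² cycles can overwrite a single location. That bookkeeping (slot rotation, tracking the newest record, recovery after power loss) is the complexity the paragraph above refers to.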

Medical Devices: Ultra‑Low Power and Secure Personalization

Medical edge devices, including wearables, hearing aids, and portable diagnostics, are defined by their need for ultra-low power consumption, miniaturization, and reliability. These systems typically use ARM Cortex-M0+/M4 MCUs, small SRAM (32–512 KB), and non-volatile memory such as FeRAM or MRAM (256 KB–2 MB). FeRAM is ideal for storing real-time AI outputs like adaptive filter coefficients, personalization profiles, and environmental data tags, offering <50 ns write speeds, microamp-level power draw, and write endurance of up to 10¹² cycles.

In applications requiring rapid, frequent data updates, FeRAM eliminates the need for complex memory management. MRAM, while slightly higher in power, is favored for storing AI models and security-critical logs, offering 20+ year retention at 85°C and high read performance. Both memory types are highly tolerant of EMI and radiation, which is critical for hospital use. Their resilience, efficiency, and speed enable medical devices to offer instant-on AI capabilities, secure data retention, and long-term reliability with minimal energy cost.

Here, both MRAM and FeRAM offer unbeatable advantages. They retain data even if power is lost, consume virtually no standby power, and tolerate harsh environments. They enable these life-critical devices to be personalized, reliable, and responsive without compromising safety.

Unified Design Priorities and the Role of Emerging Memory

Edge AI platforms across robotics, system-on-modules (SoMs), and medical devices are converging on similar memory needs: fast non-volatile access under 100 ns, high endurance ranging from 10⁶ to 10¹² write cycles, and moderate memory densities between 256 KB and 16 MB. Radiation and EMI resilience is also important for medical and industrial applications.
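As a rough illustration, these converged requirements can be applied as a filter over the figures in the comparison table earlier in the article. The sketch below is an assumption-laden simplification: the `MemoryTech` type and `meets_edge_requirements` function are hypothetical names, the approximate table values ("~", "<", ">") are treated as exact numbers, and density, power, and cost are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryTech:
    name: str
    non_volatile: bool
    write_time_ns: float      # approximate write speed from the table
    endurance_cycles: float   # approximate write endurance from the table


# Values taken from the comparison table; bounds are treated as point values.
CANDIDATES = [
    MemoryTech("Flash", True, 100.0, 1e4),
    MemoryTech("EEPROM", True, 1e6, 1e5),        # ~1 ms = 1e6 ns
    MemoryTech("SRAM", False, 10.0, float("inf")),
    MemoryTech("MRAM", True, 10.0, 1e6),
    MemoryTech("FeRAM", True, 50.0, 1e12),
]


def meets_edge_requirements(candidates, max_write_ns=100.0, min_endurance=1e6):
    """Keep non-volatile memories with writes under max_write_ns
    and endurance of at least min_endurance cycles."""
    return [
        m for m in candidates
        if m.non_volatile
        and m.write_time_ns < max_write_ns
        and m.endurance_cycles >= min_endurance
    ]


if __name__ == "__main__":
    for tech in meets_edge_requirements(CANDIDATES):
        print(tech.name)
```

With the table's figures and these thresholds, only MRAM and FeRAM pass all three checks: SRAM fails on volatility, while Flash and EEPROM fail on endurance and write time.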

Architecturally, these systems are moving toward heterogeneous compute and memory stacks with tightly integrated non-volatile memory. This approach enables scalable, low-latency, and energy-efficient AI directly at the edge, where responsiveness and reliability are mission-critical.

The Memphis memory portfolio, which includes both MRAM and FeRAM, addresses all of these needs. These memories simplify the bill of materials (BOM), reduce energy consumption, and improve system reliability, making them foundational memory technologies for scalable, next-generation edge AI systems.

Conclusion

The second wave of AI is redefining how intelligent systems are built, especially at the edge. The one-size-fits-all approach to memory no longer works. Edge AI architectures in robotics, system-on-modules (SoMs), and medical devices require faster, more reliable, and more power-efficient memory. MRAM and FeRAM solutions are engineered to meet these demands head-on. With instant-on performance, superior endurance, and robust environmental resilience, they offer a unifying memory platform for the edge AI era.