Navigating the Challenges in AI Training

How neuromorphic computing and BrainChip's Akida solution can benefit AI training

Source: DeepMind

This article is based on "The Advantages of Unified Deep Learning Technology," an article from the BrainChip blog.

The field of Artificial Intelligence (AI) has experienced rapid growth, with a strong focus on developing large generative models like OpenAI's ChatGPT. These models rely heavily on neural networks (NNs) for deep learning, which require high-powered, massively parallel computing to calculate the millions of values needed for each inference. As a result, training such large models costs millions of dollars because of the computational power the task requires.

This article explores how neuromorphic computing can offer an alternative to conventional deep learning models and their high power and computational costs.

Challenges in AI Training

Artificial intelligence has many branches, among which machine learning is the most prevalent. Deep learning, a type of machine learning, empowers machines to discover and enhance even the most subtle patterns within data.

Neural networks are the fundamental building blocks of deep learning algorithms, which learn from millions of examples to infer patterns from data. For example, a model trained on numerous images of cats can then identify a cat in a new image on its own. Convolutional neural networks (CNNs) are the most common neural networks for processing visual data, such as images and videos.

Today, neural networks use massively parallel processors to perform complex mathematical calculations. Although these networks have achieved high accuracy levels, the computational intensity of each inference results in high energy consumption, excessive heat generation, and a significant environmental impact.

At the same time, generative AI has brought significant commercial advances. This type of artificial intelligence can create new and original data that is highly similar to human-generated data, such as images, music, text, videos, and voice. However, generative AI models require massive amounts of training data, such as all of Wikipedia or Reddit, and training a single model in the cloud can cost millions of dollars. As a result, a model is rarely retrained from scratch.

The cost of training an AI model can reach tens of millions of dollars. However, the real expense lies in running the model. Every time a user needs the service, the model has to respond to a query, which requires computational power. Inference runs billions of times more often than training. OpenAI's ChatGPT reportedly costs the company $100,000 per day to run. According to Dylan Patel and Afzal Ahmad in SemiAnalysis, "deploying current ChatGPT into every search done by Google would require 512,820 A100 HGX servers with a total of 4,102,568 A100 GPUs. The total cost of these servers and networking exceeds $100 billion of Capex alone, of which NVIDIA would receive a large portion." This creates a significant challenge to the broader proliferation of AI, as the environmental and commercial costs are already substantial and continue to grow over time.

Benefits of Neuromorphic Computing

An alternative approach to tackling the challenges of conventional generative AI is neuromorphic computing, which aims to mimic the human brain, considered the most efficient computer. According to an article published in Nature Computational Science, neuromorphic computing can offer up to 1,000 times better performance and 10,000 times better energy efficiency than high-performance computing.

In neuromorphic computing, each brain cell (neuron) is implemented in a hardware circuit that operates in parallel with all other cells. Software models for this type of computation are known as Spiking Neural Networks (SNNs), artificial neural networks inspired by how neurons communicate in the brain. Unlike traditional neural networks that use continuous-valued signals, SNNs use discrete, binary signals. This means SNNs consume energy only when active, resulting in much higher performance at a low energy cost, in the milliwatt range (thousandths of a watt).
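To make the idea of discrete, binary signals concrete, the following is a minimal sketch of a single leaky integrate-and-fire neuron, a common textbook model of spiking behavior. It is an illustration only and not a description of any particular chip; the function names, the `threshold` and `leak` parameters, and the input values are all assumptions chosen for the example.

```python
def lif_neuron(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 spikes, one per input value.
    All parameter values are illustrative assumptions.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Leak a fraction of the stored potential, then integrate the new input.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)   # emit a binary spike
            potential = 0.0    # reset after firing
        else:
            spikes.append(0)   # stay silent: no event is produced
    return spikes


def count_events(spikes):
    """Number of spikes, a rough proxy for activity in an event-driven system."""
    return sum(spikes)


if __name__ == "__main__":
    inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.9, 0.0]
    spikes = lif_neuron(inputs)
    print(spikes, count_events(spikes))
```

In this toy run the neuron fires on only two of the eight time steps. In an event-driven design, downstream work is triggered only by those spikes, which is the intuition behind consuming energy only when active.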

Decades and hundreds of billions of dollars have been invested in CNNs and DNNs, but very few ready-to-use SNN models exist in the industry. The advantage of neuromorphic computing is that it can be energy efficient enough to make much more accurate inferences in devices at the Edge, such as smart sensors, cameras, and smart meters. SNNs reduce the need for high-power cloud inference. They also decrease raw data traffic and congestion in networks, and they reduce the security risks of exposing sensitive data. Neuromorphic computing leads us toward more distributed computing, where localized AI contributes to and enables a leaner, more efficient global AI.

IBM™ and Intel® have already invested in neuromorphic technology and demonstrated working chips. However, these investments still focus on accelerating SNN models, which are currently academic. The actual tools and deployment ecosystems are in their infancy. This makes using the prototypes difficult, if not impossible, in real-world deep-learning CNN environments. Developing real-world applications is complex, which has hindered the adoption of neuromorphic technologies.

BrainChip Akida Technology

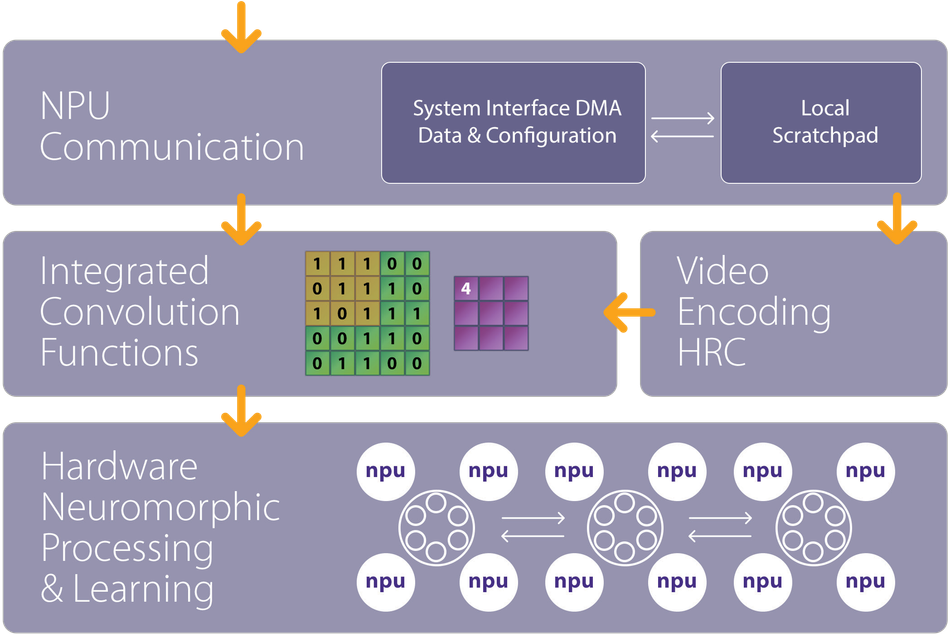

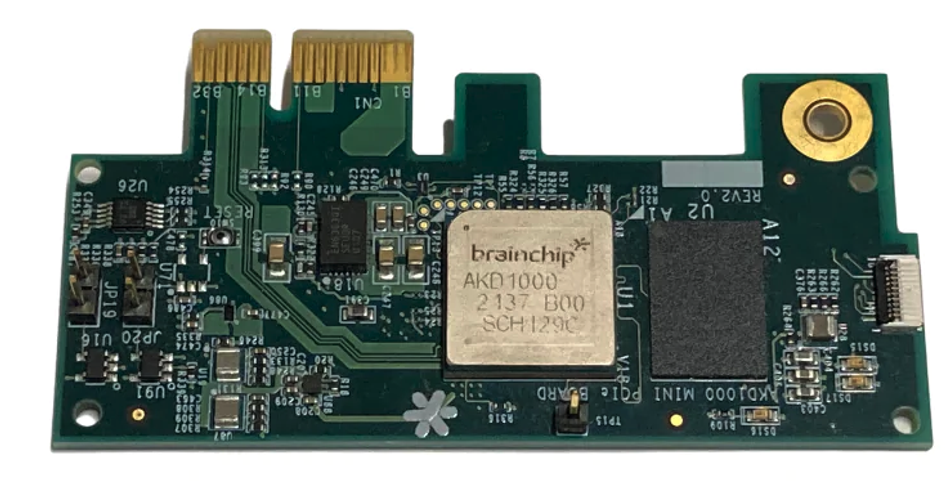

To overcome these challenges, BrainChip combined convolution functions with a fully digital computing core early in its development of neuromorphic technology. The result is a solution that unites the accuracy and capabilities of the deep learning ecosystem with the energy efficiency of neuromorphic computing. BrainChip Akida technology is an all-in-one product that can execute most deep learning networks and perform inference at a fraction of the energy cost of conventional solutions.

The Akida technology's key feature lies in its ability to learn in real time and in the field without undergoing expensive retraining in the cloud. This allows for rapid customization of AI-enhanced products, with the convolutional layers serving as feature extractors and the last layer learning in milliseconds to combine these features into new objects. Furthermore, because learning happens on the device, this customization enhances privacy and security, ensuring that sensitive information remains confidential.
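The split between fixed feature extractors and a fast-learning last layer can be sketched in a few lines. The example below is a hedged illustration using a nearest-prototype classifier, which is a swapped-in stand-in and not Akida's actual learning rule. `extract_features` is a hypothetical placeholder for frozen convolutional layers, and `LastLayerLearner` and the sample vectors are likewise assumptions made for the example.

```python
import math


def extract_features(sample):
    """Hypothetical stand-in for frozen convolutional layers.

    Here it only L2-normalizes the input vector; a real feature
    extractor would be a pretrained network that is never updated.
    """
    norm = math.sqrt(sum(x * x for x in sample)) or 1.0
    return [x / norm for x in sample]


class LastLayerLearner:
    """Learns new classes by keeping one mean feature vector per label.

    No gradient descent or cloud retraining is involved: adding a class
    is a single update to a small table.
    """

    def __init__(self):
        self.prototypes = {}  # label -> (mean feature vector, example count)

    def learn(self, sample, label):
        feats = extract_features(sample)
        if label not in self.prototypes:
            self.prototypes[label] = (feats, 1)
            return
        mean, n = self.prototypes[label]
        # Incremental running mean over all examples seen for this label.
        updated = [(m * n + f) / (n + 1) for m, f in zip(mean, feats)]
        self.prototypes[label] = (updated, n + 1)

    def predict(self, sample):
        feats = extract_features(sample)

        def similarity(label):
            mean, _ = self.prototypes[label]
            return sum(m * f for m, f in zip(mean, feats))

        # Pick the label whose prototype is most similar to the features.
        return max(self.prototypes, key=similarity)


if __name__ == "__main__":
    learner = LastLayerLearner()
    learner.learn([1.0, 0.1, 0.0], "cup")  # one example per new object
    learner.learn([0.0, 0.2, 1.0], "key")
    print(learner.predict([0.9, 0.2, 0.1]))
```

The design point the sketch tries to capture is that the expensive part (the feature extractor) stays untouched, so teaching the system a new object only requires a small, local update.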

The solution has been designed to simplify the process of developing models and software, using MetaTF™ to meet developers in their preferred environments and offering a wide range of standard models already available in the Akida Models Zoo. The BrainChip Akida architecture has matured and proven effective in silicon, with major corporations having already licensed the IP.

Future Outlook

Neurons in the brain operate in an analog domain. They integrate thousands of input signals over time and output a single-bit "spike" when the input signals match stored parameters. The brain contains billions of such cells organized in a functional structure capable of performing amazing feats that are still beyond the capabilities of today's Artificial Intelligence technologies. The brain operates on approximately 230 picowatts (2.3 × 10^-10 watts) per cell, or around 20 watts for the entire brain. Any analog function can be simulated in a digital circuit. For example, music recording has evolved from analog tapes and records to digital MP3 and downloadable music files. Similarly, the BrainChip Akida technology brings the workings of biological neurons into an entirely digital world.
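As a quick sanity check, the two power figures quoted above can be related with simple arithmetic. The sketch below uses only the numbers given in the text; the function and constant names are illustrative assumptions.

```python
WATTS_PER_CELL = 230e-12  # 230 picowatts per cell, as quoted above
BRAIN_WATTS = 20.0        # approximate power of the whole brain, as quoted above


def implied_cell_count(total_watts, watts_per_cell):
    """Number of cells implied by a total power budget and a per-cell power."""
    return total_watts / watts_per_cell


if __name__ == "__main__":
    cells = implied_cell_count(BRAIN_WATTS, WATTS_PER_CELL)
    print(f"{cells:.2e} cells")
```

The result is on the order of tens of billions of cells, which is consistent with the statement that the brain contains billions of neurons.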

The neuromorphic approach offers other advantages beyond low power consumption in processing. Unlike conventional Edge AI hardware, the device does not need a cooling fan. The digital nature of the design ensures its stability, enabling the reuse of training data between devices. Moreover, all devices are identical, eliminating the discrepancies in process properties and the drift encountered in analog technologies. Finally, the neuromorphic core's on-chip learning capabilities can be combined with deep learning-derived features, providing in-the-field reconfigurability.

The unified approach serves as a launching point from perception to cognition, which AI can utilize to accelerate from machine learning to machine intelligence in the quest towards achieving Artificial General Intelligence (AGI).