How to Build, Optimize, and Deploy AI Models at the Edge

This guide outlines the key steps to designing, optimizing, and deploying AI at the edge, followed by a look at how purpose-built edge AI toolchains can support and streamline this process.

40 billion. That is IoT Analytics’ estimate of the global number of connected IoT devices by 2030 [1]. This explosion of edge devices creates unprecedented opportunities for real-time data processing, but it also exposes the limitations of cloud-based AI.

Transmitting massive volumes of data to centralized servers adds latency, introduces security risks, and raises bandwidth costs. Edge computing offers an alternative by running AI locally, without depending on cloud connectivity. But deploying AI at the edge isn’t as simple as moving a cloud model onto a smaller device. It requires thoughtful model design, optimization, and a deployment strategy tailored to edge constraints.

Understanding the Transition: What Changes at the Edge?

Edge environments introduce distinct challenges for deploying AI. Devices often operate with limited compute, memory, and power, especially in remote or mobile settings. Connectivity is another constraint: network access may be intermittent or nonexistent, making low-latency, on-device processing essential. Because local processing avoids a network round trip, it can bring response times down to milliseconds while also improving reliability and privacy.

However, models built for the cloud aren’t designed for these constraints. Cloud-native AI workloads typically rely on large, overparameterized models optimized for high-performance servers. These models consume more memory, power, and bandwidth than edge devices can support. While the cloud offers scalability and centralized control, it sacrifices efficiency and responsiveness, both critical at the edge.

As a result, AI design priorities are shifting. Rather than maximizing accuracy alone, engineers are now focused on balancing performance with energy efficiency, model size, and real-time responsiveness. On-device inferencing eliminates the need to send data to the cloud, which enhances privacy, reduces bandwidth costs, and ensures consistent performance, even offline.

This shift requires AI engineers to rethink how models are built and deployed, optimizing every stage of the pipeline, from architecture and memory usage to inference and deployment strategy, especially when deploying at scale or on low-power devices. To succeed at the edge, models must be compact, fast, and resource-efficient: they need to minimize latency, reduce power draw, and perform reliably in varied and often disconnected environments.

The next section provides a step-by-step guide to moving AI workloads to the edge, from model selection to deployment.

How to Move Your AI Model to the Edge: A 5-Step Process

Step 1: Choose the Right Model

The choice of model is foundational to successful edge AI deployment. Pre-trained models such as MobileNet, YOLOv5n, and ResNet are widely used for their balance of accuracy and efficiency. They offer a practical starting point for general-purpose tasks such as image classification or object detection.

However, edge applications often demand domain-specific intelligence. In these cases, custom models trained on relevant data deliver better performance. Selecting or designing a model architecture that is lightweight and compatible with downstream optimization (like quantization or pruning) will save time and resources later. Engineers should also consider factors like input resolution, number of parameters, and compatibility with edge hardware backends.
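Parameter count and numeric precision together set a lower bound on how much memory a model's weights occupy, which makes them a quick first filter when shortlisting candidates. The sketch below assumes approximate, publicly reported parameter counts and a hypothetical 8 MB weight budget. It ignores activations, runtime buffers, and any compiler-specific packing, so treat the result as a rough screen rather than a guarantee.

```python
# Rough weight-memory screen for candidate edge models.
# Assumptions: parameter counts are approximate public figures, and the 8 MB
# budget is hypothetical. Activations and runtime overhead are not included.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_mb(num_params: int, precision: str) -> float:
    """Storage for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6


def fits_budget(num_params: int, precision: str, budget_mb: float) -> bool:
    """True if the weights fit inside the given memory budget."""
    return weight_footprint_mb(num_params, precision) <= budget_mb


candidates = {
    "MobileNetV2": 3_500_000,
    "YOLOv5n": 1_900_000,
    "ResNet-50": 25_600_000,
}

BUDGET_MB = 8.0  # hypothetical weight-memory budget on the target device

for name, params in candidates.items():
    for precision in ("fp32", "int8"):
        size = weight_footprint_mb(params, precision)
        verdict = "fits" if fits_budget(params, precision, BUDGET_MB) else "too large"
        print(f"{name:12s} {precision}: {size:6.1f} MB ({verdict})")
```

Even this crude arithmetic shows how the 4x reduction from FP32 to INT8 can move a model from "too large" to "fits", which ties model selection directly to the quantization step.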

Step 2: Quantize and Optimize

Edge devices rarely have the luxury of large-scale floating-point computation. Quantization is a key optimization technique that converts model weights and activations from high-precision formats (e.g., FP32) to lower-precision types such as INT8, or even binary representations. This reduces memory usage and speeds up inference, often with only a small loss in accuracy.
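To make the FP32-to-INT8 conversion concrete, here is a minimal NumPy sketch of symmetric, per-tensor post-training quantization of a weight matrix. It is an illustration under simplifying assumptions: real toolchains typically calibrate activation ranges on representative data, often use per-channel scales, and may handle rounding and saturation differently.

```python
import numpy as np


def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor quantization: x is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map INT8 values back to float32, e.g. to measure quantization error."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(256, 256)).astype(np.float32)

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(f"storage: {weights.nbytes} bytes (FP32) -> {q.nbytes} bytes (INT8)")
print(f"max abs error: {np.max(np.abs(weights - restored)):.6f} (scale / 2 = {scale / 2:.6f})")
```

Because the scale is chosen from the largest magnitude, no weight is clipped, so the per-weight rounding error stays within half the scale while storage shrinks by 4x.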

Other model optimization techniques like weight pruning, layer fusion, operator simplification, and architecture-aware compilation can further shrink the model’s footprint and improve inference speed. When done carefully, these techniques enable AI models to meet the stringent compute and thermal limits of edge devices without compromising functionality.
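Weight pruning can be illustrated with unstructured magnitude pruning, which zeroes out the smallest-magnitude weights. This NumPy sketch is one simple variant chosen for clarity, not the method any particular toolchain uses. Note that unstructured sparsity only reduces latency if the runtime or hardware can exploit it, which is why structured pruning (removing whole channels or filters) is often preferred for edge targets.

```python
import numpy as np


def magnitude_prune(w: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * w.size)  # number of weights to remove
    if k == 0:
        return w.copy()
    # The k-th smallest absolute value becomes the pruning threshold.
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) > threshold, w, 0.0).astype(w.dtype)


rng = np.random.default_rng(0)
w = rng.normal(size=(128, 128))
pruned = magnitude_prune(w, 0.5)
print(f"nonzero fraction after pruning: {np.count_nonzero(pruned) / pruned.size:.2f}")
```

In practice, pruning is usually followed by fine-tuning to recover accuracy, and the achieved sparsity should be checked against what the target compiler can actually accelerate.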

Step 3: Compile for the Target Hardware

No model is ready for deployment until it is compiled and formatted for the hardware that will run it. Each edge device, whether it’s powered by a CPU, GPU, NPU, or a dedicated AI accelerator, has its own set of instructions, constraints, and supported operations.

Compiling involves translating your model from a training framework (e.g., TensorFlow, PyTorch) or an interchange format such as ONNX into an optimized, low-level representation that can be executed on your target device. This step often includes further operator fusion, memory layout optimization, and partitioning across heterogeneous cores.
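One common fusion that compilers perform is folding an inference-mode batch normalization layer into the preceding linear or convolution layer, so two operators become one with no change in output. The sketch below shows the arithmetic for a fully connected layer in NumPy; it illustrates the general technique and is not a description of any particular toolchain's internals.

```python
import numpy as np


def fold_batchnorm(W, b, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-mode BatchNorm into the preceding linear layer.

    Linear:    y = W @ x + b, with W of shape (out, in)
    BatchNorm: z = gamma * (y - mean) / sqrt(var + eps) + beta  (per output channel)
    Returns (W_f, b_f) such that z = W_f @ x + b_f.
    """
    s = gamma / np.sqrt(var + eps)  # per-output-channel scale
    W_f = W * s[:, None]            # scale each output row of W
    b_f = (b - mean) * s + beta
    return W_f, b_f


rng = np.random.default_rng(0)
out_ch, in_ch = 8, 16
W = rng.normal(size=(out_ch, in_ch))
b = rng.normal(size=out_ch)
gamma, beta = rng.normal(size=out_ch), rng.normal(size=out_ch)
mean, var = rng.normal(size=out_ch), rng.uniform(0.5, 2.0, size=out_ch)
x = rng.normal(size=in_ch)

# Unfused reference: linear layer followed by BatchNorm.
z_ref = gamma * ((W @ x + b) - mean) / np.sqrt(var + 1e-5) + beta

W_f, b_f = fold_batchnorm(W, b, gamma, beta, mean, var)
z_fused = W_f @ x + b_f
print("max difference:", np.max(np.abs(z_ref - z_fused)))
```

For a convolution with weights of shape (out, in, kh, kw), the same per-channel scale applies as `W * s[:, None, None, None]`.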

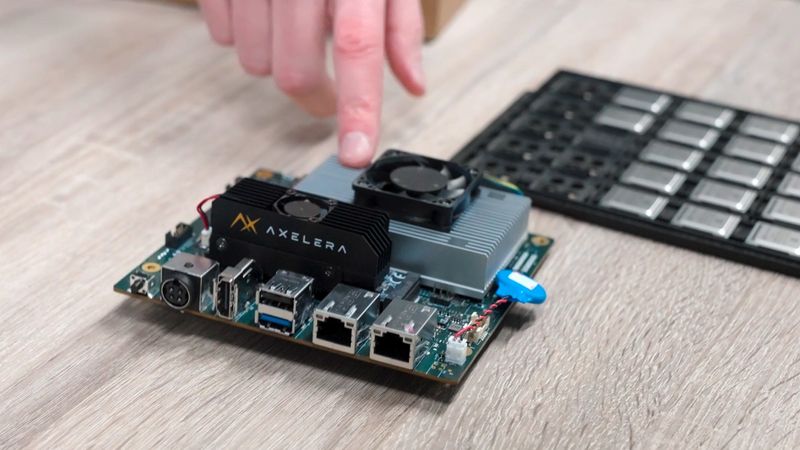

Use toolchains that match your hardware to get the best performance without compatibility issues. For example, developers targeting specialized AI accelerators such as Axelera AI’s Metis AIPU can use the Voyager SDK, which includes a suite of pre-compiled models and also supports compiling your own. Toolchains like Voyager handle compilation and hardware-specific optimization so that the resulting binaries are tuned to the execution environment.

Step 4: Deploy to the Device

With the compiled model in hand, the next step is integration into the device environment. This means more than just uploading the binary. It involves configuring the runtime engine, setting up data pipelines, and validating input/output flows for real-world conditions.

Deployment might also require custom interfaces to camera modules, sensor inputs, or actuators. For example, with hardware like Axelera's Metis AIPU (featuring four self-sufficient and independently programmable AI cores), the compiled model can be deployed directly to embedded systems such as cameras or robots using a dedicated software stack like Voyager, which supports integration across diverse host architectures. Engineers should consider latency and throughput requirements at this stage, ensuring that the model operates within expected performance envelopes when embedded in the application logic.
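Checking a deployment against its performance envelope is largely arithmetic once you have per-stage timings. The sketch below uses hypothetical stage timings for a camera pipeline and compares a 30 FPS frame budget against two execution styles: fully sequential processing, and a pipelined arrangement in which stages work on different frames concurrently, so throughput is bounded by the slowest stage.

```python
# Hypothetical per-frame stage timings in milliseconds; replace with measured values.
stage_ms = {"capture": 4.0, "preprocess": 3.0, "inference": 12.0, "postprocess": 2.5}


def frame_budget_ms(target_fps: float) -> float:
    """Time available per frame to sustain target_fps."""
    return 1000.0 / target_fps


def sequential_fps(stages: dict[str, float]) -> float:
    """Throughput when each frame passes through every stage before the next one starts."""
    return 1000.0 / sum(stages.values())


def pipelined_fps(stages: dict[str, float]) -> float:
    """Throughput when stages run concurrently on different frames (slowest stage limits)."""
    return 1000.0 / max(stages.values())


target_fps = 30.0
latency_ms = sum(stage_ms.values())  # end-to-end latency per frame, ignoring queueing
print(f"budget: {frame_budget_ms(target_fps):.1f} ms/frame, end-to-end latency: {latency_ms:.1f} ms")
print(f"sequential: {sequential_fps(stage_ms):.1f} FPS, pipelined: {pipelined_fps(stage_ms):.1f} FPS")
```

Pipelining raises throughput but does not shorten the time any single frame spends in the system, so latency-critical control loops should be checked against the end-to-end figure, not the FPS number.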

Step 5: Test, Monitor, and Optimize

The final stage, often overlooked, is validating and tuning the model under real deployment conditions. Lab benchmarks are helpful, but only real-world testing exposes how the system actually behaves in terms of latency, throughput, thermal performance, and energy consumption.

Profiling tools can measure inference time, memory usage, and bottlenecks. Developers should test not only for accuracy but also for stability and robustness. It may be necessary to revisit earlier steps (e.g., tweaking quantization settings, simplifying the architecture, or adjusting input resolution) to meet application-specific goals without overloading the hardware.
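Where a toolchain's own profiler isn't available, a simple timing harness can still reveal the latency distribution, including tail latency that averages hide. This is a generic Python sketch: `fake_infer` is a hypothetical stand-in for whatever inference call your runtime exposes, and the warm-up and run counts are arbitrary defaults.

```python
import statistics
import time
from typing import Callable


def profile_latency(infer: Callable[[], object], warmup: int = 10, runs: int = 200) -> dict[str, float]:
    """Call `infer` repeatedly and return latency statistics in milliseconds."""
    if runs < 2:
        raise ValueError("need at least 2 timed runs for percentiles")
    for _ in range(warmup):  # let caches, clocks, and lazy initialization settle
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    # 'inclusive' keeps every percentile within the observed min..max range.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
        "max_ms": max(samples),
    }


def fake_infer() -> None:
    """Hypothetical stand-in workload; replace with your runtime's inference call."""
    sum(i * i for i in range(20_000))


stats = profile_latency(fake_infer, warmup=5, runs=50)
print({name: round(value, 3) for name, value in stats.items()})
```

Running the same harness on the target device under realistic thermal conditions (e.g., after several minutes of sustained load) gives a more honest picture than a short burst on a cool board.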

Use Cases: Edge AI in Action with Axelera

Edge AI plays a critical role in enabling real-time processing, minimizing latency, and enhancing privacy across a range of industries. Below are examples of how edge computing is being applied in practical settings.

Industrial Automation and Visual Inspection

Factories and industrial facilities rely on rapid, accurate visual inspection to detect defects and maintain product quality. Edge AI enables real-time quality control by running vision models directly on factory floor equipment.

Cameras equipped with embedded AI can detect defects in materials or misalignments in assembly lines without sending data to the cloud, which helps reduce latency and maintain data security. For instance, systems powered by accelerators like Axelera’s Metis AIPU can support high-throughput inference for fast-paced inspection tasks in industrial environments.

Data Security in Sensitive Applications

Applications in healthcare, finance, and surveillance often involve sensitive data that cannot be freely transmitted. Keeping inference on the device limits how far that data travels, and proactively securing both training and inference data helps protect privacy. Without robust security measures, AI models become vulnerable to breaches, compliance violations, and erosion of trust. Hardware designed for on-device inference, such as the Metis AIPU, can support secure deployment where minimizing data exposure is essential.

Low-Power and Remote Deployments

Edge AI is increasingly used in scenarios with strict power and connectivity constraints. Remote sensors, environmental monitoring stations, and smart meters require energy-efficient models capable of running continuously without draining power sources. Low-power AI accelerators help support inference on these edge devices by managing workload distribution and reducing idle energy consumption.
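The payoff from shortening active time and lowering idle draw is easiest to see with a duty-cycle estimate. This sketch uses hypothetical power and battery figures and an idealized model in which average power equals the duty cycle times active power plus the remaining fraction times idle power; real batteries lose capacity to temperature, self-discharge, and conversion losses.

```python
def average_power_mw(active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Mean power draw when the device is active for `duty_cycle` (0..1) of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw


def battery_life_hours(capacity_mwh: float, avg_power_mw: float) -> float:
    """Idealized runtime: ignores self-discharge, temperature, and conversion losses."""
    return capacity_mwh / avg_power_mw


# Hypothetical sensor node: 500 mW while running inference, 5 mW idle, 10 Wh battery.
ACTIVE_MW, IDLE_MW, CAPACITY_MWH = 500.0, 5.0, 10_000.0

for duty in (1.0, 0.10, 0.01):
    avg = average_power_mw(ACTIVE_MW, IDLE_MW, duty)
    hours = battery_life_hours(CAPACITY_MWH, avg)
    print(f"duty {duty:5.0%}: {avg:6.2f} mW average -> {hours:7.1f} h")
```

With these assumed figures, cutting the active fraction from 100% to 1% stretches runtime from about a day to over a month, at which point idle power becomes the dominant term.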

Autonomous Systems and Mobility

Real-time perception and decision-making are central to autonomous driving. With edge computing, models for navigation, object detection, lane tracking, and obstacle avoidance run directly on in-vehicle hardware, so the vehicle can react quickly without relying on cloud connectivity [2]. For example, embedded systems using hardware accelerators like the Metis AIPU can handle complex vision workloads to support real-time control in automotive systems.

Remote Patient Monitoring and Healthcare Analytics

Wearables and remote patient monitoring (RPM) devices generate continuous streams of data. Edge AI processes this information locally, so these systems can issue real-time health alerts and support diagnostics without connecting to a centralized server. This speeds up response times and helps safeguard patient privacy.

Across all these domains, edge AI delivers consistent value by reducing dependence on cloud infrastructure, enabling real-time responsiveness, and aligning better with data governance requirements. Platforms like Axelera’s provide one example of how hardware and software are evolving to support these growing needs.

Tips and Best Practices

Choose Models That Quantize Well

Use architectures known to retain accuracy after INT8 quantization (e.g., MobileNet, the YOLO family).

Avoid complex layers or unsupported ops that don’t translate well to edge hardware.

Lightweight models reduce memory use and improve inference speed without major accuracy loss.

Balance Accuracy, Latency, and Power

Slight accuracy reductions can significantly improve speed and energy efficiency.

Prioritize low latency for real-time applications over perfect precision.

Use benchmarking tools to evaluate trade-offs between model accuracy, speed, and energy efficiency on your target hardware.
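Once benchmark numbers are in hand, the trade-off can be made explicit: discard configurations that are worse on every axis, then pick the most accurate one that meets the latency and power budgets. The results below are hypothetical placeholders, and the selection rule (maximize accuracy subject to hard budgets) is one reasonable choice among several.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float    # measured on representative data
    latency_ms: float  # measured per-inference latency on the target
    power_w: float     # measured average power during inference


def dominates(a: Candidate, b: Candidate) -> bool:
    """a is no worse than b on every axis and strictly better on at least one."""
    no_worse = a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms and a.power_w <= b.power_w
    better = a.accuracy > b.accuracy or a.latency_ms < b.latency_ms or a.power_w < b.power_w
    return no_worse and better


def pareto_front(cands: list[Candidate]) -> list[Candidate]:
    """Candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


def pick(cands: list[Candidate], max_latency_ms: float, max_power_w: float) -> Optional[Candidate]:
    """Most accurate candidate within both budgets, or None if none qualifies."""
    feasible = [c for c in cands if c.latency_ms <= max_latency_ms and c.power_w <= max_power_w]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Hypothetical benchmark results; substitute your own measurements.
results = [
    Candidate("fp32-640px", 0.81, 48.0, 6.0),
    Candidate("int8-640px", 0.80, 14.0, 3.1),
    Candidate("int8-416px", 0.77, 7.5, 2.4),
    Candidate("int8-416px-pruned", 0.74, 8.0, 2.6),
]
print("Pareto front:", [c.name for c in pareto_front(results)])
print("Selected:", pick(results, max_latency_ms=10.0, max_power_w=3.0))
```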

Optimize Input Preprocessing

Keep preprocessing simple and consistent with training (e.g., resizing, normalization).

Avoid costly transformations at runtime.

Use tools such as the Voyager™ SDK that allow preprocessing steps to be defined at compile time to ensure consistency and reduce runtime overhead.
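One way to keep preprocessing consistent is to put it in a single function that both the training and deployment code paths import. The sketch below is generic NumPy, not the Voyager SDK's mechanism. It assumes RGB input, uses nearest-neighbor resizing for brevity, and uses the widely published ImageNet mean and standard deviation; substitute the resize method and constants your model was actually trained with.

```python
import numpy as np

# Assumed normalization constants (common ImageNet statistics, RGB order).
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(image_hwc_uint8: np.ndarray, size: tuple[int, int] = (224, 224)) -> np.ndarray:
    """Resize (nearest neighbor), scale to [0, 1], normalize, and convert HWC to NCHW."""
    h, w, _ = image_hwc_uint8.shape
    out_h, out_w = size
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    resized = image_hwc_uint8[rows[:, None], cols[None, :]]
    x = resized.astype(np.float32) / 255.0
    x = (x - MEAN) / STD                     # per-channel normalization
    return np.transpose(x, (2, 0, 1))[None]  # shape (1, C, H, W)


frame = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
tensor = preprocess(frame)
print(tensor.shape, tensor.dtype)
```

Because everything here is a cheap vectorized operation with fixed constants, the same steps are also good candidates for folding into a compiled pipeline rather than running them on the host at inference time.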

Leverage Batch Size and Parallelism

Set batch size = 1 for low-latency, real-time inference.

Use larger batches for throughput-heavy workloads like video frame processing.

Under certain circumstances, larger batches increase hardware utilization, as has been shown for training; beyond some point, however, communication overhead or memory constraints prevent further gains [3].

Tune batch size with SDK tools that support parallel execution, so that it meets your application’s latency and throughput requirements on the target hardware.
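The latency/throughput tension behind these tips can be sketched with a simple cost model: each inference call pays a fixed overhead plus a per-sample cost. The linear model and the timing values below are assumptions for illustration; measure the real curve on your hardware, where memory limits may cut it off sooner. In a live stream, waiting to fill a batch also adds latency that this model does not include.

```python
def batch_latency_ms(batch: int, overhead_ms: float, per_sample_ms: float) -> float:
    """Assumed linear cost model: fixed per-call overhead plus per-sample compute."""
    return overhead_ms + batch * per_sample_ms


def throughput_per_s(batch: int, overhead_ms: float, per_sample_ms: float) -> float:
    """Samples processed per second at a given batch size."""
    return 1000.0 * batch / batch_latency_ms(batch, overhead_ms, per_sample_ms)


def largest_batch_within(budget_ms: float, overhead_ms: float, per_sample_ms: float,
                         max_batch: int = 64) -> int:
    """Largest batch whose call latency fits in budget_ms (0 if even batch=1 misses)."""
    best = 0
    for b in range(1, max_batch + 1):
        if batch_latency_ms(b, overhead_ms, per_sample_ms) <= budget_ms:
            best = b
    return best


OVERHEAD_MS, PER_SAMPLE_MS = 4.0, 1.5  # hypothetical measurements

for b in (1, 4, 16):
    print(f"batch {b:2d}: {batch_latency_ms(b, OVERHEAD_MS, PER_SAMPLE_MS):5.1f} ms, "
          f"{throughput_per_s(b, OVERHEAD_MS, PER_SAMPLE_MS):6.1f} samples/s")
print("largest batch within 20 ms:", largest_batch_within(20.0, OVERHEAD_MS, PER_SAMPLE_MS))
```

Under this model, throughput approaches 1000 / `per_sample_ms` samples per second as the batch grows, so each doubling of the batch buys less while latency keeps rising linearly.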

Use Debugging and Tuning Tools Effectively

Define and manage edge inference pipelines using YAML-based configuration or equivalent runtime orchestration tools.

Profile performance metrics (latency, throughput, memory usage) to identify bottlenecks and optimize deployments.

Debug quantization mismatches and model issues using detailed logs, CLI utilities, and Python APIs.
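A common way to localize a quantization mismatch is to run the float and quantized models on the same inputs, capture each layer's output, and flag layers where the two diverge. How intermediate outputs are captured depends on your toolchain, so in this sketch they are hypothetical dictionaries of NumPy arrays, with one layer deliberately corrupted; the cosine-similarity metric and the 0.99 threshold are assumptions to adjust for your model.

```python
import numpy as np


def compare_outputs(float_out: dict[str, np.ndarray], quant_out: dict[str, np.ndarray],
                    min_cosine: float = 0.99) -> list[tuple[str, float, float]]:
    """Return (layer, cosine similarity, max abs error) for layers below `min_cosine`."""
    flagged = []
    for name, ref in float_out.items():
        r = ref.astype(np.float64).ravel()
        q = quant_out[name].astype(np.float64).ravel()
        denom = np.linalg.norm(r) * np.linalg.norm(q)
        # If either vector is all zeros, count it as a match only when both are.
        cos = float(r @ q / denom) if denom > 0 else float(np.array_equal(r, q))
        max_err = float(np.max(np.abs(r - q)))
        if cos < min_cosine:
            flagged.append((name, cos, max_err))
    return flagged


# Hypothetical captured activations: small noise everywhere, large noise in "conv2".
rng = np.random.default_rng(0)
ref = {name: rng.normal(size=1000) for name in ("conv1", "conv2", "head")}
quant = {name: v + rng.normal(scale=0.01, size=v.shape) for name, v in ref.items()}
quant["conv2"] = ref["conv2"] + rng.normal(scale=0.5, size=1000)  # simulated bad layer

for name, cos, err in compare_outputs(ref, quant):
    print(f"{name}: cosine={cos:.4f}, max abs error={err:.3f}")
```

The first flagged layer in execution order is usually the one to investigate, since errors introduced there propagate into every later layer.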

Design for Edge Constraints from the Start

Don’t adapt cloud models; design specifically for edge from the beginning.

Consider device limits, latency requirements, and deployment context early.

Select models and formats that align with available compute, power, and memory.

Pitfalls to Avoid

| Pitfall | Avoid | Do Instead |
|---|---|---|
| Using incompatible model architectures: Some models may fail to compile with your deployment tool. | Complex or cloud-optimized models with unsupported operations | Start with known-compatible architectures like MobileNet, ResNet, or YOLOv5. |
| Skipping quantization testing: Unvalidated models may suffer unexpected accuracy drops. | Deploying INT8-quantized models without evaluating performance on real or representative data | Use validation tools to test accuracy before and after quantization. |
| Overestimating on-device resources: Edge devices have strict limits on memory, compute, and thermal headroom. | Assuming cloud-scale batch sizes or high-resolution inputs will run efficiently on edge devices | Tune model size, input dimensions, and batch size based on deployment context. |
| Neglecting preprocessing alignment: Input mismatches can break or degrade model performance at inference time. | Using inconsistent image scaling, normalization, or color space (e.g., RGB vs. BGR) between training and deployment | Ensure preprocessing matches the training pipeline and the deployment tool’s expected format and operations. |

Conclusion

Cloud-based inference is no longer sufficient for applications that demand low latency, data privacy, and efficient processing. With the right tools and approach, moving AI workloads to the edge is a practical, scalable strategy.

To support this transition, tools that streamline model optimization, compilation, and deployment are essential. Axelera AI offers both the hardware (Metis AIPU) and the software (Voyager™ SDK) for the path from model optimization to deployment. The Metis AIPU delivers high-performance, low-power inference at the edge, while the Voyager™ SDK simplifies model optimization, deployment, and tuning. Together, these technologies exemplify how purpose-built platforms can help developers meet the practical demands of edge AI at scale.

References

[1] S. Sinha, “Number of connected IoT devices growing 13% to 18.8 billion,” IoT Analytics. [Online]. Available: https://iot-analytics.com/number-connected-iot-devices/

[2] M. N. Ahangar, Q. Z. Ahmed, F. A. Khan, and M. Hafeez, “A Survey of Autonomous Vehicles: Enabling Communication Technologies and Challenges,” Sensors, vol. 21, no. 3, p. 706, Jan. 2021, doi: 10.3390/s21030706.

[3] P. Goyal, P. Dollár, R. B. Girshick, P. Noordhuis, L. Wesolowski, A. Kyrola, A. Tulloch, Y. Jia, and K. He, “Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour,” arXiv preprint arXiv:1706.02677, 2017, doi: 10.48550/arXiv.1706.02677.