Leveraging low-power embedded computer vision to solve urgent sustainability and safety challenges

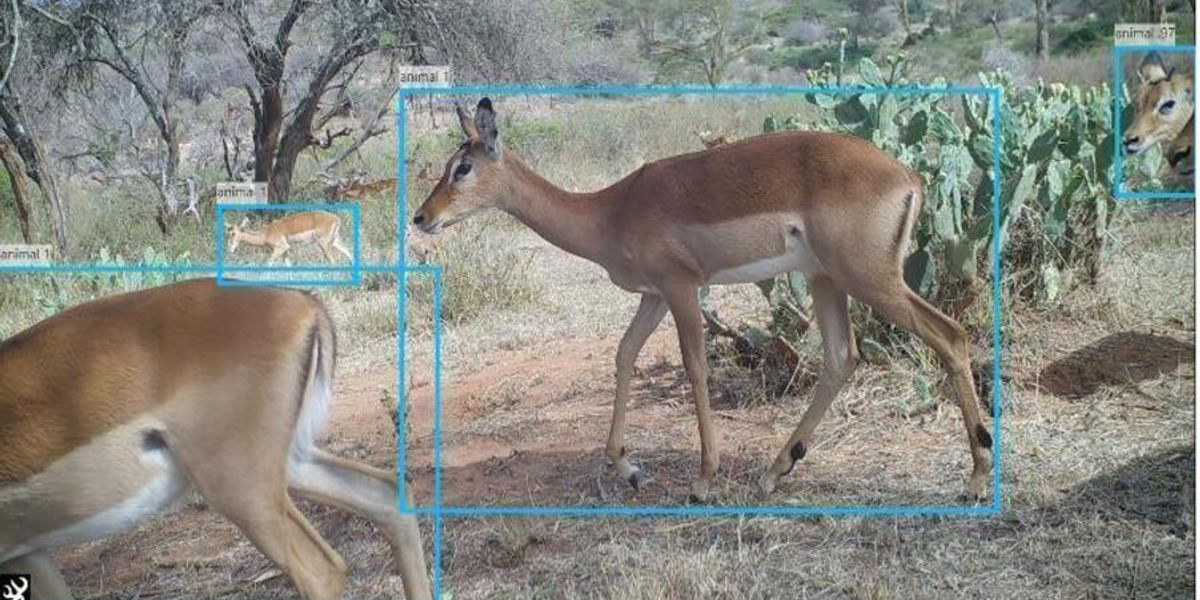

Image credit: Loisaba Conservatory.

Low-power computer vision opens a new opportunity to understand the world in practical terms by collecting and interpreting visual data, even in remote areas.

These technologies have the potential to play a pivotal role in advancing the United Nations' Sustainable Development Goals (SDGs) and can assist in tackling complex societal issues. For instance, in alignment with Goal 15: Life on Land, these systems can employ edge computing and real-time data analytics to monitor environmental variables, aiding in the early detection of invasive species.

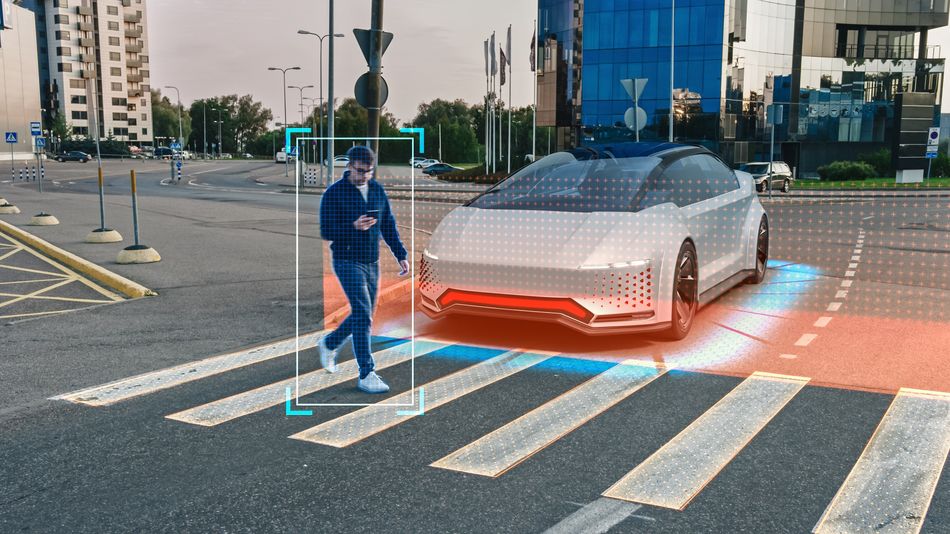

By utilizing low-latency, energy-efficient neural networks, vision systems can also enhance public safety infrastructure at busy city intersections, directly contributing to Goal 11: Sustainable Cities and Communities. In this article, we dig into a few examples of how low-power computer vision is assisting in solving sustainability and safety challenges.

Saving lives with pedestrian tracking

According to data from the State Highway Safety Offices, 6,721 pedestrians were killed on US roads in 2020.[2] That's more than 18 pedestrian fatalities every day.

This tragic loss of life could be addressed by deploying newly available low-cost, low-power, high-performance compute devices with high-speed edge AI inference capabilities. When integrated with a suite of sensors, including low-light cameras, radars, lidars, and thermal imagers, these devices could identify pedestrians, monitor and predict their behavior, and intelligently control traffic and pedestrian signals to optimize for safety.
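As a rough illustration of the signal-control idea, the sketch below extends a pedestrian walk phase while detected pedestrians are still inside the crosswalk. Everything in it is an assumption made for illustration: the `Detection` fields, the confidence threshold, and the timing values are hypothetical and do not come from any real traffic controller or detector API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Detection:
    """One tracked object reported by a (hypothetical) fused sensor pipeline."""
    label: str           # class name, e.g. "pedestrian" or "vehicle"
    confidence: float    # detector score between 0.0 and 1.0
    in_crosswalk: bool   # True if the tracked position lies inside the crosswalk zone


def pedestrians_in_crosswalk(detections: Iterable[Detection],
                             min_confidence: float = 0.6) -> int:
    """Count confident pedestrian detections that are inside the crosswalk."""
    return sum(
        1
        for d in detections
        if d.label == "pedestrian" and d.in_crosswalk and d.confidence >= min_confidence
    )


def walk_phase_seconds(detections: Iterable[Detection],
                       base_seconds: int = 20,
                       extension_seconds: int = 5,
                       max_seconds: int = 40) -> int:
    """Return the walk-phase length, extended per pedestrian up to a hard cap.

    The timing values are illustrative placeholders, not traffic-engineering guidance.
    """
    count = pedestrians_in_crosswalk(detections)
    return min(base_seconds + extension_seconds * count, max_seconds)
```

A real deployment would also need tracking across frames and behavior prediction; this sketch only covers the final decision step.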

Saving the environment with GHG emission reduction

One of the most challenging areas in which to reduce greenhouse gas (GHG) emissions is air travel. Quantifying GHG emissions is essential for airports first to understand and then to minimize their impact while still connecting the world. Low-power computer vision can monitor runway vehicles and aircraft and identify unnecessary idling, addressing low-hanging fruit in GHG emission reduction. Real-time object classification and AI inference provided by a neural processing unit would work with the onboard camera to detect when planes idle for too long or follow inefficient runway idling practices.
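One way to picture the idling check is as a dwell-time monitor over tracked objects. The sketch below assumes an upstream tracker that supplies a stable `track_id` and a ground position in meters for each aircraft or vehicle; the class name, thresholds, and coordinate convention are all hypothetical. Note that being stationary is only a proxy for idling, since vision alone cannot confirm that the engines are running.

```python
import math
from typing import Dict, Tuple


class IdleMonitor:
    """Flags tracks that stay within a small radius for longer than a time limit."""

    def __init__(self, max_idle_seconds: float = 300.0,
                 movement_threshold_m: float = 2.0) -> None:
        self.max_idle_seconds = max_idle_seconds
        self.movement_threshold_m = movement_threshold_m
        # track_id -> (x, y, timestamp) of the last position counted as "moved to"
        self._anchor: Dict[str, Tuple[float, float, float]] = {}

    def update(self, track_id: str, x: float, y: float, timestamp: float) -> bool:
        """Record a new observation; return True if the track has idled too long."""
        anchor = self._anchor.get(track_id)
        if anchor is None:
            # First sighting: start the dwell timer here.
            self._anchor[track_id] = (x, y, timestamp)
            return False

        ax, ay, since = anchor
        if math.hypot(x - ax, y - ay) > self.movement_threshold_m:
            # The object moved beyond the threshold: restart the dwell timer.
            self._anchor[track_id] = (x, y, timestamp)
            return False

        return (timestamp - since) > self.max_idle_seconds
```

Called once per frame for each tracked object, `update` returns `True` only after the object has stayed near its anchor point for longer than `max_idle_seconds`.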

In addition to computer vision, such a device can also be outfitted with an environmental sensor as a reliable means of measuring localized engine emissions. This provides an additional data point to validate computer vision-based inferences. CAN bus connectivity would make it easy to communicate with the aircraft and airport environmental monitoring infrastructure and to relay inferences to the pilot or other relevant personnel.
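To make the relay step concrete, the sketch below packs one inference into an 8-byte payload, the maximum data length of a classic CAN data frame. The field layout, field names, and sensor units are assumptions invented for this example; they are not taken from any aircraft or airport protocol, and actually sending the frame would require a CAN interface library that is not shown here.

```python
import struct

# Hypothetical big-endian payload layout (8 bytes total):
#   uint16 track id | uint8 class id | uint8 confidence (%) |
#   uint16 idle time (s) | uint16 raw environmental sensor reading
PAYLOAD_FORMAT = ">HBBHH"
assert struct.calcsize(PAYLOAD_FORMAT) == 8


def encode_inference(track_id: int, class_id: int, confidence: float,
                     idle_seconds: int, sensor_reading: int) -> bytes:
    """Pack one inference into 8 bytes, clamping values to their field ranges."""
    confidence_pct = max(0, min(100, round(confidence * 100)))
    return struct.pack(
        PAYLOAD_FORMAT,
        track_id,
        class_id,
        confidence_pct,
        min(idle_seconds, 0xFFFF),
        min(sensor_reading, 0xFFFF),
    )


def decode_inference(payload: bytes) -> dict:
    """Unpack a payload produced by encode_inference."""
    track_id, class_id, confidence_pct, idle_seconds, sensor_reading = struct.unpack(
        PAYLOAD_FORMAT, payload
    )
    return {
        "track_id": track_id,
        "class_id": class_id,
        "confidence": confidence_pct / 100,
        "idle_seconds": idle_seconds,
        "sensor_reading": sensor_reading,
    }
```

Carrying the vision result and the sensor reading in the same message lets the receiver cross-check the two without correlating separate streams.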

Maintaining ecological balance with smart traps

A recent study reported that invasive species have cost the global economy an annual mean of US$26.8 billion ($1.62 trillion) since 1970, with a threefold increase every decade. These costs include management costs, such as chemical and biological controls, as well as damage to infrastructure, reduced crop yields, and the loss of viable forestry areas. Additionally, there are indirect economic costs such as the destruction of ecosystems, loss of biodiversity, impacts on tourism, and reduced property values.

The economic costs of invasive species are much lower when funds are invested in prevention and early detection efforts. Once an invasive species spreads, management is exponentially more expensive and less efficient. Traditional invasive species traps do not discriminate and are just as likely to harm endangered and endemic species.

Leveraging low-power computer vision, “smart traps” use rapid object classification capabilities to selectively trap invasive species without harming non-targeted fauna.

Such a device works as follows: when an animal enters, the trap’s AI camera detects that the animal is inside and the trap shuts, capturing it. The camera then takes photos, identifies the animal based on the training set, and notifies the trap’s owner through an app that a new animal has been captured.

The app displays multiple images of the animal along with the AI-identified animal type, and the user may either confirm or override the AI's identification. After 30 seconds, the app starts a 30-minute timer to wait for the owner to act. If the owner takes no action, the smart trap either continues to hold or releases the animal based on the AI inference alone.
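The hold-or-release logic described above can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not the trap's actual firmware: the function name, the `Action` values, and the species labels in the usage note are hypothetical, and the initial 30-second delay before the timer starts is left out for simplicity.

```python
from enum import Enum
from typing import Optional, Set


class Action(Enum):
    WAIT = "wait"        # keep holding while the owner can still respond
    HOLD = "hold"        # animal is a target species: keep it captured
    RELEASE = "release"  # animal is not a target species: let it go


OWNER_TIMEOUT_SECONDS = 30 * 60  # the 30-minute window given to the owner


def decide(ai_label: str,
           target_species: Set[str],
           owner_label: Optional[str] = None,
           seconds_waited: float = 0.0) -> Action:
    """Choose the trap's action from the AI label and any owner response.

    owner_label is None until the owner confirms or overrides the AI label.
    """
    if owner_label is not None:
        label = owner_label          # the owner's answer always wins
    elif seconds_waited >= OWNER_TIMEOUT_SECONDS:
        label = ai_label             # no response in time: fall back to the AI
    else:
        return Action.WAIT
    return Action.HOLD if label in target_species else Action.RELEASE
```

For example, with `target_species={"feral_cat"}`, an AI label of `"native_bird"` and no owner response yields `Action.WAIT` until the 30 minutes elapse and `Action.RELEASE` afterward.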

Towards a sustainable future

The advances in low-power computer vision will boost cutting-edge sustainability and safety solutions for both urban and remote areas. As we move forward, it is clear that this potent intersection of technology and responsible innovation will increasingly shape the sustainable future we envision.

References

[1] https://groupgets-files.s3.amazonaws.com/MistyWest/MistySOM-G2L%20Product%20Brief.pdf

[2] https://www.ghsa.org/resources/Pedestrians21

[3] https://www.renesas.com/us/en/products/microcontrollers-microprocessors/rz-mpus#featured_products

[4] https://groupgets-files.s3.amazonaws.com/MistyWest/MistySOM-V2L%20Product%20Brief.pdf